Edge detection isolates significant boundaries within images by identifying sharp discontinuities in intensity, which helps simplify the visual data and highlight object contours. Feature extraction goes beyond edges by capturing key attributes such as texture, shape, and color patterns, enabling deeper analysis and recognition in robotics applications. Combining edge detection with robust feature extraction enhances object identification accuracy and efficiency in autonomous robotic systems.

Table of Comparison

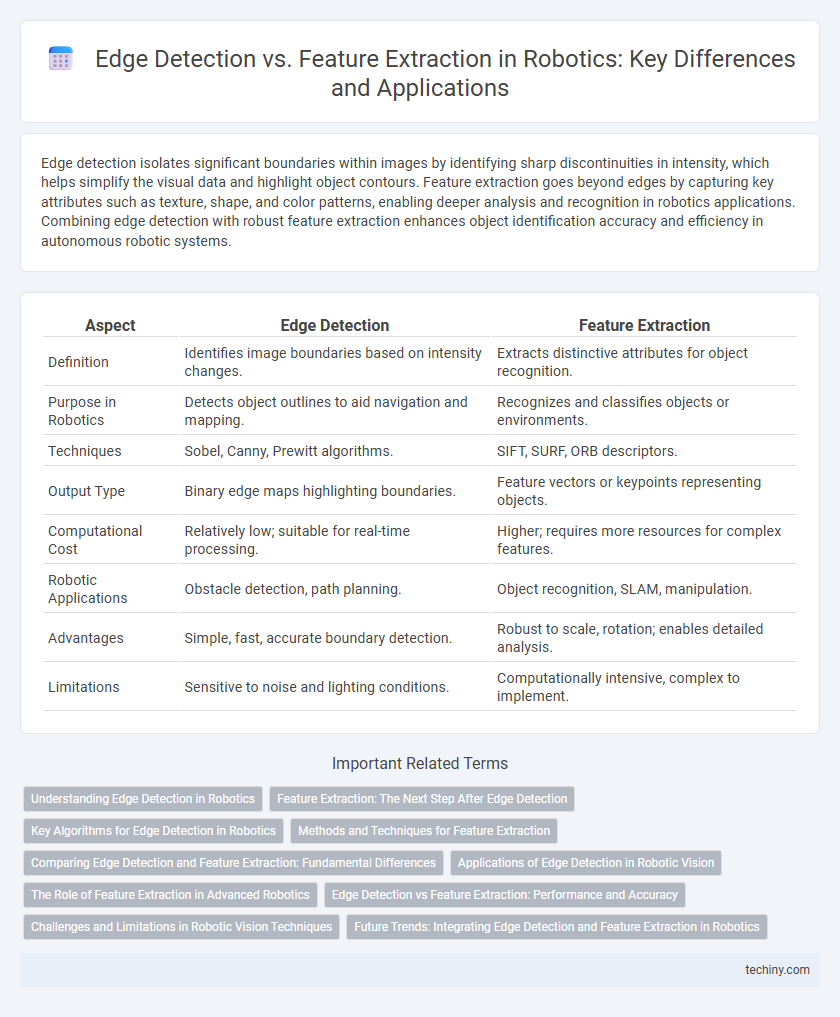

| Aspect | Edge Detection | Feature Extraction |
|---|---|---|
| Definition | Identifies image boundaries based on intensity changes. | Extracts distinctive attributes for object recognition. |
| Purpose in Robotics | Detects object outlines to aid navigation and mapping. | Recognizes and classifies objects or environments. |
| Techniques | Sobel and Prewitt operators; Canny detector. | SIFT, SURF, ORB detectors and descriptors. |
| Output Type | Gradient-magnitude maps, or binary edge maps (e.g., Canny), highlighting boundaries. | Keypoints with feature vectors (descriptors) representing local image structure. |
| Computational Cost | Relatively low; suitable for real-time processing. | Higher; requires more resources for complex features. |
| Robotic Applications | Obstacle detection, path planning. | Object recognition, SLAM, manipulation. |
| Advantages | Simple, fast, well-localized boundary detection. | Robust to scale and rotation changes; enables detailed analysis. |
| Limitations | Sensitive to noise and lighting conditions. | Computationally intensive, complex to implement. |

Understanding Edge Detection in Robotics

Edge detection in robotics involves identifying significant changes in sensor data, such as intensity changes in camera images or depth discontinuities in point clouds, to outline the object boundaries and shapes that are critical for navigation and manipulation. Techniques such as the Canny detector and the Sobel and Laplacian operators let robots detect edges efficiently, supporting real-time environmental understanding and obstacle avoidance. Unlike feature extraction, which focuses on identifying distinctive patterns or key points, edge detection provides the foundational structural information needed for accurate scene interpretation and robotic decision-making.
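
As a minimal illustration of the gradient idea behind the Sobel operator, the sketch below applies the standard 3x3 Sobel kernels to a tiny grayscale image stored as nested Python lists, then thresholds the gradient magnitude into a binary edge map. The test image, the threshold of 100, and the function names `sobel_magnitude` and `edge_map` are illustrative assumptions; a production robot pipeline would use an optimized image-processing library instead of pure Python loops.

```python
import math

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) derivatives.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [ 0,  0,  0],
           [ 1,  2,  1]]


def sobel_magnitude(img):
    """Gradient magnitude of a 2D grayscale image given as a list of lists.

    Kernels are applied as a correlation (not flipped); flipping would only
    change the sign of gx and gy, not the magnitude. Border pixels stay 0.
    """
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for j in range(3):
                for i in range(3):
                    p = img[y + j - 1][x + i - 1]
                    gx += SOBEL_X[j][i] * p
                    gy += SOBEL_Y[j][i] * p
            mag[y][x] = math.hypot(gx, gy)
    return mag


def edge_map(img, threshold):
    """Binary edge map: 1 where the gradient magnitude exceeds `threshold`."""
    return [[1 if m > threshold else 0 for m in row]
            for row in sobel_magnitude(img)]


# Hypothetical 5x6 test image: dark left half, bright right half (a vertical step).
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
for row in edge_map(image, threshold=100):
    print(row)  # interior rows print as [0, 0, 1, 1, 0, 0]
```

The edge comes out two pixels wide because both columns next to the step see a strong gradient; thinning such responses is the job of non-maximum suppression in the Canny detector.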

Feature Extraction: The Next Step After Edge Detection

Feature extraction builds on edge detection by identifying and quantifying meaningful patterns in robotic vision data, such as shapes, textures, and specific object characteristics, and it often reuses the same intensity gradients that edge detectors compute. This process improves a robot's ability to interpret complex environments and supports higher-level tasks like object recognition, navigation, and manipulation. Algorithms such as the Scale-Invariant Feature Transform (SIFT) and Speeded-Up Robust Features (SURF) produce features that remain stable under changes in scale and rotation, which improves the accuracy and robustness of feature extraction in dynamic robotic applications.
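
To make the step from edges to features concrete, here is a sketch of a simple gradient-orientation histogram, in the spirit of HOG and of the orientation histograms inside the SIFT descriptor, computed from the same kind of intensity gradients an edge detector uses. It is not SIFT or SURF: there is no keypoint detection, scale space, or spatial grid. The 8-bin layout, central-difference gradients, and function names are assumptions chosen for brevity.

```python
import math


def gradients(img):
    """Central-difference gradients (gx, gy) for the interior pixels of a 2D list."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y][x + 1] - img[y][x - 1]) / 2.0
            gy = (img[y + 1][x] - img[y - 1][x]) / 2.0
            out.append((gx, gy))
    return out


def orientation_histogram(img, bins=8):
    """Magnitude-weighted histogram of gradient orientations, L2-normalized.

    The result is a fixed-length feature vector describing which edge
    directions dominate the patch, independent of where they occur.
    """
    hist = [0.0] * bins
    for gx, gy in gradients(img):
        mag = math.hypot(gx, gy)
        if mag == 0:
            continue  # flat regions carry no orientation information
        angle = math.atan2(gy, gx) % (2 * math.pi)  # map to [0, 2*pi)
        idx = int(angle / (2 * math.pi) * bins) % bins
        hist[idx] += mag
    norm = math.sqrt(sum(v * v for v in hist))
    return [v / norm for v in hist] if norm > 0 else hist


# Hypothetical patch containing a vertical step edge (gradient points along +x).
patch = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
print([round(v, 3) for v in orientation_histogram(patch)])
# [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

Because the histogram is normalized, it summarizes the dominant edge directions of the patch rather than listing individual edge pixels, which is what makes it usable for comparing patches.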

Key Algorithms for Edge Detection in Robotics

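Key algorithms for edge detection in robotics include the Sobel operator, the Laplacian of Gaussian, and the Canny edge detector. The Sobel operator approximates the first derivative of intensity in the horizontal and vertical directions; the Laplacian of Gaussian smooths the image with a Gaussian and marks edges at zero crossings of the second derivative; and Canny combines Gaussian smoothing, gradient computation, non-maximum suppression, and hysteresis thresholding to produce thin, connected edges. These algorithms let robots perceive object outlines and environmental changes crucial for navigation, manipulation, and object recognition, and the resulting edge maps provide well-localized spatial information that supports autonomous decision-making and obstacle avoidance.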
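
The Laplacian of Gaussian idea can be seen on a single 1D intensity profile, such as one image row. The sketch below approximates the Gaussian with a small binomial kernel, applies the discrete second derivative [1, -2, 1], and reports where the response changes sign. This is a simplified 1D illustration under those assumptions, not a full 2D LoG detector, which would also threshold the strength of each zero crossing to suppress noise; the function names are hypothetical.

```python
def convolve1d(signal, kernel):
    """'Valid' 1D convolution (no padding) with an odd-length kernel."""
    k = len(kernel)
    flipped = kernel[::-1]
    return [sum(signal[i + j] * flipped[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def log_zero_crossings(signal):
    """Approximate Laplacian-of-Gaussian edges in a 1D intensity profile.

    1. Smooth with a binomial kernel (a small discrete Gaussian).
    2. Take the discrete second derivative [1, -2, 1].
    3. Report where the second derivative changes sign.
    Each returned index i means the edge lies between samples i and i + 1.
    """
    smoothed = convolve1d(signal, [0.25, 0.5, 0.25])
    second = convolve1d(smoothed, [1, -2, 1])
    offset = 2  # two 'valid' 3-tap convolutions shift indices by 2 in total
    crossings = []
    prev_i, prev_v = None, 0.0
    for i, v in enumerate(second):
        if v == 0:
            continue  # skip exact zeros so a +, 0, - pattern still counts
        if prev_v * v < 0:
            crossings.append(prev_i + offset)
        prev_i, prev_v = i, v
    return crossings


# Hypothetical intensity profile with a single step between samples 3 and 4.
profile = [0, 0, 0, 0, 10, 10, 10, 10]
print(log_zero_crossings(profile))  # [3]
```

Locating edges at sign changes, rather than at a gradient threshold, is what gives LoG-based detectors their single, well-localized response per step.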

Methods and Techniques for Feature Extraction

Feature extraction methods in robotics commonly use techniques such as the Scale-Invariant Feature Transform (SIFT), Speeded-Up Robust Features (SURF), and Oriented FAST and Rotated BRIEF (ORB) to detect and describe key points within images. These methods let robots recognize objects and environments by extracting features that stay stable across varying scales and rotations. Edge detection, while essential for outlining object boundaries, mainly serves as a preliminary or complementary step: keypoint detectors such as SIFT and ORB do not consume an edge map directly, but they rely on the same underlying intensity structure, and SIFT explicitly discards candidate keypoints that lie along edges because they are poorly localized.
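
ORB builds on the FAST keypoint detector. The sketch below implements the FAST segment test on the 16-pixel circle of radius 3: a pixel is a keypoint if at least `n` contiguous circle pixels are all brighter than it by more than a threshold `t`, or all darker. The defaults `t=20` and `n=9` are common illustrative choices, and the synthetic test image is hypothetical; ORB adds an image pyramid, keypoint ranking, orientation estimation, and rotated BRIEF descriptors on top of this test, none of which are shown.

```python
# Offsets (dx, dy) of the 16 pixels on a Bresenham circle of radius 3, in order.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img, x, y, t=20, n=9):
    """FAST segment test at (x, y); the caller keeps (x, y) >= 3 px from the border."""
    p = img[y][x]
    # Label each circle pixel: +1 brighter than p + t, -1 darker than p - t, else 0.
    labels = []
    for dx, dy in CIRCLE:
        q = img[y + dy][x + dx]
        labels.append(1 if q > p + t else (-1 if q < p - t else 0))
    # Scan the circle twice so runs that wrap past index 0 are counted.
    for target in (1, -1):
        run = 0
        for lab in labels + labels:
            run = run + 1 if lab == target else 0
            if run >= n:
                return True
    return False


def detect_fast(img, t=20, n=9):
    """Return (x, y) of every FAST keypoint at least 3 pixels from the border."""
    h, w = len(img), len(img[0])
    return [(x, y) for y in range(3, h - 3) for x in range(3, w - 3)
            if is_fast_corner(img, x, y, t, n)]


# Hypothetical 12x12 image: a bright square filling the lower-right region.
square = [[200 if (x >= 5 and y >= 5) else 0 for x in range(12)] for y in range(12)]
print(is_fast_corner(square, 5, 5))  # True: the square's corner
print(is_fast_corner(square, 8, 5))  # False: a point on its straight top edge
```

The edge point fails because only 7 contiguous circle pixels differ from it, which is how the segment test responds to corners while ignoring straight edges.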

Comparing Edge Detection and Feature Extraction: Fundamental Differences

Edge detection identifies boundaries between regions in images by detecting sharp changes in intensity, serving as a low-level image processing technique crucial for segmenting objects. Feature extraction captures more complex image attributes like shapes, textures, or key points, enabling higher-level interpretation and matching tasks in robotic vision systems. The fundamental difference lies in edge detection emphasizing pixel-level intensity gradients, while feature extraction synthesizes these signals into distinctive descriptors for object recognition and scene understanding.
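
Once features are reduced to descriptors, recognition becomes a nearest-neighbor search in descriptor space rather than a per-pixel test. A minimal sketch, assuming binary descriptors (as produced by ORB or BRIEF) stored as Python integers and compared by Hamming distance, with a ratio test of the kind Lowe proposed for SIFT to discard ambiguous matches. The 16-bit descriptor values and the 0.8 ratio are made-up illustration values; real ORB descriptors are 256 bits long.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match(query, train, ratio=0.8):
    """Match each query descriptor to its nearest train descriptor.

    A match is kept only if the best distance is clearly smaller than the
    second-best (ratio test), which rejects ambiguous correspondences.
    Returns a list of (query_index, train_index, distance) tuples.
    """
    matches = []
    for qi, q in enumerate(query):
        dists = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        if len(dists) < 2:
            continue  # the ratio test needs at least two candidates
        (d1, best), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:
            matches.append((qi, best, d1))
    return matches


# Hypothetical 16-bit descriptors for a stored map and a new camera frame.
train = [0b1111000011110000, 0b0000111100001111, 0b1010101010101010]
query = [0b1111000011110001, 0b1100110011001100]
print(match(query, train))  # [(0, 0, 1)]
```

The second query descriptor is equally far (8 bits) from every stored descriptor, so the ratio test rejects it instead of guessing.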

Applications of Edge Detection in Robotic Vision

Edge detection plays a crucial role in robotic vision by enabling robots to identify object boundaries and shapes for navigation and manipulation tasks. Applications include obstacle avoidance, object recognition, and environment mapping, where precise edge contours improve scene understanding and decision-making accuracy. Because it is computationally inexpensive, edge detection fits within the tight real-time budgets of autonomous robots operating in dynamic environments.

The Role of Feature Extraction in Advanced Robotics

Feature extraction plays a critical role in advanced robotics by transforming raw sensor data into meaningful representations that let robots understand and navigate their environments effectively. Unlike basic edge detection, which only highlights object boundaries, feature extraction captures complex patterns such as textures, shapes, and spatial relations that are crucial for object recognition, localization, and decision-making in real-time robotic systems. Robust feature extraction algorithms improve robot autonomy, increase perception accuracy, and support tasks like manipulation, path planning, and human-robot interaction in dynamic environments.

Edge Detection vs Feature Extraction: Performance and Accuracy

Edge detection in robotics captures sharp intensity changes, enabling precise object boundary identification for navigation and manipulation tasks. Feature extraction goes beyond edges by identifying distinctive image patterns such as textures, corners, and shapes, which strengthens object recognition and scene understanding. In terms of performance, edge detection is faster and has lower computational overhead, while feature extraction offers higher accuracy and robustness in complex environments, so the right choice depends on the requirements of the specific robotic application.

Challenges and Limitations in Robotic Vision Techniques

Edge detection in robotic vision is sensitive to noise and lighting variations, which makes boundary identification unreliable in complex environments. Feature extraction must cope with changes in scale, rotation, and viewpoint; detectors that lack the corresponding invariance miss or mislocalize the key points that object recognition depends on. Both techniques face computational constraints on embedded robotic systems, which limits the real-time processing that autonomous navigation and manipulation require.
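
The noise sensitivity of edge detection, and the usual remedy, can be shown on a 1D scan line. The sketch compares thresholded forward differences on a raw signal against the same test after [1, 2, 1] / 4 binomial smoothing. The step edge, the periodic noise pattern standing in for sensor noise, and the threshold of 5 are hypothetical choices made for the illustration.

```python
def smooth(signal):
    """Binomial [1, 2, 1] / 4 smoothing, a small discrete Gaussian ('valid' mode)."""
    return [0.25 * signal[i - 1] + 0.5 * signal[i] + 0.25 * signal[i + 1]
            for i in range(1, len(signal) - 1)]


def strong_gradients(signal, threshold):
    """Indices i where the forward difference |s[i+1] - s[i]| exceeds threshold."""
    return [i for i in range(len(signal) - 1)
            if abs(signal[i + 1] - signal[i]) > threshold]


# Hypothetical scan line: one step edge (0 -> 100) plus a small, regular
# noise pattern standing in for real sensor noise.
clean = [0] * 8 + [100] * 8
noise = [(6, 0, -6, 0)[i % 4] for i in range(16)]
signal = [c + n for c, n in zip(clean, noise)]

raw = strong_gradients(signal, threshold=5)
smoothed = strong_gradients(smooth(signal), threshold=5)
print(len(raw), len(smoothed))  # 15 3
```

On the raw signal all 15 differences exceed the threshold, so the noise is indistinguishable from the edge; after smoothing only the 3 differences around the step remain. The cost is that the edge is now spread over several samples, a trade-off between noise suppression and localization that detectors such as Canny address with non-maximum suppression.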

Future Trends: Integrating Edge Detection and Feature Extraction in Robotics

Future trends in robotics emphasize integrating edge detection and feature extraction to improve perception accuracy in autonomous systems. Deep learning algorithms optimized for on-device (edge computing) hardware enable real-time processing of visual data on the robot itself, reducing latency and computational load. This convergence supports improved object recognition, environment mapping, and decision-making in complex robotic applications.

Edge detection vs feature extraction Infographic

techiny.com
