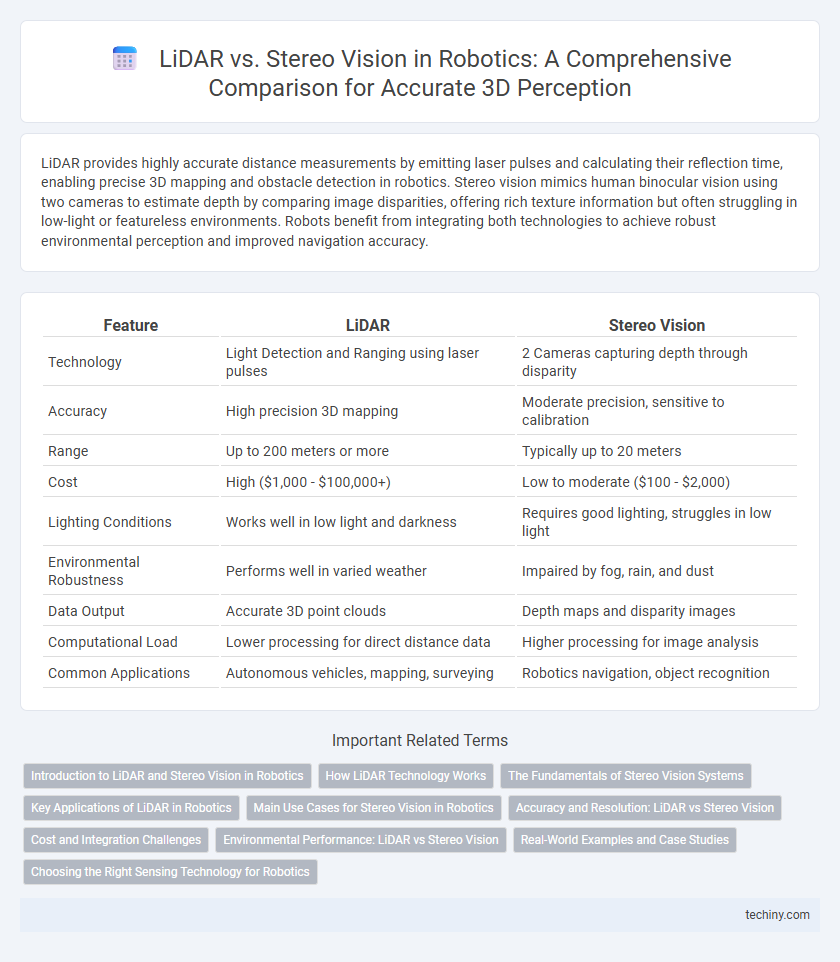

LiDAR provides highly accurate distance measurements by emitting laser pulses and measuring their round-trip time, enabling precise 3D mapping and obstacle detection in robotics. Stereo vision mimics human binocular vision, using two cameras to estimate depth from the disparity between their images. It offers rich texture information but often struggles in low-light or featureless environments. Robots benefit from integrating both technologies to achieve robust environmental perception and improved navigation accuracy.

Table of Comparison

| Feature | LiDAR | Stereo Vision |
|---|---|---|
| Technology | Light Detection and Ranging using laser pulses | Two cameras estimating depth from image disparity |
| Accuracy | High-precision 3D mapping | Moderate precision, sensitive to calibration |
| Range | Up to 200 meters or more | Typically up to 20 meters |
| Cost | High ($1,000 - $100,000+) | Low to moderate ($100 - $2,000) |
| Lighting Conditions | Works well in low light and darkness | Requires good lighting, struggles in low light |
| Environmental Robustness | Independent of ambient light, but degraded by heavy fog, rain, and dust | Impaired by fog, rain, and dust |
| Data Output | Accurate 3D point clouds | Depth maps and disparity images |
| Computational Load | Lower, since distances are measured directly | Higher, since depth must be computed from image pairs |
| Common Applications | Autonomous vehicles, mapping, surveying | Robotics navigation, object recognition |

Introduction to LiDAR and Stereo Vision in Robotics

LiDAR (Light Detection and Ranging) uses laser pulses to create precise 3D maps of environments, enabling accurate distance measurement and object detection in robotics. Stereo vision employs two cameras to mimic human depth perception by comparing images from different angles, facilitating depth estimation and obstacle recognition. Both technologies are essential for navigation and environment understanding, with LiDAR offering higher accuracy and stereo vision providing cost-effective spatial information.

How LiDAR Technology Works

LiDAR technology operates by emitting laser pulses and measuring the time it takes for the light to reflect back from objects, enabling precise distance calculations through Time-of-Flight (ToF) measurements. This method generates highly accurate 3D point clouds, crucial for mapping and obstacle detection in autonomous robots and vehicles. Unlike stereo vision, which relies on image disparity, LiDAR provides direct depth information, so its performance is largely independent of ambient lighting, although heavy rain, fog, or dust can still weaken the returned signal.
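The Time-of-Flight relationship described above reduces to one line of arithmetic: distance equals the speed of light multiplied by the round-trip time, divided by two. The following is a minimal sketch of that calculation only; the function name `tof_distance_m` is a hypothetical helper for illustration, and a real sensor would also handle timing noise, multiple returns, and calibration offsets.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Convert a round-trip pulse time into a one-way distance in metres.

    Hypothetical helper for illustration; not taken from any sensor SDK.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # A return after about 667 nanoseconds corresponds to roughly 100 m.
    print(f"{tof_distance_m(667e-9):.2f} m")
```

The tiny time scale is the point of the example: resolving one centimeter of range means resolving a round-trip time of well under a nanosecond, which is one reason LiDAR hardware is comparatively costly.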

The Fundamentals of Stereo Vision Systems

Stereo vision systems utilize two cameras positioned at a fixed distance apart to emulate human binocular vision, generating depth maps by triangulating corresponding points in each image. Unlike LiDAR, which actively emits laser pulses to measure distance, stereo vision depends on identifying matching features within passive image data, making it sensitive to lighting and texture conditions. Fundamental challenges include precise camera calibration, robust feature matching algorithms, and real-time processing to accurately reconstruct 3D environments for robotic navigation.
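For a calibrated and rectified camera pair, the triangulation mentioned above is commonly written as Z = f * B / d, where f is the focal length in pixels, B is the baseline between the cameras, and d is the disparity in pixels. The sketch below assumes that idealized setup; the function name and the example numbers are illustrative assumptions, not values from a specific camera.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a matched point for a rectified stereo pair: Z = f * B / d.

    Hypothetical helper; assumes the images are already rectified and the
    disparity comes from a correct left/right feature match.
    """
    if disparity_px <= 0:
        # Zero disparity means the point is at infinity or the match failed.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Assumed example: 700 px focal length, 12 cm baseline, 42 px disparity.
    # This works out to a depth of about 2 m.
    print(f"{stereo_depth_m(700.0, 0.12, 42.0):.2f} m")
```

Because disparity sits in the denominator, distant points produce very small disparities, which is why a wider baseline or a longer focal length is needed to extend the useful range.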

Key Applications of LiDAR in Robotics

LiDAR technology is crucial in robotics for precise 3D mapping and real-time environmental perception, enabling autonomous navigation and obstacle avoidance in complex environments. Its high-resolution distance measurements support applications like autonomous vehicles, robotic vacuum cleaners, and drone-based surveying with exceptional accuracy. LiDAR also enhances SLAM (Simultaneous Localization and Mapping) systems by offering robust spatial data that stereo vision systems may struggle to provide under varying light conditions.
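As a rough illustration of how raw LiDAR measurements become map data, the sketch below converts a single planar scan (a list of ranges taken at evenly spaced angles) into 2D Cartesian points in the sensor frame. The function name, the parameter names, and the scan layout are assumptions made for this example; real drivers and SLAM packages define their own message formats.

```python
import math
from typing import List, Sequence, Tuple


def scan_to_points(
    ranges_m: Sequence[float],
    angle_min_rad: float,
    angle_increment_rad: float,
    max_range_m: float,
) -> List[Tuple[float, float]]:
    """Convert a planar scan from polar to Cartesian coordinates.

    Hypothetical helper: beam i is assumed to point at
    angle_min_rad + i * angle_increment_rad in the sensor frame.
    """
    points = []
    for i, r in enumerate(ranges_m):
        # Skip invalid returns (inf/NaN), non-positive values, and
        # readings beyond the range the caller trusts.
        if not math.isfinite(r) or r <= 0.0 or r > max_range_m:
            continue
        theta = angle_min_rad + i * angle_increment_rad
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    # Three beams spaced 90 degrees apart; the middle one has no return.
    scan = [1.0, float("inf"), 2.0]
    for x, y in scan_to_points(scan, 0.0, math.pi / 2, max_range_m=10.0):
        print(f"x={x:.2f} m, y={y:.2f} m")
```

A mapping or SLAM pipeline would then transform these sensor-frame points by the robot's estimated pose before adding them to the map.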

Main Use Cases for Stereo Vision in Robotics

Stereo vision in robotics is primarily used for real-time depth perception and 3D mapping in environments where precise spatial awareness is critical. It excels in applications such as autonomous navigation, obstacle detection, and robotic manipulation within dynamic or unstructured settings. This technology leverages dual cameras to generate disparity maps, enabling robots to interpret complex scenes and perform tasks requiring accurate distance measurements without the high cost of LiDAR systems.

Accuracy and Resolution: LiDAR vs Stereo Vision

LiDAR sensors provide superior range accuracy by emitting laser pulses to create precise 3D point clouds, often achieving centimeter-level precision that stays roughly constant over the working range, which is crucial for robotic navigation. Stereo vision relies on dual cameras to estimate depth through disparity; its depth error grows roughly with the square of the distance and also depends on lighting conditions and scene texture, resulting in lower accuracy than LiDAR at longer ranges. The depth data from LiDAR enhances obstacle detection and mapping in robotics, outperforming stereo vision in complex or low-light environments.
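A common first-order approximation for stereo depth uncertainty is dZ ≈ Z² / (f * B) * dd, where dd is the disparity matching error in pixels. The sketch below evaluates that approximation; the function name, the default matching error of 0.25 px, and the camera parameters are assumptions chosen only to show the trend.

```python
def stereo_depth_error_m(
    depth_m: float,
    focal_length_px: float,
    baseline_m: float,
    disparity_error_px: float = 0.25,
) -> float:
    """First-order depth uncertainty for a rectified stereo pair.

    Hypothetical helper implementing dZ ~= Z^2 / (f * B) * dd.
    The 0.25 px default is an assumed matching error, not a measured one.
    """
    if focal_length_px <= 0 or baseline_m <= 0:
        raise ValueError("focal length and baseline must be positive")
    return depth_m ** 2 * disparity_error_px / (focal_length_px * baseline_m)


if __name__ == "__main__":
    # Assumed camera: 700 px focal length, 12 cm baseline.
    for z in (2.0, 5.0, 10.0, 20.0):
        err = stereo_depth_error_m(z, 700.0, 0.12)
        print(f"depth {z:5.1f} m -> error ~{err:.3f} m")
```

With these assumed parameters the estimated error is about 1.2 cm at 2 m but about 1.2 m at 20 m: a tenfold increase in distance gives a hundredfold increase in error.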

Cost and Integration Challenges

LiDAR systems generally incur higher costs due to expensive sensors and complex hardware requirements, making them less accessible for budget-constrained robotics projects. Stereo vision leverages dual cameras, which are more affordable and offer easier integration with existing computer vision frameworks but may struggle with depth accuracy in low-texture environments. Integration challenges for LiDAR include extensive calibration and power consumption, while stereo vision requires sophisticated algorithms for disparity estimation and can be sensitive to lighting conditions.

Environmental Performance: LiDAR vs Stereo Vision

LiDAR sensors provide superior environmental performance in robotics by offering accurate, high-resolution 3D mapping regardless of lighting conditions, which enhances obstacle detection and navigation. Stereo vision relies on image disparity and can struggle in low-light, low-texture, or highly reflective environments, leading to less reliable depth perception. Combining LiDAR with stereo vision can optimize environmental sensing by leveraging LiDAR's precision and stereo vision's contextual color information.
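One simple way to combine the two sensors is to project each LiDAR point into a camera image and read the color at that pixel. The sketch below shows only the projection step using a pinhole camera model. It assumes the point has already been transformed into the camera frame (x right, y down, z forward) and that lens distortion has been removed; the function and parameter names are hypothetical.

```python
from typing import Optional, Tuple


def project_to_pixel(
    point_cam_m: Tuple[float, float, float],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    width: int,
    height: int,
) -> Optional[Tuple[int, int]]:
    """Project a 3D point in the camera frame to integer pixel coordinates.

    Hypothetical helper using the pinhole model:
        u = fx * x / z + cx,  v = fy * y / z + cy
    Returns (column, row), or None if the point is not visible.
    """
    x, y, z = point_cam_m
    if z <= 0.0:
        # Points at or behind the camera plane cannot be imaged.
        return None
    col = int(round(fx * x / z + cx))
    row = int(round(fy * y / z + cy))
    if 0 <= col < width and 0 <= row < height:
        return col, row
    return None


if __name__ == "__main__":
    # Assumed intrinsics for a 640x480 image.
    pixel = project_to_pixel((0.5, -0.2, 5.0), 700.0, 700.0, 320.0, 240.0, 640, 480)
    print(pixel)
```

The returned pixel can then be used to look up a color or a semantic label for the LiDAR point, which is the kind of contextual information the camera contributes.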

Real-World Examples and Case Studies

LiDAR technology, used in autonomous vehicles such as Waymo's, provides precise 3D mapping and effective obstacle detection in diverse lighting conditions, where stereo vision systems that rely on image disparity are less reliable. Stereo vision, used for obstacle sensing on drones such as DJI models, offers cost-effective depth perception but struggles with textureless environments and low light, which limits its real-world applicability compared to LiDAR. In urban mapping and other complex environments, LiDAR's accuracy and reliability generally make it the preferred choice for high-stakes industrial and research robotics applications.

Choosing the Right Sensing Technology for Robotics

LiDAR offers high-precision distance measurements and reliable performance in low-light or complex environments, making it ideal for accurate 3D mapping and obstacle detection in robotics. Stereo vision provides rich color information and cost-effective depth perception by mimicking human binocular vision, making it suitable for applications requiring texture recognition, at the cost of extra processing to compute disparity. Selecting the right sensing technology depends on environmental conditions, required accuracy, computational resources, and budget constraints.

techiny.com