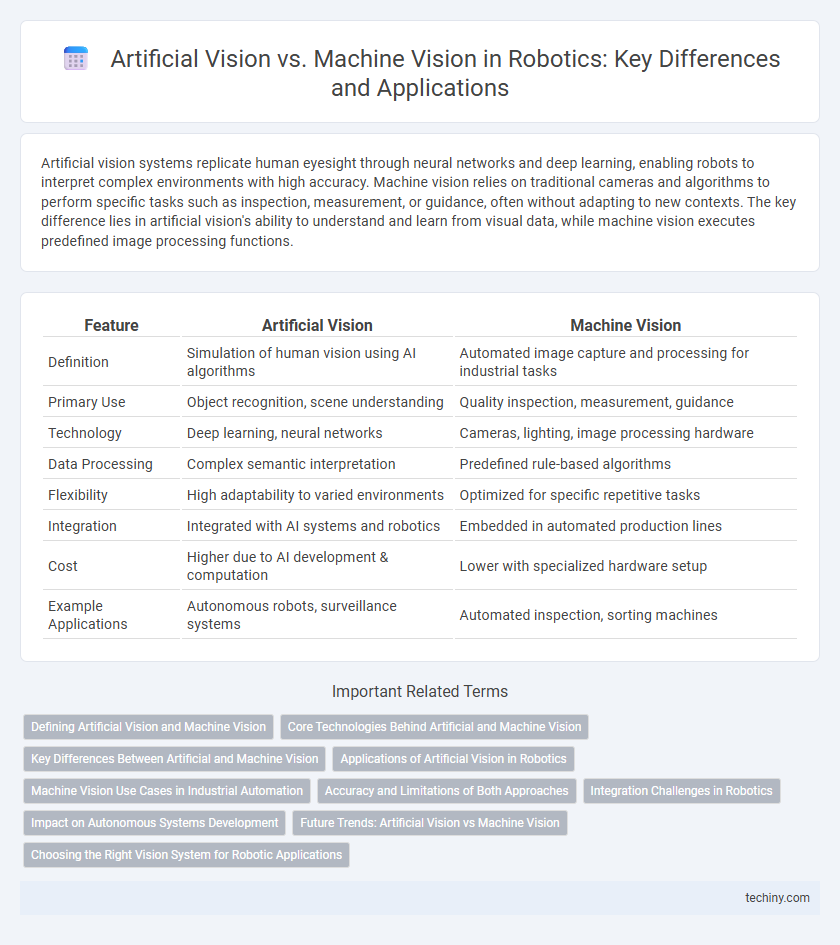

Artificial vision systems use neural networks and deep learning to approximate human sight, enabling robots to interpret complex, changing environments. Machine vision relies on conventional cameras and image processing algorithms to perform specific tasks such as inspection, measurement, or guidance, and typically does not adapt to new contexts. The key difference is that artificial vision learns from visual data and interprets it, while machine vision executes predefined image processing functions.

Table of Comparison

| Feature | Artificial Vision | Machine Vision |
|---|---|---|
| Definition | Simulation of human vision using AI algorithms | Automated image capture and processing for industrial tasks |
| Primary Use | Object recognition, scene understanding | Quality inspection, measurement, guidance |
| Technology | Deep learning, neural networks | Cameras, lighting, image processing hardware |
| Data Processing | Complex semantic interpretation | Predefined rule-based algorithms |
| Flexibility | High adaptability to varied environments | Optimized for specific repetitive tasks |
| Integration | Integrated with AI systems and robotics | Embedded in automated production lines |
| Cost | Higher due to AI development & computation | Lower with specialized hardware setup |
| Example Applications | Autonomous robots, surveillance systems | Automated inspection, sorting machines |

Defining Artificial Vision and Machine Vision

Artificial vision refers to the capability of robots to interpret and understand visual information using AI-driven algorithms that mimic human sight, enabling complex pattern recognition and decision-making. Machine vision involves the use of cameras and specialized hardware combined with software to capture and process images for tasks such as inspection, guidance, and measurement in automated systems. Both technologies play critical roles in robotics, with artificial vision emphasizing cognitive interpretation and machine vision focusing on precise image acquisition and analysis.

Core Technologies Behind Artificial and Machine Vision

Artificial vision systems leverage deep learning algorithms and neural networks to interpret complex visual data with cognitive capabilities similar to human vision, enabling advanced object recognition and scene understanding. Machine vision relies on traditional image processing techniques, including edge detection, pattern recognition, and structured light, combined with industrial cameras and sensors to perform precise inspection and measurement tasks. Core technologies in artificial vision emphasize AI-driven data interpretation and adaptive learning, while machine vision prioritizes hardware integration and deterministic image analysis for real-time industrial automation.
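To make the "deterministic image analysis" side concrete, here is a minimal sketch of edge detection, one of the traditional techniques named above. It applies the standard 3x3 Sobel kernels to a tiny grayscale grid in pure Python. The function names (`sobel_magnitude`, `edge_mask`), the sample image, and the threshold value are assumptions chosen for illustration, not part of any particular machine vision product.

```python
from typing import List

Image = List[List[int]]

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img: Image) -> Image:
    """Return |Gx| + |Gy| per interior pixel; border pixels stay at 0."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    p = img[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * p
                    gy += SOBEL_Y[dy][dx] * p
            out[y][x] = abs(gx) + abs(gy)
    return out


def edge_mask(img: Image, threshold: int) -> Image:
    """Mark pixels whose gradient magnitude reaches a fixed threshold."""
    return [[1 if v >= threshold else 0 for v in row]
            for row in sobel_magnitude(img)]


# Hypothetical 5x6 image: dark left half, bright right half.
image = [[10, 10, 10, 200, 200, 200] for _ in range(5)]
mask = edge_mask(image, threshold=400)  # threshold is an illustrative value
for row in mask:
    print(row)
```

The same input always yields the same mask, which is the property that makes this style of processing predictable on a production line. A learned model would replace the fixed kernels and threshold with parameters fitted to training data.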

Key Differences Between Artificial and Machine Vision

Artificial vision mimics human sight by using AI-driven algorithms such as neural networks to interpret visual data, allowing robots to recognize objects, colors, and shapes in dynamic environments. Machine vision relies on specialized cameras and rule-based image processing for automated inspection, measurement, and quality control in industrial settings. The key differences are adaptability and cognitive processing: artificial vision learns and generalizes, whereas machine vision is task-specific and rule-based.

Applications of Artificial Vision in Robotics

Artificial vision in robotics enables advanced perception capabilities such as object recognition, scene understanding, and autonomous navigation, facilitating tasks like robotic pick-and-place, quality inspection, and obstacle avoidance. Unlike traditional machine vision, which relies on predefined algorithms and simple image processing, artificial vision employs deep learning and neural networks to interpret complex visual data in dynamic environments. This enhanced sensory processing drives innovations in industrial automation, service robots, and autonomous vehicles, improving accuracy and adaptability in real-time decision-making.

Machine Vision Use Cases in Industrial Automation

Machine vision systems are pivotal in industrial automation for tasks such as quality inspection, object recognition, and assembly verification. They utilize cameras and image processing algorithms to detect defects on production lines, ensuring consistent product quality and reducing human error. This technology enhances efficiency and precision by enabling real-time monitoring and automated decision-making in manufacturing environments.
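A rule-based defect check of the kind described above can be sketched in a few lines. This is a simplified illustration, assuming a grayscale image stored as a list of rows and a defect that shows up as dark pixels on a bright part. The names `count_dark_pixels` and `inspect`, and both threshold values, are hypothetical.

```python
from typing import Dict, List

Image = List[List[int]]


def count_dark_pixels(img: Image, dark_below: int) -> int:
    """Count pixels darker than a fixed intensity threshold."""
    return sum(1 for row in img for p in row if p < dark_below)


def inspect(img: Image, dark_below: int = 50, max_dark_pixels: int = 2) -> Dict[str, object]:
    """Pass the part if it has no more dark pixels than the allowed maximum."""
    dark = count_dark_pixels(img, dark_below)
    return {"dark_pixels": dark, "passed": dark <= max_dark_pixels}


# Hypothetical 4x4 images: a clean part and one with a dark scratch.
good_part = [[220] * 4 for _ in range(4)]
scratched_part = [row[:] for row in good_part]
for x in range(4):
    scratched_part[1][x] = 20  # scratch across the second row

print(inspect(good_part))
print(inspect(scratched_part))
```

Real inspection stations add controlled lighting, calibrated optics, and region-of-interest masks, but the decision itself often reduces to a fixed, auditable rule like this one.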

Accuracy and Limitations of Both Approaches

Artificial vision systems rely heavily on deep learning algorithms to interpret complex visual data, offering high accuracy in recognizing patterns and objects, but their performance can degrade under variable lighting or occlusion. Machine vision, typically using traditional image processing techniques and structured lighting, delivers precise measurements and defect detection with consistent accuracy in controlled environments but struggles with adaptability to unexpected scenarios. Both approaches face limitations: artificial vision demands extensive training data and computational resources, while machine vision lacks the flexibility to generalize across diverse and dynamic visual inputs.
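The sensitivity of fixed rules to conditions outside the controlled environment can be shown with a small experiment. The sketch below dims a synthetic image and compares a fixed intensity threshold with a threshold set relative to the mean brightness. The relative threshold is one simple mitigation chosen here for illustration, not a method the text prescribes, and all names and values are assumptions.

```python
from typing import List

Image = List[List[int]]


def dim(img: Image, percent: int) -> Image:
    """Scale every pixel to the given percentage of its brightness."""
    return [[p * percent // 100 for p in row] for row in img]


def fixed_rule(img: Image, dark_below: int = 50) -> int:
    """Count defect pixels using an absolute intensity threshold."""
    return sum(1 for row in img for p in row if p < dark_below)


def relative_rule(img: Image, fraction: float = 0.5) -> int:
    """Count defect pixels darker than a fraction of the mean brightness."""
    pixels = [p for row in img for p in row]
    threshold = fraction * sum(pixels) / len(pixels)
    return sum(1 for p in pixels if p < threshold)


# Hypothetical 4x4 part: bright surface with two dark defect pixels.
part = [[200] * 4 for _ in range(4)]
part[1][1] = part[2][2] = 20
dimmed = dim(part, 20)  # simulate lighting dropping to 20%

print(fixed_rule(part), fixed_rule(dimmed))
print(relative_rule(part), relative_rule(dimmed))
```

Under nominal lighting both rules find the two defect pixels. After dimming, the fixed rule flags every pixel as defective, while the relative rule still finds two. The relative rule only handles uniform brightness changes, though. Shadows, glare, or occlusion would need either tighter environmental control or a model trained on such variation.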

Integration Challenges in Robotics

Artificial vision systems in robotics rely on deep learning algorithms to interpret complex visual data, whereas machine vision typically depends on structured image processing techniques for specific tasks. Integration challenges arise from the need to synchronize these diverse technologies with robotic control systems, requiring real-time data processing and precise calibration. Compatibility issues between software frameworks and hardware limitations often hinder seamless operation and scalability in automated environments.
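One concrete form of the synchronization problem is pairing each camera frame with the robot pose recorded closest to it in time. The following is a minimal sketch, assuming both streams carry integer millisecond timestamps on a shared clock and that pose timestamps are sorted. The function name `nearest_pose` and the 5 ms tolerance are hypothetical.

```python
from bisect import bisect_left
from typing import List, Optional


def nearest_pose(frame_ts: int, pose_ts: List[int], max_skew: int) -> Optional[int]:
    """Return the index of the pose closest in time to the frame.

    Returns None when the closest pose is further away than max_skew,
    so the caller can drop the frame instead of using a stale pose.
    pose_ts must be sorted in ascending order.
    """
    i = bisect_left(pose_ts, frame_ts)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(pose_ts)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(pose_ts[j] - frame_ts))
    return best if abs(pose_ts[best] - frame_ts) <= max_skew else None


# Hypothetical timestamps in milliseconds.
pose_times_ms = [0, 10, 20, 30]   # robot controller reports every 10 ms
frame_times_ms = [4, 19, 90]      # camera frames arrive irregularly

matches = [nearest_pose(t, pose_times_ms, max_skew=5) for t in frame_times_ms]
print(matches)
```

Rejecting frames that have no pose within tolerance is a deliberate choice in this sketch: acting on a mismatched frame and pose pair can be worse than skipping a frame.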

Impact on Autonomous Systems Development

Artificial vision enhances autonomous systems by enabling robots to interpret complex environments through neural networks, improving decision-making and adaptability. Machine vision relies on traditional image processing techniques that offer high accuracy for specific tasks, but it lacks the flexibility needed for dynamic scenarios such as autonomous navigation. Integrating artificial vision into autonomous systems results in more robust obstacle detection, real-time environment mapping, and improved interaction with unpredictable surroundings.

Future Trends: Artificial Vision vs Machine Vision

Future trends in robotics indicate artificial vision will increasingly leverage deep learning algorithms for enhanced object recognition and scene understanding, enabling more adaptive and autonomous systems. Machine vision systems are expected to integrate advanced sensor fusion and real-time processing capabilities, improving precision tasks in manufacturing and quality control. The convergence of artificial and machine vision technologies promises smarter robots with superior environmental awareness and decision-making efficiency.

Choosing the Right Vision System for Robotic Applications

Artificial vision systems utilize AI and deep learning algorithms to interpret complex visual data, enabling robots to adapt to dynamic environments. Machine vision relies on traditional image processing techniques for precise and consistent inspection tasks in controlled settings. Selecting the right vision system depends on factors like application complexity, environmental variability, real-time processing requirements, and budget constraints.
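The selection factors above can be turned into a rough first-pass checklist. The sketch below is purely illustrative: the `Requirements` fields, the equal weighting, and the `suggest` function are assumptions that encode this article's general claims (artificial vision suits complex, variable scenes; machine vision suits deterministic real-time tasks and tighter budgets), not an established selection method.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    complex_scenes: bool        # application complexity
    variable_environment: bool  # environmental variability
    hard_realtime: bool         # strict, deterministic timing needs
    tight_budget: bool          # budget constraints


def suggest(req: Requirements) -> str:
    """Count the factors favoring each approach (equal weights, illustrative)."""
    favors_artificial = int(req.complex_scenes) + int(req.variable_environment)
    favors_machine = int(req.hard_realtime) + int(req.tight_budget)
    if favors_artificial > favors_machine:
        return "artificial vision"
    if favors_machine > favors_artificial:
        return "machine vision"
    return "evaluate both"


# Hypothetical scenarios.
print(suggest(Requirements(True, True, False, False)))   # mobile robot in a warehouse
print(suggest(Requirements(False, False, True, True)))   # fixed inspection station
print(suggest(Requirements(True, False, True, False)))   # mixed requirements
```

A tie is a signal to prototype both approaches or consider a hybrid, since in practice these factors rarely carry equal weight.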

Artificial vision vs Machine vision Infographic

techiny.com
