SLAM (simultaneous localization and mapping) fuses sensor data to build a map of an unknown environment while tracking the robot's position within it, which makes it more accurate than odometry alone over long runs. Odometry estimates position from wheel rotations or other motion data, but its errors accumulate over time because of wheel slippage and uneven surfaces. Using odometry as the motion input to SLAM improves the reliability of both localization and mapping in robotics applications.

Table of Comparison

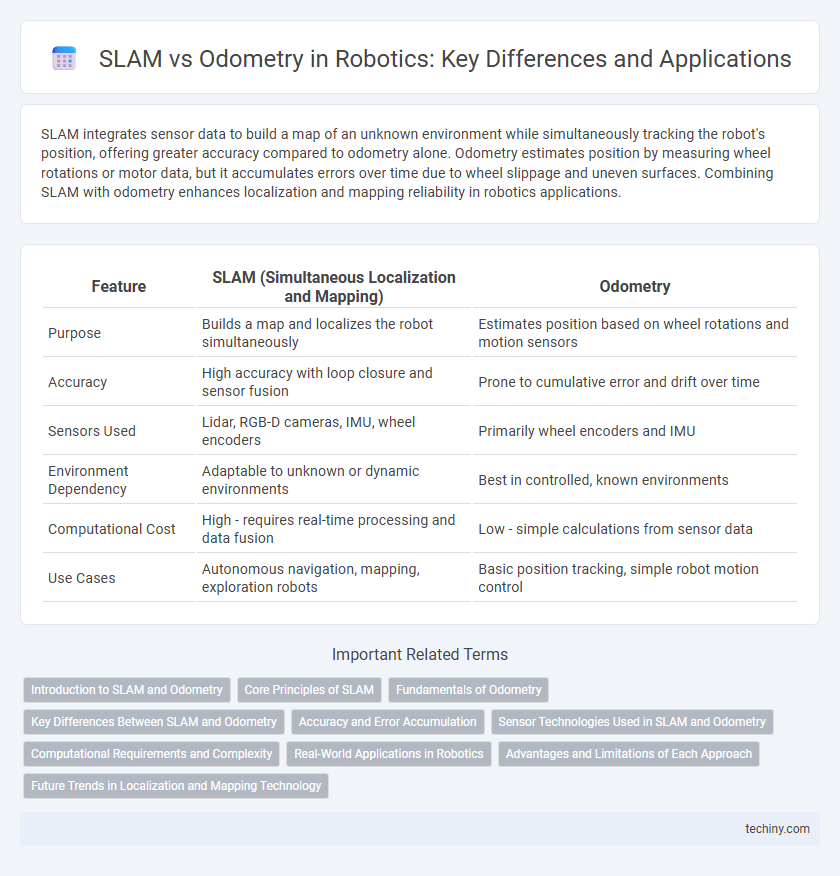

| Feature | SLAM (Simultaneous Localization and Mapping) | Odometry |
|---|---|---|
| Purpose | Builds a map and localizes the robot simultaneously | Estimates position based on wheel rotations and motion sensors |
| Accuracy | High accuracy with loop closure and sensor fusion | Prone to cumulative error and drift over time |
| Sensors Used | LiDAR, RGB-D cameras, IMU, wheel encoders | Primarily wheel encoders and IMU |
| Environment Dependency | Adaptable to unknown or dynamic environments | Best in controlled, known environments |
| Computational Cost | High - requires real-time processing and data fusion | Low - simple calculations from sensor data |
| Use Cases | Autonomous navigation, mapping, exploration robots | Basic position tracking, simple robot motion control |

Introduction to SLAM and Odometry

Simultaneous Localization and Mapping (SLAM) lets a robot build a map of an unknown environment while tracking its own position within that map, a capability that autonomous navigation in dynamic or unstructured spaces depends on. Odometry estimates the robot's position from wheel encoders and inertial sensors by integrating its displacement over time, so without external references its errors accumulate as drift. SLAM uses observations from sensors such as LiDAR, cameras, and IMUs to correct that drift, giving the spatial awareness that tasks like path planning and obstacle avoidance require.

Core Principles of SLAM

SLAM integrates sensor data to build a map of an unknown environment while tracking the robot's position within that map. This overcomes the main limitation of odometry, which relies on incremental motion data (typically from wheel encoders) and is therefore subject to cumulative error. At its core, SLAM is a probabilistic state estimation problem: techniques such as the Extended Kalman Filter (EKF), particle filters, and graph-based SLAM fuse measurements from LiDAR, cameras, or IMUs into a joint estimate of the robot pose and the map. Because that estimate is tied to observed features of the environment, robots can navigate complex environments with more accuracy and robustness than odometry-based navigation, which has no environmental awareness and no means of correcting drift.
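The predict-and-correct cycle behind these estimators can be illustrated with a deliberately small sketch. It is a plain linear Kalman filter in one dimension, not a full EKF and not a complete SLAM system: the map is left out, and `measured_pos` stands in for a position fix derived from observing a landmark whose location is assumed to be known. The `Belief` type, the function names, and all noise values are hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    """Gaussian belief over a 1D robot position (hypothetical helper type)."""
    mean: float      # estimated position in metres
    variance: float  # uncertainty of that estimate in metres squared


def predict(belief: Belief, odom_delta: float, odom_var: float) -> Belief:
    """Motion step: shift the estimate by the odometry reading; uncertainty grows."""
    return Belief(belief.mean + odom_delta, belief.variance + odom_var)


def update(belief: Belief, measured_pos: float, meas_var: float) -> Belief:
    """Measurement step: blend in an external position fix; uncertainty shrinks."""
    gain = belief.variance / (belief.variance + meas_var)  # Kalman gain in [0, 1]
    mean = belief.mean + gain * (measured_pos - belief.mean)
    variance = (1.0 - gain) * belief.variance
    return Belief(mean, variance)


belief = Belief(mean=0.0, variance=0.0)
for _ in range(10):                       # ten 1 m odometry steps, no correction
    belief = predict(belief, odom_delta=1.0, odom_var=0.04)
drifted_variance = belief.variance        # uncertainty accumulated by odometry alone

# One landmark-based fix (assumed values) pulls the estimate back and
# reduces the variance below what odometry alone had accumulated.
belief = update(belief, measured_pos=9.7, meas_var=0.1)
```

Every `predict` call adds variance, which is the drift the article describes; only `update`, which needs an external observation, can remove it. An EKF applies the same two steps to nonlinear motion and measurement models by linearizing them around the current estimate.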

Fundamentals of Odometry

Odometry is a fundamental technique in robotics that estimates a robot's position by integrating data from wheel encoders and inertial measurement units (IMUs). Unlike SLAM, which builds a map and localizes the robot within it, odometry relies solely on incremental motion data, so errors from sources such as wheel slip and sensor drift accumulate with every update. Even so, precise odometry supplies the motion estimates that more complex localization methods like SLAM take as input, which improves navigation accuracy in autonomous robots.
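As a minimal sketch of what integrating incremental motion data means, the function below applies the standard dead-reckoning update for a differential-drive robot. The article does not specify a drive type, so the differential-drive model, the function name `integrate_odometry`, and the 0.3 m wheel base are assumptions made for this example.

```python
import math


def integrate_odometry(x, y, theta, d_left, d_right, wheel_base):
    """Advance a differential-drive pose by one pair of wheel travel distances.

    d_left and d_right are the distances in metres rolled by each wheel since
    the last update (encoder ticks times metres per tick); wheel_base is the
    distance between the two wheels. Returns the new (x, y, theta).
    """
    d_center = (d_left + d_right) / 2.0          # distance moved by the robot centre
    d_theta = (d_right - d_left) / wheel_base    # heading change in radians
    # Midpoint approximation: move along the average heading of the step.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    # Wrap the heading to the interval [-pi, pi).
    theta = (theta + d_theta + math.pi) % (2.0 * math.pi) - math.pi
    return x, y, theta


pose = (0.0, 0.0, 0.0)
for _ in range(100):                             # 100 straight steps of 1 cm each
    pose = integrate_odometry(*pose, 0.01, 0.01, wheel_base=0.3)
```

Each new pose is computed only from the previous pose and the latest wheel readings. Any slip or calibration error in `d_left` or `d_right` is folded into the pose and carried into every later update, because nothing in the calculation refers to the outside world.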

Key Differences Between SLAM and Odometry

SLAM integrates sensor data to build a map of an unknown environment while tracking the robot's position within it, and it improves accuracy through loop closure and sensor fusion. Odometry relies solely on wheel encoders or other internal sensors to estimate the robot's trajectory, and it often accumulates significant drift over time because of wheel slippage and sensor noise. Unlike odometry, SLAM corrects positional errors by re-observing environmental landmarks, which enables robust navigation in complex and dynamic settings.
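Loop closure can be sketched in one dimension. The example assumes the robot drives out and back along a corridor and recognizes its starting point at the end, so the first and last poses should coincide. It also assumes every odometry step is equally trustworthy and the loop closure is exact; under those assumptions the least-squares correction spreads the accumulated error evenly over the steps. Real graph-based SLAM solves a weighted, multi-dimensional version of this problem. The function name `close_loop` and the 2% scale error are hypothetical.

```python
def close_loop(odom_steps, loop_offset=0.0):
    """Correct a 1D trajectory so its end matches a loop-closure constraint.

    odom_steps: measured displacement of each step in metres.
    loop_offset: displacement between the first and last pose according to
    the loop closure (0.0 when the robot recognises its starting point).
    Returns the corrected poses, with the first pose fixed at 0.0.
    """
    if not odom_steps:
        return [0.0]
    residual = loop_offset - sum(odom_steps)     # drift exposed by the loop closure
    correction = residual / len(odom_steps)      # equal share of the drift per step
    poses = [0.0]
    for step in odom_steps:
        poses.append(poses[-1] + step + correction)
    return poses


# Out-and-back run: the true motion is +1 m five times, then -1 m five times,
# but an assumed encoder scale error over-reports forward motion by 2%.
odom = [1.02] * 5 + [-1.0] * 5
raw_end = sum(odom)        # dead reckoning ends about 0.1 m away from the start
poses = close_loop(odom)   # corrected trajectory ends back at the start
```

Odometry alone has no way to detect the 0.1 m discrepancy in `raw_end`; it only becomes visible once the robot recognizes a place it has already visited. The even split is a simplification: here the error actually came only from the forward steps, so the corrected midpoint is closer to the truth than the raw estimate but not exact.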

Accuracy and Error Accumulation

SLAM achieves greater accuracy in robotic navigation by continuously correcting position estimates against recognized environmental features, whereas odometry relies solely on wheel encoders or inertial sensors, so its error grows with distance traveled. SLAM algorithms limit this error accumulation by combining sensor data with probabilistic models, which allows real-time map updates and drift correction. During extended operation, uncorrected odometry error produces significant localization drift, which is why SLAM matters for robust and precise autonomous navigation.
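To give a feel for how quickly uncorrected error can build up, the sketch below dead-reckons a straight drive while the heading estimate picks up a small constant bias at every step. The bias of 0.1 degrees per 1 m step is an illustrative assumption, not a measured figure, and `lateral_error` is a hypothetical helper name.

```python
import math


def lateral_error(num_steps, step_len=1.0, heading_bias_deg=0.1):
    """Cross-track error of dead reckoning after driving a straight line.

    The robot truly moves straight along x, but the heading estimate gains a
    constant bias every step (an assumed error source), so the estimated
    path curls away from the true one.
    """
    bias = math.radians(heading_bias_deg)
    est_y = 0.0
    est_theta = 0.0
    for _ in range(num_steps):
        est_theta += bias                        # heading error accumulates
        est_y += step_len * math.sin(est_theta)  # and leaks into the position
    return abs(est_y)                            # the true y stays at 0


near = lateral_error(10)    # error after 10 m of travel
far = lateral_error(100)    # error after 100 m of travel
```

With these assumed numbers the estimate is off by about 0.1 m after 10 m but by nearly 9 m after 100 m: ten times the distance yields roughly ninety times the error, because a heading error compounds into position error. This faster-than-linear growth is why long missions need the external corrections that SLAM provides.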

Sensor Technologies Used in SLAM and Odometry

SLAM combines sensors such as LiDAR, RGB-D cameras, and IMUs to build maps and localize the robot in real time, giving it higher accuracy and environmental awareness than odometry alone. Odometry relies primarily on wheel encoders and inertial sensors, whose errors from wheel slippage and sensor drift accumulate over time and limit its precision. Fusing multiple sensors makes SLAM more robust because each modality compensates for the weaknesses of the others, which is essential for complex navigation tasks in dynamic environments.

Computational Requirements and Complexity

Simultaneous Localization and Mapping (SLAM) demands significantly higher computational resources compared to odometry due to its dual task of building maps while simultaneously estimating the robot's position. SLAM algorithms integrate sensor data, perform loop closure detection, and optimize pose graphs, resulting in complex processing overhead often requiring powerful onboard processors or dedicated hardware acceleration. In contrast, odometry relies on simpler kinematic models and incremental position updates from wheel encoders, thus maintaining low computational complexity but accumulating error over time.

Real-World Applications in Robotics

SLAM is well suited to real-world deployment because it lets robots create and update maps while tracking their location, which is essential in dynamic, unstructured environments. Odometry is useful for short-term positioning from wheel or sensor displacement data, but its cumulative error limits its effectiveness in large-scale or long-duration tasks. Integrating SLAM with odometry improves navigation precision in applications such as autonomous vehicles, warehouse automation, and exploration drones.

Advantages and Limitations of Each Approach

SLAM (Simultaneous Localization and Mapping) offers robust environment mapping and localization by integrating sensor data, enabling autonomous robots to navigate unknown spaces with high accuracy and adaptability. Odometry relies on wheel encoder data for position estimation, providing simplicity and real-time performance but suffering from cumulative drift and reduced accuracy over time. While SLAM addresses odometry's limitations by correcting positional errors through mapping, it requires greater computational resources and complex sensor fusion, posing challenges for resource-constrained robotic platforms.

Future Trends in Localization and Mapping Technology

Future trends in localization and mapping technology center on tighter integration of SLAM with advanced sensor fusion, combining LiDAR, visual, and inertial data to improve accuracy and robustness in dynamic environments. Machine learning is increasingly used to improve real-time data processing and semantic understanding, helping robots adapt to complex scenarios. Edge computing and 5G connectivity are expected to ease the computational limits on SLAM systems, supporting scalable applications in autonomous navigation and smart robotics.

SLAM (simultaneous localization and mapping) vs odometry Infographic

techiny.com
