SLAM (Simultaneous Localization and Mapping) integrates sensor data to build a map of an unknown environment while simultaneously tracking the robot's location within it, which supports robust navigation in dynamic or unstructured settings. Visual Odometry estimates the robot's motion from camera images, providing low-latency, real-time pose updates without building a global map of the environment. Combining the two improves autonomous navigation: Visual Odometry supplies fast local motion estimates, while SLAM adds a persistent map and corrects accumulated drift.

Table of Comparison

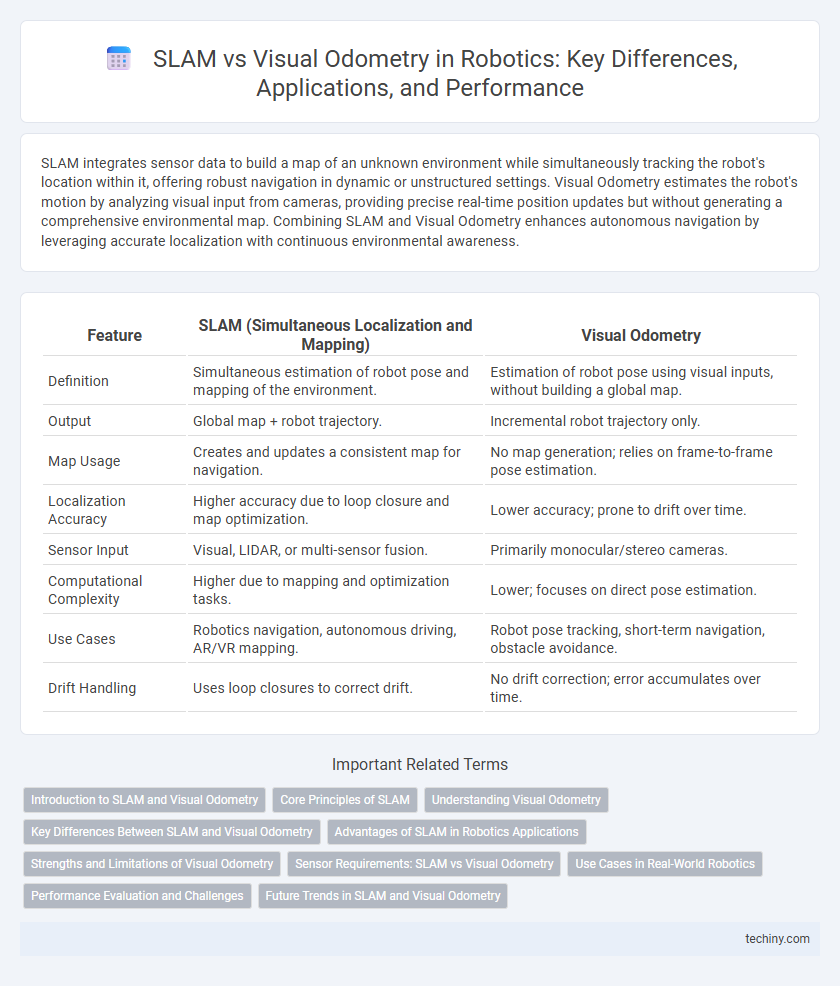

| Feature | SLAM (Simultaneous Localization and Mapping) | Visual Odometry |
|---|---|---|
| Definition | Simultaneous estimation of the robot pose and a map of the environment. | Estimation of the robot pose from visual input, without building a global map. |
| Output | Global map + robot trajectory. | Incremental robot trajectory only. |
| Map Usage | Creates and updates a consistent map for navigation. | No map generation; relies on frame-to-frame pose estimation. |
| Localization Accuracy | Higher long-term accuracy due to loop closure and map optimization. | Lower over long trajectories; prone to drift over time. |
| Sensor Input | Visual, LiDAR, or multi-sensor fusion. | Primarily monocular or stereo cameras. |
| Computational Complexity | Higher, due to mapping and optimization tasks. | Lower; focuses on incremental pose estimation. |
| Use Cases | Robot navigation, autonomous driving, AR/VR mapping. | Robot pose tracking, short-term navigation, obstacle avoidance. |
| Drift Handling | Uses loop closures to correct drift. | No global drift correction; error accumulates over time. |

Introduction to SLAM and Visual Odometry

SLAM (Simultaneous Localization and Mapping) is a computational technique that enables a robot to build a map of an unknown environment while simultaneously tracking its own position within it, a capability crucial for autonomous navigation. Visual Odometry estimates a robot's position and orientation from sequential camera images, relying primarily on visual data and without creating a global map. Both methods are foundational in robotics: SLAM provides comprehensive spatial awareness, while Visual Odometry offers efficient, real-time motion tracking.

Core Principles of SLAM

SLAM (Simultaneous Localization and Mapping) integrates sensor data to construct a map of an unknown environment while simultaneously tracking the robot's location within it. Classical approaches estimate the robot pose and the map jointly with probabilistic filters such as the Extended Kalman Filter or particle filters, while many modern systems use graph-based optimization. Unlike Visual Odometry, which only estimates motion from camera images, SLAM can run on cameras alone or fuse inputs such as LiDAR, IMU, and visual cues for robustness; drift is reduced mainly by recognizing previously visited places and re-optimizing the map. The core principles of SLAM are loop closure detection, map optimization, and robust data association, which together keep the robot's spatial model coherent during autonomous navigation.
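To make the filtering idea concrete, here is a minimal sketch of Kalman-filter SLAM in one dimension. It assumes a robot moving along a line with odometry input `u` and a sensor that measures the signed distance `z = m - x` to a single static landmark at an unknown position `m`. Because this toy model is linear, the Extended Kalman Filter reduces to an ordinary Kalman filter; the function names and noise values are hypothetical choices for illustration, not part of any particular SLAM library.

```python
"""1D Kalman-filter SLAM sketch (hypothetical toy model).

State mu = [x, m]: robot position x and landmark position m.
Motion:      x <- x + u        (noise variance q; the landmark is static)
Measurement: z  = m - x        (noise variance r), i.e. H = [-1, 1]
"""


def predict(mu, P, u, q):
    """Propagate the joint state with odometry u; only the robot moves."""
    x, m = mu
    P = [[P[0][0] + q, P[0][1]],
         [P[1][0], P[1][1]]]
    return [x + u, m], P


def update(mu, P, z, r):
    """Correct robot and landmark estimates jointly with one range reading."""
    x, m = mu
    innovation = z - (m - x)
    pht = [P[0][1] - P[0][0], P[1][1] - P[1][0]]  # P @ H^T
    s = pht[1] - pht[0] + r                      # H @ P @ H^T + r
    k = [pht[0] / s, pht[1] / s]                 # Kalman gain
    mu = [x + k[0] * innovation, m + k[1] * innovation]
    # P <- (I - K H) P, where K H = [[-k0, k0], [-k1, k1]]
    a = [[1.0 + k[0], -k[0]],
         [k[1], 1.0 - k[1]]]
    P = [[sum(a[i][j] * P[j][c] for j in range(2)) for c in range(2)]
         for i in range(2)]
    return mu, P


if __name__ == "__main__":
    true_landmark = 10.0
    mu = [0.0, 0.0]                   # the landmark guess is arbitrary...
    P = [[0.0, 0.0], [0.0, 1e6]]      # ...so it gets a huge initial variance
    for step in range(1, 6):
        mu, P = predict(mu, P, u=1.0, q=0.01)
        z = true_landmark - step      # noise-free reading, for clarity
        mu, P = update(mu, P, z, r=0.1)
        print(step, [round(v, 3) for v in mu], round(P[1][1], 3))
```

After the first reading the landmark estimate jumps to about 10 and its variance collapses, while the off-diagonal terms of `P` record that the robot and landmark errors are correlated. That correlation is what lets later observations of the landmark correct the robot pose.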

Understanding Visual Odometry

Visual Odometry (VO) estimates a robot's position and orientation by analyzing sequential camera images, enabling real-time motion tracking without relying on external references. Unlike SLAM, which simultaneously maps the environment and localizes the robot, VO focuses on incremental motion estimation, which makes it faster to compute but leaves it without a global map. VO algorithms typically use feature detection and matching, optical flow, or direct (intensity-based) methods to track changes between frames, from which the relative motion is inferred.
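The feature-based variant can be sketched in a simplified planar setting. The example below assumes the features matched between two frames have already been converted to metric 2D coordinates (as a downward-looking camera over flat ground might provide) and estimates the least-squares rotation and translation that map the previous frame's points onto the current ones. Real VO pipelines work with 3D geometry (for example, essential-matrix or PnP estimation with outlier rejection), so treat this as an illustration of the idea rather than a production method; `estimate_rigid_2d` is a hypothetical helper.

```python
import math


def estimate_rigid_2d(prev_pts, curr_pts):
    """Least-squares (theta, tx, ty) such that curr ≈ R(theta) @ prev + t.

    prev_pts, curr_pts: matched (x, y) feature positions from two frames.
    The camera's own motion is the inverse of this point transform.
    """
    n = len(prev_pts)
    if n != len(curr_pts) or n < 2:
        raise ValueError("need at least two matched point pairs")
    # Centroids of both point sets.
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    ccx = sum(c[0] for c in curr_pts) / n
    ccy = sum(c[1] for c in curr_pts) / n
    # Sum dot and cross products of the centred pairs; their ratio gives theta.
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = qx - ccx, qy - ccy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated previous centroid onto the current one.
    tx = ccx - (c * pcx - s * pcy)
    ty = ccy - (s * pcx + c * pcy)
    return theta, tx, ty


if __name__ == "__main__":
    prev = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
    th, t = 0.1, (0.5, -0.2)
    curr = [(math.cos(th) * x - math.sin(th) * y + t[0],
             math.sin(th) * x + math.cos(th) * y + t[1]) for x, y in prev]
    print(estimate_rigid_2d(prev, curr))  # recovers theta ≈ 0.1, t ≈ (0.5, -0.2)
```

Chaining such frame-to-frame estimates yields the trajectory, which is why any per-frame error propagates into every later pose.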

Key Differences Between SLAM and Visual Odometry

SLAM (Simultaneous Localization and Mapping) builds a map of an unknown environment while tracking the robot's location within it, and uses loop closure to keep the global position estimate consistent. Visual odometry estimates the robot's movement from sequential camera images as relative pose changes; it builds no map and, without global correction, accumulates drift over time. SLAM adds sensor fusion and optimization to improve accuracy, whereas visual odometry is computationally simpler and relies primarily on visual data for local motion estimation.
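The role of loop closure can be illustrated with a deliberately tiny pose graph. In the sketch below, a robot drives five 1 m steps forward and five back along a line, but its odometry over-reports forward steps and under-reports backward steps by 0.1 m (a hypothetical bias), so dead reckoning ends about 1 m from the start. Adding one loop-closure constraint ("pose 10 is the same place as pose 0") and solving the weighted least-squares problem spreads that error over the whole trajectory. The 1D setting, weights, and function names are assumptions for illustration; real SLAM back ends optimize 2D or 3D poses with nonlinear solvers.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def optimize_pose_graph_1d(odometry, loop_closures, w_odom=1.0, w_loop=100.0):
    """Weighted least-squares 1D pose graph with pose 0 anchored at 0.

    odometry: measured displacements u_k between pose k and pose k + 1.
    loop_closures: (i, j, z) tuples meaning "pose j - pose i was measured as z".
    Returns the optimized poses [x_0, ..., x_n].
    """
    n = len(odometry)              # unknowns are x_1 .. x_n
    A = [[0.0] * n for _ in range(n)]
    b = [0.0] * n

    def add_edge(i, j, z, w):
        # Residual x_j - x_i - z: add w * J^T J to A and w * J^T z to b.
        # Pose 0 is fixed, so its row and column are simply dropped.
        for a, sa in ((i, -1.0), (j, 1.0)):
            if a == 0:
                continue
            b[a - 1] += w * sa * z
            for c, sc in ((i, -1.0), (j, 1.0)):
                if c != 0:
                    A[a - 1][c - 1] += w * sa * sc

    for k, u in enumerate(odometry):
        add_edge(k, k + 1, u, w_odom)
    for i, j, z in loop_closures:
        add_edge(i, j, z, w_loop)
    return [0.0] + solve(A, b)


if __name__ == "__main__":
    odom = [1.1] * 5 + [-0.9] * 5   # biased odometry; true steps are +1, then -1
    print("dead-reckoned end:", round(sum(odom), 3))
    poses = optimize_pose_graph_1d(odom, loop_closures=[(0, 10, 0.0)])
    print("optimized end:", round(poses[-1], 3), "turnaround:", round(poses[5], 3))
```

Because the loop-closure edge is weighted far more heavily than each odometry edge, the optimizer pulls the final pose almost back to the origin and shifts the turnaround pose close to its true 5 m position.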

Advantages of SLAM in Robotics Applications

SLAM offers robust environment mapping and accurate robot localization by integrating sensor data over time, enabling long-term navigation in dynamic and complex settings. Unlike visual odometry, which primarily estimates motion from camera images and can accumulate drift, SLAM continuously corrects positional errors through loop closure and map optimization. These capabilities make SLAM essential for autonomous robots requiring precise spatial awareness and reliable operation in unstructured environments.

Strengths and Limitations of Visual Odometry

Visual Odometry excels at real-time pose estimation from sequential camera images, enabling motion tracking without a pre-existing map. However, its accuracy degrades in feature-poor or highly dynamic scenes, and it is sensitive to illumination changes and motion blur. Unlike SLAM, Visual Odometry does not build a consistent global map, so small per-frame errors accumulate, which limits its use in large-scale or long-term navigation tasks.
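The accumulation of error can be shown numerically by chaining frame-to-frame motion estimates, as VO does. The sketch below composes planar relative motions `(dx, dy, dtheta)` around a 10 m square: with perfect estimates the path closes, while a hypothetical heading error of 0.5° per step leaves the final position more than a meter from the start, and nothing in pure VO pulls it back. The square path, step size, and error value are illustrative assumptions.

```python
import math


def compose(pose, motion):
    """Apply a relative motion (dx, dy, dtheta), given in the robot frame."""
    x, y, th = pose
    dx, dy, dth = motion
    return (x + math.cos(th) * dx - math.sin(th) * dy,
            y + math.sin(th) * dx + math.cos(th) * dy,
            th + dth)


def integrate(motions, start=(0.0, 0.0, 0.0)):
    """Chain relative motions into a trajectory, as dead-reckoning VO does."""
    poses = [start]
    for m in motions:
        poses.append(compose(poses[-1], m))
    return poses


def square_loop(side_steps=10, yaw_bias=0.0):
    """Relative motions for a square; yaw_bias is a hypothetical per-step error."""
    motions = []
    for _ in range(4):
        motions += [(1.0, 0.0, yaw_bias)] * side_steps  # 1 m forward steps
        motions.append((0.0, 0.0, math.pi / 2))         # turn 90 degrees left
    return motions


if __name__ == "__main__":
    for bias_deg in (0.0, 0.5):
        end = integrate(square_loop(yaw_bias=math.radians(bias_deg)))[-1]
        gap = math.hypot(end[0], end[1])
        print(f"bias {bias_deg} deg/step -> end-point gap {gap:.2f} m")
```

SLAM would detect that the robot has returned to its starting point and use that constraint to remove most of the gap.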

Sensor Requirements: SLAM vs Visual Odometry

SLAM can run on a single sensor, such as a monocular, stereo, or RGB-D camera or a LiDAR, but practical systems often combine several sensors to build accurate maps and localize simultaneously. Visual Odometry depends primarily on monocular or stereo cameras and estimates camera motion from sequential images; it does not aim to build a comprehensive map. Fusing several sensors generally makes SLAM more robust and accurate than the mostly camera-only Visual Odometry implementations, although visual-inertial variants of odometry also fuse an IMU.
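One practical difference between the two camera setups is where metric scale comes from. For a rectified stereo pair, depth follows from disparity as Z = f·B/d, with focal length f in pixels, baseline B in meters, and disparity d in pixels; a single monocular camera has no baseline at one instant, so its depth, and hence the scale of its motion, is known only up to an unknown factor. The sketch below evaluates that relation and its first-order uncertainty; the camera parameters are hypothetical.

```python
def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d (meters) for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def stereo_depth_std(depth_m, focal_px, baseline_m, disparity_std_px=1.0):
    """First-order depth uncertainty: sigma_Z ≈ Z**2 / (f * B) * sigma_d."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_std_px


if __name__ == "__main__":
    f_px, baseline = 700.0, 0.12   # hypothetical camera parameters
    for d in (42.0, 8.4):
        z = stereo_depth(d, f_px, baseline)
        sigma = stereo_depth_std(z, f_px, baseline)
        print(f"d={d:5.1f} px -> Z={z:5.2f} m, sigma_Z≈{sigma:.3f} m")
```

Because the depth error grows with the square of the distance, distant points constrain a stereo motion estimate only weakly.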

Use Cases in Real-World Robotics

SLAM suits complex environments that require consistent map updates and robot localization, such as autonomous drones navigating dynamic indoor spaces or warehouse robots that plan paths over a maintained map. Visual Odometry is often preferred for real-time, short-term navigation tasks where lightweight computation and fast pose estimation matter, such as outdoor delivery robots, and it commonly serves as one motion-estimation input in autonomous vehicles. Both approaches contribute to robotic perception, but SLAM provides the more comprehensive spatial understanding needed for long-duration missions and map reuse.

Performance Evaluation and Challenges

SLAM integrates sensor data to construct maps while simultaneously tracking the robot's position, offering robust performance in dynamic and large-scale environments at the cost of higher computational complexity. Visual Odometry estimates motion from camera images alone, providing faster real-time localization, but it is sensitive to lighting changes and, with a single (monocular) camera, suffers from scale ambiguity because absolute scale cannot be observed. Evaluations generally favor SLAM for accuracy and reliability across diverse, long-trajectory scenarios, whereas Visual Odometry is well suited to simpler, controlled settings or platforms with limited computational resources.
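Trajectory accuracy is commonly reported as absolute trajectory error (ATE), the root-mean-square distance between estimated and ground-truth positions at matching timestamps. The sketch below computes it for 2D trajectories, assuming they are already time-aligned and expressed in the same starting frame; benchmark tools normally first align the trajectories with a rigid-body transform (a similarity transform for monocular methods). The optional scale fit shows how an estimate that is correct only up to scale, as with monocular VO, can be evaluated; `ate_rmse` is a hypothetical helper.

```python
import math


def ate_rmse(estimate, ground_truth, fit_scale=False):
    """RMSE of position differences between time-aligned 2D trajectories.

    Assumes both trajectories share the same origin and orientation; only an
    optional global scale s is fitted, minimizing sum |s * e - g|^2.
    Returns (rmse, s).
    """
    if not estimate or len(estimate) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and time-aligned")
    s = 1.0
    if fit_scale:
        num = sum(ex * gx + ey * gy
                  for (ex, ey), (gx, gy) in zip(estimate, ground_truth))
        den = sum(ex * ex + ey * ey for ex, ey in estimate)
        s = num / den if den > 0 else 1.0
    sq = [(s * ex - gx) ** 2 + (s * ey - gy) ** 2
          for (ex, ey), (gx, gy) in zip(estimate, ground_truth)]
    return math.sqrt(sum(sq) / len(sq)), s


if __name__ == "__main__":
    ground_truth = [(float(i), 0.0) for i in range(5)]
    monocular = [(0.5 * i, 0.0) for i in range(5)]   # right shape, wrong scale
    print(ate_rmse(monocular, ground_truth))                  # large error
    print(ate_rmse(monocular, ground_truth, fit_scale=True))  # ~0 after scaling by 2
```

Reporting the error with and without the scale fit separates shape errors, such as drift, from the scale ambiguity that a single camera cannot resolve.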

Future Trends in SLAM and Visual Odometry

Future trends in SLAM and Visual Odometry emphasize tighter sensor fusion, combining LiDAR, RGB-D cameras, and inertial measurement units (IMUs) for better accuracy and robustness across diverse environments. Advances in deep learning enable real-time semantic mapping and understanding of dynamic scenes, extending robot autonomy. Research also targets the scalability and adaptability of SLAM systems for large-scale, multi-robot applications in autonomous vehicles and augmented reality.

SLAM (Simultaneous Localization and Mapping) vs Visual Odometry Infographic

techiny.com
