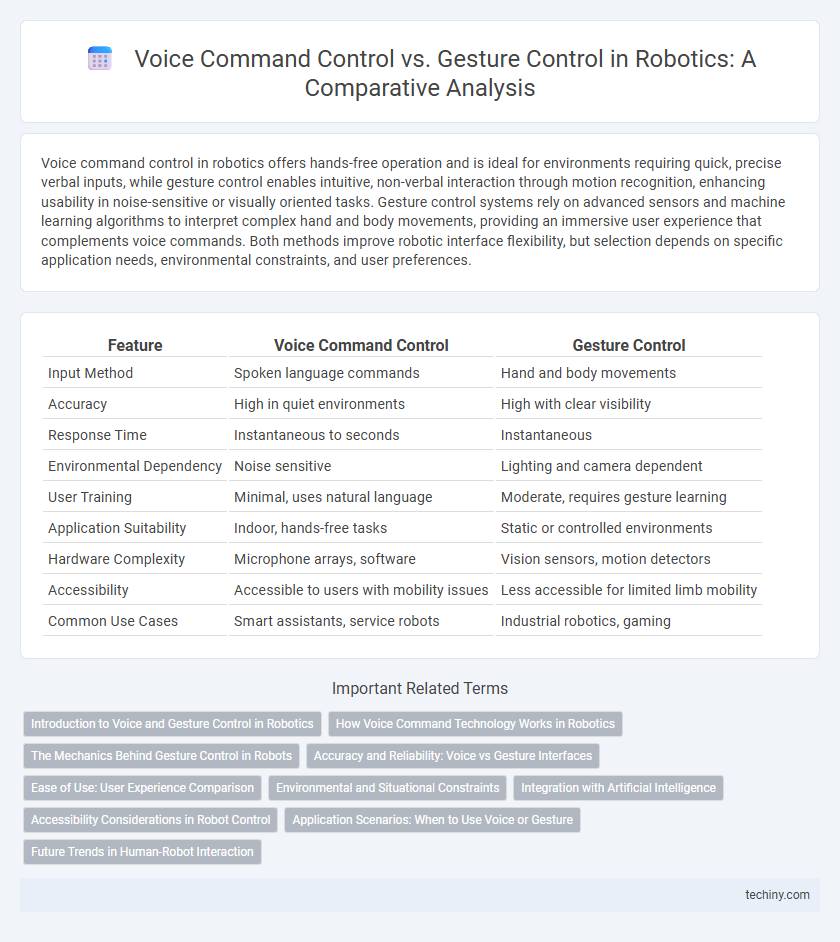

Voice command control in robotics offers hands-free operation and suits environments that call for quick, precise verbal input. Gesture control enables intuitive, non-verbal interaction through motion recognition, which helps in noisy settings, in places where speaking aloud is undesirable, and in visually oriented tasks. Gesture control systems rely on cameras, depth sensors, and machine learning models to interpret hand and body movements, and they complement voice commands rather than replace them. Both methods make robotic interfaces more flexible; the right choice depends on the application, environmental constraints, and user preferences.

Table of Comparison

| Feature | Voice Command Control | Gesture Control |
|---|---|---|
| Input Method | Spoken language commands | Hand and body movements |
| Accuracy | High in quiet environments | High with clear visibility |
| Response Time | Instantaneous to seconds | Instantaneous |
| Environmental Dependency | Noise sensitive | Lighting and camera dependent |
| User Training | Minimal, uses natural language | Moderate, requires gesture learning |
| Application Suitability | Indoor, hands-free tasks | Static or controlled environments |
| Hardware Complexity | Microphone arrays, software | Vision sensors, motion detectors |
| Accessibility | Accessible to users with mobility issues | Less accessible for limited limb mobility |
| Common Use Cases | Smart assistants, service robots | Industrial robotics, gaming |

Introduction to Voice and Gesture Control in Robotics

Voice command control in robotics enables machines to interpret and execute spoken instructions, enhancing hands-free operation and accessibility in complex environments. Gesture control utilizes motion sensors and cameras to detect human movements, allowing intuitive and contactless interaction with robotic systems. Both technologies improve robot responsiveness and user experience by leveraging natural communication methods for efficient task execution.

How Voice Command Technology Works in Robotics

Voice command control in robotics works as a pipeline. Microphones or microphone arrays capture the spoken input. An automatic speech recognition (ASR) model converts the audio to text. Natural language processing (NLP) then interprets that text as a command the robot can execute. The machine learning models in the recognition stage help separate speech from ambient noise, although accuracy still falls as background noise rises. This technology improves human-robot interaction by enabling hands-free operation and better accessibility across robotic applications.
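The last step of that pipeline, turning a transcript into something a robot can act on, can be sketched as follows. This is a minimal illustration that assumes an ASR engine has already produced the transcript. The small regular-expression grammar stands in for a real NLP/intent model, and `RobotCommand` and `parse_command` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RobotCommand:
    action: str                        # e.g. "move", "turn", "stop"
    direction: Optional[str] = None    # e.g. "forward", "left"
    distance_m: Optional[float] = None # optional distance in meters


# Hypothetical grammar: a few regexes standing in for an NLU/intent model.
MOVE_RE = re.compile(
    r"\b(?:move|go|drive)\s+(forward|backward)(?:\s+(\d+(?:\.\d+)?)\s*(?:m|meters?)\b)?"
)
TURN_RE = re.compile(r"\bturn\s+(left|right)\b")
STOP_RE = re.compile(r"\b(?:stop|halt)\b")


def parse_command(transcript: str) -> Optional[RobotCommand]:
    """Map an ASR transcript to a structured command, or None if nothing matches."""
    text = transcript.lower().strip()
    # Check for "stop" first so a safety command wins over anything else said.
    if STOP_RE.search(text):
        return RobotCommand("stop")
    m = MOVE_RE.search(text)
    if m:
        distance = float(m.group(2)) if m.group(2) else None
        return RobotCommand("move", m.group(1), distance)
    m = TURN_RE.search(text)
    if m:
        return RobotCommand("turn", m.group(1))
    return None


print(parse_command("Please move forward 2 meters"))
# RobotCommand(action='move', direction='forward', distance_m=2.0)
```

Returning `None` for unrecognized speech lets the caller ask the user to repeat, so the robot never guesses at an action.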

The Mechanics Behind Gesture Control in Robots

Gesture control in robots relies on cameras and motion sensors to detect human hand or body movements and translate them into robotic actions. Computer vision and machine learning models recognize these gestures in real time, from simple swipes to complex hand poses. Many systems add depth sensing, such as infrared depth cameras or LiDAR, to capture 3D spatial information. This makes recognition more robust when the user or the background is moving.
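To show how a recognized motion becomes a robot action, here is a minimal rule-based sketch for one simple gesture type, a swipe. It assumes an upstream hand detector (not shown, hypothetical) already supplies the hand's position in each frame as normalized image coordinates. The `classify_swipe` function, the `min_travel` threshold, and the gesture-to-action table are all illustrative assumptions. Production systems usually replace such rules with a trained classifier.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]  # normalized image coords: x to the right, y downward, 0..1


def classify_swipe(track: Sequence[Point], min_travel: float = 0.2) -> Optional[str]:
    """Classify a hand-position track as a swipe, or None if movement is too small.

    `min_travel` is an illustrative threshold in normalized image units.
    """
    if len(track) < 2:
        return None
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]
    # Ignore small jitters that do not travel far enough to count as a gesture.
    if max(abs(dx), abs(dy)) < min_travel:
        return None
    # The dominant axis decides between a horizontal and a vertical swipe.
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    # Image y grows downward, so a positive dy is a downward swipe.
    return "swipe_down" if dy > 0 else "swipe_up"


# Hypothetical mapping from gestures to robot actions.
GESTURE_TO_ACTION = {
    "swipe_left": "turn_left",
    "swipe_right": "turn_right",
    "swipe_up": "move_forward",
    "swipe_down": "stop",
}

track = [(0.20, 0.50), (0.35, 0.52), (0.55, 0.51), (0.70, 0.49)]
gesture = classify_swipe(track)
print(gesture, GESTURE_TO_ACTION.get(gesture))  # swipe_right turn_right
```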

Accuracy and Reliability: Voice vs Gesture Interfaces

In quiet or moderately noisy settings, voice command control can be highly accurate thanks to modern speech recognition and noise-cancellation technologies, but its accuracy degrades as background noise increases. Gesture control is intuitive, but it can be unreliable under poor lighting, with occlusions, or because of sensor limitations. Both interfaces depend on continued improvements in their machine learning models to stay precise and consistent in real-world robotics applications.
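A common way to handle unreliable recognition in either modality is to act only on confident results. Speech and gesture recognizers typically report a confidence score alongside the recognized label. The sketch below assumes such a score in [0, 1]. The per-modality thresholds and the `Recognition`/`accept` names are hypothetical, and real values would need tuning on field data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recognition:
    label: str         # recognized command, e.g. "stop"
    confidence: float  # recognizer score, assumed to lie in [0, 1]
    modality: str      # "voice" or "gesture"


# Hypothetical per-modality thresholds; tune them on data from the deployment site.
THRESHOLDS = {"voice": 0.70, "gesture": 0.80}


def accept(result: Recognition) -> Optional[str]:
    """Return the command if it clears its modality's threshold, else None.

    A None result would typically trigger a request to repeat the command.
    """
    threshold = THRESHOLDS.get(result.modality)
    if threshold is None:  # unknown modality: never act on it
        return None
    return result.label if result.confidence >= threshold else None


print(accept(Recognition("stop", 0.91, "voice")))         # stop
print(accept(Recognition("turn_left", 0.75, "gesture")))  # None
```

Rejecting low-confidence input trades some responsiveness for safety. The robot occasionally asks for a repeat instead of executing a misheard or misread command.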

Ease of Use: User Experience Comparison

Voice command control offers intuitive interaction with robots, letting users direct even complex tasks hands-free and with little physical effort. Gesture control relies on precise movements, which some users find challenging, but it provides a natural, non-verbal way to issue commands that keeps working in noisy environments. Overall, voice command generally rates higher for ease of use because it is accessible and has a minimal learning curve.

Environmental and Situational Constraints

Voice command control in robotics often struggles in noisy environments, where ambient sound interferes with speech recognition and limits its reliability. Gesture control performs well in such settings because it relies on silent, precise commands and needs no audio input. This makes it a good fit for loud areas or places where speaking aloud is undesirable. However, gesture recognition can be hindered by poor lighting, occlusions, or limited sensor range, which restricts its effectiveness in cluttered or dimly lit environments.
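To make the noise constraint concrete, a robot could measure the background audio level before relying on speech recognition. The sketch below computes the RMS level of a block of normalized samples (-1.0 to 1.0) in dBFS and compares it with a cut-off. The helpers `rms_dbfs` and `voice_reliable` and the -30 dBFS threshold are hypothetical choices for illustration, not values from any standard. The sketch uses the convention in which a full-scale square wave reads 0 dBFS, so a full-scale sine reads about -3 dBFS.

```python
import math
from typing import Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level of normalized audio samples in dBFS (-inf for silence or no data)."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


# Hypothetical cut-off: louder backgrounds are treated as unsafe for speech recognition.
NOISY_DBFS = -30.0


def voice_reliable(background: Sequence[float]) -> bool:
    """True if the measured background level is below the assumed noise cut-off."""
    return rms_dbfs(background) < NOISY_DBFS


# One second of a 440 Hz tone at 16 kHz, standing in for quiet and loud backgrounds.
quiet = [0.01 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(16000)]
loud = [0.5 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(16000)]
print(round(rms_dbfs(quiet), 1), voice_reliable(quiet))  # -43.0 True
print(round(rms_dbfs(loud), 1), voice_reliable(loud))    # -9.0 False
```

In practice the level would be measured on segments that contain no speech, so that the user's own voice does not count as noise.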

Integration with Artificial Intelligence

Voice command control in robotics relies on AI-powered speech recognition and natural language processing, which let robots understand and execute complex spoken instructions hands-free. Gesture control uses computer vision and machine learning models to interpret human movements, providing a non-verbal channel for robot operation that can adapt to individual users over time. Combining AI-driven voice and gesture recognition in a multimodal interface can improve accuracy and responsiveness in dynamic environments, because each modality can compensate for the other's weaknesses.
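One common way to combine the two recognizers is late (decision-level) fusion: each modality outputs a probability per command, and the scores are merged with a weighted sum. The sketch below assumes both recognizers expose such per-command probabilities. The `fuse` function, the equal default weights, and the `min_score` cut-off are illustrative assumptions, not a specific product's method.

```python
from typing import Dict, Optional

Scores = Dict[str, float]  # command -> probability from one recognizer


def fuse(voice: Scores, gesture: Scores, w_voice: float = 0.5,
         min_score: float = 0.5) -> Optional[str]:
    """Weighted late fusion of per-command probabilities from two recognizers.

    Commands missing from one recognizer count as probability 0 for it.
    Returns the best command, or None if its fused score is below `min_score`.
    """
    w_gesture = 1.0 - w_voice
    commands = set(voice) | set(gesture)
    fused = {c: w_voice * voice.get(c, 0.0) + w_gesture * gesture.get(c, 0.0)
             for c in commands}
    if not fused:
        return None
    best = max(fused, key=fused.get)
    return best if fused[best] >= min_score else None


# Speech is ambiguous ("pick" vs "place"), but a pointing gesture clearly indicates "place".
voice = {"pick": 0.55, "place": 0.45}
gesture = {"pick": 0.10, "place": 0.90}
print(fuse(voice, gesture))  # place
```

Here the fused score for "place" is 0.675 against 0.325 for "pick". The second modality resolves an ambiguity that voice alone would have settled the wrong way.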

Accessibility Considerations in Robot Control

Voice command control enhances accessibility for users with limited motor skills or physical disabilities by enabling hands-free operation. Gesture control systems can be challenging for individuals with restricted mobility or impaired coordination, potentially limiting usability. Incorporating multimodal interfaces that combine voice and gesture controls improves overall accessibility and user experience in robotic applications.

Application Scenarios: When to Use Voice or Gesture

Voice command control excels where hands-free operation and clear verbal communication are essential, such as smart homes, healthcare settings, and industrial automation where noise levels still allow reliable speech recognition. Gesture control is ideal for scenarios that require silent interaction or where users wear gloves, such as surgical rooms, virtual reality gaming, and hazardous workplaces. The choice between voice and gesture depends on factors such as ambient noise, user mobility, and the precision the task requires.
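The selection factors above can be expressed as a simple decision rule. This is a minimal sketch under stated assumptions. The `Context` fields are hypothetical inputs a robot might obtain from sensors or configuration. The 70 dB SPL noise limit is an illustrative value, not a standard. The "fallback" branch stands for whatever non-voice, non-gesture control a deployment provides.

```python
from dataclasses import dataclass


@dataclass
class Context:
    noise_db: float          # ambient sound level in dB SPL (hypothetical sensor reading)
    silence_required: bool   # e.g. an operating room where speaking aloud is undesirable
    hands_busy: bool         # user's hands are occupied or limb mobility is limited
    camera_visibility: bool  # adequate lighting and line of sight to the user


# Illustrative threshold: above this level, speech recognition is assumed unreliable.
MAX_VOICE_NOISE_DB = 70.0


def choose_modality(ctx: Context) -> str:
    """Pick an input modality from environmental and user constraints."""
    voice_ok = ctx.noise_db <= MAX_VOICE_NOISE_DB and not ctx.silence_required
    gesture_ok = ctx.camera_visibility and not ctx.hands_busy
    if voice_ok and gesture_ok:
        return "multimodal"
    if voice_ok:
        return "voice"
    if gesture_ok:
        return "gesture"
    return "fallback"  # neither modality suits the situation


print(choose_modality(Context(85.0, False, False, True)))  # gesture
print(choose_modality(Context(45.0, False, True, True)))   # voice
```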

Future Trends in Human-Robot Interaction

Voice command control in robotics is evolving with advancements in natural language processing, allowing robots to understand complex instructions and adapt to diverse linguistic nuances. Gesture control technology is becoming more precise through enhanced computer vision and machine learning algorithms, enabling robots to interpret non-verbal cues in dynamic environments. Future trends in human-robot interaction emphasize multimodal interfaces that combine voice, gesture, and even facial expressions to create more intuitive and seamless communication between humans and robots.

Voice command control vs Gesture control Infographic

techiny.com
