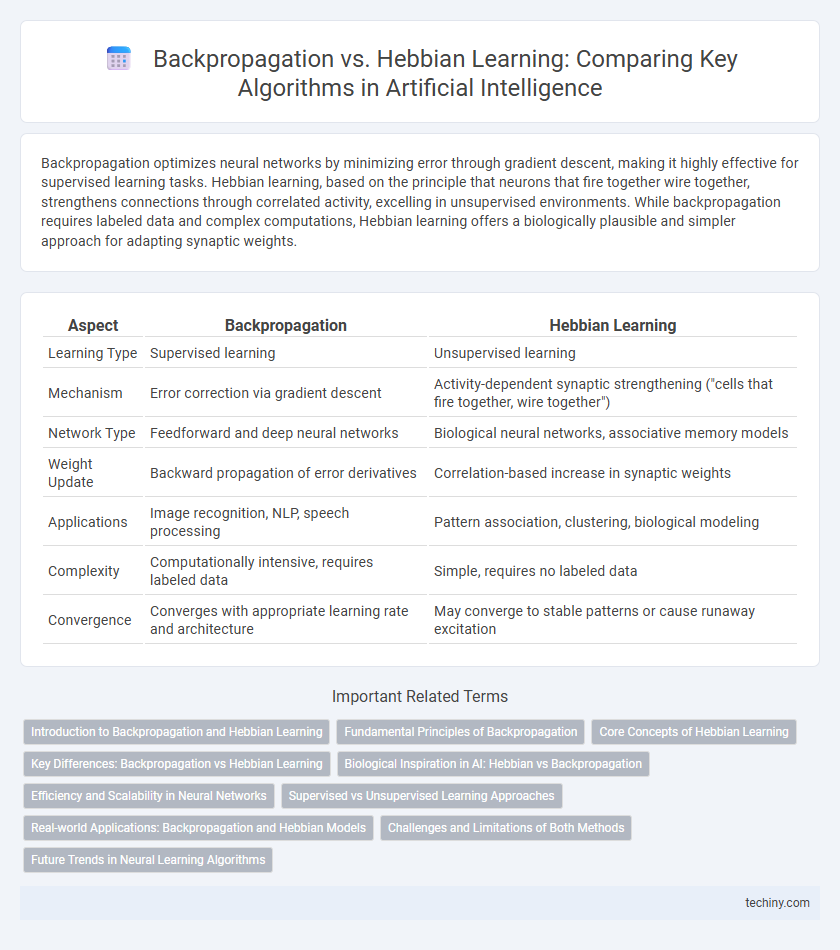

Backpropagation trains neural networks by minimizing error through gradient descent, which makes it highly effective for supervised learning tasks. Hebbian learning, based on the principle that neurons that fire together wire together, strengthens connections through correlated activity and is suited to unsupervised settings. While backpropagation requires labeled data and a backward pass of derivative computations through every layer, Hebbian learning offers a simpler, more biologically plausible rule for adapting synaptic weights.

Table of Comparison

| Aspect | Backpropagation | Hebbian Learning |
|---|---|---|
| Learning Type | Supervised learning | Unsupervised learning |
| Mechanism | Error correction via gradient descent | Activity-dependent synaptic strengthening ("cells that fire together, wire together") |
| Network Type | Feedforward and deep neural networks | Biological neural networks, associative memory models |
| Weight Update | Backward propagation of error derivatives | Correlation-based increase in synaptic weights |
| Applications | Image recognition, NLP, speech processing | Pattern association, clustering, biological modeling |
| Complexity | Computationally intensive, requires labeled data | Simple, requires no labeled data |
| Convergence | Converges with appropriate learning rate and architecture | May converge to stable patterns or cause runaway excitation |

Introduction to Backpropagation and Hebbian Learning

Backpropagation is a supervised learning algorithm used in artificial neural networks to minimize error by adjusting weights through gradient descent based on the difference between predicted and actual outputs. Hebbian learning, an unsupervised method inspired by biological neural processes, strengthens the connection between neurons that activate simultaneously, encapsulated in the phrase "cells that fire together, wire together." Both methods play crucial roles in training neural networks but differ fundamentally in feedback dependence and learning mechanisms.

Fundamental Principles of Backpropagation

Backpropagation operates on the principle of error minimization: it propagates the gradient of the loss function backward through the network's layers, and gradient descent then uses that gradient to adjust each weight. It applies the chain rule to compute the partial derivative of the error with respect to every weight, reusing intermediate results from layer to layer so that all gradients are obtained in a single backward pass. This supervised learning technique contrasts with Hebbian learning by relying on labeled data and explicit error signals to iteratively improve model performance.
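The chain-rule computation described above can be sketched for a tiny two-layer network. This is a minimal illustration, not a reference implementation: the network shape, the tanh activation, the squared-error loss, the learning rate, and all names (`forward`, `loss`, `backprop`) are assumptions chosen for brevity.

```python
import numpy as np

def forward(x, W1, W2):
    """Two-layer network (assumed for illustration): tanh hidden layer, linear output."""
    h = np.tanh(W1 @ x)
    y_hat = W2 @ h
    return h, y_hat

def loss(x, y, W1, W2):
    """Squared-error loss for a single labeled example."""
    _, y_hat = forward(x, W1, W2)
    return 0.5 * np.sum((y_hat - y) ** 2)

def backprop(x, y, W1, W2):
    """Gradients of the loss with respect to W1 and W2 via the chain rule."""
    h, y_hat = forward(x, W1, W2)
    delta_out = y_hat - y                           # dL/dy_hat at the output
    grad_W2 = np.outer(delta_out, h)                # dL/dW2
    delta_hid = (W2.T @ delta_out) * (1 - h ** 2)   # error sent back through tanh
    grad_W1 = np.outer(delta_hid, x)                # dL/dW1
    return grad_W1, grad_W2

# Hypothetical data: one input vector with its label.
rng = np.random.default_rng(0)
x = rng.normal(size=3)
y = rng.normal(size=2)
W1 = rng.normal(size=(4, 3)) * 0.5
W2 = rng.normal(size=(2, 4)) * 0.5

initial_loss = loss(x, y, W1, W2)
lr = 0.05  # learning rate (assumed value)
for _ in range(500):
    g1, g2 = backprop(x, y, W1, W2)
    W1 -= lr * g1   # gradient descent step
    W2 -= lr * g2
final_loss = loss(x, y, W1, W2)
```

Note that `delta_hid` is computed from `delta_out`: the hidden layer's update depends on an error signal that originates at the output, which is what "backward propagation" refers to.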

Core Concepts of Hebbian Learning

Hebbian learning is a biologically inspired learning rule emphasizing synaptic plasticity, where the strength of connections between neurons increases when they activate simultaneously. This principle, often summarized as "cells that fire together wire together," supports unsupervised learning by reinforcing correlated neural activities without explicit error signals. In contrast to backpropagation's reliance on gradient descent and error correction, Hebbian learning leverages local information and temporal correlation to adapt synaptic weights, enabling efficient pattern recognition in neural networks.
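As a rough sketch of the rule described above, consider a single linear neuron whose weight change is proportional to the product of input and output activity. The linear neuron, the learning rate, and the function name `hebbian_update` are assumptions made for illustration.

```python
import numpy as np

def hebbian_update(w, x, lr=0.1):
    """One plain Hebbian step for a single linear neuron: dw = lr * y * x."""
    y = w @ x               # postsynaptic activity
    return w + lr * y * x   # uses only local pre/post activity, no error signal

w = np.array([0.5, 0.5, 0.5])
x = np.array([1.0, 1.0, 0.0])   # inputs 0 and 1 are co-active, input 2 is silent
for _ in range(5):
    w = hebbian_update(w, x)
```

After these steps the weights on the two co-active inputs have grown, while the weight on the silent input is unchanged. No label or target output appears anywhere in the update.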

Key Differences: Backpropagation vs Hebbian Learning

Backpropagation uses gradient descent to minimize error by adjusting weights based on the difference between predicted and actual outputs, making it highly effective for supervised learning tasks. Hebbian learning strengthens connections between neurons that activate simultaneously, following the principle "cells that fire together wire together," and is primarily unsupervised and biologically inspired. The key differences are twofold. First, backpropagation relies on a global error signal, whereas Hebbian learning updates each synapse from local activity alone. Second, backpropagation suits complex, labeled datasets, whereas Hebbian learning is geared toward correlation-driven adaptation.
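The local-versus-global distinction can be made concrete by writing the two updates side by side. The notation is an assumption, since the text defines no symbols: $\eta$ is the learning rate, $x_j$ the presynaptic activity, $y_i$ the postsynaptic activity, $L$ the loss, $z^{(l)}$ the pre-activations of layer $l$, and $f$ the activation function.

$$
\Delta w_{ij}^{\text{Hebb}} = \eta \, y_i \, x_j
$$

$$
\Delta w_{ij}^{\text{BP}} = -\eta \, \frac{\partial L}{\partial w_{ij}} = -\eta \, \delta_i \, x_j,
\qquad
\delta^{(l)} = \left( \left(W^{(l+1)}\right)^{\top} \delta^{(l+1)} \right) \odot f'\!\left(z^{(l)}\right)
$$

The Hebbian update needs only the activities on either side of the synapse. The backpropagation update has the same product form, but $\delta_i$ is defined recursively from the layers above, so it cannot be computed without information from the network's output.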

Biological Inspiration in AI: Hebbian vs Backpropagation

Hebbian learning models synaptic plasticity inspired by the biological principle "cells that fire together, wire together," emphasizing local, unsupervised adjustments based on neuron co-activation patterns. In contrast, backpropagation simulates error-driven learning through global feedback and weight updates, relying on gradient descent, which lacks direct biological plausibility but excels in training deep networks. The biological inspiration in AI highlights Hebbian learning's alignment with natural neural processes, while backpropagation remains a powerful, though less biologically grounded, computational algorithm.

Efficiency and Scalability in Neural Networks

Backpropagation scales well to large neural networks because one backward pass yields the exact error gradient for every weight, so all layers can be updated toward the same global objective. Hebbian learning, grounded in unsupervised correlation-based weight updates, tends to scale less well to deep architectures: its local rules carry no global error signal with which to coordinate layers. Consequently, backpropagation remains the preferred method for deep neural networks requiring efficient processing of extensive datasets and complex tasks.

Supervised vs Unsupervised Learning Approaches

Backpropagation is a supervised learning algorithm widely used in training artificial neural networks, optimizing weights by minimizing error through gradient descent. Hebbian learning operates as an unsupervised method, strengthening connections between co-activated neurons without explicit error signals. The key distinction lies in supervision: backpropagation requires labeled data for error correction, whereas Hebbian learning adapts based on intrinsic activity patterns, enabling feature extraction and self-organization.

Real-world Applications: Backpropagation and Hebbian Models

Backpropagation powers advanced neural networks in image recognition, natural language processing, and autonomous systems by minimizing error through gradient descent optimization. Hebbian learning models are used in unsupervised tasks such as pattern recognition, associative memory, and neural plasticity simulations, reflecting biological learning processes. Approaches that combine backpropagation with Hebbian principles are also being explored for adaptive AI systems in areas such as robotics and brain-computer interfaces.
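Associative memory is perhaps the easiest of these Hebbian applications to show in a few lines. The sketch below assumes a Hopfield-style network with Hebbian outer-product storage of ±1 patterns; the function names `store` and `recall` and the two example patterns are hypothetical.

```python
import numpy as np

def store(patterns):
    """Hebbian outer-product storage of +/-1 patterns, with no self-connections."""
    n = patterns.shape[1]
    W = patterns.T @ patterns / n   # sum of outer products, scaled by pattern length
    np.fill_diagonal(W, 0.0)
    return W

def recall(W, state, steps=5):
    """Synchronous sign updates, stopping early once the state is stable."""
    for _ in range(steps):
        new_state = np.where(W @ state >= 0, 1, -1)
        if np.array_equal(new_state, state):
            break
        state = new_state
    return state

# Two hypothetical patterns, chosen to be orthogonal.
patterns = np.array([
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
])
W = store(patterns)

noisy = patterns[0].copy()
noisy[0] = -noisy[0]             # corrupt one element
restored = recall(W, noisy)      # recovers patterns[0] for this example
```

The weights are set in one shot from pattern correlations, with no error signal or iterative training, yet the network completes a corrupted input back to the stored pattern.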

Challenges and Limitations of Both Methods

Backpropagation faces challenges such as high computational cost, difficulty in training deep networks due to vanishing or exploding gradients, and lack of biological plausibility. Hebbian learning, while more biologically inspired, struggles with stability issues (unconstrained weights can grow without bound), lack of error correction mechanisms, and limited scalability for complex tasks. Both methods also have difficulty adapting to dynamic environments, which motivates hybrid approaches in artificial intelligence models.
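The stability issue can be observed directly by tracking the weight norm under repeated plain Hebbian updates. This is a minimal sketch: the random input data, the learning rate, and the single linear neuron are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))   # hypothetical zero-mean input samples

w = np.full(3, 0.1)
lr = 0.05                       # learning rate (assumed value)
norms = [np.linalg.norm(w)]
for x in X:
    y = w @ x                   # postsynaptic activity
    w = w + lr * y * x          # plain Hebbian step, no normalization or decay
    norms.append(np.linalg.norm(w))
```

Each step adds `2 * lr * y**2 + lr**2 * y**2 * (x @ x)` to the squared norm of `w`, a quantity that is never negative, so the weights can only grow. Nothing in the rule itself pulls them back, which is why practical Hebbian models usually add some form of normalization or decay.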

Future Trends in Neural Learning Algorithms

Future trends in neural learning algorithms emphasize the integration of backpropagation's gradient-based optimization with Hebbian learning's local synaptic updates to create more efficient and biologically plausible models. Advances in neuromorphic computing and unsupervised learning drive research toward hybrid algorithms that leverage Hebbian plasticity for real-time adaptation while maintaining backpropagation's precision in deep architectures. Emerging frameworks are expected to enhance energy efficiency and scalability, pushing AI systems closer to human-like learning and cognition.

Backpropagation vs Hebbian Learning Infographic

techiny.com
