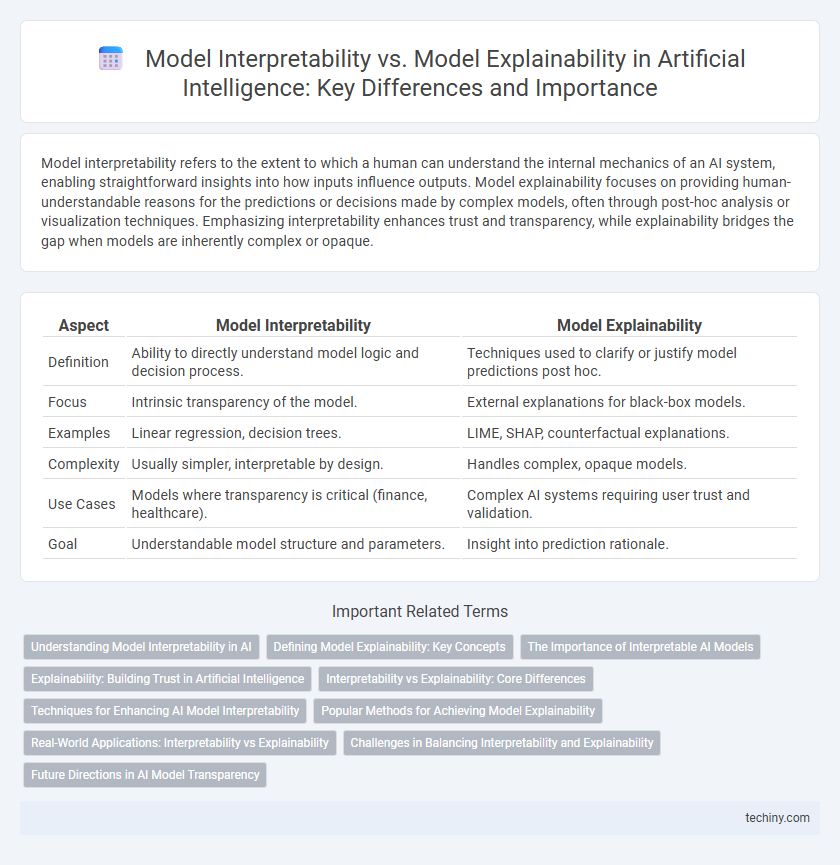

Model interpretability refers to the extent to which a human can understand the internal mechanics of an AI system, enabling straightforward insights into how inputs influence outputs. Model explainability focuses on providing human-understandable reasons for the predictions or decisions made by complex models, often through post-hoc analysis or visualization techniques. Emphasizing interpretability enhances trust and transparency, while explainability bridges the gap when models are inherently complex or opaque.

Table of Comparison

| Aspect | Model Interpretability | Model Explainability |

|---|---|---|

| Definition | Ability to directly understand model logic and decision process. | Techniques used to clarify or justify model predictions post hoc. |

| Focus | Intrinsic transparency of the model. | External explanations for black-box models. |

| Examples | Linear regression, decision trees. | LIME, SHAP, counterfactual explanations. |

| Complexity | Usually simpler, interpretable by design. | Handles complex, opaque models. |

| Use Cases | Models where transparency is critical (finance, healthcare). | Complex AI systems requiring user trust and validation. |

| Goal | Understandable model structure and parameters. | Insight into prediction rationale. |

Understanding Model Interpretability in AI

Model interpretability in AI refers to the extent to which a human can understand the internal mechanics and decision-making processes of a machine learning model. It enables users to trace how input features influence predictions, enhancing transparency and trust in AI systems. Unlike model explainability, which focuses on post-hoc explanations of model outputs, interpretability emphasizes the innate clarity and simplicity of the model's structure and behavior.

Defining Model Explainability: Key Concepts

Model explainability refers to the degree to which the internal mechanics and decision-making processes of an artificial intelligence system can be clearly described and understood. It involves techniques such as feature importance, surrogate models, and visualizations that reveal how input variables influence predictions. Key concepts include transparency, which relates to open model structure, and post-hoc explanations that clarify complex, black-box algorithms after training.

The Importance of Interpretable AI Models

Interpretable AI models provide clear insights into how decisions are made, enhancing transparency and trust in artificial intelligence systems. Model interpretability is crucial for identifying biases and ensuring fairness, which is essential in sectors like healthcare, finance, and law. Explainability techniques complement interpretability by offering post-hoc justifications, but inherently interpretable models allow stakeholders to fully understand AI behavior from the outset.

Explainability: Building Trust in Artificial Intelligence

Model explainability enhances trust in artificial intelligence by providing clear, understandable insights into how AI systems make decisions. It goes beyond technical transparency, enabling stakeholders to comprehend the reasoning and factors influencing model outputs. This clarity fosters accountability and facilitates ethical AI deployment across diverse applications.

Interpretability vs Explainability: Core Differences

Model interpretability refers to the extent to which a human can understand the internal mechanics of a machine learning model, focusing on transparency and simplicity. Model explainability involves providing understandable explanations for model predictions, often post-hoc, emphasizing the reasons behind specific outcomes. Interpretability centers on the model's inherent clarity, while explainability deals with external tools and methods used to clarify model behavior.

Techniques for Enhancing AI Model Interpretability

Techniques for enhancing AI model interpretability include feature importance analysis, which ranks input features based on their influence on model predictions, and partial dependence plots that visualize the relationship between specific features and outputs. Local interpretable model-agnostic explanations (LIME) and SHapley Additive exPlanations (SHAP) provide instance-level insights by approximating complex models with interpretable local models or assigning contribution scores to features. These techniques improve understanding of black-box models, aiding in model validation, debugging, and building user trust in AI systems.

Popular Methods for Achieving Model Explainability

Popular methods for achieving model explainability in artificial intelligence include SHAP (SHapley Additive exPlanations), LIME (Local Interpretable Model-agnostic Explanations), and counterfactual explanations. SHAP provides consistent and locally accurate feature attributions, aiding in understanding individual predictions. LIME approximates complex model behavior with simpler interpretable models around specific instances, while counterfactual explanations highlight minimal changes needed to alter predictions, enhancing transparency.

Real-World Applications: Interpretability vs Explainability

Model interpretability in artificial intelligence enables practitioners to understand how input features directly influence model predictions, facilitating trust and regulatory compliance in high-stakes domains like healthcare and finance. Explainability, often involving post-hoc analysis and visualizations, helps stakeholders grasp complex model reasoning processes without requiring full transparency, making it crucial for user acceptance and debugging in real-world applications. Balancing interpretability and explainability enhances AI reliability by providing clear insights into model behavior while maintaining performance in environments such as autonomous systems and fraud detection.

Challenges in Balancing Interpretability and Explainability

Balancing model interpretability and explainability presents challenges related to trade-offs between simplicity and complexity, where highly interpretable models often sacrifice predictive accuracy while more complex models deliver superior performance but hinder transparent understanding. The difficulty lies in designing techniques that provide meaningful explanations for black-box models without overwhelming users or compromising confidentiality and intellectual property. Achieving an optimal balance requires integrating advanced methods such as local interpretable model-agnostic explanations (LIME) and SHAP values to enhance comprehension while maintaining robustness and trustworthiness in AI systems.

Future Directions in AI Model Transparency

Future directions in AI model transparency emphasize enhancing both interpretability and explainability through advanced techniques such as counterfactual reasoning and causal inference. Integrating robust visualization tools and developing standardized frameworks are critical for improving user trust and regulatory compliance. Research efforts are increasingly focused on creating models that provide transparent decision boundaries while maintaining high performance across diverse applications.

Model Interpretability vs Model Explainability Infographic

techiny.com

techiny.com