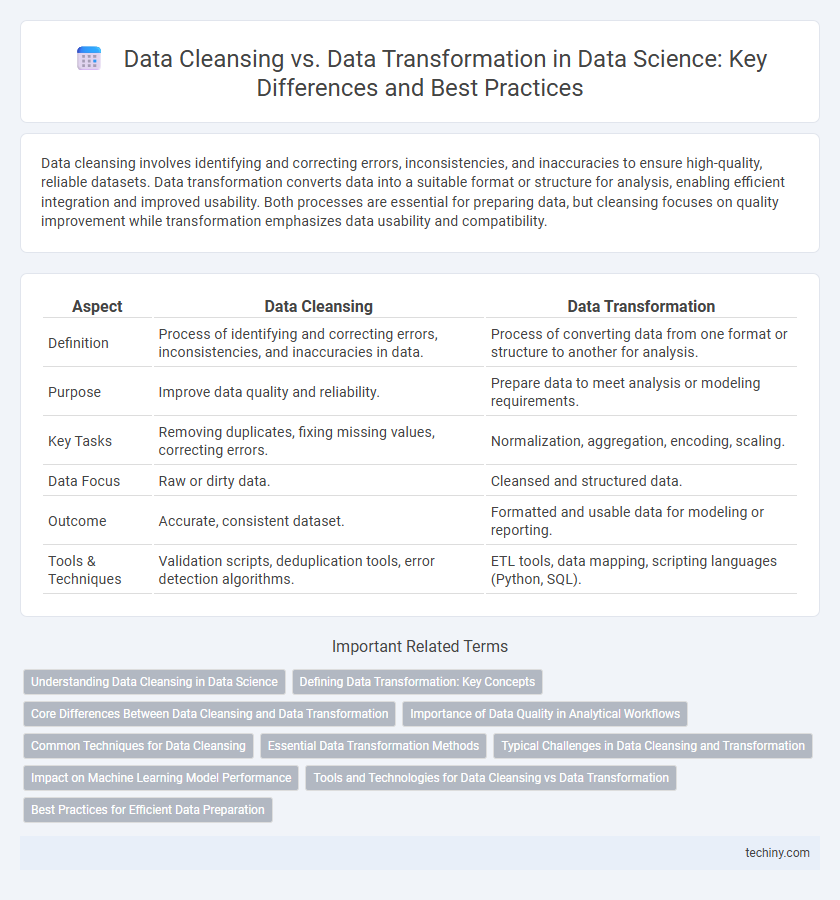

Data cleansing involves identifying and correcting errors, inconsistencies, and inaccuracies to ensure high-quality, reliable datasets. Data transformation converts data into a suitable format or structure for analysis, enabling efficient integration and improved usability. Both processes are essential for preparing data, but cleansing focuses on quality improvement while transformation emphasizes data usability and compatibility.

Table of Comparison

| Aspect | Data Cleansing | Data Transformation |

|---|---|---|

| Definition | Process of identifying and correcting errors, inconsistencies, and inaccuracies in data. | Process of converting data from one format or structure to another for analysis. |

| Purpose | Improve data quality and reliability. | Prepare data to meet analysis or modeling requirements. |

| Key Tasks | Removing duplicates, fixing missing values, correcting errors. | Normalization, aggregation, encoding, scaling. |

| Data Focus | Raw or dirty data. | Cleansed and structured data. |

| Outcome | Accurate, consistent dataset. | Formatted and usable data for modeling or reporting. |

| Tools & Techniques | Validation scripts, deduplication tools, error detection algorithms. | ETL tools, data mapping, scripting languages (Python, SQL). |

Understanding Data Cleansing in Data Science

Data cleansing in data science involves identifying and correcting errors, inconsistencies, and inaccuracies within datasets to ensure data quality and reliability. This process includes handling missing values, removing duplicates, and standardizing data formats to prepare raw data for accurate analysis. Effective data cleansing enhances model performance by providing a strong foundation for subsequent data transformation and analytical tasks.

Defining Data Transformation: Key Concepts

Data transformation in data science refers to the process of converting raw data into a structured format suitable for analysis by applying techniques such as normalization, aggregation, and encoding. It involves changing the data's format, structure, or values to ensure compatibility and improved data quality for predictive modeling and reporting. Key concepts include data mapping, scaling, and feature engineering, which enable more accurate insights and machine learning model performance.

Core Differences Between Data Cleansing and Data Transformation

Data cleansing primarily focuses on identifying and correcting errors, inconsistencies, and inaccuracies in raw datasets to ensure data quality and reliability. Data transformation involves converting data from its original format or structure into a suitable format for analysis, integration, or storage, often including normalization, aggregation, and encoding processes. The core difference lies in data cleansing improving data accuracy and validity, while data transformation changes the data's format and structure for better compatibility with analytical models or systems.

Importance of Data Quality in Analytical Workflows

Data quality is crucial in analytical workflows as it directly impacts the accuracy and reliability of insights. Data cleansing removes errors, inconsistencies, and duplicates, ensuring the dataset is accurate and complete. Data transformation restructures and formats clean data to fit analytical models, enhancing the effectiveness of data-driven decisions.

Common Techniques for Data Cleansing

Common techniques for data cleansing include handling missing values through imputation or removal, detecting and correcting errors with validation rules, and standardizing formats such as dates and categorical variables. Duplicate detection and removal ensure data integrity, while outlier detection methods identify anomalous data points for further review. These processes enhance data quality, which is essential for accurate data transformation and subsequent analysis.

Essential Data Transformation Methods

Data transformation involves crucial methods such as normalization, aggregation, encoding, and discretization, which prepare data for advanced analysis and model training. Normalization scales numerical data to a consistent range, improving algorithm performance, while encoding converts categorical variables into numerical forms for machine learning compatibility. Aggregation summarizes data points to reveal trends, and discretization converts continuous data into categorical bins, enhancing pattern detection in data science workflows.

Typical Challenges in Data Cleansing and Transformation

Typical challenges in data cleansing include handling missing values, correcting inconsistencies, and removing duplicate records, which are essential for ensuring data quality and reliability. Data transformation often faces difficulties in standardizing data formats, normalizing scales, and integrating disparate data sources to prepare datasets for analysis. Both processes demand meticulous attention to detail and robust tools to maintain data integrity throughout the data science workflow.

Impact on Machine Learning Model Performance

Data cleansing improves machine learning model performance by removing errors, inconsistencies, and outliers, resulting in higher data quality and more reliable predictions. Data transformation enhances model effectiveness by converting raw data into meaningful features through normalization, encoding, and scaling, enabling algorithms to learn patterns more efficiently. Both processes are critical, but data cleansing ensures the foundation's accuracy, while transformation optimizes input representation for better model generalization.

Tools and Technologies for Data Cleansing vs Data Transformation

Data cleansing utilizes tools like OpenRefine, Trifacta, and Talend Data Quality to identify and correct inconsistencies, missing values, and errors in datasets. Data transformation leverages technologies such as Apache Spark, Alteryx, and Informatica PowerCenter to convert raw data into structured formats suitable for analysis. Both processes often integrate within ETL pipelines, but cleansing emphasizes data accuracy while transformation focuses on data reformatting and enrichment.

Best Practices for Efficient Data Preparation

Data cleansing involves identifying and correcting errors, inconsistencies, and missing values to ensure data accuracy and reliability, while data transformation converts data into suitable formats or structures for analysis and modeling. Best practices for efficient data preparation emphasize automating repetitive cleansing tasks, applying consistent validation rules, and using scalable transformation techniques like normalization and encoding. Leveraging integrated tools that support both cleansing and transformation accelerates workflows and enhances overall data quality for better analytical outcomes.

Data Cleansing vs Data Transformation Infographic

techiny.com

techiny.com