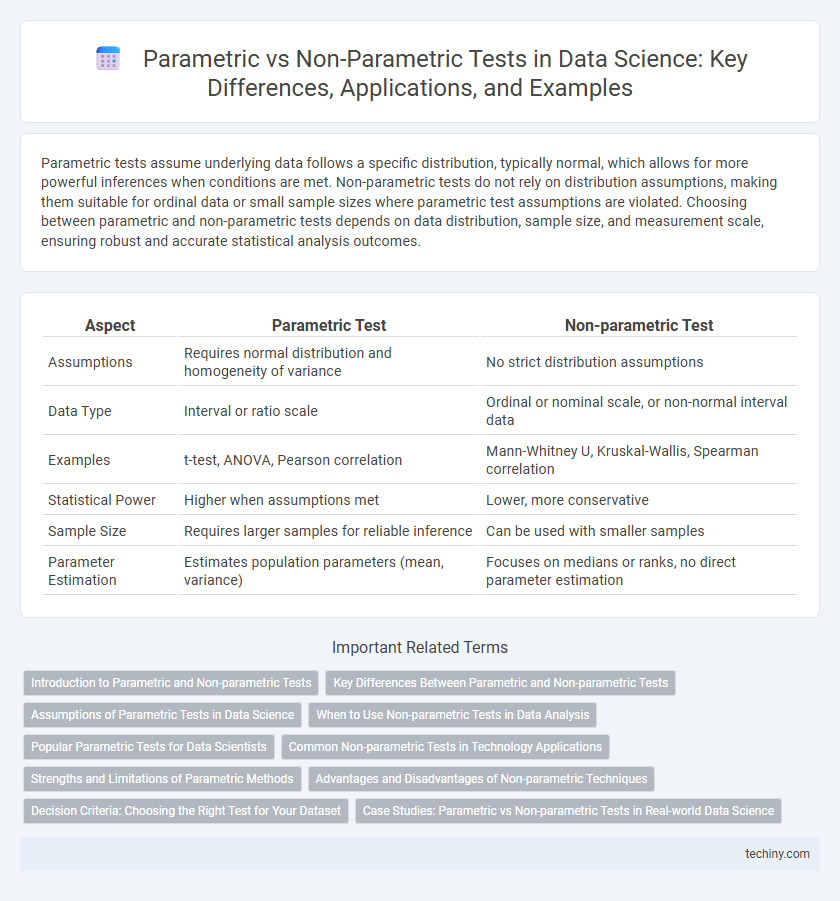

Parametric tests assume underlying data follows a specific distribution, typically normal, which allows for more powerful inferences when conditions are met. Non-parametric tests do not rely on distribution assumptions, making them suitable for ordinal data or small sample sizes where parametric test assumptions are violated. Choosing between parametric and non-parametric tests depends on data distribution, sample size, and measurement scale, ensuring robust and accurate statistical analysis outcomes.

Table of Comparison

| Aspect | Parametric Test | Non-parametric Test |

|---|---|---|

| Assumptions | Requires normal distribution and homogeneity of variance | No strict distribution assumptions |

| Data Type | Interval or ratio scale | Ordinal or nominal scale, or non-normal interval data |

| Examples | t-test, ANOVA, Pearson correlation | Mann-Whitney U, Kruskal-Wallis, Spearman correlation |

| Statistical Power | Higher when assumptions met | Lower, more conservative |

| Sample Size | Requires larger samples for reliable inference | Can be used with smaller samples |

| Parameter Estimation | Estimates population parameters (mean, variance) | Focuses on medians or ranks, no direct parameter estimation |

Introduction to Parametric and Non-parametric Tests

Parametric tests assume underlying data distributions, typically normality, and rely on parameters such as mean and variance for hypothesis testing, making them efficient with large samples and interval or ratio data. Non-parametric tests do not assume specific data distributions, making them suitable for ordinal data or small sample sizes, and they focus on data ranking rather than parameter estimation. Understanding when to apply parametric versus non-parametric tests is crucial for accurate data analysis and valid statistical inferences in data science.

Key Differences Between Parametric and Non-parametric Tests

Parametric tests assume underlying data distributions, such as normality, and rely on population parameters like mean and variance for hypothesis testing. Non-parametric tests do not require distribution assumptions, making them suitable for ordinal data or non-normal populations, often using medians or ranks instead of means. Key differences include sensitivity to outliers, data scale requirements, and the types of data each test accommodates in statistical analysis.

Assumptions of Parametric Tests in Data Science

Parametric tests in data science assume that the data follows a specific distribution, typically a normal distribution, and require homogeneity of variances across groups. These tests also assume independence of observations and that the data is measured on at least an interval scale. Violations of these assumptions can lead to inaccurate results, which is why checking assumptions is crucial before applying parametric methods.

When to Use Non-parametric Tests in Data Analysis

Non-parametric tests are preferred in data analysis when the sample size is small or when the data violates assumptions of normality and homoscedasticity required by parametric tests. These tests are ideal for ordinal data, nominal data, or when the measurement scale is not interval or ratio. They provide robust results without relying on underlying population distribution, making them suitable for skewed or heterogeneous datasets in fields like bioinformatics and social sciences.

Popular Parametric Tests for Data Scientists

Popular parametric tests for data scientists include the t-test, ANOVA, and linear regression, which assume underlying data distributions such as normality and homoscedasticity. These tests leverage parameters like mean and variance to make inferences about population characteristics, providing powerful tools for hypothesis testing under specific data assumptions. Parametric tests often yield more precise results when their assumptions are met, making them essential in many data science applications involving structured numerical data.

Common Non-parametric Tests in Technology Applications

Common non-parametric tests in technology applications include the Mann-Whitney U test, Kruskal-Wallis test, and the Wilcoxon signed-rank test, which are widely used for analyzing data that do not meet normality assumptions. These tests are essential for handling ordinal data or datasets with outliers, often encountered in machine learning model evaluation and user behavior analysis. Their robustness in detecting distributional differences without requiring parameterized data distributions makes them invaluable in data-driven technology projects.

Strengths and Limitations of Parametric Methods

Parametric tests offer high statistical power and efficiency when data meet assumptions of normality, homogeneity of variance, and interval scaling, enabling precise estimation of population parameters. Their main limitation lies in sensitivity to deviations from these assumptions, which can lead to misleading results or inflated Type I error rates. Despite these constraints, parametric methods are preferred for large sample sizes and well-defined distributions due to their strong inferential capabilities and computational simplicity.

Advantages and Disadvantages of Non-parametric Techniques

Non-parametric tests offer advantages such as fewer assumptions about data distribution, making them suitable for small sample sizes or ordinal data, enhancing flexibility in diverse data scenarios. Their robustness against outliers and non-normal distributions improves reliability when parametric test assumptions are violated. However, non-parametric techniques may have lower statistical power compared to parametric tests and can be less efficient with large samples or interval data.

Decision Criteria: Choosing the Right Test for Your Dataset

Decision criteria for selecting between parametric and non-parametric tests hinge on the underlying assumptions about the dataset, such as normality, variance homogeneity, and measurement scale. Parametric tests like the t-test or ANOVA are optimal for data meeting assumptions of normal distribution and interval or ratio scales, providing powerful and precise estimates. Non-parametric tests such as the Mann-Whitney U or Kruskal-Wallis are preferred when data violate these assumptions, offering flexibility for ordinal or non-normal data without requiring strict distributional prerequisites.

Case Studies: Parametric vs Non-parametric Tests in Real-world Data Science

Parametric tests assume underlying data distributions, making them ideal for normally distributed datasets with known parameters, such as t-tests used in A/B testing scenarios within e-commerce platforms. Non-parametric tests, like the Mann-Whitney U test or the Kruskal-Wallis test, provide robust alternatives when data violate parametric assumptions, commonly applied in healthcare studies with skewed or ordinal data. Case studies demonstrate that selecting the correct test type enhances statistical validity and predictive accuracy in machine learning model evaluations and experimental research.

Parametric Test vs Non-parametric Test Infographic

techiny.com

techiny.com