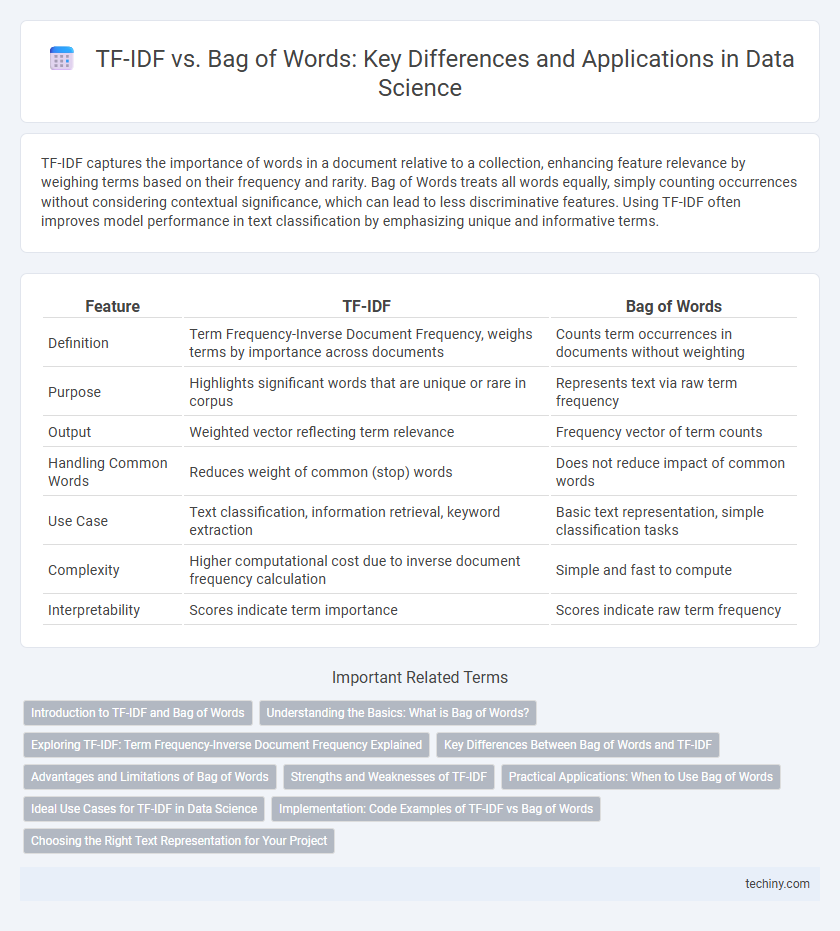

TF-IDF captures the importance of words in a document relative to a collection, enhancing feature relevance by weighing terms based on their frequency and rarity. Bag of Words treats all words equally, simply counting occurrences without considering contextual significance, which can lead to less discriminative features. Using TF-IDF often improves model performance in text classification by emphasizing unique and informative terms.

Table of Comparison

| Feature | TF-IDF | Bag of Words |

|---|---|---|

| Definition | Term Frequency-Inverse Document Frequency, weighs terms by importance across documents | Counts term occurrences in documents without weighting |

| Purpose | Highlights significant words that are unique or rare in corpus | Represents text via raw term frequency |

| Output | Weighted vector reflecting term relevance | Frequency vector of term counts |

| Handling Common Words | Reduces weight of common (stop) words | Does not reduce impact of common words |

| Use Case | Text classification, information retrieval, keyword extraction | Basic text representation, simple classification tasks |

| Complexity | Higher computational cost due to inverse document frequency calculation | Simple and fast to compute |

| Interpretability | Scores indicate term importance | Scores indicate raw term frequency |

Introduction to TF-IDF and Bag of Words

TF-IDF (Term Frequency-Inverse Document Frequency) and Bag of Words (BoW) are foundational techniques in text analysis and natural language processing. Bag of Words creates a sparse matrix by counting term occurrences in documents without considering word order or context, making it simple yet effective for basic text representation. TF-IDF improves upon BoW by weighing terms based on their frequency in a document relative to their frequency across all documents, highlighting important words and reducing the impact of common but less informative terms.

Understanding the Basics: What is Bag of Words?

Bag of Words (BoW) is a fundamental text representation technique in data science that transforms a document into a fixed-length vector by counting the frequency of each word, disregarding grammar and word order. This model simplifies text data but often results in high-dimensional, sparse matrices that lack semantic meaning. Understanding BoW is essential for comparing with more advanced methods like TF-IDF, which account for word importance across documents.

Exploring TF-IDF: Term Frequency-Inverse Document Frequency Explained

TF-IDF (Term Frequency-Inverse Document Frequency) quantifies the importance of a term within a document relative to a collection of documents by balancing term frequency against its rarity across the corpus, enhancing document representation beyond the simplistic Bag of Words approach. While Bag of Words counts term occurrences, TF-IDF assigns weights that reduce the impact of commonly used words and highlight distinctive terms, improving text classification and information retrieval accuracy. This weighting mechanism makes TF-IDF essential for identifying key phrases and improving feature extraction in natural language processing tasks.

Key Differences Between Bag of Words and TF-IDF

Bag of Words (BoW) represents text by counting word occurrences, ignoring word order and context, which often leads to high-dimensional sparse vectors. TF-IDF (Term Frequency-Inverse Document Frequency) enhances BoW by scaling word frequencies according to their rarity across documents, thus emphasizing more informative and distinctive terms. While BoW treats all words equally, TF-IDF assigns weights that reduce the impact of common words and highlight keywords critical for text classification and retrieval tasks.

Advantages and Limitations of Bag of Words

Bag of Words (BoW) offers simplicity and efficiency by converting text into fixed-length feature vectors based on word frequency, making it easy to implement and interpret. Its main limitations include ignoring word order and context, leading to a loss of semantic meaning and potential misrepresentation in natural language tasks. BoW struggles with large vocabularies causing high dimensionality and sparsity, which can impact model performance and scalability.

Strengths and Weaknesses of TF-IDF

TF-IDF excels at highlighting important words by weighing terms based on their frequency within a document relative to their frequency across the entire corpus, effectively reducing the impact of common words. Its strength lies in improving text classification and information retrieval by emphasizing distinctive terms, yet it struggles with capturing semantic meaning and contextual relationships between words. Unlike simple Bag of Words, TF-IDF is more robust in handling large, diverse datasets but may underperform in situations requiring deep language understanding.

Practical Applications: When to Use Bag of Words

Bag of Words excels in text classification tasks with smaller, domain-specific datasets where interpretability and computational simplicity are crucial. It effectively captures word frequency information for spam detection, sentiment analysis, and topic categorization without requiring complex context understanding. This approach is preferred when feature sparsity and model transparency are prioritized over nuanced semantic representation.

Ideal Use Cases for TF-IDF in Data Science

TF-IDF excels in text classification and sentiment analysis by highlighting the importance of words that uniquely characterize documents within a corpus, reducing the impact of common but less informative terms. It is ideal for information retrieval tasks, such as search engines and document ranking systems, where weighting terms by their frequency and inverse document frequency enhances relevance scoring. TF-IDF also performs well in feature extraction for machine learning models requiring meaningful representations of textual data over simple term occurrence counts.

Implementation: Code Examples of TF-IDF vs Bag of Words

TF-IDF implementation in Python commonly uses scikit-learn's TfidfVectorizer to convert text data into weighted feature vectors that reflect term importance, while Bag of Words frequently employs CountVectorizer to create simple frequency-based representations. Both techniques require preprocessing steps like tokenization and stopwords removal, but TF-IDF assigns lower weights to common words through inverse document frequency, enhancing model performance in text classification tasks. Code examples demonstrate TF-IDF as `TfidfVectorizer().fit_transform(corpus)` and Bag of Words as `CountVectorizer().fit_transform(corpus)`, highlighting differences in output sparsity and feature scaling.

Choosing the Right Text Representation for Your Project

TF-IDF (Term Frequency-Inverse Document Frequency) highlights important words by reducing the weight of common terms across documents, making it ideal for projects that require identifying distinctive keywords or themes. Bag of Words (BoW) represents text by counting word occurrences, offering simplicity and efficiency for tasks like basic text classification or sentiment analysis where word frequency matters more than context. Selecting the right representation depends on project goals: use TF-IDF for emphasizing relevant terms in large or diverse corpora, while BoW suits scenarios needing straightforward frequency-based features.

TF-IDF vs Bag of Words Infographic

techiny.com

techiny.com