Pipelining enhances hardware performance by breaking instruction execution into discrete stages, allowing multiple instructions to overlap in different phases, thus increasing throughput. Superscalar architecture further improves processing speed by enabling multiple instructions to be issued and executed simultaneously within a single clock cycle, leveraging parallel execution units. While pipelining boosts sequential instruction flow efficiency, superscalar designs exploit instruction-level parallelism for greater computational power.

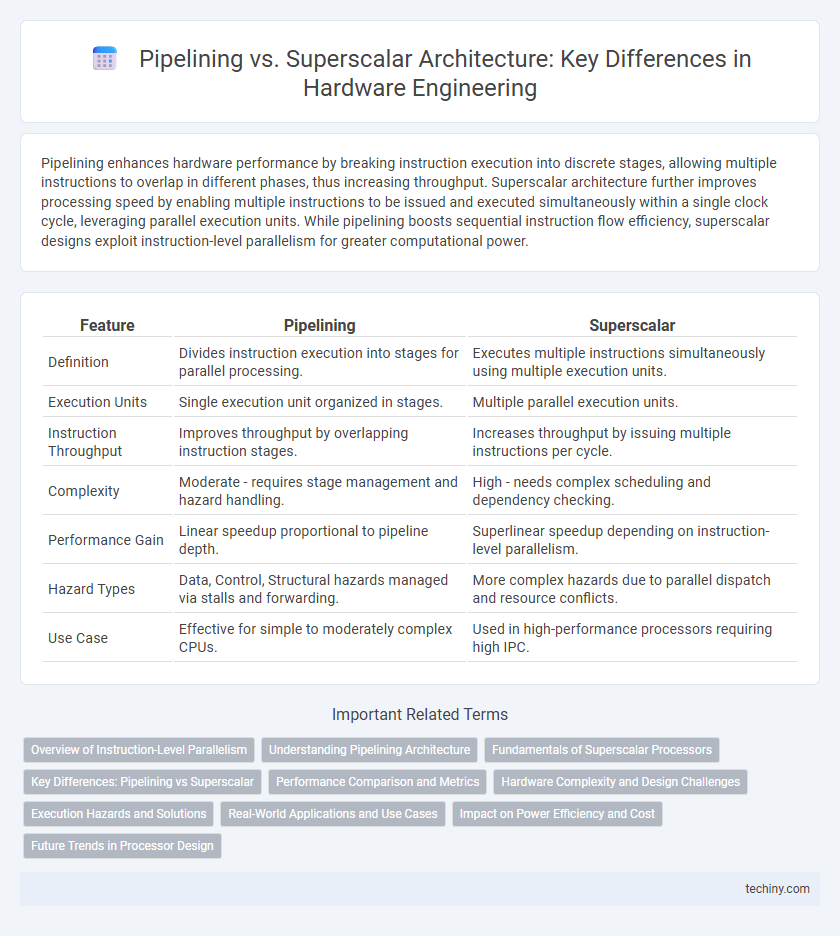

Table of Comparison

| Feature | Pipelining | Superscalar |

|---|---|---|

| Definition | Divides instruction execution into stages for parallel processing. | Executes multiple instructions simultaneously using multiple execution units. |

| Execution Units | Single execution unit organized in stages. | Multiple parallel execution units. |

| Instruction Throughput | Improves throughput by overlapping instruction stages. | Increases throughput by issuing multiple instructions per cycle. |

| Complexity | Moderate - requires stage management and hazard handling. | High - needs complex scheduling and dependency checking. |

| Performance Gain | Linear speedup proportional to pipeline depth. | Superlinear speedup depending on instruction-level parallelism. |

| Hazard Types | Data, Control, Structural hazards managed via stalls and forwarding. | More complex hazards due to parallel dispatch and resource conflicts. |

| Use Case | Effective for simple to moderately complex CPUs. | Used in high-performance processors requiring high IPC. |

Overview of Instruction-Level Parallelism

Pipelining enhances instruction-level parallelism by dividing instruction execution into discrete stages, allowing multiple instructions to overlap in different pipeline phases simultaneously. Superscalar architecture increases parallelism by issuing multiple instructions per clock cycle using multiple execution units, enabling concurrent processing beyond a single instruction stream. Both techniques aim to maximize CPU throughput by exploiting fine-grained instruction-level parallelism, but superscalar designs typically achieve higher execution rates through dynamic instruction dispatch and parallel functional units.

Understanding Pipelining Architecture

Pipelining architecture in hardware engineering enhances instruction throughput by dividing the execution process into distinct stages, allowing multiple instructions to overlap in execution. This method improves CPU efficiency by ensuring continuous data flow and minimizing idle time in processor components. Compared to superscalar designs, pipelining focuses on sequentially advancing instructions through stages rather than issuing multiple instructions simultaneously.

Fundamentals of Superscalar Processors

Superscalar processors enhance performance by executing multiple instructions per clock cycle through parallel pipelines, leveraging instruction-level parallelism beyond traditional pipelining. Core fundamentals include dynamic instruction dispatch, out-of-order execution, and complex hazard detection to maximize throughput and minimize stalls. This approach contrasts with simple pipelining, which processes instructions in sequential stages, limiting instruction throughput to one per cycle.

Key Differences: Pipelining vs Superscalar

Pipelining improves processor throughput by dividing instructions into sequential stages, allowing overlapping execution of multiple instructions in different stages, whereas superscalar architecture executes multiple instructions simultaneously by using multiple execution units. Pipelining increases instruction-level parallelism through temporal division, while superscalar enhances parallelism spatially by issuing and completing multiple instructions per clock cycle. Key differences include pipeline's reliance on linear instruction flow and stage dependencies versus superscalar's complex instruction dispatch, issue logic, and out-of-order execution capabilities.

Performance Comparison and Metrics

Pipelining enhances instruction throughput by dividing execution into discrete stages, enabling multiple instructions to overlap in execution, which reduces cycle time and increases instruction per cycle (IPC) efficiency. Superscalar architectures improve performance by issuing multiple instructions per clock cycle through multiple execution units, significantly boosting IPC beyond the limits of a single pipeline. Performance metrics commonly used for comparison include IPC, clock frequency, pipeline depth, and instructions per cycle scalability, with superscalar designs generally achieving higher IPC but facing complexity in instruction-level parallelism and hazard management.

Hardware Complexity and Design Challenges

Pipelining enhances processor throughput by dividing instruction execution into stages but introduces challenges such as hazard detection and pipeline stalling, increasing control complexity. Superscalar architectures require multiple parallel execution units and sophisticated instruction scheduling logic, significantly raising hardware complexity to manage instruction dependencies and resource conflicts. Both approaches demand intricate design efforts to optimize performance while balancing power consumption and silicon area constraints.

Execution Hazards and Solutions

Pipelining faces execution hazards such as data, control, and structural hazards, which stall the pipeline and degrade performance. Superscalar architectures mitigate these hazards by issuing multiple instructions per cycle, leveraging dynamic scheduling and out-of-order execution to resolve dependencies. Techniques like register renaming and branch prediction further enhance instruction throughput and reduce pipeline stalls in both paradigms.

Real-World Applications and Use Cases

Pipelining enhances processor throughput by breaking instruction execution into discrete stages, widely used in CPUs for tasks like multimedia processing and embedded systems where consistent instruction flow improves efficiency. Superscalar architectures enable parallel execution of multiple instructions per clock cycle, critical in high-performance computing and gaming consoles to maximize processing power. Real-world applications often combine both techniques to optimize speed and resource utilization in modern microprocessors.

Impact on Power Efficiency and Cost

Pipelining enhances power efficiency by allowing multiple instruction stages to operate in parallel, reducing idle processor cycles and improving throughput without significantly increasing hardware complexity or cost. Superscalar architectures execute multiple instructions simultaneously through multiple execution units, delivering higher performance but often at the expense of increased power consumption and greater silicon area, which raises manufacturing costs. Balancing power efficiency and cost, pipelining remains a more scalable solution for low-power, cost-sensitive hardware designs, while superscalar designs suit high-performance applications with less stringent power and cost constraints.

Future Trends in Processor Design

Future trends in processor design emphasize the integration of advanced pipelining techniques with superscalar architectures to maximize instruction-level parallelism and throughput. Emerging technologies leverage dynamic scheduling, deeper pipelines, and wider superscalar execution units to enhance performance while managing power efficiency. Innovations such as machine learning-driven branch prediction and speculative execution further refine pipeline utilization and superscalar processing capabilities.

Pipelining vs Superscalar Infographic

techiny.com

techiny.com