Active perception in robotics means the robot deliberately moves its sensors or changes its viewpoint to gather task-relevant data, which improves situational awareness and decision-making. Passive perception relies on fixed sensors that record whatever falls within their field of view, which limits adaptability but simplifies control and processing. Active perception strategies let robots handle more complex tasks in dynamic environments because the robot can choose what to observe next instead of waiting for useful data to arrive.

Table of Comparison

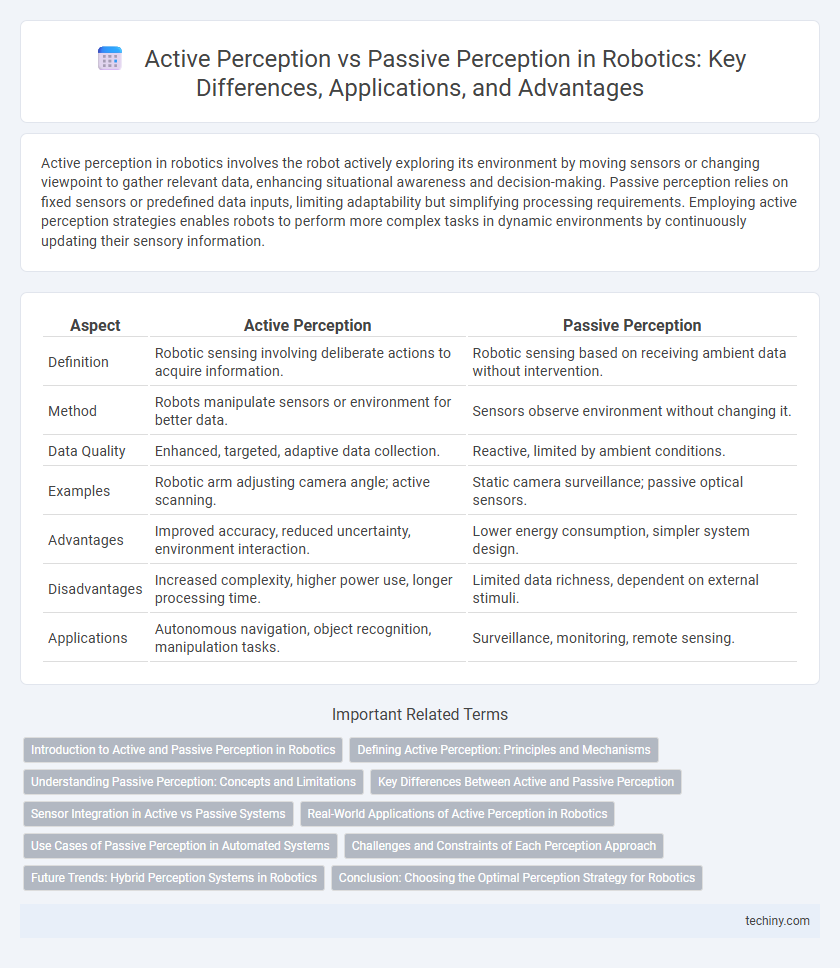

| Aspect | Active Perception | Passive Perception |
|---|---|---|
| Definition | Robotic sensing involving deliberate actions to acquire information. | Robotic sensing based on receiving ambient data without intervention. |
| Method | Robots manipulate sensors or environment for better data. | Sensors observe environment without changing it. |
| Data Quality | Enhanced, targeted, adaptive data collection. | Reactive, limited by ambient conditions. |
| Examples | Robotic arm adjusting camera angle; active scanning. | Static camera surveillance; passive optical sensors. |
| Advantages | Improved accuracy, reduced uncertainty, environment interaction. | Lower energy consumption, simpler system design. |
| Disadvantages | Increased complexity, higher power use, longer processing time. | Limited data richness, dependent on external stimuli. |
| Applications | Autonomous navigation, object recognition, manipulation tasks. | Surveillance, monitoring, remote sensing. |

Introduction to Active and Passive Perception in Robotics

In active perception, the robot controls its own sensors and movements to gather the information a task requires. In passive perception, sensor positions and settings are fixed in advance, so data is captured without influencing the scene or the viewpoint. The distinction matters when designing for robot autonomy, situational awareness, and task performance in dynamic settings, because it determines whether the robot can resolve an ambiguity by looking again from somewhere else.

Defining Active Perception: Principles and Mechanisms

Active perception in robotics involves the deliberate control of sensors and movement to acquire relevant environmental information, enhancing situational awareness and decision-making. This approach integrates sensorimotor coordination, enabling robots to adaptively focus attention, manipulate viewpoints, and interact with objects to optimize data quality. Core mechanisms include sensor positioning strategies, feedback loops for real-time adjustment, and predictive models that guide exploratory actions to improve perception accuracy.
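One way to make the "predictive models that guide exploratory actions" idea concrete is next-best-view selection: the robot predicts, for each candidate viewpoint, how uncertain it expects to be after looking, and moves to the viewpoint with the lowest expected uncertainty. The sketch below is a minimal illustration and not a reference implementation. It assumes a discrete set of object hypotheses and a known observation model per viewpoint; the function names, the viewpoint names, and the probabilities are all hypothetical.

```python
import math

def entropy(p):
    """Shannon entropy in bits of a discrete probability distribution."""
    return -sum(x * math.log2(x) for x in p if x > 0)

def posterior(belief, likelihoods):
    """Bayes update: multiply the prior by P(obs | hypothesis), then renormalise."""
    unnorm = [b * l for b, l in zip(belief, likelihoods)]
    z = sum(unnorm)
    return [u / z for u in unnorm] if z > 0 else list(belief)

def expected_entropy(belief, obs_model):
    """Expected posterior entropy after observing from one viewpoint.

    obs_model[h][o] is the assumed P(observation o | hypothesis h).
    """
    total = 0.0
    for o in range(len(obs_model[0])):
        likelihoods = [obs_model[h][o] for h in range(len(belief))]
        p_obs = sum(b * l for b, l in zip(belief, likelihoods))
        if p_obs > 0:
            total += p_obs * entropy(posterior(belief, likelihoods))
    return total

def next_best_view(belief, viewpoints):
    """Pick the viewpoint name with the lowest expected posterior entropy."""
    return min(viewpoints, key=lambda name: expected_entropy(belief, viewpoints[name]))

# Hypothetical example: is the object a mug or a bowl?
belief = [0.5, 0.5]
viewpoints = {
    # From the front both objects look alike, so the view is uninformative.
    "front": [[0.5, 0.5], [0.5, 0.5]],
    # From the side a handle is usually visible on the mug.
    "side": [[0.9, 0.1], [0.2, 0.8]],
}
print(next_best_view(belief, viewpoints))
```

A passive system has no equivalent of `next_best_view`: it can run the same Bayes update on whatever it happens to observe, but it cannot choose the observation.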

Understanding Passive Perception: Concepts and Limitations

Passive perception in robotics refers to the process of gathering sensory data without actively influencing the environment, relying on pre-existing stimuli such as ambient light or sound. This approach limits the robot's ability to obtain comprehensive information, often resulting in incomplete or ambiguous data due to occlusions and static viewpoints. Understanding these constraints is crucial for optimizing sensor placement and developing algorithms that compensate for the lack of interaction inherent in passive perception systems.

Key Differences Between Active and Passive Perception

Active perception in robotics involves proactive sensing where the robot controls its sensors to gather data, enabling adaptive interaction with the environment. Passive perception relies on fixed sensors collecting data without influencing or modifying the sensory input, often resulting in limited environmental awareness. Key differences include sensor mobility, data acquisition strategy, and adaptability, with active perception offering enhanced scene understanding and environment exploration compared to passive perception's static data collection.

Sensor Integration in Active vs Passive Systems

Active perception systems in robotics integrate multiple sensors such as LiDAR, cameras, and tactile sensors to actively explore and interpret the environment, enhancing data acquisition through movement and sensor fusion techniques. Passive perception relies primarily on fixed sensors like cameras or microphones to gather environmental information without influencing the scene, leading to limitations in data richness and adaptability. Sensor integration in active systems enables dynamic adjustment of sensor configurations and data collection strategies, resulting in more comprehensive and robust environmental understanding compared to passive systems.
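As a small illustration of what sensor fusion can look like, the sketch below combines independent range estimates by inverse-variance weighting, a standard textbook technique. It is only one of many fusion methods and is not taken from any particular robotics stack. It assumes each sensor reports an unbiased measurement with a known variance; the `fuse` function and the LiDAR and camera numbers are hypothetical.

```python
def fuse(measurements):
    """Fuse independent (value, variance) pairs by inverse-variance weighting.

    Returns (fused_value, fused_variance). More precise sensors
    (smaller variance) receive proportionally more weight.
    """
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total

# Hypothetical distance-to-obstacle readings in metres.
lidar = (2.00, 0.01)         # precise
stereo_camera = (2.30, 0.09) # noisier
fused_value, fused_variance = fuse([lidar, stereo_camera])
print(fused_value, fused_variance)
```

The fused variance is always smaller than the smallest input variance, which is one reason combining sensors can help. An active system can go a step further and reposition the noisier sensor to reduce its variance before fusing.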

Real-World Applications of Active Perception in Robotics

Active perception has robots interact with their environment to improve data acquisition, whereas passive perception is limited to whatever its fixed sensors happen to capture. This approach enables real-world applications such as autonomous navigation, where robots reorient sensors to detect and avoid obstacles, and robotic manipulation, which requires continuous assessment of the workspace for precise object handling. With active perception, robots improve decision-making accuracy and adaptability in complex, uncertain environments such as warehouses and disaster response scenarios.

Use Cases of Passive Perception in Automated Systems

Passive perception suits automated systems where low energy consumption and long-term monitoring are crucial, such as fixed surveillance installations and platforms that rely on ambient signals captured by cameras and microphones. When these systems also use only passive sensors, which process existing environmental signals without emitting energy of their own, they can operate unobtrusively in security applications or wildlife observation. Examples include traffic flow analysis through video feeds and ambient sound detection for industrial equipment monitoring, both of which show the efficiency of passive perception for continuous, non-intrusive data collection.

Challenges and Constraints of Each Perception Approach

Active perception in robotics faces challenges such as increased energy consumption and the need for precise control algorithms to manage sensor movement and data acquisition. Passive perception struggles with limitations in environmental awareness and vulnerability to occlusions, relying solely on fixed sensors that may miss critical information. Both approaches encounter constraints related to real-time processing demands and robustness in dynamic, cluttered environments.
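The energy trade-off can be expressed as a simple scoring rule: an exploratory action is only worth taking if the information it is expected to yield outweighs what it costs. The sketch below is an illustrative assumption, not an established algorithm from the source. The `best_action` function, the linear utility, the `energy_weight` parameter, and the example numbers are all hypothetical.

```python
def best_action(actions, energy_weight=0.1):
    """Pick the action with the highest gain-minus-cost utility.

    actions maps a name to (expected_info_gain_bits, energy_joules).
    energy_weight converts joules into the same units as the gain;
    a larger value makes the robot more reluctant to move.
    """
    def utility(name):
        gain, energy = actions[name]
        return gain - energy_weight * energy
    return max(actions, key=utility)

# Hypothetical options for a mobile robot with a pan-tilt camera.
actions = {
    "stay": (0.0, 0.0),          # passive: no cost, no new viewpoint
    "pan_camera": (0.4, 1.0),    # cheap active adjustment
    "drive_closer": (0.6, 5.0),  # informative but expensive
}
print(best_action(actions))                      # energy matters moderately
print(best_action(actions, energy_weight=0.0))   # energy is free
print(best_action(actions, energy_weight=1.0))   # energy is scarce
```

With energy ignored, the most informative action wins; as energy becomes scarce, the rule degrades gracefully toward staying put, which is the passive behavior.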

Future Trends: Hybrid Perception Systems in Robotics

Future trends in robotics emphasize the integration of active and passive perception to enhance environmental awareness and decision-making accuracy. Hybrid perception systems combine active sensors such as LiDAR, which emit their own signal, with passive sensors such as cameras, microphones, and thermal detectors, which only receive ambient energy, to build more robust, adaptive models for real-time analysis. This fusion enables robots to operate efficiently in dynamic, complex environments, advancing applications in autonomous navigation, industrial automation, and human-robot interaction.
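One plausible shape for a hybrid controller is to monitor passively by default and switch to active exploration only when the robot is uncertain and can afford it. The sketch below illustrates that idea under stated assumptions: uncertainty is measured as the entropy of a discrete belief, and the `choose_mode` function, its thresholds, and the battery model are hypothetical rather than drawn from a specific system.

```python
import math

def entropy_bits(belief):
    """Shannon entropy in bits of a discrete belief distribution."""
    return -sum(p * math.log2(p) for p in belief if p > 0)

def choose_mode(belief, battery_fraction, entropy_threshold=0.5, min_battery=0.2):
    """Return "active" when uncertainty is high and energy allows, else "passive".

    Both thresholds are illustrative tuning parameters.
    """
    uncertain = entropy_bits(belief) > entropy_threshold
    has_energy = battery_fraction >= min_battery
    return "active" if uncertain and has_energy else "passive"

print(choose_mode([0.5, 0.5], battery_fraction=0.8))    # ambiguous scene, enough charge
print(choose_mode([0.95, 0.05], battery_fraction=0.8))  # confident already
print(choose_mode([0.5, 0.5], battery_fraction=0.1))    # ambiguous but low battery
```

The thresholds encode the trade-off: raising `entropy_threshold` keeps the robot passive for longer, while lowering `min_battery` lets it explore closer to depletion.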

Conclusion: Choosing the Optimal Perception Strategy for Robotics

Active perception enables robots to interact dynamically with their surroundings by controlling sensors and acquiring targeted data, enhancing task-specific accuracy. Passive perception relies on static data capture, offering computational simplicity but potentially limiting responsiveness in complex environments. Selecting the optimal perception strategy depends on the application requirements, environmental complexity, and real-time processing capabilities to balance accuracy, efficiency, and adaptability in robotic systems.

Active perception vs passive perception Infographic

techiny.com
