Simultaneous Localization and Mapping (SLAM) enables robots to build a map of an unknown environment while simultaneously tracking their position within it, improving navigation accuracy in dynamic settings. Traditional mapping, in contrast, relies on pre-existing data and robot positions that are already known; it does not estimate the robot's location in real time, which limits its adaptability. SLAM combines sensor data and estimation algorithms to localize the robot and refine the map in real time, a capability crucial for autonomous robotics applications.

Table of Comparison

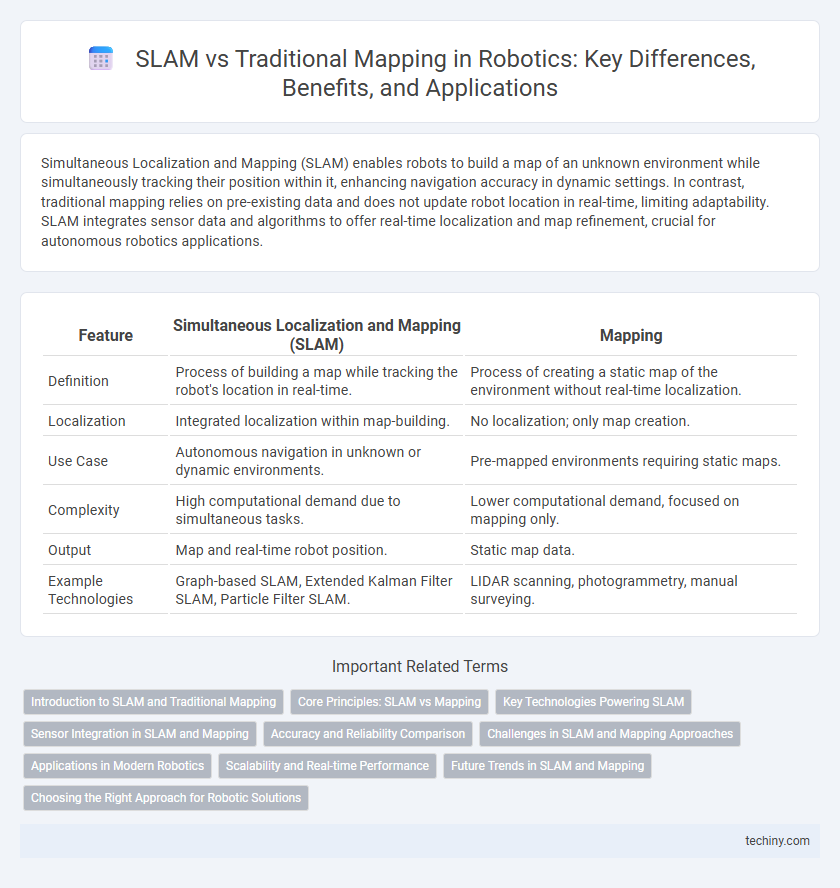

| Feature | Simultaneous Localization and Mapping (SLAM) | Traditional Mapping |
|---|---|---|
| Definition | Process of building a map while tracking the robot's location in real time. | Process of creating a static map of the environment without real-time localization. |
| Localization | Integrated localization within map-building. | No pose estimation; robot positions must be known beforehand or supplied by an external system. |
| Use Case | Autonomous navigation in unknown or dynamic environments. | Pre-mapped environments requiring static maps. |
| Complexity | High computational demand due to simultaneous tasks. | Lower computational demand, focused on mapping only. |
| Output | Map and real-time robot position. | Static map data. |
| Example Technologies | Graph-based SLAM, Extended Kalman Filter (EKF) SLAM, particle-filter SLAM. | LiDAR scanning, photogrammetry, manual surveying. |

Introduction to SLAM and Traditional Mapping

Simultaneous Localization and Mapping (SLAM) enables robots to build a map of an unknown environment while tracking their position within it, using sensors such as LiDAR, cameras, and IMUs. Traditional mapping relies on pre-existing maps or external localization systems. The robot's position must be known beforehand, because the mapping process places sensor readings at those positions rather than estimating them. SLAM techniques combine sensor data processing, probabilistic state estimation, and loop closure detection to support autonomous navigation and environment understanding.
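The sketch below shows the core of mapping with known poses. Each robot pose is taken as given: it is hard-coded here, and in practice it would come from an external source. Every range-bearing reading can therefore be projected straight into world coordinates and recorded in a grid, and no pose is ever estimated or corrected.

The following are illustrative assumptions, not a specific library's API:

* the 2D hit-count grid, which is a simplification of a probabilistic occupancy grid;
* the 0.5 m cell size;
* the function names.

```python
import math

CELL_SIZE = 0.5  # metres per grid cell (illustrative assumption)


def beam_endpoint(pose, dist, bearing):
    """Project a range-bearing reading taken at a known pose (x, y, theta) into world coordinates."""
    x, y, theta = pose
    return (x + dist * math.cos(theta + bearing),
            y + dist * math.sin(theta + bearing))


def world_to_cell(px, py, cell_size=CELL_SIZE):
    """Convert a world-frame point to integer grid indices."""
    return (math.floor(px / cell_size), math.floor(py / cell_size))


def build_hit_map(scans):
    """Count beam endpoints per grid cell.

    Each scan is (pose, readings): the pose is treated as ground truth
    (no localization happens here) and readings is a list of (range, bearing).
    """
    hits = {}
    for pose, readings in scans:
        for dist, bearing in readings:
            cell = world_to_cell(*beam_endpoint(pose, dist, bearing))
            hits[cell] = hits.get(cell, 0) + 1
    return hits


# Two scans from different known poses that both hit a wall point at (3.0, 0.0).
scans = [
    ((0.0, 0.0, 0.0), [(3.0, 0.0)]),
    ((1.0, 0.0, 0.0), [(2.0, 0.0)]),
]
print(build_hit_map(scans))  # {(6, 0): 2}
```

Suppose a pose passed to `build_hit_map` were wrong. The reading would simply land in the wrong cell, and nothing in this pipeline could detect or correct the error; that is the gap SLAM is designed to fill.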

Core Principles: SLAM vs Mapping

Simultaneous Localization and Mapping (SLAM) couples real-time localization with environment mapping. This lets robots build and update maps while determining their position within unknown spaces. Traditional mapping relies on robot positions known in advance to create environmental models, and it lacks the self-localization component integral to SLAM. Core SLAM algorithms fuse sensor data and motion estimates through probabilistic methods such as Kalman filters or particle filters. Mapping approaches, by contrast, treat the robot's trajectory as given rather than estimating it.
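Here is a minimal sketch of the Kalman-filter idea, reduced to one dimension. The state vector holds both the robot position and a single landmark position, so each measurement updates the pose and the map jointly. This toy motion and measurement model is linear, so the Extended Kalman Filter reduces to an ordinary Kalman filter.

The 1D world, the noise values and the function names `predict`/`update` are illustrative assumptions, not a production SLAM implementation.

```python
def predict(x, P, u, q):
    """Motion step: robot moves by odometry u (noise variance q); the landmark stays put."""
    x = [x[0] + u, x[1]]
    P = [[P[0][0] + q, P[0][1]],
         [P[1][0], P[1][1]]]
    return x, P


def update(x, P, z, r):
    """Measurement step for z = landmark - robot + noise (variance r), i.e. H = [-1, 1]."""
    y = z - (x[1] - x[0])                          # innovation
    PHt = [P[0][1] - P[0][0], P[1][1] - P[1][0]]   # P @ H^T
    S = PHt[1] - PHt[0] + r                        # H @ P @ H^T + r
    K = [PHt[0] / S, PHt[1] / S]                   # Kalman gain
    HP = [P[1][0] - P[0][0], P[1][1] - P[0][1]]    # H @ P
    x = [x[0] + K[0] * y, x[1] + K[1] * y]
    P = [[P[i][j] - K[i] * HP[j] for j in range(2)] for i in range(2)]
    return x, P


# State: [robot position, landmark position]. The robot starts at a known origin;
# the landmark position is unknown, so it gets a very large variance.
x = [0.0, 0.0]
P = [[0.0, 0.0], [0.0, 1e6]]

x, P = update(x, P, z=5.0, r=0.1)    # first sighting: landmark mapped at about 5.0
x, P = predict(x, P, u=1.0, q=0.05)  # robot drives 1 m; its uncertainty grows
x, P = update(x, P, z=4.1, r=0.1)    # re-observing the landmark corrects the robot
print(x, P)
```

After the second update:

* the robot's variance drops from 0.05 to about 0.04;
* the off-diagonal covariance becomes nonzero, meaning the robot and landmark estimates are now correlated.

That coupling is what separates filter-based SLAM from mapping with known poses.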

Key Technologies Powering SLAM

Simultaneous Localization and Mapping (SLAM) relies on sensor fusion, combining LiDAR, RGB-D cameras, and inertial measurement units (IMUs) to construct a map while tracking a robot's position in real time. Algorithms such as Extended Kalman Filter (EKF) SLAM, graph-based SLAM, and particle-filter SLAM provide pose estimation and environmental understanding for navigation in dynamic and unknown settings. Supporting technologies such as loop closure detection and real-time data processing frameworks improve robustness and scalability. These distinguish SLAM from traditional mapping methods, which rely on pre-mapped environments.
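Graph-based SLAM and loop closure can be illustrated with a toy 1D pose graph:

* odometry edges chain consecutive poses;
* one loop-closure edge states that the robot has returned to its starting point.

Solving the resulting linear least-squares problem spreads the accumulated drift across the whole trajectory instead of leaving it at the end.

The following are illustrative assumptions; real systems optimize 2D/3D poses with nonlinear solvers:

* the 1D setting and equal edge weights;
* the hand-written Gaussian-elimination solver;
* the function name and all numbers.

```python
def optimize_pose_graph(n, edges):
    """Least-squares 1D pose graph: minimize sum of (x_j - x_i - z_ij)^2 with x_0 fixed at 0.

    edges: list of (i, j, z_ij) relative-position constraints (odometry or loop closure).
    Returns the optimized positions x_0 .. x_{n-1}.
    """
    m = n - 1                            # unknowns x_1 .. x_{n-1}
    H = [[0.0] * m for _ in range(m)]    # normal-equation matrix A^T A
    g = [0.0] * m                        # right-hand side A^T b
    for i, j, z in edges:
        coeffs = {}                      # residual row: +1 on x_j, -1 on x_i (x_0 is fixed)
        if j > 0:
            coeffs[j - 1] = coeffs.get(j - 1, 0.0) + 1.0
        if i > 0:
            coeffs[i - 1] = coeffs.get(i - 1, 0.0) - 1.0
        for a, ca in coeffs.items():
            g[a] += ca * z
            for b, cb in coeffs.items():
                H[a][b] += ca * cb
    # Solve H x = g by Gaussian elimination with partial pivoting.
    for col in range(m):
        piv = max(range(col, m), key=lambda row: abs(H[row][col]))
        H[col], H[piv] = H[piv], H[col]
        g[col], g[piv] = g[piv], g[col]
        for row in range(col + 1, m):
            f = H[row][col] / H[col][col]
            for c in range(col, m):
                H[row][c] -= f * H[col][c]
            g[row] -= f * g[col]
    x = [0.0] * m
    for row in range(m - 1, -1, -1):
        x[row] = (g[row] - sum(H[row][c] * x[c] for c in range(row + 1, m))) / H[row][row]
    return [0.0] + x


# The robot drives out 2 m and back 2 m, but biased odometry reports +1.1, +1.1, -0.9, -0.9.
odometry = [(0, 1, 1.1), (1, 2, 1.1), (2, 3, -0.9), (3, 4, -0.9)]
loop_closure = [(4, 0, 0.0)]  # place recognition: pose 4 is back at the start

dead_reckoning = [0.0]
for _, _, z in odometry:
    dead_reckoning.append(dead_reckoning[-1] + z)

estimate = optimize_pose_graph(5, odometry + loop_closure)
print([round(v, 3) for v in dead_reckoning])
print([round(v, 3) for v in estimate])
```

Dead reckoning ends 0.4 m from the start. With equal weights, the optimizer distributes that 0.4 m inconsistency evenly over the five edges, giving positions of about [0, 1.02, 2.04, 1.06, 0.08].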

Sensor Integration in SLAM and Mapping

Simultaneous Localization and Mapping (SLAM) integrates sensor data from LiDAR, cameras, and inertial measurement units (IMUs) to build real-time, dynamic environment maps while simultaneously estimating the robot's position. In contrast, traditional mapping systems primarily rely on pre-collected sensor data, such as high-resolution LiDAR scans, without the concurrent localization process. Effective sensor fusion in SLAM enhances accuracy and robustness in unknown environments, enabling autonomous navigation and real-time updates.
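One basic building block of sensor fusion is combining independent noisy measurements of the same quantity, weighting each by the inverse of its variance. For Gaussian noise, this is the same arithmetic as a Kalman filter measurement update.

The sensor labels, readings and variances below are hypothetical. The sketch assumes the two sensors' errors are independent and Gaussian.

```python
def fuse(estimates):
    """Fuse independent Gaussian estimates [(mean, variance), ...] by inverse-variance weighting."""
    info = sum(1.0 / var for _, var in estimates)          # total information (1 / variance)
    mean = sum(m / var for m, var in estimates) / info     # information-weighted mean
    return mean, 1.0 / info


# Hypothetical readings of the same distance in metres: a low-noise LiDAR return
# and a noisier depth-camera estimate, each as (mean, variance).
lidar = (4.02, 0.01 ** 2)
camera = (4.10, 0.05 ** 2)
mean, var = fuse([lidar, camera])
print(round(mean, 4), var)
```

The fused estimate leans toward the more precise LiDAR reading (about 4.0231 m). Its variance is smaller than either input's, which is the sense in which fusion "enhances accuracy".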

Accuracy and Reliability Comparison

In unknown or changing environments, Simultaneous Localization and Mapping (SLAM) can offer higher accuracy and reliability than traditional mapping techniques, because it continuously updates both the robot's position and the environment map in real time. SLAM algorithms fuse data from LiDAR, cameras, and IMUs and correct cumulative errors when previously seen places or landmarks are re-observed. This provides robust localization even in dynamic or unfamiliar surroundings. Traditional mapping methods often rely on pre-existing maps and externally supplied positions, so they adapt poorly to environmental changes. Any drift in those positions also carries directly into the map, because nothing in the process corrects it.
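A worked equation makes the cumulative-error problem concrete. As a simplifying assumption, suppose:

* each odometry increment u_k differs from the true motion by an independent, zero-mean Gaussian error ε_k with variance σ²;
* real odometry errors are often biased and correlated, which makes matters worse.

The dead-reckoning estimate x̂_n then drifts from the true position x_n as follows:

```latex
\begin{aligned}
\hat{x}_n &= x_0 + \sum_{k=1}^{n} u_k, \qquad
x_n = x_0 + \sum_{k=1}^{n} (u_k + \varepsilon_k), \qquad
\varepsilon_k \sim \mathcal{N}(0, \sigma^2) \text{ i.i.d.} \\
e_n &= x_n - \hat{x}_n = \sum_{k=1}^{n} \varepsilon_k
\;\Rightarrow\;
\operatorname{Var}(e_n) = n\sigma^2, \qquad \operatorname{std}(e_n) = \sqrt{n}\,\sigma
\end{aligned}
```

For example, with σ = 0.01 m per step, the standard deviation after 10,000 steps is √10,000 × 0.01 m = 1 m, and it keeps growing with n. Re-observing known landmarks or closing a loop is what lets SLAM pull this error back down. A map built directly from uncorrected poses inherits it.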

Challenges in SLAM and Mapping Approaches

Simultaneous Localization and Mapping (SLAM) faces significant challenges, including real-time data processing, sensor noise, and adaptation to dynamic environments. It requires complex probabilistic algorithms to build accurate maps while localizing the robot. Mapping approaches often rely on pre-existing maps or offline data acquisition, which limits adaptability but reduces computational demands. Robust SLAM systems use techniques such as particle filters, Kalman filters, and graph-based optimization to manage uncertainty and support precise navigation in unknown or changing environments.
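The sketch below shows the predict-weight-resample cycle at the heart of a particle filter. It is applied to the simpler problem of localizing a robot in 1D against one landmark whose position is already known. Particle-filter SLAM variants such as FastSLAM run the same cycle, but each particle also carries its own map estimate.

The following are illustrative assumptions:

* the landmark position;
* the noise levels and particle count;
* multinomial resampling;
* the function name `pf_step`.

```python
import math
import random

LANDMARK = 10.0  # known landmark position (illustrative assumption)


def pf_step(particles, u, z, motion_std, meas_std, rng):
    """One predict-weight-resample cycle for 1D localization against a known landmark."""
    # 1. Predict: move every particle by odometry u plus sampled motion noise.
    moved = [p + u + rng.gauss(0.0, motion_std) for p in particles]
    # 2. Weight: Gaussian likelihood of the measured range z = LANDMARK - position.
    weights = [math.exp(-0.5 * ((z - (LANDMARK - p)) / meas_std) ** 2) for p in moved]
    if sum(weights) == 0.0:  # every particle is implausible; fall back to uniform weights
        weights = [1.0] * len(moved)
    # 3. Resample: draw particles in proportion to their weights (multinomial resampling).
    return rng.choices(moved, weights=weights, k=len(moved))


rng = random.Random(42)
particles = [rng.uniform(0.0, 10.0) for _ in range(1000)]  # start position unknown
true_pos = 2.0
for _ in range(5):
    true_pos += 1.0
    z = LANDMARK - true_pos + rng.gauss(0.0, 0.2)  # simulated noisy range reading
    particles = pf_step(particles, 1.0, z, motion_std=0.1, meas_std=0.2, rng=rng)

estimate = sum(particles) / len(particles)
print(round(estimate, 2), true_pos)
```

Starting from particles spread uniformly over 10 m, a few measurement cycles concentrate them around the true position. Motion noise in the prediction step keeps the resampled set from collapsing onto a few duplicates.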

Applications in Modern Robotics

Simultaneous Localization and Mapping (SLAM) integrates real-time position tracking with environmental mapping, enabling autonomous robots to navigate unknown spaces without pre-existing maps. In contrast, traditional mapping relies on predefined maps and lacks real-time localization capabilities, limiting its use in dynamic or unexplored environments. SLAM's applications in modern robotics include autonomous vehicles, drone navigation, and robotic vacuum cleaners, where accurate localization and environment understanding are critical for efficient operation.

Scalability and Real-time Performance

Simultaneous Localization and Mapping (SLAM) enhances scalability by enabling robots to build and update maps in unknown environments while simultaneously tracking their position, reducing the need for pre-existing maps. Mapping alone often requires extensive pre-processing and lacks adaptability in dynamic or large-scale settings, limiting real-time performance. SLAM's integrated approach supports real-time data processing and continuous environment updates, critical for scalable robotic navigation and autonomous operation.

Future Trends in SLAM and Mapping

Future trends in Simultaneous Localization and Mapping (SLAM) emphasize the integration of artificial intelligence and machine learning to enhance real-time environmental understanding and adaptive navigation in complex settings. Advances in sensor fusion, including LiDAR, visual cameras, and inertial measurement units, are driving improvements in the accuracy and robustness of both SLAM and traditional mapping techniques. Emerging developments highlight cloud-based processing and collaborative multi-robot systems to enable large-scale, dynamic environment mapping with continuous updates and improved scalability.

Choosing the Right Approach for Robotic Solutions

Simultaneous Localization and Mapping (SLAM) enables robots to build a map of an unknown environment while tracking their location within it, which is essential for dynamic or unexplored settings. Traditional mapping relies on pre-existing maps and is suitable for controlled, static environments where localization data is readily available. Selecting the appropriate approach depends on the robot's operational context, environmental complexity, and the need for real-time adaptation, with SLAM offering greater flexibility for autonomous navigation in unpredictable scenarios.


techiny.com
