Active vision involves a robot dynamically adjusting its sensors and viewpoint to gather targeted information, enhancing perception accuracy and interaction capabilities. Passive vision relies on fixed sensors that capture visual data without movement or adaptation, limiting the robot's ability to respond to changing environments. Employing active vision systems improves spatial understanding and decision-making in robotic applications compared to passive vision approaches.

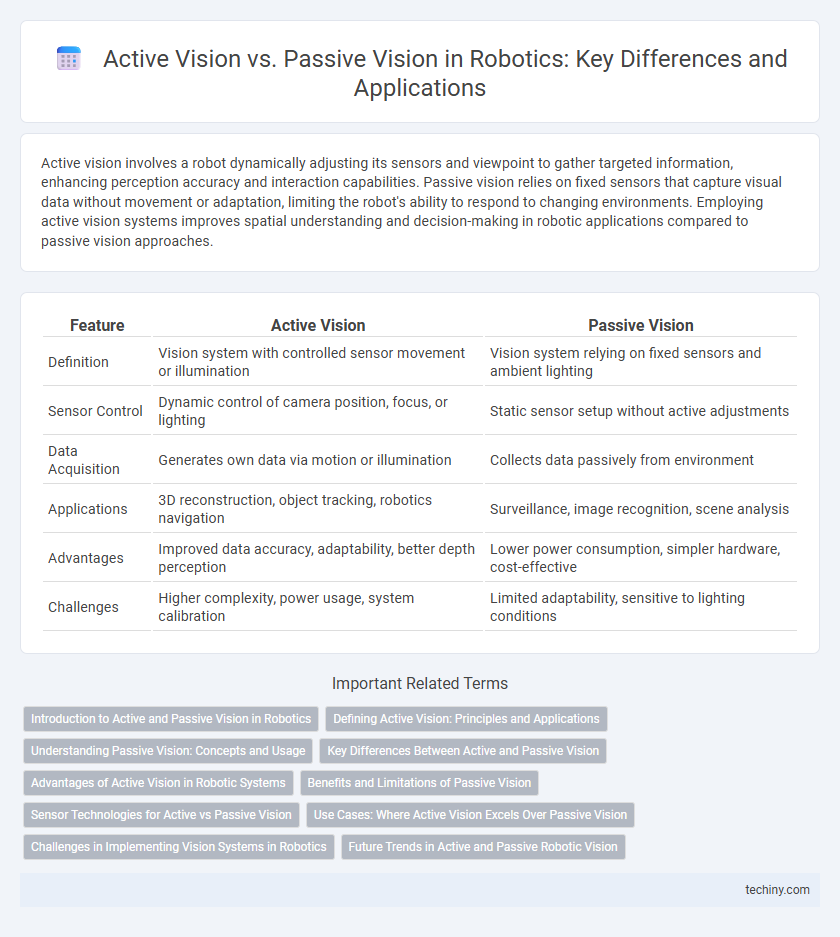

Table of Comparison

| Feature | Active Vision | Passive Vision |
|---|---|---|
| Definition | Vision system with controlled sensor movement or illumination | Vision system relying on fixed sensors and ambient lighting |
| Sensor Control | Dynamic control of camera position, focus, or lighting | Static sensor setup without active adjustments |
| Data Acquisition | Generates own data via motion or illumination | Collects data passively from environment |
| Applications | 3D reconstruction, object tracking, robotics navigation | Surveillance, image recognition, scene analysis |
| Advantages | Improved data accuracy, adaptability, better depth perception | Lower power consumption, simpler hardware, cost-effective |
| Challenges | Higher complexity, power usage, system calibration | Limited adaptability, sensitive to lighting conditions |

Introduction to Active and Passive Vision in Robotics

Active vision in robotics involves the robot dynamically controlling its sensors, such as cameras, to acquire information by moving or adjusting viewpoints, enhancing scene understanding and interaction capabilities. Passive vision relies on fixed sensors capturing visual data without sensor movement, limiting adaptability but reducing complexity and energy consumption. The distinction between active and passive vision is critical for designing robotic systems optimized for tasks like object recognition, navigation, and manipulation.

Defining Active Vision: Principles and Applications

Active vision integrates robotic sensors and motor control to dynamically adjust the viewpoint, enabling systems to acquire task-relevant information through real-time interaction with the environment. This approach draws on principles such as sensorimotor coordination, selective attention, and continuous feedback, in contrast to passive vision, which relies solely on fixed camera inputs without environmental adaptation. Applications of active vision span autonomous navigation, object manipulation, and robotic inspection, where it improves accuracy and adaptability in complex, unstructured settings.
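The feedback principle described above can be illustrated with a small gaze-control loop. This is only a minimal sketch under stated assumptions: the `PanTilt` type, the `gaze_step` and `observe` helpers, the linear camera model, the sign convention (positive tilt looks down, matching image rows), and the gain of 0.5 are all hypothetical choices for illustration, not part of any particular robot API.

```python
from dataclasses import dataclass


@dataclass
class PanTilt:
    """Hypothetical pan/tilt head state, in degrees."""
    pan_deg: float = 0.0
    tilt_deg: float = 0.0


def gaze_step(head, target_px, image_size, fov_deg, gain=0.5):
    """One feedback step: turn the camera part of the way toward the target."""
    (u, v), (w, h) = target_px, image_size
    # Offset of the target from the image centre, as a fraction of the image.
    ex = (u - w / 2) / w
    ey = (v - h / 2) / h
    # Assumed linear model: offset fraction times field of view gives the angle.
    return PanTilt(
        head.pan_deg + gain * ex * fov_deg[0],
        head.tilt_deg + gain * ey * fov_deg[1],
    )


def observe(head, target_deg, image_size, fov_deg):
    """Simulated camera: where a target at a given bearing lands in the image."""
    w, h = image_size
    u = w / 2 + (target_deg[0] - head.pan_deg) / fov_deg[0] * w
    v = h / 2 + (target_deg[1] - head.tilt_deg) / fov_deg[1] * h
    return u, v


def track(target_deg, steps=20):
    """Run the sense-then-move loop and return the final head pose."""
    head = PanTilt()
    size, fov = (640, 480), (60.0, 45.0)
    for _ in range(steps):
        pixel = observe(head, target_deg, size, fov)  # sense
        head = gaze_step(head, pixel, size, fov)      # act
    return head


if __name__ == "__main__":
    print(track((20.0, -5.0)))
```

In this simulation each step removes a fixed fraction of the remaining pointing error, so the head settles on the target bearing. A real system would replace `observe` with a detector running on camera frames and would need to account for actuator limits and latency.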

Understanding Passive Vision: Concepts and Usage

Passive vision in robotics captures images using ambient light only, emphasizing image analysis without altering the environment. Cameras interpret the scene from light reflected off surfaces, supporting tasks like object recognition and scene reconstruction. Its advantages include lower energy consumption and simpler hardware, though it is sensitive to lighting conditions and must infer depth indirectly (for example, from stereo disparity) rather than measure it directly as signal-emitting sensors do.

Key Differences Between Active and Passive Vision

Active vision systems in robotics employ sensors that move or adjust to gather information, enabling dynamic scene analysis and better depth perception through multiple viewpoints. Passive vision relies on fixed sensors that capture the scene from a single, unchanging viewpoint, which limits perspective changes and real-time interaction. The key differences are the sensing approach, the richness of the data collected, and the ability to adapt to environmental changes for the task at hand.

Advantages of Active Vision in Robotic Systems

Active vision enhances a robot's interaction with its environment by dynamically adjusting the sensor's position and focus. Compared with passive vision, which relies on fixed perspectives, this lets the robot acquire more relevant and detailed visual information. The adaptability improves object recognition, depth perception, and scene understanding, contributing to higher accuracy in navigation and manipulation tasks. Robots equipped with active vision can also handle occlusions better, by moving to a viewpoint from which the target is visible, and cope with varying lighting conditions, resulting in greater operational robustness and autonomy.
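One common way to think about handling occlusions is to pick the next viewpoint that reveals the most of the scene not yet observed. The sketch below is a simplified, greedy illustration of that idea, not a specific published planner. The viewpoint names, the integer "cell" identifiers in `VIEWS`, and the `max_moves` budget are all made up for the example.

```python
# Hypothetical visibility table: which scene cells each viewpoint can see.
VIEWS = {
    "front": {1, 2, 3},
    "left": {3, 4},
    "right": {2, 3, 5, 6},
    "top": {1, 2, 3, 4},
}


def next_best_view(candidates, seen):
    """Return the candidate viewpoint that reveals the most unseen cells."""
    return max(candidates, key=lambda name: len(candidates[name] - seen))


def explore(views, start, max_moves=3):
    """Greedily move the camera until nothing new is revealed or moves run out."""
    seen = set(views[start])
    path = [start]
    remaining = {name: cells for name, cells in views.items() if name != start}
    for _ in range(max_moves):
        if not remaining:
            break
        best = next_best_view(remaining, seen)
        gain = remaining.pop(best) - seen
        if not gain:
            break  # no viewpoint adds information, so stop moving
        seen |= gain
        path.append(best)
    return path, seen


if __name__ == "__main__":
    path, seen = explore(VIEWS, "front")
    print(path, sorted(seen))
```

A fixed camera at `"front"` would only ever see three of the six cells in this toy scene. The greedy strategy is a design shortcut: it is cheap to compute but does not guarantee the shortest sequence of moves, and it ignores the cost of travelling between viewpoints.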

Benefits and Limitations of Passive Vision

Passive vision systems in robotics rely on ambient lighting and typically use cameras or sensors to capture visual information without emitting any light or signals. Benefits include lower power consumption, reduced hardware complexity, and minimal interference with the environment, making them suitable for long-term monitoring and energy-efficient applications. Limitations involve sensitivity to lighting conditions, difficulty in depth perception, and challenges with detecting objects in low contrast or cluttered scenes, which can reduce reliability in dynamic or poorly illuminated environments.

Sensor Technologies for Active vs Passive Vision

Active vision systems utilize signal-emitting sensors such as LiDAR, structured light, and time-of-flight cameras, which capture the reflected signal to enable precise depth perception and environmental mapping. Passive vision relies on conventional RGB cameras, used singly or as stereo pairs, to interpret ambient light without emitting signals, making it energy-efficient but less effective in low-light or low-texture scenes. Sensor fusion combining active and passive technologies enhances robotic perception by leveraging the strengths of both methods for improved accuracy and robustness.
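The difference between the two sensing approaches shows up in how depth is obtained. A time-of-flight sensor times an emitted pulse, while a stereo pair triangulates from the pixel shift (disparity) between two passive images. The sketch below uses the standard textbook relations for an idealised pulsed sensor and a rectified stereo pair. The function names and the sample numbers are illustrative assumptions.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s):
    """Active sensing: distance from the round-trip time of an emitted pulse."""
    # The pulse travels to the surface and back, hence the division by two.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def stereo_depth_m(focal_px, baseline_m, disparity_px):
    """Passive sensing: depth from disparity in a rectified stereo pair."""
    if disparity_px <= 0:
        # Zero disparity means no match was found or the point is at infinity.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Assumed example values: a 20 ns round trip, and a 700 px focal length
    # with a 12 cm baseline and a 42 px disparity.
    print(tof_distance_m(20e-9))
    print(stereo_depth_m(700.0, 0.12, 42.0))
```

The stereo relation depends on finding matching pixels in both images, which is why passive depth degrades in dark or textureless scenes where matches are unreliable.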

Use Cases: Where Active Vision Excels Over Passive Vision

Active vision excels in dynamic environments requiring real-time interaction, such as robotic manipulation and autonomous navigation, by actively controlling camera parameters to optimize perception. This approach allows robots to focus on relevant objects, improving accuracy in tasks like object recognition, tracking, and 3D reconstruction. Passive vision systems, relying on fixed sensors, often struggle with occlusions and varying lighting, whereas active vision adapts to these challenges for enhanced situational awareness.

Challenges in Implementing Vision Systems in Robotics

Active vision systems in robotics use movable cameras and sensors to capture environmental data dynamically, offering enhanced adaptability at the cost of increased mechanical complexity and higher energy consumption. Passive vision systems rely on fixed cameras, which simplifies design but limits adaptability to changing environments and dynamic interactions. Implementing either kind of system also means dealing with real-time processing demands, varying lighting conditions, occlusions, and sensor calibration to ensure accurate perception and decision-making.

Future Trends in Active and Passive Robotic Vision

Future trends in active robotic vision emphasize the integration of real-time environmental interaction through dynamic sensors like LiDAR and time-of-flight cameras, enabling robots to navigate complex and unstructured environments more effectively. Passive vision systems are advancing via deep learning-based image recognition and 3D reconstruction, improving scene understanding without emitting signals. Hybrid approaches combining active sensing with passive data processing are expected to dominate, enhancing robotic perception accuracy and adaptability in autonomous applications.
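As a rough illustration of what a hybrid approach can look like, the sketch below fuses one depth reading from an active sensor with one from a passive sensor using inverse-variance weighting. This is one simple, well-known fusion rule, chosen here for illustration and not a claim about how any particular robot does it. It assumes the two errors are independent and roughly Gaussian, and the sample depths and standard deviations are invented.

```python
def fuse_depth(estimates):
    """Inverse-variance weighted mean of (depth_m, std_m) pairs.

    Assumes independent, roughly Gaussian errors on each estimate.
    Returns the fused depth and its standard deviation.
    """
    weights = [1.0 / (std ** 2) for _, std in estimates]
    total = sum(weights)
    depth = sum(w * d for w, (d, _) in zip(weights, estimates)) / total
    return depth, (1.0 / total) ** 0.5


if __name__ == "__main__":
    lidar = (2.00, 0.02)   # hypothetical active reading: 2.00 m, 2 cm std
    stereo = (2.10, 0.10)  # hypothetical passive reading: 2.10 m, 10 cm std
    print(fuse_depth([lidar, stereo]))
```

The fused value sits close to the more precise reading, and its uncertainty is lower than that of either input, which is the basic argument for combining the two sensing styles.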

techiny.com