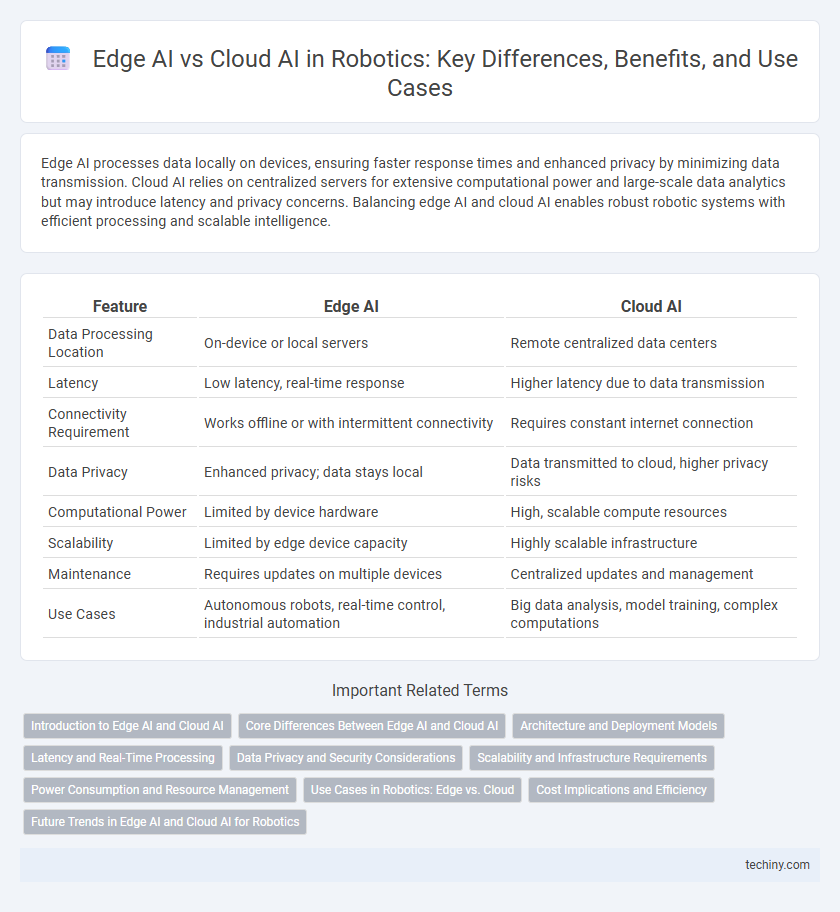

Edge AI processes data locally on devices, delivering faster response times and enhanced privacy by minimizing data transmission. Cloud AI relies on centralized servers for extensive computational power and large-scale data analytics but may introduce latency and privacy concerns. Combining the two lets a robotic system handle time-critical processing on board while drawing on the cloud for heavy computation and fleet-wide analytics.

Table of Comparison

| Feature | Edge AI | Cloud AI |
|---|---|---|
| Data Processing Location | On-device or local servers | Remote centralized data centers |
| Latency | Low latency, real-time response | Higher latency due to data transmission |
| Connectivity Requirement | Works offline or with intermittent connectivity | Requires a reliable network connection |
| Data Privacy | Enhanced privacy; data stays local | Data sent to the cloud; higher privacy risk |
| Computational Power | Limited by device hardware | High, scalable compute resources |
| Scalability | Limited by edge device capacity | Highly scalable infrastructure |
| Maintenance | Requires updates on multiple devices | Centralized updates and management |
| Use Cases | Autonomous robots, real-time control, industrial automation | Big data analysis, model training, complex computations |

Introduction to Edge AI and Cloud AI

Edge AI processes data locally on devices such as robots, enabling faster decision-making and reduced latency compared to Cloud AI, which relies on centralized servers to analyze data remotely. Robotics applications benefit from Edge AI by improving real-time responsiveness and maintaining privacy, while Cloud AI offers powerful computational resources and scalability for complex tasks. Understanding the balance between Edge AI's on-device processing and Cloud AI's remote analytics is critical for optimizing robotic performance and efficiency.

Core Differences Between Edge AI and Cloud AI

Edge AI processes data locally on devices such as robots, enabling real-time decision-making with reduced latency and enhanced privacy, while Cloud AI relies on centralized servers for data processing, offering greater computational power and scalability. Edge AI is optimal for environments with limited connectivity or strict security requirements, whereas Cloud AI excels in tasks demanding intensive data analysis and model training. The core difference lies in data processing location, impacting response time, resource utilization, and data control.

Architecture and Deployment Models

Edge AI architecture processes data locally on robotic devices using embedded processors and real-time decision-making units, minimizing latency and giving the robot greater autonomy. Cloud AI relies on centralized servers that handle complex computations and large-scale data analytics, enabling continual learning and model updates but requiring stable network connectivity. Deployment models differ accordingly: edge AI favors distributed, low-power environments, while cloud AI relies on centralized, resource-rich infrastructure to optimize performance and scalability in robotics applications.
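One common way to combine the two deployment models is an edge-first pipeline that escalates to the cloud only when needed. The sketch below is a minimal illustration under stated assumptions: `edge_model` and `cloud_model` are hypothetical callables returning a `(label, confidence)` pair, and the 0.8 escalation threshold is an arbitrary placeholder, not a recommended value.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# Hypothetical model interface: takes a raw frame, returns (label, confidence).
Model = Callable[[bytes], Tuple[str, float]]


@dataclass
class Prediction:
    label: str
    confidence: float
    source: str  # "edge" or "cloud"


def hybrid_infer(
    frame: bytes,
    edge_model: Model,
    cloud_model: Optional[Model],
    network_up: bool,
    min_confidence: float = 0.8,  # hypothetical escalation threshold
) -> Prediction:
    """Run the on-board model first and escalate to the cloud only when the
    local result is uncertain and a connection is available."""
    label, conf = edge_model(frame)
    if conf >= min_confidence or not network_up or cloud_model is None:
        # Confident enough, or offline: act on the local result.
        return Prediction(label, conf, "edge")
    try:
        cloud_label, cloud_conf = cloud_model(frame)
    except ConnectionError:
        # Link dropped mid-request: fall back to the local result.
        return Prediction(label, conf, "edge")
    return Prediction(cloud_label, cloud_conf, "cloud")


if __name__ == "__main__":
    edge = lambda f: ("pallet", 0.62)   # stub standing in for an on-board model
    cloud = lambda f: ("pallet", 0.97)  # stub standing in for a larger cloud model
    print(hybrid_infer(b"frame", edge, cloud, network_up=True))
    print(hybrid_infer(b"frame", edge, cloud, network_up=False))
```

Because the edge path never waits on the network, the robot keeps acting when connectivity drops; the cloud only refines uncertain results.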

Latency and Real-Time Processing

Edge AI in robotics significantly reduces latency by processing data locally on the device, enabling real-time decision-making crucial for tasks like autonomous navigation and obstacle avoidance. Cloud AI, while offering expansive computational resources, introduces latency due to data transmission delays, which can impair the responsiveness needed in dynamic environments. Real-time processing capabilities of edge AI ensure immediate feedback and control, essential for safety and efficiency in robotic operations.
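The latency argument can be made concrete with a per-cycle budget: a control loop running at f Hz has 1000/f ms per cycle, and an offloaded decision must fit uplink, server time, and downlink into that window. The sketch below assumes a 50 Hz loop and made-up timings purely for illustration.

```python
# All timing figures below are hypothetical placeholders, not measurements.
CONTROL_RATE_HZ = 50.0      # e.g. an obstacle-avoidance loop running at 50 Hz
EDGE_INFERENCE_MS = 8.0     # assumed on-device inference time


def deadline_ms(rate_hz: float) -> float:
    """Time available per control cycle: one period of the loop."""
    return 1000.0 / rate_hz


def cloud_round_trip_ms(uplink_ms: float, server_ms: float, downlink_ms: float) -> float:
    """Offloading pays for the network in both directions plus server time."""
    return uplink_ms + server_ms + downlink_ms


def meets_deadline(latency_ms: float, rate_hz: float) -> bool:
    return latency_ms <= deadline_ms(rate_hz)


if __name__ == "__main__":
    cloud_ms = cloud_round_trip_ms(uplink_ms=15.0, server_ms=10.0, downlink_ms=15.0)
    print(f"deadline per cycle: {deadline_ms(CONTROL_RATE_HZ):.1f} ms")
    print(f"edge:  {EDGE_INFERENCE_MS:.1f} ms, meets deadline: {meets_deadline(EDGE_INFERENCE_MS, CONTROL_RATE_HZ)}")
    print(f"cloud: {cloud_ms:.1f} ms, meets deadline: {meets_deadline(cloud_ms, CONTROL_RATE_HZ)}")
```

With these placeholder numbers the cloud path needs 40 ms against a 20 ms budget, while on-device inference fits with room to spare. In practice the check should use worst-case rather than average network latency, since jitter is what breaks real-time loops.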

Data Privacy and Security Considerations

Edge AI in robotics enhances data privacy by processing sensitive information locally on devices, minimizing exposure to cloud-based vulnerabilities. Cloud AI offers robust computational power but relies on transmitting data over networks, which exposes that data to interception and unauthorized access unless it is encrypted in transit and access to stored copies is controlled. Processing on the edge also reduces latency and supports secure, real-time decision-making while preserving user privacy in robotic applications.
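A practical form of this privacy benefit is data minimization: sensitive raw data is processed on the robot and only a derived summary is uploaded. In this sketch, `detect_people` is a hypothetical on-board detector and the JSON payload fields are illustrative, not any particular platform's schema.

```python
import json
from typing import Callable, List, Tuple

# (x, y, width, height) in pixels
BoundingBox = Tuple[int, int, int, int]
# Hypothetical on-board detector: raw camera frame -> boxes around people.
PersonDetector = Callable[[bytes], List[BoundingBox]]


def build_upload(robot_id: str, frame: bytes, detect_people: PersonDetector) -> str:
    """Run detection on the robot and return only a minimal JSON summary.

    The raw frame and the box coordinates never enter the payload, so the
    cloud learns how many people were seen but not what they looked like
    or exactly where they stood.
    """
    boxes = detect_people(frame)  # executes locally
    payload = {"robot_id": robot_id, "people_count": len(boxes)}
    return json.dumps(payload)


if __name__ == "__main__":
    fake_detector = lambda frame: [(10, 20, 40, 90), (200, 30, 35, 85)]
    print(build_upload("robot-07", b"<raw jpeg bytes>", fake_detector))
```

Minimization complements, rather than replaces, encryption in transit for whatever the robot does send.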

Scalability and Infrastructure Requirements

Edge AI scales differently from cloud AI in robotics: because each robot processes its own data, adding robots adds compute without a proportional increase in central server load, and reliance on continuous internet connectivity is reduced, although each robot's capacity remains bounded by its own hardware. This decentralized approach also lowers infrastructure requirements compared to cloud AI, which demands substantial bandwidth and centralized computing resources to handle extensive data transmission and complex processing tasks. Leveraging edge AI allows robotic systems to operate efficiently in diverse environments with limited infrastructure while maintaining real-time performance.
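The infrastructure difference shows up clearly in uplink bandwidth. The arithmetic below compares a fleet that streams raw video to the cloud with one that sends only locally detected events; the 4 Mbit/s stream rate, event rate, and event size are assumed placeholders, not measured values.

```python
# Placeholder figures for illustration only; real rates depend on the sensors and codec.
RAW_STREAM_MBPS = 4.0      # assumed per-robot compressed video uplink
EVENTS_PER_SECOND = 10.0   # assumed rate of locally detected events
BYTES_PER_EVENT = 200      # assumed size of one serialized event


def events_to_mbps(events_per_s: float, bytes_per_event: int) -> float:
    """Convert an event stream to megabits per second (8 bits per byte)."""
    return events_per_s * bytes_per_event * 8 / 1_000_000


def fleet_uplink_mbps(robots: int, per_robot_mbps: float) -> float:
    """Central uplink needed when every robot sends the same stream."""
    return robots * per_robot_mbps


if __name__ == "__main__":
    per_robot_events = events_to_mbps(EVENTS_PER_SECOND, BYTES_PER_EVENT)
    for robots in (10, 50, 200):
        cloud = fleet_uplink_mbps(robots, RAW_STREAM_MBPS)
        edge = fleet_uplink_mbps(robots, per_robot_events)
        print(f"{robots:>4} robots: raw video {cloud:7.1f} Mbit/s, edge events {edge:6.2f} Mbit/s")
```

Both totals grow linearly with fleet size; what edge processing changes is the per-robot rate, here 4 Mbit/s versus 0.016 Mbit/s, which keeps the central link small as the fleet grows.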

Power Consumption and Resource Management

Edge AI in robotics can reduce power consumption by processing data locally, avoiding constant data transmission to the cloud and lowering latency. Local processing also supports efficient resource management by making full use of onboard hardware such as CPUs, GPUs, and specialized AI accelerators, which can extend battery life and improve real-time decision-making. The saving is not automatic, since on-device inference itself draws power; the balance depends on model size, hardware efficiency, and how much data would otherwise be sent. Cloud AI, by contrast, relies on remote servers: transmitting data costs energy on the robot, and dependence on stable network connectivity can cause delays and inefficient resource allocation.
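Whether local processing actually saves energy comes down to E = P * t for each path: accelerator power times inference time on the edge, versus radio power times transfer time (plus idle time while waiting) when offloading. The figures in this sketch are hypothetical and only show how to set up the comparison.

```python
# Illustrative power and timing figures only; measure on the target hardware.
def energy_mj(power_w: float, duration_ms: float) -> float:
    """E = P * t. Watts multiplied by milliseconds gives millijoules."""
    return power_w * duration_ms


def edge_energy_mj(accel_power_w: float, inference_ms: float) -> float:
    """Energy to run one inference on the onboard accelerator."""
    return energy_mj(accel_power_w, inference_ms)


def offload_energy_mj(radio_power_w: float, radio_ms: float,
                      idle_power_w: float, wait_ms: float) -> float:
    """Energy to send a frame, receive the result, and idle while the server works."""
    return energy_mj(radio_power_w, radio_ms) + energy_mj(idle_power_w, wait_ms)


if __name__ == "__main__":
    edge = edge_energy_mj(accel_power_w=5.0, inference_ms=8.0)
    cloud = offload_energy_mj(radio_power_w=2.0, radio_ms=30.0, idle_power_w=0.5, wait_ms=10.0)
    print(f"edge: {edge:.1f} mJ per decision, offload: {cloud:.1f} mJ per decision")
```

With these placeholders local inference wins (40 mJ versus 65 mJ per decision), but a heavier model or a cheaper radio link can reverse the outcome, so the comparison is worth repeating with measured numbers.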

Use Cases in Robotics: Edge vs. Cloud

Edge AI in robotics enables real-time data processing for applications like autonomous navigation, industrial automation, and predictive maintenance by reducing latency and dependency on internet connectivity. Cloud AI excels in large-scale data analysis, machine learning model training, and collaborative robot coordination, benefiting from vast computational resources and centralized updates. Combining edge and cloud AI optimizes robotic performance by balancing local responsiveness with advanced analytics and scalability.
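The split between edge and cloud use cases can be expressed as a simple placement rule. The `Task` fields, rule order, and the 40 ms round-trip figure below are assumptions chosen for illustration; real schedulers weigh many more factors.

```python
from dataclasses import dataclass
from enum import Enum


class Placement(Enum):
    EDGE = "edge"
    CLOUD = "cloud"


@dataclass
class Task:
    name: str
    deadline_ms: float       # how soon a result is needed
    fits_on_device: bool     # can the robot's hardware run it at all?
    needs_fleet_data: bool   # e.g. training on data pooled from many robots
    sensitive_data: bool     # raw inputs should not leave the robot


def place(task: Task, cloud_round_trip_ms: float) -> Placement:
    """Illustrative placement rules, checked in priority order."""
    if task.sensitive_data:
        return Placement.EDGE   # privacy constraint wins
    if task.deadline_ms < cloud_round_trip_ms:
        return Placement.EDGE   # the network alone would miss the deadline
    if task.needs_fleet_data or not task.fits_on_device:
        return Placement.CLOUD  # needs pooled data or more compute than the robot has
    return Placement.EDGE       # default: keep work local when it fits


if __name__ == "__main__":
    tasks = [
        Task("obstacle_avoidance", 20.0, True, False, False),
        Task("predictive_maintenance_alert", 1_000.0, True, False, False),
        Task("model_retraining", 3_600_000.0, False, True, False),
    ]
    for t in tasks:
        print(f"{t.name}: {place(t, cloud_round_trip_ms=40.0).value}")
```

Ordering the checks encodes priorities: privacy and hard deadlines pin a task to the robot before compute needs are considered. A sensitive task that does not fit on the device would need a smaller model rather than a cloud fallback.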

Cost Implications and Efficiency

Edge AI in robotics significantly reduces latency and bandwidth costs by processing data locally, enabling real-time decision-making crucial for autonomous operations. Cloud AI, while offering scalable computational power and extensive data storage, incurs ongoing expenses related to data transmission, cloud service fees, and potential delays affecting robotic responsiveness. Balancing cost and efficiency depends on application-specific needs: edge AI trades a higher upfront hardware cost per robot for lower ongoing bandwidth and service fees and low latency, while cloud AI is best suited to complex processing that requires large-scale analytics.
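The cost trade-off can be framed as recurring cloud fees versus amortized edge hardware. All prices, volumes, and the 36-month hardware lifetime in this sketch are invented placeholders, not real vendor rates; the point is the structure of the comparison, not the numbers.

```python
# Every price and quantity here is a made-up placeholder, not a real vendor rate.
def cloud_monthly_cost(gb_uploaded: float, price_per_gb: float,
                       inference_calls: int, price_per_1k_calls: float) -> float:
    """Recurring cost: data transfer plus per-request inference fees."""
    return gb_uploaded * price_per_gb + inference_calls / 1000 * price_per_1k_calls


def edge_monthly_cost(hardware_cost: float, lifetime_months: int,
                      monthly_maintenance: float) -> float:
    """Upfront accelerator cost amortized over its service life, plus upkeep."""
    return hardware_cost / lifetime_months + monthly_maintenance


if __name__ == "__main__":
    cloud = cloud_monthly_cost(gb_uploaded=500, price_per_gb=0.05,
                               inference_calls=2_000_000, price_per_1k_calls=0.10)
    edge = edge_monthly_cost(hardware_cost=1200, lifetime_months=36, monthly_maintenance=5)
    print(f"per robot per month: cloud ${cloud:.2f}, edge ${edge:.2f}")
```

The placeholders favor the edge, but at low request volumes or with expensive onboard accelerators the balance can flip.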

Future Trends in Edge AI and Cloud AI for Robotics

Future trends in robotics emphasize the integration of edge AI for real-time data processing, enhancing robot autonomy and reducing latency in decision-making. Cloud AI continues to expand capabilities with vast computational resources and collaborative learning through massive datasets, supporting complex tasks like multi-robot coordination and large-scale analytics. Hybrid architectures leveraging both edge and cloud AI are emerging to optimize performance, scalability, and energy efficiency in advanced robotic systems.

edge AI vs cloud AI Infographic

techiny.com
