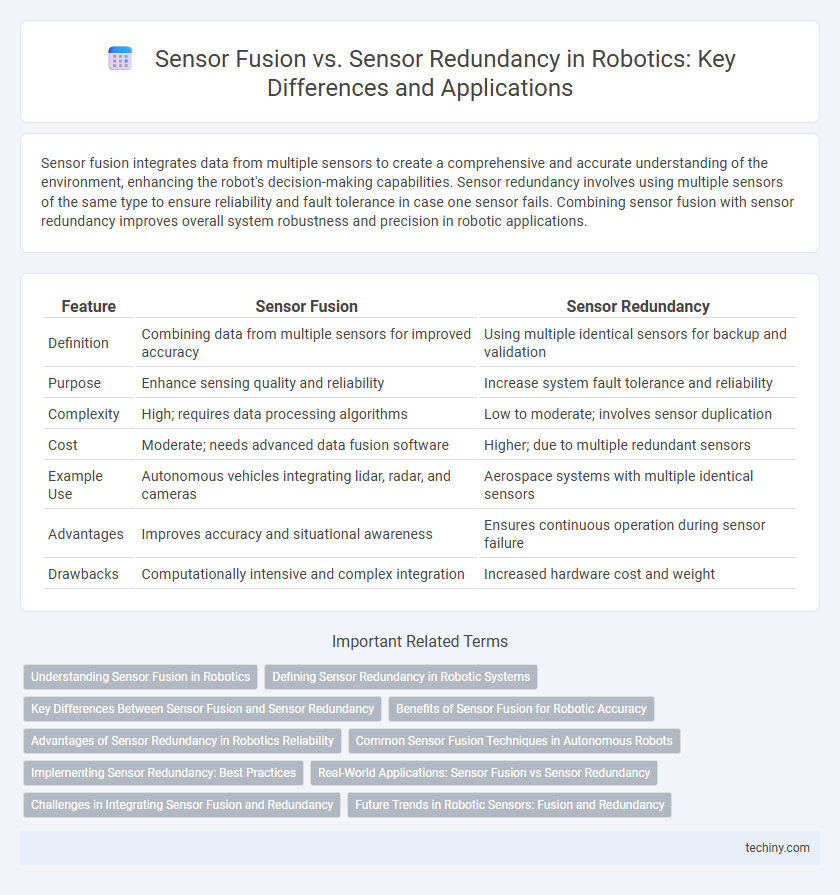

Sensor fusion integrates data from multiple sensors to create a comprehensive and accurate understanding of the environment, enhancing the robot's decision-making capabilities. Sensor redundancy involves using multiple sensors of the same type to ensure reliability and fault tolerance in case one sensor fails. Combining sensor fusion with sensor redundancy improves overall system robustness and precision in robotic applications.

Comparison Table

| Feature | Sensor Fusion | Sensor Redundancy |
|---|---|---|
| Definition | Combining data from multiple (often different) sensors for improved accuracy | Using multiple identical sensors for backup and validation |
| Purpose | Enhance sensing quality and reliability | Increase system fault tolerance and reliability |
| Complexity | High; requires data fusion algorithms | Low to moderate; mainly sensor duplication plus voting or cross-checking logic |
| Cost | Moderate; needs advanced data fusion software | Higher, due to duplicated sensor hardware |
| Example Use | Autonomous vehicles integrating LiDAR, radar, and cameras | Aerospace systems with multiple identical sensors |
| Advantages | Improves accuracy and situational awareness | Ensures continuous operation during sensor failure |
| Drawbacks | Computationally intensive; complex integration | Increased hardware cost and weight |

Understanding Sensor Fusion in Robotics

Sensor fusion in robotics integrates data from multiple heterogeneous sensors into a single, more accurate representation of the environment, which improves decision-making and control. The inputs are complementary: a monocular camera captures color and texture but no direct depth, a LiDAR measures range precisely, and an IMU reports motion at a high rate. Combining them reduces uncertainty compared with relying on any single sensor. Unlike sensor redundancy, which duplicates sensors to provide backup, sensor fusion synthesizes diverse data sources to improve perception in robotic systems.

Defining Sensor Redundancy in Robotic Systems

Sensor redundancy in robotic systems uses multiple identical sensors that measure the same quantity, so that a backup measurement is available if one sensor fails. Redundancy supports robustness in two ways: the remaining sensors keep the system running after a failure, and cross-checking their readings lets the system detect a faulty sensor (identifying which one typically requires at least three channels, since two disagreeing sensors cannot out-vote each other). Redundant sensor configurations improve safety and accuracy in autonomous robots, especially in critical applications such as industrial automation and autonomous vehicles.

Key Differences Between Sensor Fusion and Sensor Redundancy

The two approaches answer different questions. Sensor fusion combines different sensor types whose strengths complement each other, producing a better estimate than any one sensor could provide; its main goal is situational awareness. Sensor redundancy duplicates the same sensor type so that the system keeps working, and can detect faults, when one unit fails; its main goal is fault tolerance rather than data integration. The two are not mutually exclusive, and many systems feed redundant sensors into a fusion algorithm.

Benefits of Sensor Fusion for Robotic Accuracy

Sensor fusion improves robotic accuracy by integrating data from multiple sensors, producing a more reliable and precise estimate of the environment than sensor redundancy alone. Weighting each input by its reliability reduces noise and lets one sensor compensate for another's limitations, improving decision-making and navigation in complex tasks. Recursive estimators such as the Kalman filter update the estimate as each new measurement arrives, which makes real-time processing practical for autonomous robots operating in dynamic settings.
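To make the noise-reduction claim concrete, the minimal sketch below fuses two independent readings of the same range by inverse-variance weighting; for a scalar state, the Kalman filter's measurement update reduces to this same weighting. It assumes unbiased, independent sensors with known variances, and the function name `fuse_inverse_variance` and the lidar/radar values are hypothetical.

```python
def fuse_inverse_variance(measurements):
    """Fuse independent, unbiased estimates of the same quantity.

    measurements: list of (value, variance) pairs with variance > 0.
    Returns (fused_value, fused_variance).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    # Weight each reading by 1/variance, so less noisy sensors count for more.
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    # 1 / sum(1/variance_i) is never larger than the smallest input variance.
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Hypothetical range readings to the same obstacle: (metres, variance in m^2).
lidar = (10.2, 0.01)
radar = (10.8, 0.09)
value, variance = fuse_inverse_variance([lidar, radar])
print(f"fused range: {value:.3f} m, variance: {variance:.4f} m^2")
```

Here the fused variance is 1 / (1/0.01 + 1/0.09) = 0.009 m², lower than the better sensor's 0.01 m², while the fused value (10.26 m) leans toward the less noisy lidar. The weighting is only as good as the variances supplied, which in practice come from calibration.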

Advantages of Sensor Redundancy in Robotics Reliability

Sensor redundancy significantly enhances robotics reliability by providing backup data streams, ensuring continuous operation even if one sensor fails. This approach improves fault tolerance and error detection, crucial for maintaining performance in safety-critical applications. Redundant sensors also enable cross-verification of measurements, reducing the likelihood of inaccurate data affecting robotic decision-making.
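Cross-verification is often implemented as a voter. The sketch below assumes three time-aligned sensors measuring the same quantity and uses median voting (sometimes called mid-value select), which is one common scheme among several; `vote_median` and the readings are hypothetical.

```python
import statistics


def vote_median(readings):
    """Return the median of redundant readings, skipping channels with no data.

    readings: floats from sensors measuring the same quantity at the same time;
    None marks a channel that reported nothing (e.g. a timeout).
    """
    valid = [r for r in readings if r is not None]
    if not valid:
        raise RuntimeError("all redundant channels failed")
    # The median ignores one extreme value out of three, masking a single fault.
    return statistics.median(valid)


# Hypothetical triple-redundant temperature sensors (deg C); channel B is stuck high.
print(vote_median([71.9, 250.0, 72.1]))  # 72.1
print(vote_median([71.9, None, 72.1]))   # 72.0, the mean of the two remaining channels
```

With three channels, the median masks any single faulty value, however extreme. Once only two healthy channels remain, the median becomes their mean, so a second fault can no longer be out-voted.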

Common Sensor Fusion Techniques in Autonomous Robots

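Common sensor fusion techniques in autonomous robots include Kalman filters, particle filters, and complementary filters, which combine data from heterogeneous sensors such as LiDAR, cameras, and IMUs. Kalman filters, with extended and unscented variants for nonlinear systems, assume Gaussian noise and track a mean and covariance. Particle filters represent the estimate with weighted samples, so they can handle non-Gaussian or multimodal beliefs at a higher computational cost. Complementary filters blend a high-pass-filtered signal from one sensor with a low-pass-filtered signal from another, as in gyroscope-plus-accelerometer attitude estimation. All three merge noisy, partial sensor inputs into a cohesive state estimate, which improves decision-making and navigation robustness compared with simple sensor redundancy, where multiple identical sensors provide fault tolerance without integrating diverse data streams.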
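Of the three techniques, the complementary filter is the shortest to show. The minimal sketch below blends a gyroscope (smooth, but it drifts when integrated) with an accelerometer (noisy, but drift-free) to estimate pitch. It assumes a hypothetical axis convention in which pitch = atan2(ax, az); the function name, `alpha = 0.98`, and the simulated data are illustrative choices, not values from any particular robot.

```python
import math


def complementary_filter(gyro_rates, accel_samples, dt, alpha=0.98):
    """Estimate pitch (rad) by blending integrated gyro rate with accelerometer tilt.

    gyro_rates: pitch rates in rad/s (smooth, but biased, so integration drifts).
    accel_samples: (ax, az) pairs in a consistent unit (noisy, but drift-free).
    dt: sample period in seconds.
    alpha: weight on the gyro path; (1 - alpha) goes to the accelerometer.
    """
    ax0, az0 = accel_samples[0]
    pitch = math.atan2(ax0, az0)  # initialise from the accelerometer
    estimates = []
    for rate, (ax, az) in zip(gyro_rates, accel_samples):
        accel_pitch = math.atan2(ax, az)  # assumed axis convention
        # Integrating the gyro passes fast changes; the accelerometer term slowly
        # pulls the estimate back, cancelling long-term drift.
        pitch = alpha * (pitch + rate * dt) + (1.0 - alpha) * accel_pitch
        estimates.append(pitch)
    return estimates


# Hypothetical 10 s of data at 100 Hz: the robot is level, but the gyro has a 0.01 rad/s bias.
dt, n, bias = 0.01, 1000, 0.01
estimates = complementary_filter([bias] * n, [(0.0, 9.81)] * n, dt)
print(f"gyro-only drift: {bias * n * dt:.3f} rad, fused: {estimates[-1]:.4f} rad")
```

Integrating the biased gyro alone would drift to 0.1 rad after 10 s; the blended estimate instead settles near alpha * bias * dt / (1 - alpha) = 0.0049 rad. A Kalman filter performs a similar blend but derives the weighting from modeled noise statistics rather than a fixed alpha.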

Implementing Sensor Redundancy: Best Practices

Implementing sensor redundancy in robotics means integrating multiple sensors of the same type so that the system tolerates component failures and stays reliable. Best practices include calibrating sensors regularly so that redundant channels agree, validating data by cross-checking channels against each other, and designing modular sensor networks to simplify maintenance and upgrades. Synchronized timestamps and real-time diagnostics, which detect and isolate a disagreeing sensor, make redundant sensor systems more effective in dynamic robotic environments.
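Real-time diagnostics for a redundant set can be as simple as comparing each channel with the others over time. The sketch below, a hypothetical `RedundancyMonitor` class, isolates a channel only after it disagrees with the median of the healthy channels for several consecutive cycles, so a single noise spike does not trigger a false alarm. The threshold and persistence values are assumptions that would normally come from sensor specifications and calibration data.

```python
import statistics


class RedundancyMonitor:
    """Isolate redundant sensor channels that persistently disagree with the rest."""

    def __init__(self, n_channels, threshold, persistence=3):
        self.n_channels = n_channels
        self.threshold = threshold      # max allowed deviation from the healthy median
        self.persistence = persistence  # consecutive violations before isolation
        self.strikes = [0] * n_channels
        self.faulty = set()

    def healthy(self):
        return [i for i in range(self.n_channels) if i not in self.faulty]

    def update(self, readings):
        """Check one cycle of readings and return the voted value from healthy channels."""
        channels = self.healthy()
        reference = statistics.median(readings[i] for i in channels)
        for i in channels:
            if abs(readings[i] - reference) > self.threshold:
                self.strikes[i] += 1
                if self.strikes[i] >= self.persistence:
                    self.faulty.add(i)  # exclude this channel from future voting
            else:
                self.strikes[i] = 0     # a good reading resets the counter
        remaining = self.healthy()
        if not remaining:
            raise RuntimeError("no healthy channels left")
        return statistics.median(readings[i] for i in remaining)


# Hypothetical readings: channel 2 sticks at 14.0 from the second cycle onward.
monitor = RedundancyMonitor(n_channels=3, threshold=0.5)
for readings in [[10.0, 10.1, 10.0], [10.0, 10.1, 14.0], [10.1, 10.0, 14.0], [10.0, 10.0, 14.0]]:
    value = monitor.update(readings)
print(monitor.faulty, value)  # {2} 10.0
```

The persistence counter trades detection speed for fewer false alarms: a larger value tolerates longer transients but keeps a failed sensor in the vote for more cycles.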

Real-World Applications: Sensor Fusion vs Sensor Redundancy

Sensor fusion integrates data from multiple heterogeneous sensors to create a comprehensive environmental model, enhancing accuracy and reliability in autonomous robots, drones, and self-driving cars. Sensor redundancy involves using multiple identical sensors to improve fault tolerance and system robustness, commonly applied in mission-critical aerospace and industrial robotics where failure is not an option. Real-world applications favor sensor fusion for complex perception tasks requiring diverse data types, while sensor redundancy is preferred when system reliability and fault detection are paramount.

Challenges in Integrating Sensor Fusion and Redundancy

Integrating sensor fusion and sensor redundancy in robotics poses challenges such as managing increased computational complexity and synchronizing data in real time across sensor modalities that sample at different rates and with different latencies. Balancing data reliability against processing latency requires algorithms that can handle conflicting or redundant information without compromising system responsiveness. Effective calibration and fault detection mechanisms are essential to maintain accuracy and robustness in dynamic environments.
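Synchronization is often the first practical hurdle, because a camera and an IMU rarely sample at the same instants. A common approach, sketched below under the assumption that both sensors are timestamped against a shared clock, is to resample the second sensor onto the first one's timestamps by linear interpolation. The function names and sample data are hypothetical.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t):
    """Linearly interpolate a sampled signal at time t.

    timestamps must be strictly increasing; a t outside the sampled range
    returns None rather than extrapolating.
    """
    if not timestamps or t < timestamps[0] or t > timestamps[-1]:
        return None
    i = bisect_left(timestamps, t)
    if timestamps[i] == t:
        return values[i]
    # Here timestamps[i - 1] < t < timestamps[i].
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def align(reference_times, other_times, other_values):
    """Resample another sensor's signal onto the reference sensor's timestamps."""
    return [(t, interpolate_at(other_times, other_values, t)) for t in reference_times]


# Hypothetical data: IMU yaw (degrees) at 10 Hz, camera frames offset by 50 ms.
imu_times = [0.0, 0.1, 0.2]
imu_yaw = [0.0, 1.0, 2.0]
camera_times = [0.05, 0.15, 0.25]
for t, yaw in align(camera_times, imu_times, imu_yaw):
    print(t, yaw)
```

Returning None outside the sampled range avoids extrapolating stale data. Linear interpolation assumes the signal changes smoothly between samples; a real pipeline would also compensate for each sensor's known latency before interpolating.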

Future Trends in Robotic Sensors: Fusion and Redundancy

Future trends in robotic sensors emphasize enhanced sensor fusion techniques to improve data accuracy and environmental perception by combining inputs from diverse sensor types like LiDAR, cameras, and ultrasonic sensors. Sensor redundancy remains crucial for system reliability, ensuring continuous operation despite sensor failures through overlapping functionalities in critical applications such as autonomous vehicles and industrial automation. Integration of AI-driven algorithms with sensor fusion and redundancy frameworks is accelerating, enabling real-time decision-making and adaptive responses in complex, dynamic environments.

Sensor Fusion vs Sensor Redundancy Infographic

techiny.com
