Simultaneous Localization and Mapping (SLAM) enables a robot to build a map of an unknown environment while simultaneously estimating its own location within that map. Solving both problems together is crucial for autonomous navigation, because it lets robots operate in uncharted or changing areas where no prior map exists. SLAM algorithms fuse data from several sensors to improve real-time positioning and environmental understanding.

Table of Comparison

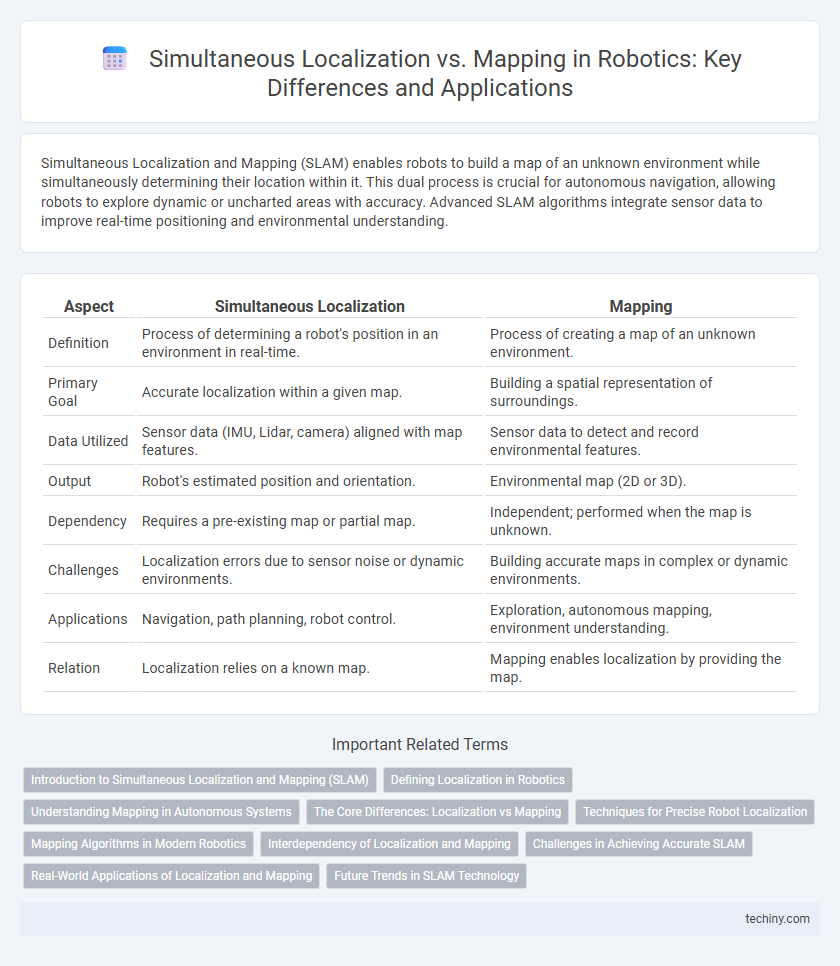

| Aspect | Simultaneous Localization | Mapping |
|---|---|---|
| Definition | Process of determining a robot's position in an environment in real time. | Process of creating a map of an unknown environment. |
| Primary Goal | Accurate localization within a given map. | Building a spatial representation of surroundings. |
| Data Utilized | Sensor data (IMU, LiDAR, camera) aligned with map features. | Sensor data to detect and record environmental features. |
| Output | Robot's estimated position and orientation. | Environmental map (2D or 3D). |
| Dependency | Requires a pre-existing map or partial map. | Needs no prior map, but depends on estimates of the robot's pose. |
| Challenges | Localization errors due to sensor noise or dynamic environments. | Building accurate maps in complex or dynamic environments. |
| Applications | Navigation, path planning, robot control. | Exploration, autonomous mapping, environment understanding. |
| Relation | Localization relies on a known map. | Mapping enables localization by providing the map. |

Introduction to Simultaneous Localization and Mapping (SLAM)

Simultaneous Localization and Mapping (SLAM) is a core robotics technique that enables autonomous robots to create a map of an unknown environment while simultaneously estimating their location within it. The process fuses data from sensors such as LiDAR, cameras, and IMUs to build accurate spatial representations and support real-time navigation. SLAM algorithms are foundational for robot autonomy in applications like self-driving cars, drones, and service robots operating in dynamic or previously unexplored spaces.

Defining Localization in Robotics

Localization in robotics refers to the process by which a robot determines its position within a given environment, using sensor data and algorithms such as Monte Carlo Localization or Extended Kalman Filters. Accurate localization is critical for effective navigation and task execution, because it tells the robot where it is relative to landmarks or map features. Localization methods typically integrate real-time sensor inputs from LiDAR, cameras, or IMUs to continuously update the robot's estimated position within a pre-existing map or relative frame.
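The predict-and-update cycle behind Kalman-style localization can be sketched in a few lines. The example below is a deliberately simplified, linear 1D Kalman filter (not a full Extended Kalman Filter): a robot moves along a corridor, and each step combines an odometry reading with a noisy position measurement. The `Belief` class, the function names, and all noise values are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    mean: float  # estimated position along the corridor (m)
    var: float   # variance of that estimate (m^2)


def predict(b: Belief, motion: float, motion_var: float) -> Belief:
    """Motion step: shift the estimate by the odometry reading.
    Uncertainty grows because odometry is noisy."""
    return Belief(b.mean + motion, b.var + motion_var)


def update(b: Belief, z: float, meas_var: float) -> Belief:
    """Measurement step: blend prediction and measurement.
    The Kalman gain k weights whichever source is more certain."""
    k = b.var / (b.var + meas_var)
    return Belief(b.mean + k * (z - b.mean), (1.0 - k) * b.var)


# Hypothetical run: three 1 m moves, each followed by a position measurement.
belief = Belief(mean=0.0, var=1.0)
for motion, z in [(1.0, 1.2), (1.0, 1.9), (1.0, 3.1)]:
    belief = predict(belief, motion, motion_var=0.1)
    belief = update(belief, z, meas_var=0.2)

print(belief)
```

Each prediction widens the variance and each measurement narrows it, so the estimate ends up near 3 m with far less uncertainty than the initial belief.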

Understanding Mapping in Autonomous Systems

Mapping in autonomous systems involves creating a detailed representation of the environment using data from sensors such as LiDAR, cameras, and sonar. Precise mapping enables robots to identify obstacles, plan paths, and perform tasks efficiently within unknown or dynamic surroundings. High-resolution maps combined with real-time updates enhance the reliability and accuracy of autonomous navigation and decision-making.

The Core Differences: Localization vs Mapping

Localization in robotics refers to the process of determining a robot's precise position within a known environment using sensors and pre-existing maps. Mapping involves creating a detailed representation of an unknown environment by collecting sensor data to build spatial layouts. The core difference is that localization estimates position against an existing map, whereas mapping generates and updates the map itself.

Techniques for Precise Robot Localization

Precise robot localization often relies on simultaneous localization and mapping (SLAM) algorithms that integrate data from LiDAR, cameras, and inertial measurement units (IMUs) to construct a spatial map while estimating the robot's position within it. Probabilistic methods such as particle filters and extended Kalman filters improve localization accuracy by explicitly modeling uncertainty and sensor noise. Graph-based approaches add optimization and real-time loop closure detection, which recognizes previously visited places, to continuously refine the robot's trajectory and environmental map.
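To make graph-based optimization with loop closure concrete, here is a minimal 1D sketch. Poses are nodes, each edge `(i, j, d)` records that pose `j` was measured to be `d` metres ahead of pose `i`, and one extra edge states that the robot recognized its starting point. The solver is a plain Gauss-Seidel relaxation of the equal-weight least-squares problem, chosen for brevity; real SLAM back ends use sparse nonlinear solvers. The function name and all measurements are assumptions.

```python
def optimize_pose_graph(n_poses, edges, iterations=200):
    """Least-squares pose estimates for a 1D pose graph.

    Pose 0 is held at 0.0 as the anchor. Every other pose is repeatedly
    set to the average of the positions its edges imply, which is the
    exact coordinate-wise minimizer when all edges have equal weight.
    """
    x = [0.0] * n_poses
    for _ in range(iterations):
        for k in range(1, n_poses):
            implied = []
            for i, j, d in edges:
                if j == k:
                    implied.append(x[i] + d)
                elif i == k:
                    implied.append(x[j] - d)
            if implied:
                x[k] = sum(implied) / len(implied)
    return x


# Hypothetical drive: out 2 m and back 2 m, with odometry that over-reports
# forward motion and under-reports the return trip.
odometry = [(0, 1, 1.1), (1, 2, 1.1), (2, 3, -0.9), (3, 4, -0.9)]
# Loop closure: at pose 4 the robot recognizes it is back at pose 0.
loop_closure = [(4, 0, 0.0)]

# Dead reckoning simply integrates odometry, so the drift accumulates.
dead_reckoned = [0.0]
for _, _, d in odometry:
    dead_reckoned.append(dead_reckoned[-1] + d)

optimized = optimize_pose_graph(5, odometry + loop_closure)

print("dead reckoning:", [round(p, 2) for p in dead_reckoned])
print("with loop closure:", [round(p, 2) for p in optimized])
```

In this toy case the 0.4 m of accumulated drift is spread evenly over the five edges, so the final pose moves from roughly 0.4 m to about 0.08 m from the true starting point.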

Mapping Algorithms in Modern Robotics

Mapping algorithms in modern robotics use techniques such as occupancy grid mapping (cells that store the probability of being occupied), feature-based mapping (positions of distinct landmarks), and semantic mapping (labels for objects and places) to create detailed environmental representations. These algorithms enable robots to interpret sensor data from LiDAR, cameras, and IMUs, constructing accurate 2D or 3D maps essential for navigation and task execution. State-of-the-art approaches integrate probabilistic models and deep learning to enhance the robustness and adaptability of mapping in dynamic and unstructured environments.
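As an illustration of occupancy grid mapping, the sketch below applies the standard log-odds update to a single 1D ray of cells. The inverse sensor model (0.7 for a hit, 0.3 for a pass-through), the grid size, and the function names are assumptions for this example, and the robot pose is taken as known.

```python
import math

# Assumed inverse sensor model, expressed as log-odds increments.
L_OCC = math.log(0.7 / 0.3)   # evidence added to the cell where the beam ended
L_FREE = math.log(0.3 / 0.7)  # evidence added to cells the beam passed through


def update_ray(log_odds, robot_cell, hit_cell):
    """Integrate one range reading along a 1D ray of cells."""
    for c in range(robot_cell + 1, hit_cell):
        log_odds[c] += L_FREE
    log_odds[hit_cell] += L_OCC


def probability(l):
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(l))


grid = [0.0] * 10  # log-odds 0.0 means p = 0.5, i.e. unknown
for _ in range(3):  # three identical readings: obstacle seen in cell 6
    update_ray(grid, robot_cell=0, hit_cell=6)

print([round(probability(l), 3) for l in grid])
```

Working in log-odds turns each Bayesian update into an addition, so repeated consistent readings push a cell steadily toward free or occupied, while cells beyond the obstacle stay at 0.5.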

Interdependency of Localization and Mapping

Simultaneous Localization and Mapping (SLAM) relies on the interdependency between localization and mapping, where accurate robot position estimation is essential for building a reliable map, and a precise map is crucial for effective localization. Errors in localization propagate to the mapping process, causing map inaccuracies, while incomplete or distorted maps hinder the robot's ability to localize itself within the environment. Advanced SLAM algorithms use iterative feedback loops to continuously refine both localization and mapping, enhancing overall robotic navigation and environment understanding.
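The feedback between the two estimates can be seen in a toy 1D scenario. In the sketch below, a robot with biased odometry places a new landmark in the wrong spot, then re-observes an already mapped landmark, corrects its pose, and maps the new landmark correctly. Range measurements are assumed noise-free to keep the effect visible, and every name and number is hypothetical.

```python
def map_landmark(pose_estimate, measured_range):
    """Mapping step: place a landmark using the current pose estimate."""
    return pose_estimate + measured_range


def relocalize(landmark_in_map, measured_range):
    """Localization step: recover the pose from a mapped landmark."""
    return landmark_in_map - measured_range


true_pose, est_pose = 0.0, 0.0
landmark_a, landmark_b = 10.0, 8.0  # true positions, unknown to the robot

# At the start the pose is known exactly, so landmark A is mapped correctly.
mapped_a = map_landmark(est_pose, landmark_a - true_pose)

# Drive five 1 m steps with odometry that over-reports each step by 5%.
for _ in range(5):
    true_pose += 1.0
    est_pose += 1.05

# Localization error leaks into the map: B is placed using the drifted pose.
mapped_b_drifted = map_landmark(est_pose, landmark_b - true_pose)

# The map feeds back into localization: re-observing A corrects the pose,
# and B can then be mapped from the corrected estimate.
est_pose = relocalize(mapped_a, landmark_a - true_pose)
mapped_b_corrected = map_landmark(est_pose, landmark_b - true_pose)

print(round(mapped_b_drifted, 2), round(mapped_b_corrected, 2))
```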

Challenges in Achieving Accurate SLAM

Achieving accurate Simultaneous Localization and Mapping (SLAM) presents challenges such as sensor noise, dynamic environmental changes, and computational limitations. Robust data association and loop closure detection are critical to prevent map drift and localization errors. Furthermore, real-time processing constraints require efficient algorithms to maintain accuracy without sacrificing speed or scalability.
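Data association, mentioned above, can be sketched as nearest-neighbour matching with a distance gate: each observation is matched to the closest known landmark, unless nothing lies within the gate, in which case it is treated as new or spurious. This is one simple strategy among several; the function name, the 0.5 m gate, and the coordinates are assumptions, and observations are assumed to be already expressed in the map frame.

```python
def associate(observations, landmarks, gate=0.5):
    """Match each observation to its nearest landmark within `gate` metres.

    Returns a list of (observation_index, landmark_index) pairs, where
    landmark_index is None if no landmark is close enough.
    """
    matches = []
    for oi, (ox, oy) in enumerate(observations):
        best, best_d2 = None, gate * gate
        for li, (lx, ly) in enumerate(landmarks):
            d2 = (ox - lx) ** 2 + (oy - ly) ** 2
            if d2 <= best_d2:
                best, best_d2 = li, d2
        matches.append((oi, best))
    return matches


landmarks = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0)]
observations = [(0.1, -0.1), (2.2, 1.9), (5.0, 5.0)]

matches = associate(observations, landmarks)
print(matches)
```

The gate is what protects the map from bad matches: the third observation is far from every landmark, so it is left unmatched rather than forced onto the nearest one.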

Real-World Applications of Localization and Mapping

Simultaneous Localization and Mapping (SLAM) enables autonomous robots to create real-time maps of unknown environments while accurately tracking their position within them, a capability that is essential for applications like self-driving cars, drone navigation, and warehouse automation. SLAM algorithms process sensor data from LiDAR, cameras, and IMUs to construct detailed 3D maps, facilitating obstacle avoidance and path planning in dynamic, cluttered spaces. Integrating SLAM with artificial intelligence enhances robots' ability to operate efficiently in complex settings such as urban infrastructure inspection and agricultural field monitoring.

Future Trends in SLAM Technology

Emerging trends in SLAM technology emphasize the integration of deep learning algorithms with traditional probabilistic methods to enhance real-time environmental mapping and localization accuracy. Advances in sensor fusion, combining data from LiDAR, cameras, and inertial measurement units, enable more robust and scalable SLAM systems suitable for dynamic and large-scale environments. Research is also focusing on cloud-based SLAM and collaborative multi-robot systems to facilitate shared map generation and improve computational efficiency.

Simultaneous Localization vs Mapping Infographic

techiny.com
