SLAM (Simultaneous Localization and Mapping) enables robots to build a map of an unknown environment while simultaneously determining their position within it, making it essential for autonomous navigation in dynamic or unexplored settings. Localization, on the other hand, refers to a robot's ability to determine its position within a pre-existing map, relying on environmental features or landmarks for accuracy. Understanding the distinction between SLAM and localization is crucial for optimizing robotics applications, as SLAM suits unknown terrains, whereas localization enhances efficiency in known environments.

Table of Comparison

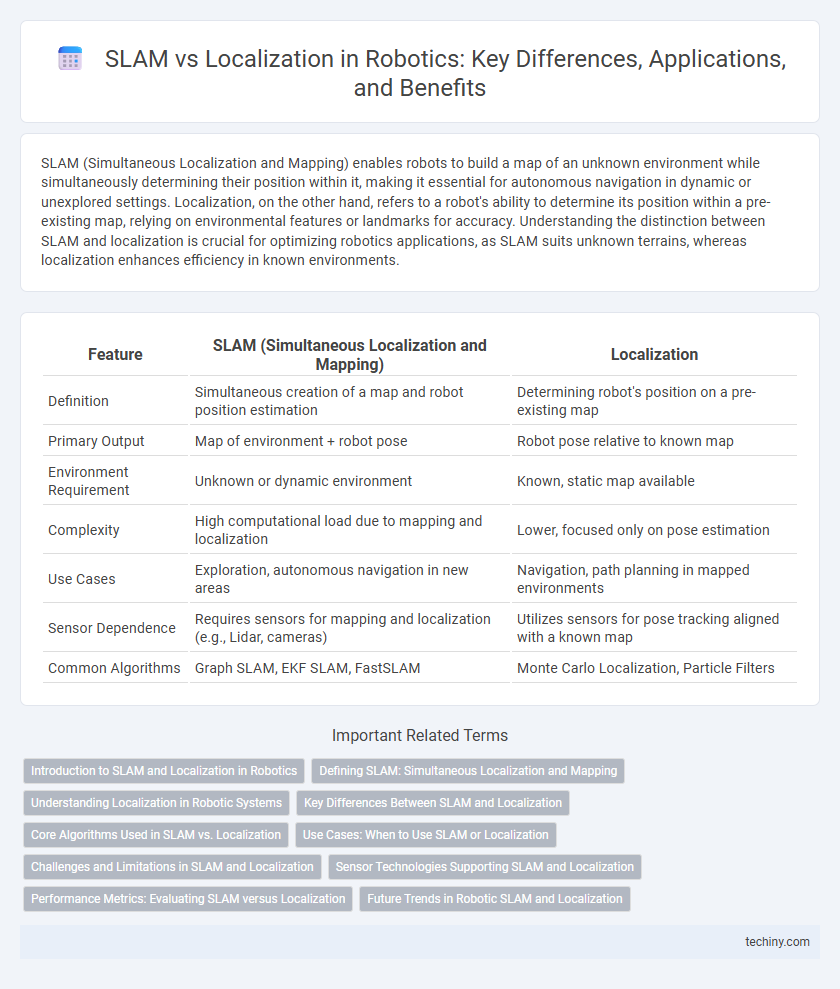

| Feature | SLAM (Simultaneous Localization and Mapping) | Localization |
|---|---|---|
| Definition | Builds a map while simultaneously estimating the robot's position in it | Determines the robot's position on a pre-existing map |
| Primary Output | Map of the environment + robot pose | Robot pose relative to the known map |
| Environment Requirement | Unknown or changing environment; no prior map needed | Known map must be available (typically assumed static) |
| Complexity | High: the map and the pose are estimated jointly | Lower: only the pose is estimated |
| Use Cases | Exploration, autonomous navigation in new areas | Navigation and path planning in mapped environments |
| Sensor Dependence | Requires sensors for both mapping and localization (e.g., LiDAR, cameras) | Uses sensors to track the pose against the known map |
| Common Algorithms | Graph SLAM, EKF SLAM, FastSLAM | Monte Carlo Localization (particle filter), EKF localization |

Introduction to SLAM and Localization in Robotics

Simultaneous Localization and Mapping (SLAM) enables robots to build a map of an unknown environment while simultaneously tracking their position within it, using sensors such as LiDAR, cameras, and IMUs. Localization focuses solely on determining the robot's pose relative to a known map, using techniques such as Kalman filters and particle filters (the basis of Monte Carlo localization). Together, SLAM and localization are fundamental to autonomous navigation, obstacle avoidance, and interaction with the environment in robotic systems.

Defining SLAM: Simultaneous Localization and Mapping

Simultaneous Localization and Mapping (SLAM) is a fundamental robotic process that enables a robot to construct a map of an unknown environment while simultaneously estimating its location within that map. Unlike traditional localization, which assumes a pre-existing map, SLAM fuses sensor data through probabilistic algorithms to perform both tasks in real time, which is crucial for autonomous navigation in dynamic or unstructured settings. Key components of SLAM include sensor fusion, probabilistic estimation, and loop closure detection (recognizing a previously visited place so that accumulated error can be corrected), which together improve the accuracy and reliability of both the robot's position estimate and the map.
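To make the joint estimation concrete, here is a minimal sketch of a one-dimensional, EKF-style SLAM filter. It is an illustration under simplifying assumptions, not a production algorithm: the robot moves along a line, the map consists of a single landmark at position `m`, and the sensor returns the signed distance `z = m - x`. Because these models are linear, the EKF update reduces to an ordinary Kalman filter. The function names, noise values, and readings are hypothetical.

```python
"""Minimal 1-D SLAM sketch: jointly estimate robot position x and landmark m.

Assumed (hypothetical) models:
  motion:      x' = x + u, process noise variance Q (landmark is static)
  measurement: z  = m - x, measurement noise variance R
State s = [x, m]; covariance P is a 2x2 nested list.
"""


def predict(s, P, u, Q):
    """Motion step: only the robot moves, so only its variance grows."""
    x, m = s
    return [x + u, m], [[P[0][0] + Q, P[0][1]], [P[1][0], P[1][1]]]


def update(s, P, z, R):
    """Measurement step for z = m - x, i.e. Jacobian H = [-1, 1]."""
    x, m = s
    y = z - (m - x)                                  # innovation
    PHt = [P[0][1] - P[0][0], P[1][1] - P[1][0]]     # P H^T
    S = PHt[1] - PHt[0] + R                          # H P H^T + R
    K = [PHt[0] / S, PHt[1] / S]                     # Kalman gain
    HP = [P[1][0] - P[0][0], P[1][1] - P[0][1]]      # H P
    new_s = [x + K[0] * y, m + K[1] * y]
    new_P = [[P[i][j] - K[i] * HP[j] for j in range(2)] for i in range(2)]
    return new_s, new_P


def demo():
    s = [0.0, 0.0]                        # robot starts at the origin
    P = [[0.0, 0.0], [0.0, 1e6]]          # landmark position essentially unknown
    s, P = update(s, P, z=5.0, R=0.1)     # landmark observed 5 m ahead
    s, P = predict(s, P, u=1.0, Q=0.01)   # drive 1 m forward
    s, P = update(s, P, z=4.0, R=0.1)     # landmark now observed 4 m ahead
    return s, P


if __name__ == "__main__":
    state, cov = demo()
    print("state:", state)
    print("covariance:", cov)
```

After the second observation the off-diagonal term `P[0][1]` becomes nonzero: the filter has learned that errors in the robot estimate and the landmark estimate are correlated. Tracking these cross-correlations is part of what makes SLAM more expensive than localization, where the map is treated as fixed.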

Understanding Localization in Robotic Systems

Localization in robotic systems refers to determining a robot's position and orientation (its pose) within a known environment, using data from sensors such as LiDAR, cameras, and odometry. Unlike SLAM (Simultaneous Localization and Mapping), which builds a map of an unknown environment while localizing within it, localization assumes a pre-existing map and uses it to track the robot's pose. Localization algorithms such as particle filters and Kalman filters are essential for navigation, obstacle avoidance, and task execution in autonomous robots.
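As an illustration of map-based localization, the following is a minimal sketch of Monte Carlo Localization (a particle filter) for a robot in a one-dimensional corridor. The map, the binary "door detector" sensor, its 90% reliability, and all numeric values are hypothetical assumptions chosen to keep the example small. Each step applies the three classic particle-filter stages: move the particles by odometry with noise, weight them by how well the known map explains the reading, and resample.

```python
import random

# Hypothetical pre-existing map: door positions (m) along a 10 m corridor.
DOORS = [2.5, 3.5, 7.5]
CORRIDOR = 10.0


def sees_door(x):
    """Binary sensor model: True if x is within 0.5 m of a mapped door."""
    return any(abs(x - d) < 0.5 for d in DOORS)


def likelihood(z, x):
    """Assumed sensor reliability: the reading agrees with the map 90% of the time."""
    return 0.9 if sees_door(x) == z else 0.1


def mcl_step(particles, u, z, rng, motion_sigma=0.1):
    """One Monte Carlo Localization step: motion, weighting, resampling."""
    # Motion update: apply odometry u plus Gaussian noise, clamped to the corridor.
    moved = [min(max(p + u + rng.gauss(0.0, motion_sigma), 0.0), CORRIDOR)
             for p in particles]
    # Measurement update: weight each particle by how well the map explains z.
    weights = [likelihood(z, p) for p in moved]
    # Resampling: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def demo(n=1000, seed=0):
    rng = random.Random(seed)
    # Global localization: the start is unknown, so spread particles uniformly.
    particles = [rng.uniform(0.0, CORRIDOR) for _ in range(n)]
    true_x = 1.5
    for _ in range(7):
        true_x += 1.0                  # robot drives 1 m per step
        z = sees_door(true_x)          # noiseless reading, for simplicity
        particles = mcl_step(particles, 1.0, z, rng)
    return true_x, particles


if __name__ == "__main__":
    true_x, particles = demo()
    near = sum(abs(p - true_x) < 1.0 for p in particles) / len(particles)
    print(f"true x = {true_x}, fraction of particles within 1 m: {near:.2f}")
```

Note that the map (`DOORS`) is only read, never updated; that is the defining difference from the SLAM sketch, where the landmark position is part of the estimated state.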

Key Differences Between SLAM and Localization

SLAM (Simultaneous Localization and Mapping) builds a map of an unknown environment while determining the robot's position within it, whereas localization assumes a pre-existing map and focuses solely on estimating the robot's pose in that map. SLAM uses data from sensors such as LiDAR or cameras to solve the mapping and localization problems concurrently, making it essential for autonomous robots operating in dynamic or unexplored spaces. Localization techniques, such as particle filters or Kalman filters, rely on an accurate, up-to-date map to refine the robot's pose, but they do not update the map itself.
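One way to see this difference is to compare what each estimator keeps in its state vector. The sketch below assumes a planar robot with pose (x, y, θ) and point landmarks stored as (x, y), as in a textbook EKF SLAM formulation. EKF localization estimates only the pose because the map is given, while EKF SLAM adds every landmark to the state and maintains a full covariance over all of it. The constant and function names are hypothetical.

```python
POSE_DIM = 3       # planar pose: x, y, heading
LANDMARK_DIM = 2   # point landmark: x, y


def state_size(num_landmarks: int, mapping: bool) -> int:
    """Length of the estimated state vector.

    mapping=False (localization): landmarks belong to the given map and are
    not estimated. mapping=True (EKF SLAM): every landmark joins the state.
    """
    return POSE_DIM + (LANDMARK_DIM * num_landmarks if mapping else 0)


def covariance_entries(num_landmarks: int, mapping: bool) -> int:
    """Number of entries in the full covariance matrix for that state."""
    n = state_size(num_landmarks, mapping)
    return n * n


if __name__ == "__main__":
    for landmarks in (10, 100, 1000):
        print(landmarks,
              covariance_entries(landmarks, mapping=False),
              covariance_entries(landmarks, mapping=True))
```

With 1,000 landmarks the SLAM covariance has 4,012,009 entries, versus 9 for localization. This quadratic growth illustrates the higher computational load of SLAM noted in the comparison table.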

Core Algorithms Used in SLAM vs. Localization

Core algorithms in SLAM (Simultaneous Localization and Mapping) include the Extended Kalman Filter (EKF), particle filters, and graph-based optimization, all of which estimate the robot's position and the map of the environment together. Localization algorithms primarily use methods such as Monte Carlo Localization (MCL) and the EKF to estimate the robot's pose given a pre-existing map, focusing solely on position tracking without building a map. Fusing data from sensors such as LiDAR, RGB-D cameras, and IMUs improves the accuracy and robustness of both SLAM and localization systems.
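Graph-based optimization can be illustrated with a toy one-dimensional pose graph. Each node is a robot position, each edge is a measured displacement between two positions, and a loop-closure edge relates the current pose back to an earlier one. The sketch solves the weighted least-squares problem through its normal equations with a small hand-written Gaussian elimination, so it needs only the standard library. The `(i, j, z, w)` edge format and the choice to anchor pose 0 at the origin are assumptions for this example; practical back ends work in 2D or 3D with nonlinear solvers.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):                      # back substitution
        acc = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - acc) / M[r][r]
    return x


def optimize_pose_graph(num_poses, edges):
    """Least-squares 1-D pose graph with pose 0 anchored at the origin.

    edges: iterable of (i, j, z, w), meaning "x_j - x_i was measured as z"
    with weight w (inverse variance). Returns [x_0, ..., x_{N-1}].
    """
    n = num_poses - 1                          # unknowns x_1 .. x_{N-1}
    H = [[0.0] * n for _ in range(n)]          # information matrix J^T W J
    g = [0.0] * n                              # right-hand side J^T W z
    for i, j, z, w in edges:
        terms = ((i, -1.0), (j, 1.0))          # Jacobian of (x_j - x_i)
        for a, ja in terms:
            if a == 0:
                continue                       # anchored pose is not a variable
            g[a - 1] += w * ja * z
            for c, jc in terms:
                if c != 0:
                    H[a - 1][c - 1] += w * ja * jc
    return [0.0] + solve_linear(H, g)


if __name__ == "__main__":
    edges = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0), (2, 3, 1.1, 1.0),
             (0, 3, 3.0, 1.0)]                 # last edge: loop closure
    print(optimize_pose_graph(4, edges))
```

Here odometry reports 1.1 m for each of three steps, while the loop closure says the robot ended 3.0 m from its start. With equal weights, the optimizer spreads the 0.3 m disagreement over all four constraints, giving poses of approximately 0, 1.025, 2.05, and 3.075 m.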

Use Cases: When to Use SLAM or Localization

SLAM (Simultaneous Localization and Mapping) is ideal for unknown or dynamic environments where robots must build a map while determining their position, and it is commonly used in autonomous exploration, search and rescue, and warehouse automation. Localization excels in well-mapped, static settings, such as industrial automation or indoor navigation, where robots rely on pre-existing maps for precise positioning. The choice between SLAM and localization depends on whether the environment has already been mapped, and whether the robot must create a map in real time or only track its position on a known map.

Challenges and Limitations in SLAM and Localization

SLAM (Simultaneous Localization and Mapping) faces challenges such as high computational complexity and sensitivity to dynamic environments, which make real-time processing difficult. Localization accuracy depends heavily on the quality of the sensor data and the prior map, and it can degrade in GPS-denied or feature-poor areas. Both SLAM and localization must contend with drift over time, sensor noise, and scalability issues in large or cluttered environments.
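The drift problem can be seen in a short simulation of dead reckoning, where a robot integrates noisy odometry increments with no map matching or loop closure to correct them. The sketch assumes independent, zero-mean Gaussian noise on each one-dimensional step; the `step_sigma` value and the function name are hypothetical. Under that assumption, the RMS position error grows roughly as `step_sigma * sqrt(steps)`. Real robots often drift faster, because heading errors compound into position errors.

```python
import math
import random


def dead_reckoning_rms_error(steps, step_sigma=0.05, trials=2000, seed=1):
    """RMS position error after integrating `steps` noisy 1-D odometry increments.

    Each trial sums independent Gaussian step errors (no correction applied);
    the RMS is taken across trials.
    """
    rng = random.Random(seed)
    sq_sum = 0.0
    for _ in range(trials):
        err = 0.0
        for _ in range(steps):
            err += rng.gauss(0.0, step_sigma)   # each step adds independent noise
        sq_sum += err * err
    return math.sqrt(sq_sum / trials)


if __name__ == "__main__":
    for steps in (1, 10, 100):
        measured = dead_reckoning_rms_error(steps)
        predicted = 0.05 * math.sqrt(steps)
        print(f"{steps:4d} steps: RMS error {measured:.3f} m (theory {predicted:.3f} m)")
```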

Sensor Technologies Supporting SLAM and Localization

LiDAR and RGB-D cameras play a crucial role in SLAM (Simultaneous Localization and Mapping) by providing the dense 3D environmental data needed to build accurate maps. Localization, in contrast, often relies on GPS, inertial measurement units (IMUs), and wheel encoders to track the robot's position within a pre-existing map. Sensor fusion techniques combine data from LiDAR, IMUs, and vision sensors to improve both SLAM and localization accuracy in complex and dynamic environments.
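A simple form of sensor fusion combines two independent estimates of the same position, weighting each by the inverse of its variance; this is the one-dimensional Kalman measurement update. The sketch assumes Gaussian, uncorrelated errors, and the example readings (wheel odometry and a LiDAR scan match) and their variances are hypothetical.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Fuse two independent Gaussian estimates of the same 1-D quantity.

    Returns the inverse-variance weighted mean and the reduced variance.
    """
    k = var_a / (var_a + var_b)          # Kalman-style gain toward estimate b
    mean = mean_a + k * (mean_b - mean_a)
    var = (1.0 - k) * var_a              # equals var_a * var_b / (var_a + var_b)
    return mean, var


if __name__ == "__main__":
    # Hypothetical readings: wheel odometry 10.4 m (var 0.25), LiDAR 10.0 m (var 0.01).
    print(fuse(10.4, 0.25, 10.0, 0.01))
```

Fusing the odometry estimate of 10.4 m (variance 0.25) with the LiDAR estimate of 10.0 m (variance 0.01) gives about 10.015 m with a variance of about 0.0096: the result sits close to the more precise sensor and is more certain than either input alone.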

Performance Metrics: Evaluating SLAM versus Localization

Performance metrics for evaluating SLAM versus localization include accuracy, robustness, computational efficiency, and scalability. SLAM systems are measured by their ability to simultaneously build maps and localize within unknown environments, often assessed through trajectory error and map quality metrics. Localization focuses on precise pose estimation within a pre-existing map, optimizing for low latency and high reliability in dynamic settings.
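Trajectory error is commonly summarized as the root-mean-square of position differences between the estimated and ground-truth trajectories, often called absolute trajectory error (ATE). This sketch assumes the two 2-D trajectories are already time-synchronized and expressed in the same coordinate frame; established evaluation tools also align the trajectories (for example with a rigid-body transform) before computing the error, a step omitted here. The function name is hypothetical.

```python
import math


def ate_rmse(estimated, ground_truth):
    """RMS position error between matched 2-D trajectories.

    Assumes both lists hold (x, y) tuples that are already time-associated
    and in the same frame; no alignment step is performed.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    sq = 0.0
    for (ex, ey), (gx, gy) in zip(estimated, ground_truth):
        sq += (ex - gx) ** 2 + (ey - gy) ** 2
    return math.sqrt(sq / len(estimated))


if __name__ == "__main__":
    est = [(0.0, 0.0), (1.0, 0.0), (2.0, 1.0)]
    gt = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    print(ate_rmse(est, gt))
```

The same metric applies to both approaches: a SLAM system is scored on the trajectory it estimates while mapping, and a localization system on the poses it tracks against its given map.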

Future Trends in Robotic SLAM and Localization

Future trends in robotic SLAM and localization emphasize the integration of deep learning techniques to enhance environmental perception and map accuracy. Advances in sensor fusion, combining LiDAR, vision, and inertial measurements, are improving the robustness and scalability of real-time localization. Research is also focusing on edge computing and cloud-based frameworks that enable efficient processing and collaborative SLAM across multiple robots.

SLAM vs localization Infographic

techiny.com
