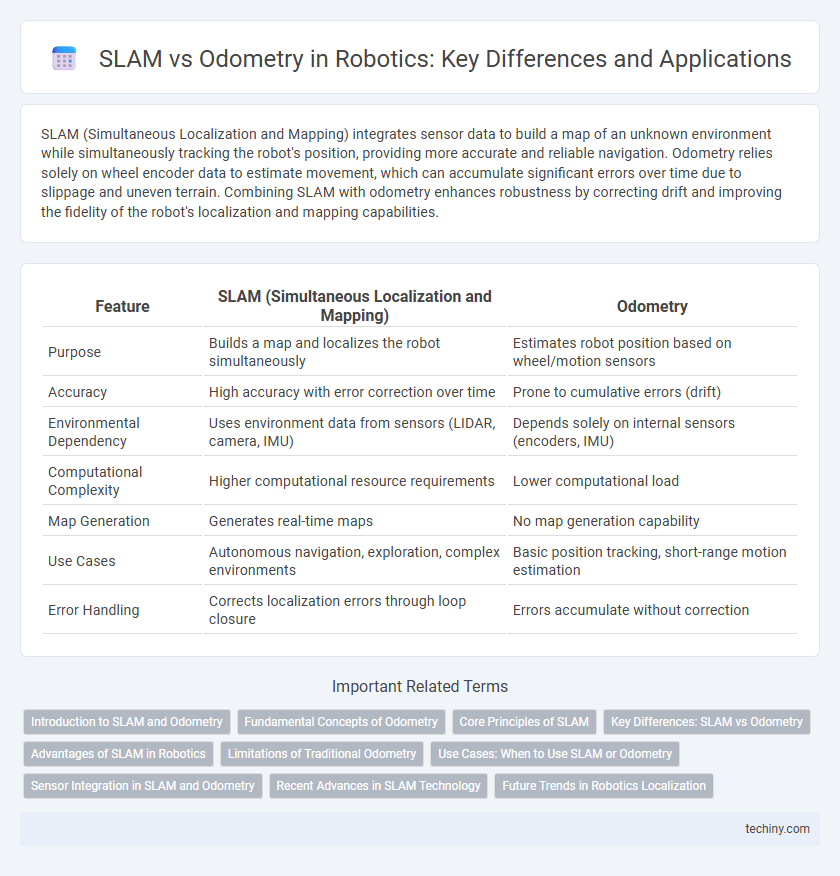

SLAM (Simultaneous Localization and Mapping) integrates sensor data to build a map of an unknown environment while simultaneously tracking the robot's position within it, which gives more accurate and reliable navigation than dead reckoning alone. Odometry estimates movement from onboard motion sensors, most commonly wheel encoders, and accumulates significant error over time due to wheel slippage and uneven terrain. Combining SLAM with odometry improves robustness: odometry supplies the short-term motion estimate, and SLAM corrects its drift, improving both localization and map fidelity.

Table of Comparison

| Feature | SLAM (Simultaneous Localization and Mapping) | Odometry |
|---|---|---|
| Purpose | Builds a map and localizes the robot simultaneously | Estimates robot position based on wheel/motion sensors |
| Accuracy | High accuracy with error correction over time | Prone to cumulative errors (drift) |
| Environmental Dependency | Uses environment data from sensors (LiDAR, camera, IMU) | Depends solely on internal sensors (encoders, IMU) |
| Computational Complexity | Higher computational resource requirements | Lower computational load |
| Map Generation | Generates real-time maps | No map generation capability |
| Use Cases | Autonomous navigation, exploration, complex environments | Basic position tracking, short-range motion estimation |
| Error Handling | Corrects localization errors through loop closure | Errors accumulate without correction |

Introduction to SLAM and Odometry

Simultaneous Localization and Mapping (SLAM) enables robots to create a map of an unknown environment while tracking their position within it, leveraging sensor data such as LiDAR or cameras. Odometry estimates the robot's position by measuring wheel rotations or motion increments but can accumulate errors over time due to slippage or uneven terrain. SLAM algorithms integrate odometry data with sensor observations to correct drift and produce accurate, real-time spatial awareness essential for autonomous navigation.

Fundamental Concepts of Odometry

Odometry fundamentally relies on the measurement of wheel rotations or robot motion to estimate position changes over time, making it essential for dead-reckoning in mobile robotics. Unlike SLAM (Simultaneous Localization and Mapping), which simultaneously builds a map of the environment while localizing the robot within it, odometry does not correct for cumulative errors, leading to drift in long-term navigation. Accurate odometry requires precise sensors such as encoders and IMUs to quantify translational and rotational movements, forming the basis for real-time pose estimation in robotic systems.
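
As a rough illustration of the dead-reckoning step described above, the sketch below integrates wheel encoder ticks into a 2D pose for a differential-drive robot. The names `Pose2D` and `integrate_odometry`, and the default values for ticks per revolution, wheel radius, and wheel base, are assumptions made for this example and do not come from any particular robot or library.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # radians, counter-clockwise from the x axis


def integrate_odometry(pose, left_ticks, right_ticks,
                       ticks_per_rev=1024,   # assumed encoder resolution
                       wheel_radius=0.05,    # assumed wheel radius in metres
                       wheel_base=0.30):     # assumed distance between wheels in metres
    """Advance the pose by one encoder reading (differential drive)."""
    metres_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick

    d_center = 0.5 * (d_left + d_right)        # distance travelled by the robot centre
    d_theta = (d_right - d_left) / wheel_base  # change in heading

    # Use the heading at the midpoint of the step to reduce integration error.
    mid_heading = pose.theta + 0.5 * d_theta
    theta = pose.theta + d_theta
    theta = math.atan2(math.sin(theta), math.cos(theta))  # wrap to (-pi, pi]

    return Pose2D(pose.x + d_center * math.cos(mid_heading),
                  pose.y + d_center * math.sin(mid_heading),
                  theta)
```

Calling `integrate_odometry` repeatedly with each new pair of tick counts yields a running pose estimate. Nothing in this loop observes the environment, so any error in a single step is carried into every later pose.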

Core Principles of SLAM

SLAM (Simultaneous Localization and Mapping) integrates sensor data to construct a consistent map of an unknown environment while simultaneously tracking the robot's position within it, addressing the main limitation of odometry, which accumulates error over time. It employs probabilistic algorithms such as extended Kalman filters, particle filters, or graph-based optimization to fuse measurements from LiDAR, cameras, or IMUs, thereby improving localization accuracy and map reliability. SLAM's core principles emphasize real-time data association, loop closure detection, and uncertainty modeling to maintain robustness in dynamic or feature-sparse environments.
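
To make the idea of loop closure in graph-based optimization more concrete, here is a deliberately simplified one-dimensional sketch. It assumes a robot moving along a line, equal variance on every odometry step, and a single loop-closure measurement of the displacement between the first and last pose. Under those assumptions the weighted least-squares solution has a closed form: the loop residual is spread evenly across all odometry edges. The function name `close_loop_1d` and its parameters are hypothetical; real SLAM back ends solve much larger nonlinear problems iteratively.

```python
def close_loop_1d(odom_steps, loop_measurement, odom_var=0.01, loop_var=0.0001):
    """Return corrected 1D poses x_0..x_n for a chain of odometry edges
    plus one loop-closure edge measuring x_n - x_0.

    Minimises  sum((d_i - u_i)^2 / odom_var) + (sum(d_i) - z)^2 / loop_var
    where d_i are the corrected steps, u_i the odometry steps, z the loop
    measurement. Assumes at least one of the variances is positive.
    """
    n = len(odom_steps)
    residual = loop_measurement - sum(odom_steps)  # disagreement between loop edge and odometry
    # Every edge receives the same share of the residual because all
    # odometry edges are assumed to have the same variance.
    correction = odom_var * residual / (loop_var + n * odom_var)

    poses = [0.0]  # the first pose is fixed as the origin
    for u in odom_steps:
        poses.append(poses[-1] + u + correction)
    return poses


# Out-and-back trip: odometry claims the robot ended 0.2 m from the start,
# while the loop closure says it returned to the starting point.
raw_steps = [1.0, 1.0, -0.9, -0.9]
corrected = close_loop_1d(raw_steps, loop_measurement=0.0)
print("uncorrected end:", sum(raw_steps), "corrected end:", corrected[-1])
```

The smaller `loop_var` is relative to `odom_var`, the more the corrected trajectory trusts the loop closure over the accumulated odometry.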

Key Differences: SLAM vs Odometry

Simultaneous Localization and Mapping (SLAM) generates a consistent map of an unknown environment while determining the robot's position in real time, and it limits cumulative error by fusing observations of the environment from sensors such as LiDAR and cameras. Odometry relies only on wheel encoders and inertial sensors to estimate position, so it drifts over time due to wheel slip and sensor noise. Unlike odometry, SLAM uses environmental features for localization and mapping, making it robust in dynamic or GPS-denied settings.

Advantages of SLAM in Robotics

SLAM (Simultaneous Localization and Mapping) offers significant advantages over traditional odometry by enabling robots to build and update maps of unknown environments while accurately tracking their position. Unlike odometry, which relies solely on wheel encoders and accumulates errors over time, SLAM integrates sensor data from lidar, cameras, and IMUs to correct drift and improve navigation robustness. This capability allows autonomous robots to operate effectively in complex, dynamic environments where precise localization and mapping are critical.

Limitations of Traditional Odometry

Traditional odometry in robotics suffers from cumulative drift errors caused by wheel slip, uneven terrain, and sensor noise, leading to inaccurate localization over time. Unlike SLAM, which integrates sensor data to build a global map and correct pose estimations, odometry relies solely on incremental motion measurements, limiting its reliability in complex environments. These inherent limitations restrict odometry's effectiveness for long-term navigation and autonomous robot operations.
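
The cumulative nature of this drift can be seen in a small, deterministic sketch. It assumes a robot that truly drives in a straight line while its odometry heading picks up a tiny constant bias at every step (for example, from slightly mismatched wheel diameters). The function name `dead_reckon_error` and the step length and bias values are illustrative assumptions, not measurements from a real platform.

```python
import math


def dead_reckon_error(steps, step_len=0.1, heading_bias=0.001):
    """Return the position error in metres after each step.

    The true robot moves step_len metres along +x per step. The odometry
    estimate moves the same distance, but its heading gains heading_bias
    radians per step, so the estimated path slowly curves away.
    """
    est_x = est_y = est_theta = 0.0
    errors = []
    for k in range(1, steps + 1):
        est_theta += heading_bias                 # small uncorrected heading error
        est_x += step_len * math.cos(est_theta)
        est_y += step_len * math.sin(est_theta)
        true_x = k * step_len                     # ground truth stays on the x axis
        errors.append(math.hypot(est_x - true_x, est_y))
    return errors


errors = dead_reckon_error(1000)
print("error after 100 steps:", errors[99])
print("error after 1000 steps:", errors[-1])
```

Because the heading error is integrated into position at every step, the position error grows faster than the distance travelled, which is why odometry alone becomes unreliable over long runs.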

Use Cases: When to Use SLAM or Odometry

SLAM (Simultaneous Localization and Mapping) excels in unknown or dynamic environments where creating a real-time map is crucial, such as autonomous exploration and indoor navigation. Odometry is suitable for consistent, predictable settings with limited environmental changes, often used in short-range applications or as a complementary data source for SLAM. Choosing between SLAM and odometry depends on the complexity of the environment and the need for accurate localization over extended areas.

Sensor Integration in SLAM and Odometry

SLAM (Simultaneous Localization and Mapping) integrates multiple sensors such as LiDAR, cameras, and IMUs to create a comprehensive environmental map while estimating the robot's position, enhancing accuracy and robustness. Odometry primarily relies on wheel encoders to measure movement, making it susceptible to drift and errors over time without external corrections. Sensor fusion in SLAM mitigates odometry's limitations by combining diverse data inputs for continuous, precise localization and mapping.
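
One common way to combine an odometry increment with an external observation is a Kalman filter. The sketch below is a one-dimensional version under simplifying assumptions: the robot moves along a line, and an external sensor occasionally provides a direct position measurement (for instance, one derived from a range reading to a wall at a known location). That makes it a localization example rather than full SLAM, since no map is estimated. The function names and noise values are assumptions chosen for illustration, and `meas_var` is assumed to be positive.

```python
def predict(mean, var, odom_delta, odom_var):
    """Motion step: shift the estimate by the odometry increment.
    Uncertainty grows because odometry noise is added."""
    return mean + odom_delta, var + odom_var


def update(mean, var, measured_pos, meas_var):
    """Measurement step: blend in an external position measurement.
    Uncertainty shrinks because new information is added."""
    gain = var / (var + meas_var)  # how much to trust the measurement
    new_mean = mean + gain * (measured_pos - mean)
    new_var = (1.0 - gain) * var
    return new_mean, new_var


# Ten odometry-only steps, then a single external correction.
mean, var = 0.0, 0.0
for _ in range(10):
    mean, var = predict(mean, var, odom_delta=0.1, odom_var=0.01)
print("before correction:", mean, var)

mean, var = update(mean, var, measured_pos=0.95, meas_var=0.02)
print("after correction:", mean, var)
```

The variance rises with every `predict` call and drops at the `update`, which mirrors how external observations keep odometry drift bounded.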

Recent Advances in SLAM Technology

Recent advances in SLAM technology leverage deep learning and sensor fusion techniques to improve real-time mapping accuracy beyond what odometry based only on wheel encoders and inertial measurements can achieve. Modern SLAM systems combine LiDAR, RGB-D cameras, and other sensor inputs to mitigate drift and improve pose estimation in dynamic and complex environments. These improvements enable robust autonomous navigation, making SLAM the preferred choice over odometry alone when precise localization and mapping are required.

Future Trends in Robotics Localization

Future trends in robotics localization emphasize the integration of Simultaneous Localization and Mapping (SLAM) with advanced odometry techniques to enhance accuracy and robustness. Machine learning algorithms and sensor fusion technologies are driving improvements in real-time environmental mapping and position estimation. Ongoing developments in LiDAR, visual odometry, and inertial measurement units (IMUs) are expected to further improve autonomous navigation and adaptive robotic systems.

SLAM vs odometry Infographic

techiny.com
