Vision-based grasping enables robots to identify and locate objects accurately using cameras and image processing, allowing for flexible manipulation of diverse items in unstructured environments. Force-based grasping relies on tactile sensors and feedback to adjust grip strength, ensuring delicate handling and preventing object damage during interaction. Combining both approaches enhances robotic manipulation by leveraging visual recognition and real-time force adjustments for improved precision and adaptability.

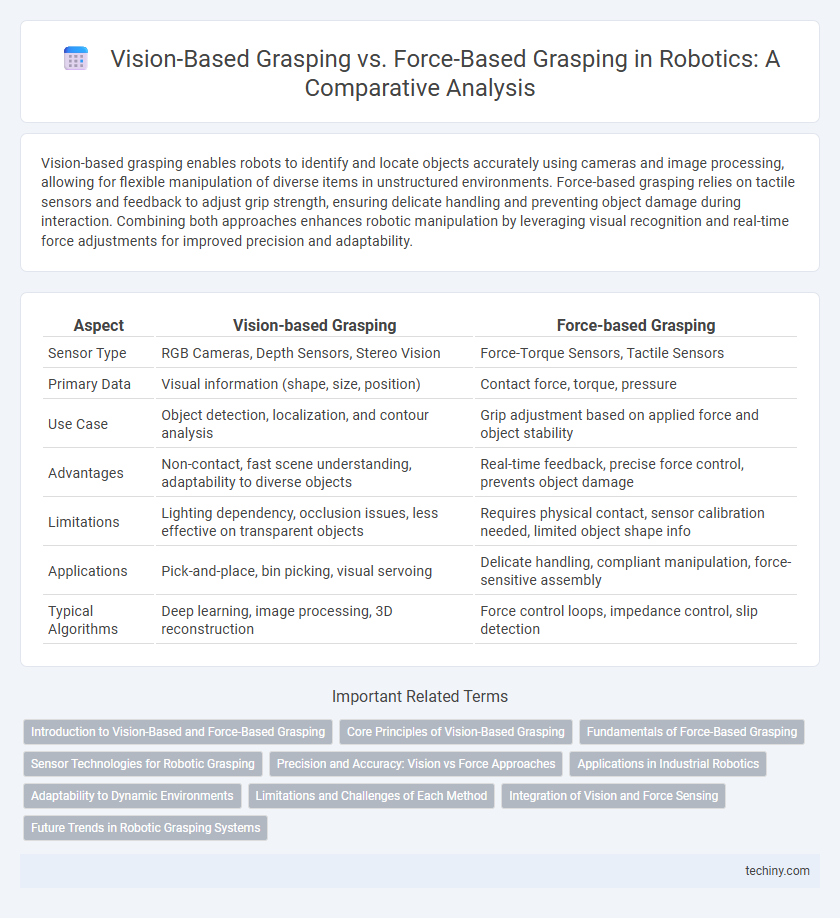

Table of Comparison

| Aspect | Vision-based Grasping | Force-based Grasping |
|---|---|---|
| Sensor Type | RGB Cameras, Depth Sensors, Stereo Vision | Force-Torque Sensors, Tactile Sensors |
| Primary Data | Visual information (shape, size, position) | Contact force, torque, pressure |
| Use Case | Object detection, localization, and contour analysis | Grip adjustment based on applied force and object stability |
| Advantages | Non-contact, fast scene understanding, adaptability to diverse objects | Real-time feedback, precise force control, prevents object damage |
| Limitations | Lighting dependency, occlusion issues, less effective on transparent objects | Requires physical contact, sensor calibration needed, limited object shape info |
| Applications | Pick-and-place, bin picking, visual servoing | Delicate handling, compliant manipulation, force-sensitive assembly |
| Typical Algorithms | Deep learning, image processing, 3D reconstruction | Force control loops, impedance control, slip detection |

Introduction to Vision-Based and Force-Based Grasping

Vision-based grasping leverages computer vision techniques and sensors like RGB-D cameras to identify object shapes, positions, and orientations, enabling precise manipulation in unstructured environments. Force-based grasping relies on tactile sensors and force feedback to adjust grip strength and detect slip, ensuring secure hold even with unknown or deformable objects. Integrating both approaches enhances robotic dexterity by combining environmental perception with adaptive force control.

Core Principles of Vision-Based Grasping

Vision-based grasping relies on computer vision algorithms to detect and analyze object features such as shape, size, and orientation, enabling robots to plan and execute precise grasping motions. It utilizes stereo cameras, RGB-D sensors, or LiDAR to create rich 3D representations of the environment, facilitating real-time adjustments based on visual feedback. Core principles include object recognition, pose estimation, and grasp strategy determination, which allow for adaptive manipulation in unstructured or dynamic settings.
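As a rough illustration of the pose-estimation step, the sketch below back-projects a depth pixel into camera coordinates with the standard pinhole model and estimates an object's in-plane orientation from the second-order moments of its mask pixels. The function names, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the idea of closing a parallel-jaw gripper perpendicular to the major axis are assumptions made for this example, not part of any specific camera SDK or grasp planner.

```python
import math


def deproject_pixel(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in meters to a 3D point
    in the camera frame, using the pinhole camera model."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def principal_axis_angle(pixels):
    """Angle in radians (image frame) of the major axis of a set of
    (u, v) mask pixels, from central second-order moments."""
    n = len(pixels)
    mean_u = sum(u for u, _ in pixels) / n
    mean_v = sum(v for _, v in pixels) / n
    mu20 = sum((u - mean_u) ** 2 for u, _ in pixels) / n
    mu02 = sum((v - mean_v) ** 2 for _, v in pixels) / n
    mu11 = sum((u - mean_u) * (v - mean_v) for u, v in pixels) / n
    return 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)


def grasp_closing_angle(pixels):
    """Assumed heuristic: close the jaws across the object,
    perpendicular to its major axis."""
    return principal_axis_angle(pixels) + math.pi / 2.0
```

For example, a pixel at the principal point with a depth of 0.5 m maps to a point on the optical axis, and mask pixels lying along a 45-degree diagonal give a major-axis angle of pi/4. A real pipeline would add object segmentation, depth filtering, and a camera-to-robot transform before any of this is usable.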

Fundamentals of Force-Based Grasping

Force-based grasping relies on tactile feedback and force sensors to measure contact forces and adjust grip strength dynamically, ensuring stable object manipulation. This method utilizes real-time force data for responsive adjustments to prevent slippage or object damage during grasping tasks. Unlike vision-based systems that depend primarily on visual input, force-based grasping emphasizes direct haptic interaction and sensorimotor control for robust performance in unstructured environments.
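The dynamic grip adjustment described above can be sketched as a simple feedback loop. This is a minimal illustration, assuming an incremental (integral-style) update and an idealized gripper whose measured force equals the last command; the class name, gain, and force limits are hypothetical values chosen for the example, not a recommended tuning.

```python
from dataclasses import dataclass


@dataclass
class GripForceController:
    target_force: float        # desired normal force in newtons
    gain: float = 0.5          # fraction of the force error corrected per cycle
    max_command: float = 20.0  # assumed actuator limit in newtons
    command: float = 0.0       # current force command in newtons

    def update(self, measured_force: float) -> float:
        """Nudge the command toward the target and clamp it to the actuator range."""
        error = self.target_force - measured_force
        raw = self.command + self.gain * error
        self.command = min(max(raw, 0.0), self.max_command)
        return self.command


def simulate(controller: GripForceController, steps: int = 20) -> float:
    """Idealized plant: the sensor reads back exactly the last command."""
    measured = 0.0
    for _ in range(steps):
        measured = controller.update(measured)
    return measured
```

With a 5 N target, the simulated force converges toward 5 N within a few cycles; with a target above the actuator limit, the command saturates at `max_command` instead of growing without bound. Real grippers have contact dynamics, sensor noise, and delay, so a practical loop would need filtering and stability analysis.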

Sensor Technologies for Robotic Grasping

Vision-based grasping relies on advanced sensor technologies such as RGB-D cameras, LiDAR, and stereo vision systems to capture detailed 3D spatial information and object features for precise localization and orientation. Force-based grasping utilizes tactile sensors, force-torque sensors, and pressure-sensitive pads to provide real-time feedback on contact forces, enabling adaptive grip adjustments and slip detection. Integrating vision and force sensor data enhances robotic manipulation accuracy, robustness, and the ability to handle diverse objects in dynamic environments.
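One common way to turn force-torque readings into a slip indicator is the Coulomb friction model: the contact holds while the tangential force stays below the friction coefficient times the normal force. The sketch below assumes a sensor frame whose z-axis is the contact normal and a known friction coefficient `mu`; both are assumptions for illustration, and the function names and safety factor are hypothetical.

```python
import math


def slip_margin(fx, fy, fz, mu):
    """Friction margin in newtons: positive means the contact is inside
    the friction cone, negative means slip is predicted (Coulomb model)."""
    tangential = math.hypot(fx, fy)
    normal = max(fz, 0.0)  # a contact can only push, never pull
    return mu * normal - tangential


def min_normal_force(tangential, mu, safety=1.5):
    """Normal force needed to hold a given tangential load,
    scaled by an assumed safety factor."""
    return safety * tangential / mu
```

For instance, a 5 N tangential load with `mu = 0.6` is held by a 10 N normal force (margin of about 1 N) but not by 5 N (margin of about -2 N). Tactile slip detectors often rely on vibration or shear signals instead, so this is only one of several possible approaches.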

Precision and Accuracy: Vision vs Force Approaches

Vision-based grasping systems leverage high-resolution cameras and advanced image processing algorithms to enhance precision by accurately identifying object shapes and positions before grasping. Force-based grasping relies on tactile sensors and feedback loops to adjust grip force in real time, improving accuracy in handling delicate or irregular objects. Combining vision and force data often results in superior manipulation performance, balancing precise object localization with adaptive grip strength control.

Applications in Industrial Robotics

Vision-based grasping in industrial robotics enables precise object recognition and localization, improving automation in complex environments such as assembly lines and quality inspection. Force-based grasping enhances the robot's ability to adapt to variable object weights and textures, crucial for handling fragile or irregular items in packaging and material handling. Combining both methods optimizes robotic dexterity and reliability, maximizing efficiency in manufacturing processes.

Adaptability to Dynamic Environments

Vision-based grasping uses real-time sensor data and image processing to track changing object positions and environmental conditions, enabling dynamic responses in unstructured scenarios. Force-based grasping relies on tactile feedback and force sensors to modulate grip strength, offering precise adjustments when handling objects of varying texture and fragility. Because it senses only what the gripper touches, however, it may struggle with rapid changes elsewhere in the environment. Combining both approaches enhances robotic adaptability in dynamic environments by balancing visual perception and haptic feedback for robust manipulation.

Limitations and Challenges of Each Method

Vision-based grasping struggles with occlusions, varying lighting conditions, and inaccuracies in depth perception, which can lead to unreliable object detection and pose estimation. Force-based grasping faces challenges in detecting subtle slip events and adapting to complex surface textures without causing damage, often requiring sophisticated tactile sensors. Both methods also add computational load and integration complexity when robust, precise manipulation is required in dynamic environments.

Integration of Vision and Force Sensing

Integrating vision and force sensing in robotics enhances grasping precision by combining visual data with tactile feedback for adaptive manipulation. Vision-based systems identify object location and orientation, while force sensors detect contact forces, enabling smooth adjustments to grip strength. This synergy improves handling of varied object shapes and materials, reducing slippage and damage during complex tasks.
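A simple way to picture this division of labor is a two-phase grasp: vision sets the initial gripper opening, and force feedback decides when to stop closing. The sketch below simulates that with a linear-spring contact model. The function name, the stiffness, clearance, and step values, and the spring model itself are all assumptions made for illustration.

```python
def guarded_grasp(width_estimate_m, true_width_m, target_force_n,
                  stiffness_n_per_m=2000.0, step_m=0.0005, clearance_m=0.01):
    """Pre-shape from the visual width estimate, then close in small steps
    until the simulated contact force reaches the target."""
    # Phase 1 (vision): open slightly wider than the estimated object width.
    aperture = width_estimate_m + clearance_m
    force = 0.0
    # Phase 2 (force): close until the force threshold is met.
    while force < target_force_n and aperture > 0.0:
        aperture -= step_m
        # Assumed contact model: force grows linearly once the jaws
        # squeeze past the object's true width.
        penetration = max(true_width_m - aperture, 0.0)
        force = stiffness_n_per_m * penetration
    return aperture, force
```

Calling `guarded_grasp(0.052, 0.050, 2.0)` starts with a 62 mm opening despite the 2 mm error in the visual estimate and stops once the simulated force reaches at least 2 N. The force phase absorbs the vision error, which is the benefit the integration is meant to provide.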

Future Trends in Robotic Grasping Systems

Vision-based grasping systems leverage advanced deep learning algorithms and 3D imaging to enhance object recognition and pose estimation, enabling robots to adapt to complex and unstructured environments with higher precision. Force-based grasping integrates tactile sensors and real-time feedback to improve grip stability and manipulation of delicate or deformable objects, advancing dexterous handling capabilities. Future trends point towards hybrid systems that combine multimodal sensing, artificial intelligence, and real-time data fusion to achieve more robust, adaptive, and versatile robotic grasping solutions.

Vision-based grasping vs Force-based grasping Infographic

techiny.com
