Vision-based grasping enables robots to identify and localize objects using cameras, providing the broad spatial understanding needed for initial object detection and positioning. Tactile-based grasping relies on touch-sensor feedback, allowing robots to adjust grip force and detect slippage, which improves manipulation accuracy and object stability. Combining both methods improves robotic precision and adaptability in complex environments: vision guides the approach, while tactile feedback secures the grasp.

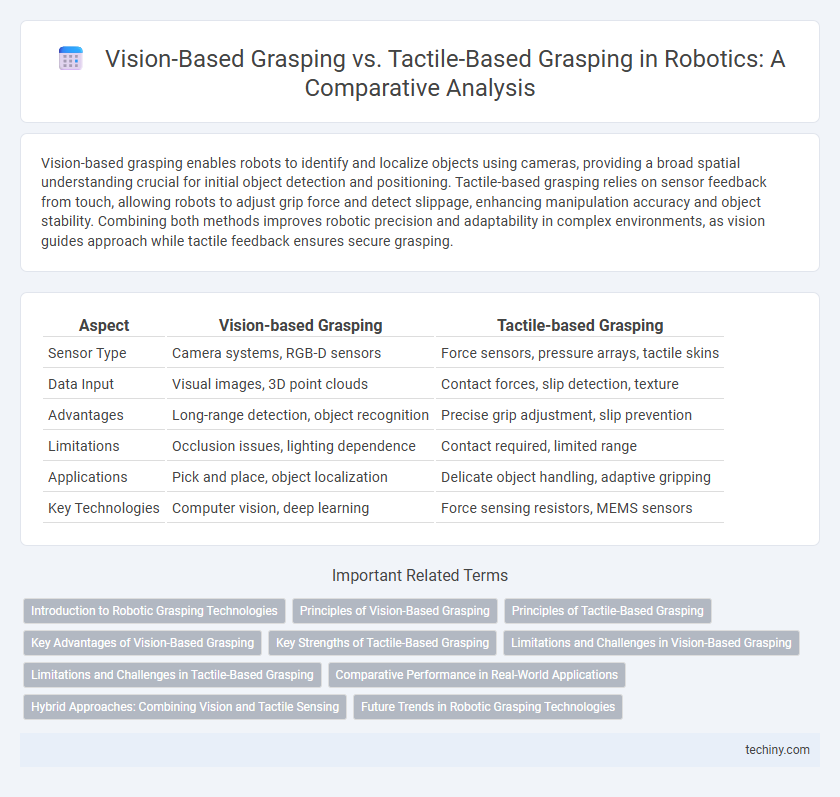

Table of Comparison

| Aspect | Vision-based Grasping | Tactile-based Grasping |
|---|---|---|
| Sensor Type | Camera systems, RGB-D sensors | Force sensors, pressure arrays, tactile skins |
| Data Input | Visual images, 3D point clouds | Contact forces, slip detection, texture |
| Advantages | Long-range detection, object recognition | Precise grip adjustment, slip prevention |
| Limitations | Occlusion issues, lighting dependence | Contact required, limited range |
| Applications | Pick and place, object localization | Delicate object handling, adaptive gripping |
| Key Technologies | Computer vision, deep learning | Force sensing resistors, MEMS sensors |

Introduction to Robotic Grasping Technologies

Vision-based grasping utilizes cameras and computer vision algorithms to identify and locate objects, enabling robots to plan precise hand trajectories for effective grasping in dynamic environments. Tactile-based grasping relies on sensors embedded in robotic fingers to detect contact forces and textures, providing real-time feedback to adjust grip strength and prevent object slippage. Combining both technologies enhances robotic manipulation by integrating spatial awareness with tactile sensitivity, improving grasp reliability and adaptability.

Principles of Vision-Based Grasping

Vision-based grasping relies on computer vision techniques to interpret visual data from cameras, enabling robots to identify and localize objects within their environment. It employs convolutional neural networks (CNNs) and 3D vision algorithms such as point cloud processing to predict optimal grasp points and orientations. The approach enhances manipulation accuracy by integrating depth perception and scene understanding, facilitating dynamic and adaptive grasp strategies without direct physical contact.
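
The paragraph above mentions CNNs; as a much simpler illustration of turning a point cloud into a grasp, the sketch below swaps in a classical PCA heuristic rather than a learned model. It assumes a parallel-jaw gripper approaching from above, an object point cloud that has already been segmented from the scene, and coordinates in metres in the robot base frame; the function name `pca_grasp` is hypothetical. The jaws close along the object's minor horizontal axis, and the required opening width is the object's extent along that axis.

```python
import math
from typing import Sequence

Point = tuple[float, float, float]


def pca_grasp(points: Sequence[Point]) -> tuple[Point, Point, float]:
    """Heuristic top-down grasp for a parallel-jaw gripper (hypothetical helper).

    points: object points (x, y, z) in metres, already segmented from the scene.
    Returns (grasp_center, closing_direction, opening_width).
    """
    n = len(points)
    if n < 3:
        raise ValueError("need at least 3 points")
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    cz = sum(p[2] for p in points) / n

    # 2x2 covariance of the object's footprint in the horizontal (xy) plane.
    sxx = sum((p[0] - cx) ** 2 for p in points) / n
    syy = sum((p[1] - cy) ** 2 for p in points) / n
    sxy = sum((p[0] - cx) * (p[1] - cy) for p in points) / n

    # Closed-form orientation of the major principal axis of a 2x2 symmetric
    # matrix; the minor axis is perpendicular to it. (For a circular footprint
    # the orientation is arbitrary and atan2(0, 0) simply returns 0.)
    theta_major = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    theta_minor = theta_major + math.pi / 2.0
    ux, uy = math.cos(theta_minor), math.sin(theta_minor)

    # Close the jaws across the narrow side: the opening width must cover
    # the spread of the points along the minor axis.
    proj = [(p[0] - cx) * ux + (p[1] - cy) * uy for p in points]
    width = max(proj) - min(proj)

    # Grasp at the centroid; the approach direction is assumed to be straight down.
    return (cx, cy, cz), (ux, uy, 0.0), width
```

For a 20 cm × 4 cm box lying along the x-axis, the closing direction comes out along ±y and the opening width is about 0.04 m.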

Principles of Tactile-Based Grasping

Tactile-based grasping relies on the integration of force sensors, pressure arrays, and tactile skins to detect contact, texture, and slip during object manipulation. This approach enables robots to adjust grip force dynamically, ensuring secure handling of objects with varying shapes and degrees of fragility. Unlike vision-based grasping, tactile feedback provides real-time, localized information that is critical for delicate and adaptive grasp control in unstructured environments.
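
One way to make "adjust grip force dynamically" concrete is the Coulomb friction cone: a contact does not slip while the tangential force F_t stays below μ·F_n, so the normal (squeezing) force must satisfy F_n ≥ F_t / μ. The sketch below assumes the tactile array reports per-contact normal and tangential forces in newtons and that μ is known; `next_grip_force`, the safety factor, the force limits, and the per-cycle step limit are illustrative values, not taken from any particular gripper.

```python
def next_grip_force(
    current_n: float,
    tangential_n: float,
    mu: float,
    safety: float = 1.5,
    f_min: float = 0.5,
    f_max: float = 20.0,
    max_step: float = 2.0,
) -> float:
    """Return the next normal-force command for one fingertip contact (hypothetical helper).

    current_n:    normal force currently commanded [N]
    tangential_n: tangential (shear) force measured by the tactile sensor [N]
    mu:           assumed Coulomb friction coefficient between finger and object
    """
    if mu <= 0.0:
        raise ValueError("friction coefficient must be positive")
    # Coulomb friction: no slip while |F_t| <= mu * F_n, so F_n >= |F_t| / mu.
    # A safety factor > 1 keeps the contact away from the edge of the friction cone.
    target = safety * abs(tangential_n) / mu
    # Keep a light minimum squeeze and never exceed the assumed force limit.
    target = min(max(target, f_min), f_max)
    # Rate-limit the change per control cycle to avoid jerky squeezing.
    step = max(-max_step, min(max_step, target - current_n))
    return current_n + step
```

Starting from 5 N with 3 N of measured shear and μ = 0.5, repeated calls ramp the command 5 → 7 → 9 N and then hold at 9 N (1.5 × 3 / 0.5).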

Key Advantages of Vision-Based Grasping

Vision-based grasping lets robots identify and localize objects in cluttered, dynamic environments using cameras and machine learning algorithms. It provides rich spatial and contextual information, so grasps can be planned before any physical contact is made, which reduces the risk of damaging delicate items. High-resolution vision systems also support real-time adjustments and predictive modeling, improving overall grasp accuracy and operational efficiency.

Key Strengths of Tactile-Based Grasping

Tactile-based grasping excels at giving robots precise feedback on grip force and surface texture, enabling real-time adjustments that prevent slippage and object damage. It improves manipulation accuracy in unstructured environments by detecting contact events and slip before any visual cue is available. This supports complex tasks such as handling deformable or occluded objects, where vision alone may fail because of limited line of sight or poor lighting.
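
Detecting contact events and slip from raw tactile signals can be illustrated with a deliberately crude detector: contact onset is a normal-force threshold crossing (with hysteresis so noise does not toggle the state), and slip is flagged when the shear force jumps sharply between consecutive samples while in contact. The thresholds, the units (newtons), and the names `detect_events` and `TactileEvents` are hypothetical; practical slip detectors are often more robust, for example analyzing high-frequency vibration in the shear signal.

```python
from dataclasses import dataclass, field
from typing import Sequence


@dataclass
class TactileEvents:
    contact_onsets: list[int] = field(default_factory=list)  # sample indices where contact begins
    slip_samples: list[int] = field(default_factory=list)    # sample indices flagged as slip


def detect_events(
    normal: Sequence[float],
    tangential: Sequence[float],
    contact_on: float = 0.3,
    contact_off: float = 0.1,
    slip_jump: float = 0.2,
) -> TactileEvents:
    """Threshold-based contact and slip detection on sampled forces [N] (hypothetical helper)."""
    events = TactileEvents()
    in_contact = False
    prev_t = 0.0
    for i, (fn, ft) in enumerate(zip(normal, tangential)):
        if not in_contact:
            # Contact onset: normal force rises above the "on" threshold.
            if fn >= contact_on:
                in_contact = True
                events.contact_onsets.append(i)
                prev_t = ft
            continue
        if fn <= contact_off:
            # Contact lost: normal force falls below the lower "off" threshold (hysteresis).
            in_contact = False
            continue
        # While in contact, a sharp sample-to-sample jump in shear force is treated as slip.
        if abs(ft - prev_t) >= slip_jump:
            events.slip_samples.append(i)
        prev_t = ft
    return events
```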

Limitations and Challenges in Vision-Based Grasping

Vision-based grasping struggles with occlusions and varying lighting conditions that obscure object features, reducing accuracy in object detection and pose estimation. The lack of tactile feedback limits the system's ability to assess grasp stability and adjust grip force dynamically, leading to potential slippage or damage. Challenges in real-time processing of complex visual data further hinder responsiveness in cluttered or unstructured environments.

Limitations and Challenges in Tactile-Based Grasping

Tactile-based grasping is limited by sensor sensitivity: fine textures and small forces can be hard to detect, which makes precise manipulation more difficult. Integrating tactile sensors also increases system complexity and computational load, which can reduce real-time responsiveness. Environmental factors such as moisture, temperature, and surface contamination further degrade tactile sensor reliability and durability.

Comparative Performance in Real-World Applications

Vision-based grasping enables robots to identify and localize objects quickly by analyzing visual data from cameras, making it effective for precise tasks in which object shapes and positions vary. Tactile-based grasping excels in scenarios where object properties such as texture, compliance, and slippage are critical, providing real-time feedback for adaptive grip adjustments in unstructured environments. Combining vision and tactile inputs often yields better grasping performance, improving success rates because each modality compensates for the other's limitations in real-world robotic manipulation.
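
A simple way to see how one modality can compensate for the other is inverse-variance weighting of two independent position estimates: the fused estimate leans toward whichever sensor is currently more certain. The sketch assumes Gaussian, independent errors along a single axis, and the variances are hypothetical inputs (for instance, a vision estimate whose variance grows under occlusion, and a tactile estimate that exists only while the fingers are in contact). `fuse_estimates` is an illustrative name, not an established API.

```python
from typing import Optional


def fuse_estimates(
    vision: float,
    vision_var: float,
    tactile: Optional[float] = None,
    tactile_var: Optional[float] = None,
) -> tuple[float, float]:
    """Inverse-variance fusion of two independent 1-D position estimates (hypothetical helper).

    Returns (fused_estimate, fused_variance). If no tactile estimate is available
    (e.g. before contact), the vision estimate is returned unchanged.
    """
    if vision_var <= 0.0:
        raise ValueError("variances must be positive")
    if tactile is None or tactile_var is None:
        return vision, vision_var
    if tactile_var <= 0.0:
        raise ValueError("variances must be positive")
    # Each estimate is weighted by 1 / variance, so the more certain sensor dominates.
    w_v = 1.0 / vision_var
    w_t = 1.0 / tactile_var
    fused = (w_v * vision + w_t * tactile) / (w_v + w_t)
    # The fused variance is smaller than either input variance.
    fused_var = 1.0 / (w_v + w_t)
    return fused, fused_var
```

With a vision estimate of 0.10 m (variance 4e-4 m², a 2 cm standard deviation) and a tactile estimate of 0.12 m (variance 1e-4 m², 1 cm), the fused estimate is 0.116 m with variance 8e-5 m².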

Hybrid Approaches: Combining Vision and Tactile Sensing

Hybrid approaches in robotics integrate vision-based grasping with tactile sensing to enhance object manipulation accuracy and adaptability. Vision systems provide spatial awareness and object localization, while tactile sensors offer precise feedback on contact forces and surface textures, enabling real-time adjustment of grip strength and positioning. Combining these modalities significantly improves grasp robustness in unstructured environments, facilitating tasks that require delicate handling and object identification.
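
The division of labor described above (vision for localization, touch for grip control) can be organized as a phase-based controller. The sketch below is a minimal, hypothetical state machine: vision drives the approach, tactile contact triggers the switch to force control, and slip or loss of contact sends the robot back to an earlier phase. The phase names, the `Observation` fields, and the thresholds are assumptions for illustration only.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Phase(Enum):
    APPROACH = auto()  # vision-guided reach toward the planned grasp pose
    CLOSE = auto()     # close the fingers until the tactile sensors report contact
    SECURE = auto()    # raise grip force under tactile feedback until the hold is stable
    LIFT = auto()      # lift the object while monitoring for slip
    REPLAN = auto()    # object lost: fall back to vision to re-localize it


@dataclass
class Observation:
    target_visible: bool  # vision: is the object currently detected?
    distance_m: float     # vision/kinematics: distance from gripper to planned grasp pose
    in_contact: bool      # tactile: are the fingertips touching the object?
    slipping: bool        # tactile: is slip currently detected?
    grip_force_n: float   # tactile: measured normal (squeezing) force


def next_phase(phase: Phase, obs: Observation,
               reach_tol_m: float = 0.005, hold_force_n: float = 5.0) -> Phase:
    """Return the next controller phase (hypothetical thresholds)."""
    if phase is Phase.APPROACH:
        if not obs.target_visible:
            return Phase.REPLAN
        return Phase.CLOSE if obs.distance_m <= reach_tol_m else Phase.APPROACH
    if phase is Phase.CLOSE:
        # Switch from vision to touch as soon as contact is felt.
        return Phase.SECURE if obs.in_contact else Phase.CLOSE
    if phase is Phase.SECURE:
        if not obs.in_contact:
            return Phase.REPLAN
        stable = obs.grip_force_n >= hold_force_n and not obs.slipping
        return Phase.LIFT if stable else Phase.SECURE
    if phase is Phase.LIFT:
        if not obs.in_contact:
            return Phase.REPLAN
        # Slip during the lift sends control back to grip-force adjustment.
        return Phase.SECURE if obs.slipping else Phase.LIFT
    # REPLAN: resume the approach once vision finds the object again.
    return Phase.APPROACH if obs.target_visible else Phase.REPLAN
```

Because `next_phase` is a pure function of the current phase and one observation, it can be unit-tested without hardware and called once per control cycle.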

Future Trends in Robotic Grasping Technologies

Vision-based grasping in robotics leverages advanced computer vision algorithms and deep learning to enhance object recognition and spatial awareness, enabling precise manipulation in dynamic environments. Tactile-based grasping incorporates high-resolution sensors to provide real-time feedback on contact forces and texture, improving adaptability and grip stability on diverse surfaces. Future trends emphasize the fusion of vision and tactile data through multi-modal sensing technologies, driving innovations in autonomous grasping systems with superior dexterity and robustness for complex industrial and service applications.

Vision-based grasping vs Tactile-based grasping Infographic

techiny.com