Workspace mapping creates a detailed spatial representation of the robot's environment, enabling efficient navigation and task planning. Object recognition identifies and classifies individual items within that space, crucial for manipulation and interaction. Integrating both processes enhances robotic precision and adaptability in complex, dynamic settings.

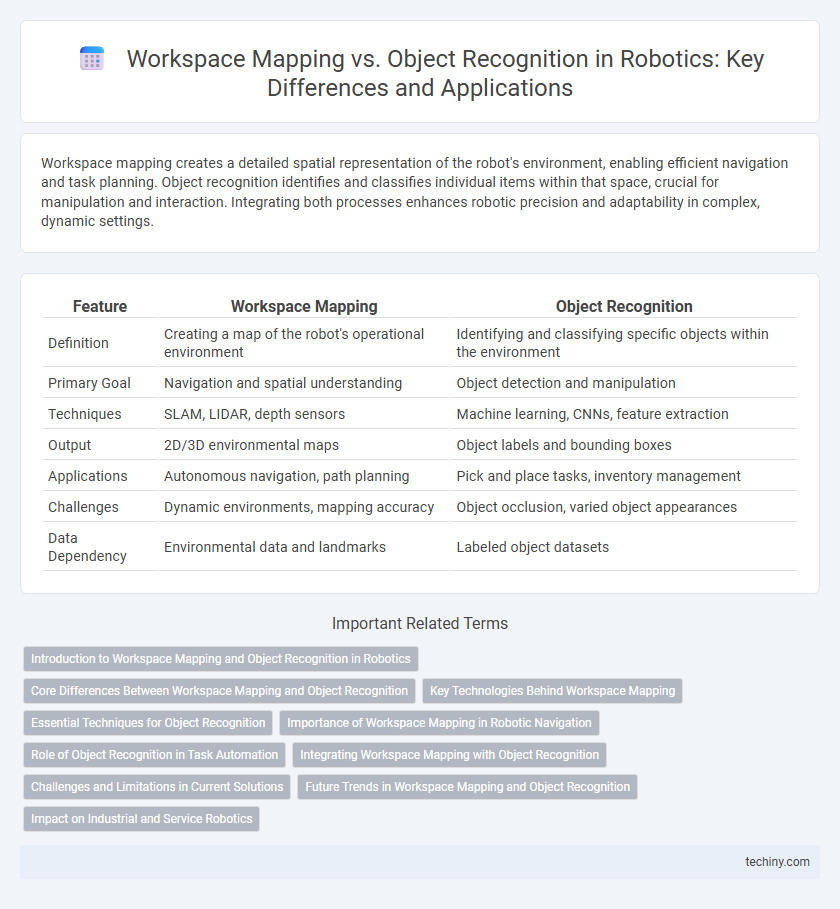

Table of Comparison

| Feature | Workspace Mapping | Object Recognition |
|---|---|---|
| Definition | Creating a map of the robot's operational environment | Identifying and classifying specific objects within the environment |
| Primary Goal | Navigation and spatial understanding | Object detection and manipulation |
| Techniques | SLAM, LIDAR, depth sensors | Machine learning, CNNs, feature extraction |
| Output | 2D/3D environmental maps | Object labels and bounding boxes |
| Applications | Autonomous navigation, path planning | Pick and place tasks, inventory management |
| Challenges | Dynamic environments, mapping accuracy | Object occlusion, varied object appearances |
| Data Dependency | Environmental data and landmarks | Labeled object datasets |

Introduction to Workspace Mapping and Object Recognition in Robotics

Workspace mapping in robotics involves creating detailed spatial representations of an environment to enable efficient navigation and task execution. Object recognition focuses on identifying and classifying items within the workspace using sensors and machine learning algorithms, critical for manipulation and interaction tasks. Combining accurate workspace mapping with robust object recognition enhances robot autonomy and operational precision in dynamic industrial settings.

Core Differences Between Workspace Mapping and Object Recognition

Workspace mapping involves creating a detailed spatial representation of an environment to enable a robot's navigation and task planning, focusing on understanding geometric and spatial relationships. Object recognition centers on identifying and classifying individual items within the environment based on visual or sensor data, emphasizing semantic interpretation and categorization. The core difference lies in workspace mapping's emphasis on environment-wide layout and navigational context, while object recognition prioritizes the detection and identification of specific entities within that mapped space.

Key Technologies Behind Workspace Mapping

Workspace mapping in robotics relies heavily on simultaneous localization and mapping (SLAM) techniques, which integrate sensor data from LIDAR, RGB-D cameras, and ultrasonic sensors to build 2D or 3D models of the environment while tracking the robot's own position within them. Map representations and algorithms such as occupancy grid mapping and point cloud processing let robots represent their surroundings accurately enough for navigation and task execution. These technologies differ from object recognition systems, which identify and classify individual objects rather than construct comprehensive spatial maps.
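Occupancy grid mapping can be illustrated with a small sketch. The code below assumes a 2D grid, a single range reading already converted to grid cells, and log-odds increments (`L_OCC`, `L_FREE`) chosen only for illustration; the function names are hypothetical and it leaves out localization, sensor noise models, and everything else a real SLAM system needs.

```python
import math

# Illustrative log-odds increments (assumed values, not tuned for any sensor).
L_OCC = 0.85    # added to the cell where the beam ends
L_FREE = -0.4   # added to each cell the beam passes through

def bresenham(x0, y0, x1, y1):
    """Return the grid cells on the line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx - dy
    x, y = x0, y0
    while True:
        cells.append((x, y))
        if (x, y) == (x1, y1):
            break
        e2 = 2 * err
        if e2 > -dy:
            err -= dy
            x += sx
        if e2 < dx:
            err += dx
            y += sy
    return cells

def update_grid(log_odds, robot_cell, hit_cell):
    """Apply one range reading: free space along the beam, occupied at its end."""
    ray = bresenham(*robot_cell, *hit_cell)
    for x, y in ray[:-1]:
        log_odds[y][x] += L_FREE
    hx, hy = ray[-1]
    log_odds[hy][hx] += L_OCC

def probability(log_odds_value):
    """Convert a log-odds value back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds_value))

# 10 x 10 grid, all cells unknown (log-odds 0, probability 0.5).
grid = [[0.0] * 10 for _ in range(10)]
update_grid(grid, robot_cell=(0, 0), hit_cell=(5, 0))
```

Storing log-odds rather than probabilities keeps each update a simple addition, which is one common reason this representation is chosen; repeated readings of the same cell accumulate evidence in either direction.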

Essential Techniques for Object Recognition

Object recognition uses computer vision algorithms, such as convolutional neural networks (CNNs) and feature matching techniques, to identify and categorize individual items within the workspace. Essential techniques include deep learning-based models, real-time image processing, and sensor fusion methods that combine data from cameras, LIDAR, and ultrasonic sensors for accurate detection and classification.
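Detection models typically emit several overlapping candidate boxes for the same object, so a post-processing step is usually needed before the labels and bounding boxes are usable. The sketch below shows one common approach, greedy non-maximum suppression based on intersection over union (IoU). The detection dictionaries, the labels, and the 0.5 threshold are assumptions made for illustration, not the output format of any particular library.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(detections, iou_threshold=0.5):
    """Greedily keep the highest-scoring box among overlapping same-label boxes."""
    kept = []
    for det in sorted(detections, key=lambda d: d["score"], reverse=True):
        overlaps_kept = any(
            det["label"] == k["label"] and iou(det["box"], k["box"]) >= iou_threshold
            for k in kept
        )
        if not overlaps_kept:
            kept.append(det)
    return kept

# Hypothetical detector output: two near-duplicate boxes and one distinct object.
detections = [
    {"label": "bolt", "score": 0.90, "box": (10, 10, 50, 50)},
    {"label": "bolt", "score": 0.75, "box": (12, 12, 52, 52)},
    {"label": "nut", "score": 0.60, "box": (100, 100, 130, 130)},
]
kept = non_max_suppression(detections)
```

Here the second "bolt" box overlaps the first heavily, so only the higher-scoring one survives, while the "nut" detection is kept because it belongs to a different label and region.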

Importance of Workspace Mapping in Robotic Navigation

Workspace mapping enables robots to create accurate environmental maps that are essential for precise navigation and path planning, reducing collision risks and improving operational efficiency. Unlike object recognition, which identifies specific items, workspace mapping provides a holistic spatial understanding critical for autonomous movement and task execution. Advanced techniques such as simultaneous localization and mapping (SLAM) enhance the robot's ability to update maps dynamically, allowing adaptability in changing environments.
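To make the link between a map and path planning concrete, the following sketch runs a breadth-first search over a small occupancy grid. It assumes a 4-connected grid where `1` marks an occupied cell and every move costs the same; practical planners often use A* or similar methods with richer cost models, so this is only a minimal illustration with hypothetical names.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; grid[r][c] == 1 is occupied."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parent:
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal not reachable

# A wall across the middle row forces a detour through the right-hand column.
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = shortest_path(grid, start=(0, 0), goal=(2, 0))
```

Because the planner only visits free cells, any path it returns avoids the obstacles recorded in the map, and re-running it after a map update yields a route that reflects the changed environment.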

Role of Object Recognition in Task Automation

Object recognition plays a critical role in task automation by enabling robots to accurately identify and categorize objects within their workspace, which directly influences the precision and efficiency of automated processes. Unlike workspace mapping that outlines the spatial layout, object recognition focuses on detecting individual items, facilitating complex tasks such as pick-and-place operations, quality inspection, and adaptive manipulation. Integrating advanced computer vision algorithms with machine learning enhances the robot's ability to differentiate objects under varying conditions, thereby optimizing automation workflows in dynamic environments.

Integrating Workspace Mapping with Object Recognition

Integrating workspace mapping with object recognition combines spatial understanding of the environment with precise object identification, enabling more efficient navigation and task execution. This fusion lets robots adapt to changing environments through real-time updates to maps that are enriched with recognized object data. Pairing simultaneous localization and mapping (SLAM) with deep learning-based object detection supports applications in industrial automation, service robotics, and autonomous systems.
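One simple way to picture a map enriched with recognized object data is a dictionary keyed by grid cell that stores the labels seen there. The sketch below assumes a planar robot pose `(x, y, theta)` in the map frame, a detection whose position is already known in the robot frame (x pointing forward), and a 0.5 m cell size; all names and values are hypothetical, and a real system would also handle uncertainty and data association.

```python
import math

def robot_to_map(pose, point):
    """Transform a point from the robot frame into the map frame.

    pose is (x, y, theta): robot position and heading in the map frame,
    with theta in radians.
    """
    x, y, theta = pose
    px, py = point
    mx = x + px * math.cos(theta) - py * math.sin(theta)
    my = y + px * math.sin(theta) + py * math.cos(theta)
    return mx, my

def annotate(semantic_map, pose, detection, resolution=0.5):
    """Record a detection's label under the grid cell it falls into."""
    mx, my = robot_to_map(pose, detection["position"])
    cell = (math.floor(mx / resolution), math.floor(my / resolution))
    semantic_map.setdefault(cell, set()).add(detection["label"])
    return cell

semantic_map = {}
pose = (2.2, 1.0, math.pi / 2)  # robot at (2.2, 1.0), heading along map +y
detection = {"label": "pallet", "position": (1.2, 0.0)}  # 1.2 m straight ahead
cell = annotate(semantic_map, pose, detection)
```

A planner or manipulation routine can then query `semantic_map` by cell to find out what was recognized at a location, in addition to whether that location is free or occupied.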

Challenges and Limitations in Current Solutions

Workspace mapping faces challenges in dynamic environments due to frequent changes and occlusions that hinder accurate spatial representation. Object recognition struggles with variability in object appearance, lighting conditions, and partial views, limiting reliable identification. Current solutions often lack robustness in real-time adaptability and integration, reducing performance in complex, unstructured settings.

Future Trends in Workspace Mapping and Object Recognition

Future trends in workspace mapping emphasize the integration of advanced SLAM algorithms and AI-driven environment understanding to enhance real-time adaptability and precision in robotic navigation. Object recognition is evolving through deep learning models and edge computing, enabling robots to identify and interact with diverse and dynamic objects more efficiently. The convergence of these technologies will drive smarter robotic systems capable of seamless operation in complex and unstructured environments.

Impact on Industrial and Service Robotics

Workspace mapping enables industrial and service robots to create accurate spatial representations, enhancing navigation and operational efficiency in dynamic environments. Object recognition empowers robots to identify and manipulate diverse items, crucial for tasks such as assembly, quality control, and service delivery. Combining these capabilities significantly improves robot autonomy, adaptability, and productivity across manufacturing floors and service sectors.

Workspace mapping vs Object recognition Infographic

techiny.com
