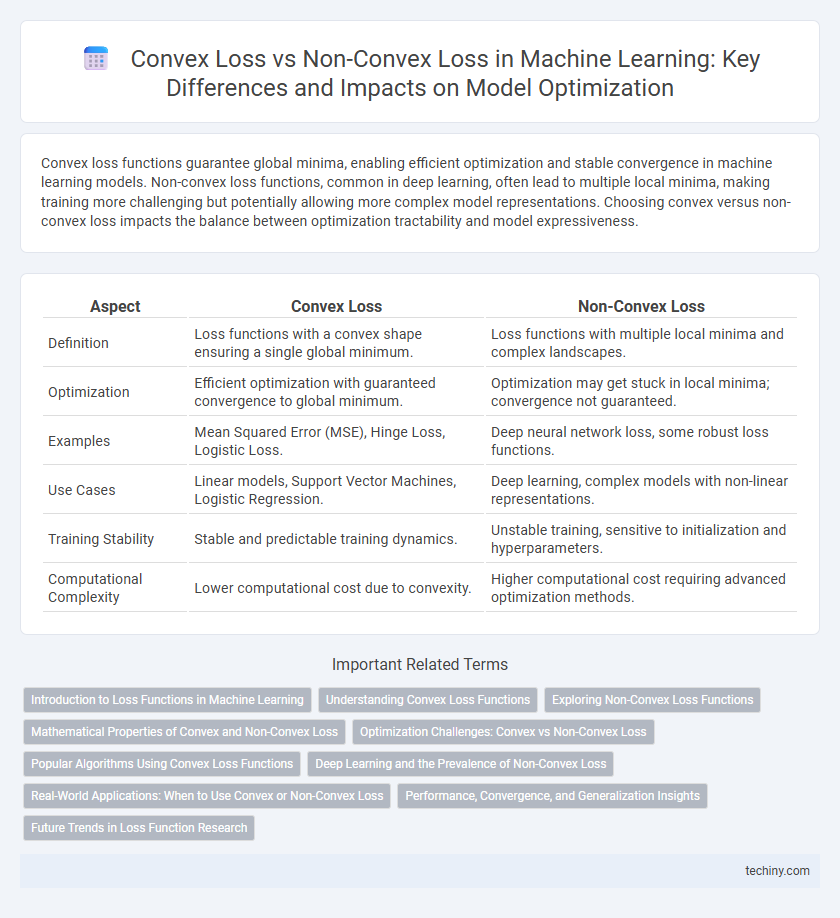

Convex loss functions guarantee global minima, enabling efficient optimization and stable convergence in machine learning models. Non-convex loss functions, common in deep learning, often lead to multiple local minima, making training more challenging but potentially allowing more complex model representations. Choosing convex versus non-convex loss impacts the balance between optimization tractability and model expressiveness.

Table of Comparison

| Aspect | Convex Loss | Non-Convex Loss |

|---|---|---|

| Definition | Loss functions with a convex shape ensuring a single global minimum. | Loss functions with multiple local minima and complex landscapes. |

| Optimization | Efficient optimization with guaranteed convergence to global minimum. | Optimization may get stuck in local minima; convergence not guaranteed. |

| Examples | Mean Squared Error (MSE), Hinge Loss, Logistic Loss. | Deep neural network loss, some robust loss functions. |

| Use Cases | Linear models, Support Vector Machines, Logistic Regression. | Deep learning, complex models with non-linear representations. |

| Training Stability | Stable and predictable training dynamics. | Unstable training, sensitive to initialization and hyperparameters. |

| Computational Complexity | Lower computational cost due to convexity. | Higher computational cost requiring advanced optimization methods. |

Introduction to Loss Functions in Machine Learning

Loss functions in machine learning quantify the difference between predicted outputs and true labels, guiding model optimization. Convex loss functions, such as mean squared error and hinge loss, ensure a single global minimum, facilitating efficient convergence with gradient-based methods. Non-convex loss functions, common in deep learning architectures, present multiple local minima, requiring advanced optimization techniques like stochastic gradient descent to navigate complex error surfaces.

Understanding Convex Loss Functions

Convex loss functions, such as mean squared error and logistic loss, are essential in machine learning for ensuring global optimality in model training due to their well-defined convex shape. These functions guarantee that any local minimum is also a global minimum, simplifying the optimization process and improving algorithm convergence. Their mathematical properties enable efficient implementation of gradient-based optimization techniques, making them the foundation for many supervised learning algorithms.

Exploring Non-Convex Loss Functions

Non-convex loss functions play a crucial role in advancing machine learning models by enabling the capture of complex patterns that convex loss functions cannot represent. These functions often lead to richer hypothesis spaces and potentially better generalization, despite challenges in optimization due to multiple local minima and saddle points. Techniques such as stochastic gradient descent with adaptive learning rates, momentum, or specialized initialization help navigate the non-convex landscape effectively.

Mathematical Properties of Convex and Non-Convex Loss

Convex loss functions exhibit a unique global minimum due to their mathematical property of convexity, ensuring consistent convergence in optimization algorithms such as gradient descent. Non-convex loss functions contain multiple local minima and saddle points, making optimization more challenging and often requiring advanced techniques like stochastic methods or heuristic algorithms. The mathematical distinction lies in the shape of the loss surface, where convex functions maintain a positive semi-definite Hessian matrix, while non-convex functions may have indefinite Hessians that complicate gradient-based optimization.

Optimization Challenges: Convex vs Non-Convex Loss

Convex loss functions guarantee global minima, enabling optimization algorithms like gradient descent to converge efficiently with predictable performance. Non-convex loss functions often contain multiple local minima and saddle points, complicating the optimization landscape and increasing the risk of convergence to suboptimal solutions. Advanced techniques such as stochastic gradient methods, momentum, and adaptive learning rates are essential to navigate non-convex optimization challenges in deep learning models.

Popular Algorithms Using Convex Loss Functions

Popular machine learning algorithms such as Logistic Regression, Support Vector Machines (SVM), and Linear Regression utilize convex loss functions like hinge loss and squared loss, ensuring global optimality and efficient convergence during training. Convex loss functions simplify the optimization landscape by eliminating local minima, which enhances stability and predictability in model performance. This property makes convex loss functions highly favored in supervised learning tasks that require robust generalization and fast computation.

Deep Learning and the Prevalence of Non-Convex Loss

Non-convex loss functions dominate deep learning due to their ability to model complex data patterns and representation hierarchies, unlike convex losses that simplify optimization but limit expressiveness. Training neural networks involves navigating high-dimensional, rugged loss landscapes where non-convex losses offer multiple local minima, facilitating better generalization despite optimization challenges. Techniques like stochastic gradient descent, batch normalization, and adaptive learning rates help efficiently optimize these non-convex loss functions in deep learning architectures.

Real-World Applications: When to Use Convex or Non-Convex Loss

Convex loss functions, such as mean squared error and hinge loss, are preferred in real-world applications requiring guaranteed convergence and efficient optimization, like linear regression and SVMs. Non-convex loss functions dominate deep learning tasks, including image recognition and natural language processing, due to their ability to model complex patterns despite optimization challenges. Choosing between convex and non-convex loss functions depends on the problem complexity, dataset size, and computational resources available.

Performance, Convergence, and Generalization Insights

Convex loss functions guarantee global optimum convergence and typically offer more stable and efficient training, leading to predictable performance in machine learning models. Non-convex loss functions, common in deep learning, can result in multiple local minima, complicating convergence but often enabling better generalization due to rich model expressiveness. Performance trade-offs arise as convex methods prioritize reliable optimization, while non-convex approaches potentially achieve superior accuracy at the cost of increased training complexity and sensitivity to initialization.

Future Trends in Loss Function Research

Future trends in loss function research emphasize the development of adaptive convex and non-convex loss functions that enhance model robustness and generalization in diverse machine learning applications. Hybrid loss functions combining convexity for optimization stability and non-convexity for expressiveness are gaining attention to address complex data structures. Advances in automated loss function design using meta-learning and differentiable programming promise to accelerate the discovery of optimal loss landscapes tailored to specific tasks and datasets.

convex loss vs non-convex loss Infographic

techiny.com

techiny.com