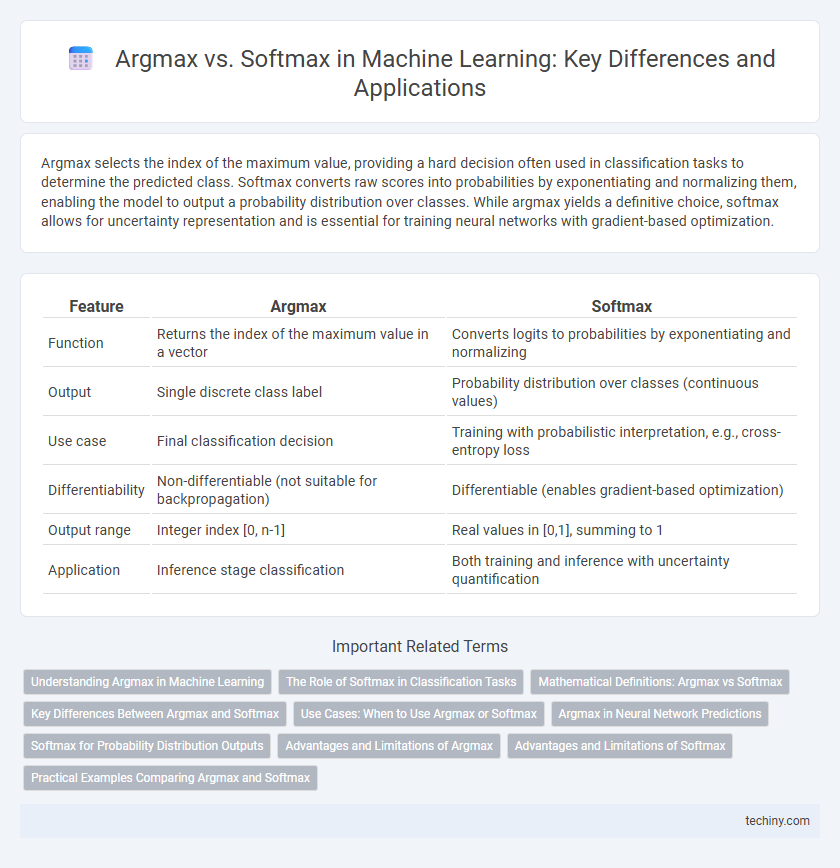

Argmax returns the index of the largest value. This hard decision is commonly used in classification to pick the predicted class. Softmax converts raw scores (logits) into probabilities by exponentiating each score and dividing by the sum of the exponentials, so the model outputs a probability distribution over classes. Argmax yields a single definitive choice. Softmax instead expresses how confident the model is in each class, and because it is differentiable it is essential for training neural networks with gradient-based optimization.

Table of Comparison

| Feature | Argmax | Softmax |
|---|---|---|
| Function | Returns the index of the maximum value in a vector | Converts logits to probabilities by exponentiating and normalizing |
| Output | Single discrete class label | Probability distribution over classes (continuous values) |
| Use case | Final classification decision | Training (e.g., with cross-entropy loss) and probabilistic interpretation |
| Differentiability | Piecewise constant: gradient is zero wherever defined, so it gives backpropagation no signal | Differentiable (enables gradient-based optimization) |
| Output range | Integer index from 0 to n-1 | Real values in (0, 1) that sum to 1 |
| Application | Classification at inference time | Both training and inference, with uncertainty quantification |

Understanding Argmax in Machine Learning

Argmax identifies the index of the maximum value within a vector. In classification, it selects the highest-scoring class as the prediction. Unlike softmax, which converts raw logits into a probability distribution over classes, argmax returns only that discrete index and no probabilities. It is used at inference time and in evaluation metrics such as accuracy, where it turns a model's continuous outputs into definitive predictions.
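Below is a minimal pure-Python sketch of argmax. It assumes ties resolve to the first (lowest) index, which matches NumPy's `argmax`; other libraries may document different tie-breaking. The function name is illustrative only.

```python
from typing import Sequence


def argmax(values: Sequence[float]) -> int:
    """Return the index of the largest value.

    Ties resolve to the first (lowest) index, the same convention
    NumPy's argmax uses.
    """
    if len(values) == 0:
        raise ValueError("argmax of an empty sequence is undefined")
    best_index = 0
    for i in range(1, len(values)):
        # Strict '>' keeps the earliest index when values tie.
        if values[i] > values[best_index]:
            best_index = i
    return best_index


scores = [1.3, 4.2, 0.7, 4.2]
print(argmax(scores))  # 1 (the first of the two tied maxima)
```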

The Role of Softmax in Classification Tasks

Softmax transforms raw logits into a probability distribution by exponentiating each logit and dividing by the sum of all the exponentials. The result is a set of interpretable class probabilities for multi-class classification. Argmax selects the single highest-scoring class and discards everything else. Softmax, by contrast, is smooth, so its output supports training through backpropagation. Its probabilities also let a model express uncertainty, which is useful in tasks such as image recognition and natural language processing.
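The sketch below implements softmax in plain Python. It subtracts the largest logit before exponentiating, a standard stabilization step that the text above does not describe. The shift multiplies every exponential by the same factor, which cancels during normalization. The probabilities are therefore unchanged, and `math.exp` never receives a positive argument.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Map raw scores (logits) to probabilities that sum to 1."""
    # Subtracting the maximum logit leaves the result unchanged
    # (the common factor exp(-m) cancels in the ratio) but keeps
    # every exponent <= 0, so math.exp cannot overflow.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    # Normalize by the sum of all exponentials.
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
print(round(sum(probs), 6))          # 1.0
```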

Mathematical Definitions: Argmax vs Softmax

Argmax returns the index of the maximum value in a vector, producing a discrete output:

$$\operatorname{argmax}(\mathbf{x}) = \{\, i \mid x_i \ge x_j \text{ for all } j \,\}$$

When several entries tie for the maximum, this set has more than one element; NumPy's argmax, for example, returns the first such index. Softmax transforms a vector of real numbers into a probability distribution:

$$\operatorname{softmax}(\mathbf{x})_i = \frac{\exp(x_i)}{\sum_{j} \exp(x_j)}$$

Every exponential is positive and the denominator is their sum, so each output lies in (0, 1) and the outputs sum to one. Argmax identifies the highest-scoring class deterministically. Softmax is a smooth, differentiable approximation of that choice, which is what makes it usable in gradient-based optimization.
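One way to see how softmax approximates argmax is to divide the logits by a temperature before applying softmax. Temperature scaling is an assumption introduced here for illustration; it is not part of the definitions above. At temperature 1 the result is ordinary softmax. As the temperature approaches zero, the output approaches the one-hot vector for the argmax index.

```python
import math
from typing import List, Sequence


def softmax_with_temperature(logits: Sequence[float], temperature: float) -> List[float]:
    """Softmax of logits / temperature (hypothetical helper; temperature > 0)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # shift for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]
for t in (1.0, 0.5, 0.1, 0.01):
    probs = softmax_with_temperature(logits, t)
    # As t shrinks, the distribution concentrates on index 0,
    # which is argmax(logits).
    print(t, [round(p, 4) for p in probs])
```

Lower temperatures sharpen the distribution without ever producing an exact one-hot vector for finite logits, so the function stays differentiable at every temperature.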

Key Differences Between Argmax and Softmax

Argmax selects the index of the maximum value in a vector, producing a discrete output suited to classification decisions. Softmax converts a vector of raw scores into a probability distribution that reflects relative confidence across all classes. Argmax is piecewise constant: its gradient is zero wherever it is defined and undefined at ties, so it cannot drive gradient-based optimization. Softmax is differentiable and is commonly paired with cross-entropy loss to train neural networks. In short, argmax makes a hard decision, while softmax produces a probabilistic output that backpropagation can use.
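The differentiability contrast can be checked numerically. This sketch compares the analytic softmax Jacobian, d s_i / d x_j = s_i * (delta_ij - s_j), against central finite differences. It then applies the same finite-difference probe to a one-hot version of argmax, which returns all zeros away from ties. The helper names and the step size `eps` are illustrative choices, not a library API.

```python
import math
from typing import Callable, List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # max-shift for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax_one_hot(logits: Sequence[float]) -> List[float]:
    # Hard decision expressed as a vector so finite differences can probe it.
    best = max(range(len(logits)), key=lambda i: logits[i])
    return [1.0 if i == best else 0.0 for i in range(len(logits))]


def softmax_jacobian(logits: Sequence[float]) -> List[List[float]]:
    """Analytic Jacobian: d s_i / d x_j = s_i * (delta_ij - s_j)."""
    s = softmax(logits)
    n = len(s)
    return [[s[i] * ((1.0 if i == j else 0.0) - s[j]) for j in range(n)]
            for i in range(n)]


def numeric_jacobian(
    f: Callable[[Sequence[float]], List[float]],
    logits: Sequence[float],
    eps: float = 1e-6,
) -> List[List[float]]:
    """Central finite differences: entry [i][j] approximates d f_i / d x_j."""
    n = len(logits)
    m = len(f(logits))
    jac = [[0.0] * n for _ in range(m)]
    for j in range(n):
        plus = list(logits)
        plus[j] += eps
        minus = list(logits)
        minus[j] -= eps
        f_plus, f_minus = f(plus), f(minus)
        for i in range(m):
            jac[i][j] = (f_plus[i] - f_minus[i]) / (2 * eps)
    return jac


x = [2.0, 1.0, 0.1]
analytic = softmax_jacobian(x)
numeric = numeric_jacobian(softmax, x)
max_err = max(abs(a - b) for ra, rb in zip(analytic, numeric) for a, b in zip(ra, rb))
print(max_err)                              # small: the analytic formula matches
print(numeric_jacobian(argmax_one_hot, x))  # all zeros: no gradient signal
```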

Use Cases: When to Use Argmax or Softmax

Argmax suits classification tasks that need a single definitive output, such as selecting the most probable class in image recognition or the next token in language modeling. Softmax is preferred when a probability distribution over classes is needed: it lets models express uncertainty in multi-class problems such as sentiment analysis or object detection. Use argmax for deterministic decisions, and softmax when interpreting model confidence or training neural networks with cross-entropy loss.
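For the training side, here is a sketch of cross-entropy loss computed from logits through a log-softmax, alongside argmax for the inference-time decision. It assumes integer class labels and uses the log-sum-exp shift for numerical stability. The example logits are made up.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    # log softmax(x)_i = x_i - log(sum_j exp(x_j)), computed with the
    # max-shift (log-sum-exp) so large logits do not overflow.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Negative log-probability that softmax assigns to the true class."""
    return -log_softmax(logits)[target]


def predict(logits: Sequence[float]) -> int:
    """Hard decision for inference: index of the largest logit."""
    return max(range(len(logits)), key=lambda i: logits[i])


logits = [2.0, 1.0, 0.1]  # hypothetical scores for 3 classes
print(cross_entropy(logits, target=0))  # lower loss: true class has the top score
print(cross_entropy(logits, target=2))  # higher loss: true class scored lowest
print(predict(logits))                  # 0
```

During training, the gradient of this loss flows through the softmax. Argmax appears only in `predict`, where no gradient is needed.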

Argmax in Neural Network Predictions

Argmax selects the index of the highest value in a neural network's output vector, giving the clear, discrete class prediction that classification tasks need. It reduces the output, whether logits or probabilities, to a single class label, which is what accuracy evaluation and deployed systems consume. Unlike softmax, argmax does not produce a probability distribution. Its result is a plain index, which can be expressed as a one-hot vector when a vector-valued prediction is required.
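The following sketch applies argmax over a batch of network outputs, computes accuracy against true labels, and converts one prediction to a one-hot vector. The outputs and labels are hypothetical. Ties go to the first index because Python's built-in `max` returns the first maximal item it encounters.

```python
from typing import List, Sequence


def argmax(row: Sequence[float]) -> int:
    # max() returns the first index that attains the maximum.
    return max(range(len(row)), key=lambda i: row[i])


def one_hot(index: int, num_classes: int) -> List[int]:
    return [1 if i == index else 0 for i in range(num_classes)]


def accuracy(outputs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Fraction of rows whose argmax matches the true label."""
    predictions = [argmax(row) for row in outputs]
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Hypothetical network outputs for 4 examples and 3 classes.
outputs = [
    [0.7, 0.2, 0.1],
    [0.1, 0.3, 0.6],
    [0.4, 0.5, 0.1],
    [0.2, 0.2, 0.6],
]
labels = [0, 2, 0, 2]

print([argmax(r) for r in outputs])    # [0, 2, 1, 2]
print(accuracy(outputs, labels))       # 0.75
print(one_hot(argmax(outputs[1]), 3))  # [0, 0, 1]
```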

Softmax for Probability Distribution Outputs

Softmax transforms raw scores from a neural network into a probability distribution by exponentiating each score and dividing by the sum of all exponentials, so the outputs sum to one. This makes softmax well suited to multi-class classification tasks that need a probabilistic interpretation. Argmax, by contrast, selects the highest-scoring class but provides no probability information. That limits it to deterministic prediction rather than uncertainty estimation.
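Softmax yields a full distribution, so a prediction can be reported together with its confidence rather than as a bare label. The sketch below returns the top-k classes with their probabilities. The class names, logits, and `top_k` helper are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # max-shift keeps exp() from overflowing
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits: Sequence[float], labels: Sequence[str], k: int) -> List[Tuple[str, float]]:
    """Return the k most probable (label, probability) pairs."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical classifier scores and class names.
labels = ["cat", "dog", "fox"]
logits = [2.0, 1.0, 0.1]
for name, p in top_k(logits, labels, k=2):
    print(f"{name}: {p:.3f}")
# cat: 0.659
# dog: 0.242
```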

Advantages and Limitations of Argmax

Argmax excels at producing a clear, discrete prediction by selecting the highest-scoring class, which makes it ideal for classification tasks that require definitive decisions. It is simple and cheap to compute, so inference is fast. However, it provides no probabilistic output and therefore no insight into model confidence. Its output is also piecewise constant, so its gradient is zero wherever it is defined. As a result, argmax cannot serve as a training objective, which is why models are trained on softmax outputs and argmax is applied afterward.
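Two hypothetical logit vectors that share the same argmax show how much confidence information argmax discards. One vector has a clear winner; the other is nearly a three-way tie. Argmax reports index 0 for both, while softmax shows a top probability close to 1 in the first case and about 0.37 in the second.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # max-shift for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values: Sequence[float]) -> int:
    return max(range(len(values)), key=lambda i: values[i])


confident = [8.0, 1.0, 0.5]  # hypothetical logits: one clear winner
uncertain = [1.1, 1.0, 0.9]  # hypothetical logits: near three-way tie

for name, logits in (("confident", confident), ("uncertain", uncertain)):
    probs = softmax(logits)
    # Both vectors yield the same hard prediction (index 0), but the
    # top probability differs greatly; argmax alone hides that gap.
    print(name, argmax(logits), round(max(probs), 3))
```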

Advantages and Limitations of Softmax

Softmax transforms raw prediction scores into probabilities. This supports multi-class classification by highlighting the most likely classes while staying differentiable for gradient-based optimization. Its probabilistic output is useful for uncertainty estimation and downstream decision-making in neural networks. Its limitations stem largely from the exponentiation: a single large logit can dominate the distribution. That makes softmax sensitive to outliers and prone to overconfident predictions, particularly on imbalanced datasets, which can undermine model calibration.
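Exponentiation also has a numerical consequence: evaluating exp(x_i) / sum_j exp(x_j) directly overflows for large logits. The sketch below uses hypothetical extreme logits to contrast a direct transcription of the formula with the common max-subtraction version, which computes the same function. It also shows how a large gap between logits drives the smaller class's probability to effectively zero.

```python
import math
from typing import List, Sequence


def naive_softmax(logits: Sequence[float]) -> List[float]:
    # Direct transcription of exp(x_i) / sum_j exp(x_j).
    exps = [math.exp(x) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def stable_softmax(logits: Sequence[float]) -> List[float]:
    # Same function, evaluated after subtracting the largest logit.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


large = [1000.0, 999.0, 10.0]  # hypothetical extreme logits

try:
    naive_softmax(large)
except OverflowError as err:
    # math.exp(1000.0) exceeds the largest representable float.
    print("naive softmax failed:", err)

print([round(p, 4) for p in stable_softmax(large)])  # [0.7311, 0.2689, 0.0]
```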

Practical Examples Comparing Argmax and Softmax

Argmax selects the index of the highest value in a set, making it ideal for classification tasks that need a single definitive prediction, such as image recognition or spam detection. Softmax converts raw scores into probabilities that sum to one. This is crucial in multi-class problems such as language modeling, where the network's output layer must assign a probability to every class. In practice, argmax gives crisp category assignments, while softmax provides the confidence levels needed for probabilistic reasoning and for gradient-based training.
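The sketch below shows how the two are typically combined at inference, using the spam-detection example with hypothetical logits and label names. Because exp is strictly increasing and every entry shares the same denominator, softmax preserves the ordering of the logits. Argmax of the probabilities therefore equals argmax of the logits, up to floating-point rounding in extreme near-ties. Softmax is needed only when the probabilities themselves are wanted.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # max-shift for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values: Sequence[float]) -> int:
    return max(range(len(values)), key=lambda i: values[i])


CLASSES = ["not_spam", "spam"]  # hypothetical label names

# Hypothetical logits for three messages from some binary classifier.
batch = [[0.3, 2.1], [1.8, -0.4], [0.05, 0.0]]

for logits in batch:
    probs = softmax(logits)
    label = CLASSES[argmax(probs)]
    # softmax preserves ordering, so argmax on logits gives the same label.
    assert argmax(logits) == argmax(probs)
    print(label, [round(p, 3) for p in probs])
```

The third message shows why keeping the probabilities can matter. Argmax labels it `not_spam`, but softmax reveals that the decision rests on a near coin flip of roughly 51% to 49%.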

techiny.com