Parametric machine learning models rely on a fixed number of parameters to make predictions, offering faster training and easier interpretation but limited flexibility with complex data patterns. Non-parametric models adapt their complexity based on the data size, providing greater flexibility and accuracy for diverse datasets at the cost of increased computational resources and potential overfitting. Choosing between parametric and non-parametric methods depends on the trade-offs between model complexity, interpretability, and the nature of the data distribution.

Table of Comparison

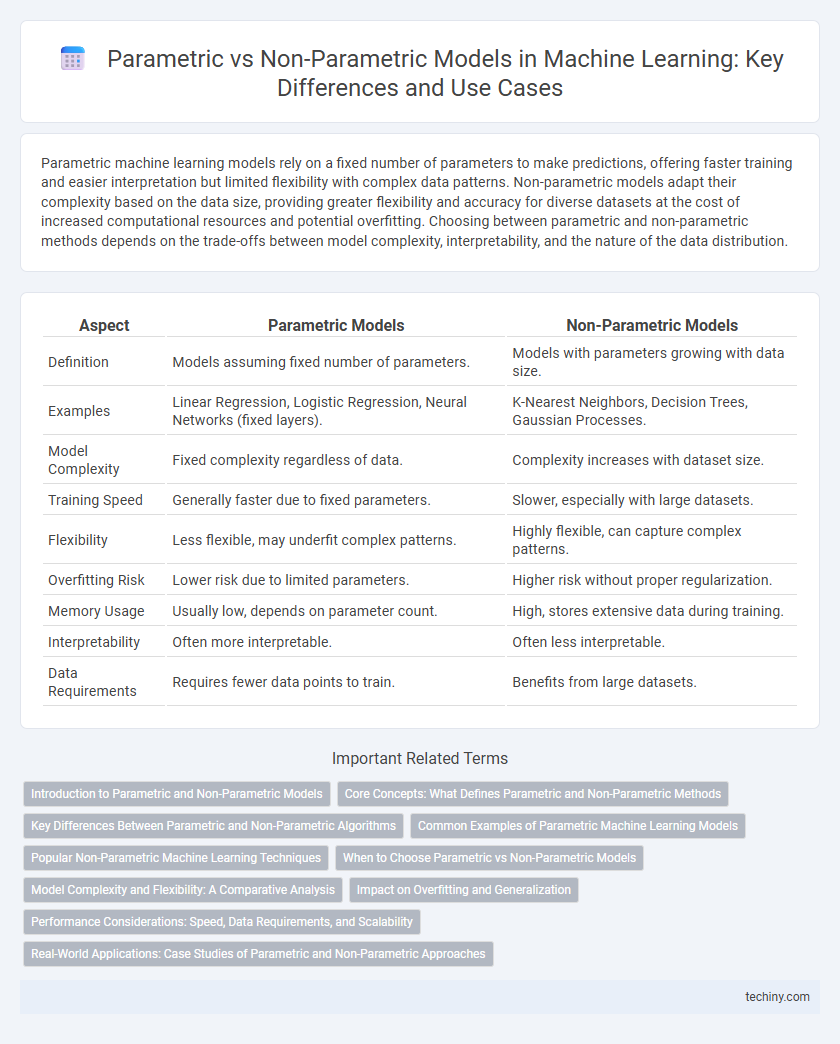

| Aspect | Parametric Models | Non-Parametric Models |

|---|---|---|

| Definition | Models assuming fixed number of parameters. | Models with parameters growing with data size. |

| Examples | Linear Regression, Logistic Regression, Neural Networks (fixed layers). | K-Nearest Neighbors, Decision Trees, Gaussian Processes. |

| Model Complexity | Fixed complexity regardless of data. | Complexity increases with dataset size. |

| Training Speed | Generally faster due to fixed parameters. | Slower, especially with large datasets. |

| Flexibility | Less flexible, may underfit complex patterns. | Highly flexible, can capture complex patterns. |

| Overfitting Risk | Lower risk due to limited parameters. | Higher risk without proper regularization. |

| Memory Usage | Usually low, depends on parameter count. | High, stores extensive data during training. |

| Interpretability | Often more interpretable. | Often less interpretable. |

| Data Requirements | Requires fewer data points to train. | Benefits from large datasets. |

Introduction to Parametric and Non-Parametric Models

Parametric models in machine learning rely on a fixed number of parameters to represent data patterns, offering simplicity and faster training but limited flexibility. Non-parametric models adapt their complexity based on the dataset size, enabling them to capture intricate relationships without assuming a predetermined form. Understanding the distinction between parametric and non-parametric models is essential for selecting the appropriate algorithm tailored to specific problem structures and data characteristics.

Core Concepts: What Defines Parametric and Non-Parametric Methods

Parametric methods in machine learning involve models characterized by a fixed number of parameters, such as linear regression or logistic regression, where the model's form is predetermined and summarized by a finite set of parameters. Non-parametric methods, including k-nearest neighbors and decision trees, do not assume a fixed number of parameters but instead adapt their complexity based on the dataset size, allowing for more flexible modeling of complex patterns. The core distinction lies in the parametric approach's reliance on predefined parameter structures versus non-parametric methods' capacity to grow and change with data, impacting model bias, variance, and scalability.

Key Differences Between Parametric and Non-Parametric Algorithms

Parametric algorithms rely on a fixed number of parameters to model data distributions, allowing faster training and requiring less memory, while non-parametric algorithms adapt their complexity based on the amount of data, offering greater flexibility and accuracy for complex patterns. Parametric methods, such as linear regression and logistic regression, assume a specific functional form, leading to simpler models but potential bias when assumptions are violated. Non-parametric techniques, including k-nearest neighbors and decision trees, do not assume an explicit form, enabling them to capture nonlinear relationships but often at the cost of higher computational resources and risk of overfitting.

Common Examples of Parametric Machine Learning Models

Parametric machine learning models include Linear Regression, Logistic Regression, and Support Vector Machines (SVM) with a linear kernel, which rely on a fixed number of parameters regardless of data size. These models assume a predetermined form and use parameters such as weights and biases to capture relationships within the input data. Neural networks with a defined architecture also fall under parametric models, optimizing a finite set of parameters through training.

Popular Non-Parametric Machine Learning Techniques

Popular non-parametric machine learning techniques include Decision Trees, k-Nearest Neighbors (k-NN), and Support Vector Machines (SVM) with kernel methods, which do not assume a fixed form for the underlying data distribution. These models adapt to the complexity of the data by increasing their capacity with more training samples, providing flexibility in capturing intricate patterns. Non-parametric methods excel in scenarios with heterogeneous data and complex decision boundaries, making them widely used in classification and regression tasks.

When to Choose Parametric vs Non-Parametric Models

Parametric models are suitable when the underlying data distribution is known or can be approximated with a fixed number of parameters, enabling faster training and simpler interpretation. Non-parametric models are preferred for complex or unknown data distributions, as they can adapt flexibly to the data without assuming a specific form, though they often require more computational resources and larger datasets. Choosing between parametric and non-parametric models depends on dataset size, model interpretability needs, and the complexity of the underlying patterns.

Model Complexity and Flexibility: A Comparative Analysis

Parametric models in machine learning rely on a fixed number of parameters, which limits their complexity and makes them computationally efficient but less flexible in capturing intricate data patterns. Non-parametric models do not assume a predefined form and can adapt their complexity based on the data, allowing them to model complex relationships more accurately at the cost of higher computational demand. This flexibility in non-parametric models often leads to improved performance on diverse datasets, especially when the underlying data structure is unknown or highly variable.

Impact on Overfitting and Generalization

Parametric models in machine learning, such as linear regression and logistic regression, have a fixed number of parameters that limit model complexity and reduce overfitting risk, but may underfit complex data patterns. Non-parametric models, including k-nearest neighbors and decision trees, adapt complexity based on training data size, enhancing flexibility and capturing intricate relationships but increasing overfitting potential if not properly regularized. Balancing bias and variance in parametric versus non-parametric approaches is crucial for achieving optimal generalization on unseen data.

Performance Considerations: Speed, Data Requirements, and Scalability

Parametric models in machine learning typically offer faster training and prediction speeds due to a fixed number of parameters, making them well-suited for large datasets with lower computational costs. Non-parametric models require more data to achieve high accuracy as their complexity grows with dataset size, often resulting in slower training and prediction times but greater flexibility in capturing complex patterns. Scalability favors parametric methods for real-time applications, while non-parametric approaches are preferred when model adaptability and accuracy over diverse data distributions are critical.

Real-World Applications: Case Studies of Parametric and Non-Parametric Approaches

Parametric models like linear regression and logistic regression excel in scenarios with fixed feature sets and large datasets, demonstrated by their use in credit scoring and spam detection where interpretability and computational efficiency are crucial. Non-parametric methods such as k-nearest neighbors, decision trees, and Gaussian processes thrive in complex, high-dimensional spaces like image recognition and bioinformatics, where they adapt flexibly to data distributions without assuming a predefined form. Case studies in healthcare diagnostics illustrate non-parametric models outperforming parametric counterparts due to their ability to capture nuanced patterns in heterogeneous patient data.

Parametric vs Non-Parametric Infographic

techiny.com

techiny.com