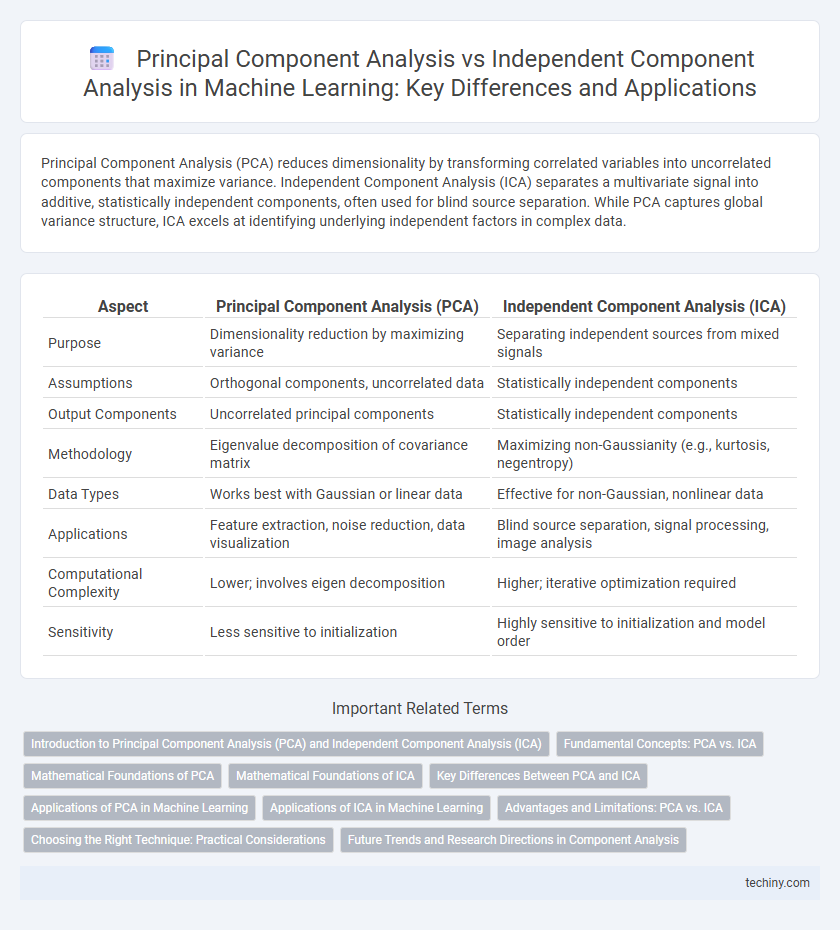

Principal Component Analysis (PCA) reduces dimensionality by transforming correlated variables into uncorrelated components that maximize variance. Independent Component Analysis (ICA) separates a multivariate signal into additive, statistically independent components, often used for blind source separation. While PCA captures global variance structure, ICA excels at identifying underlying independent factors in complex data.

Table of Comparison

| Aspect | Principal Component Analysis (PCA) | Independent Component Analysis (ICA) |

|---|---|---|

| Purpose | Dimensionality reduction by maximizing variance | Separating independent sources from mixed signals |

| Assumptions | Orthogonal components, uncorrelated data | Statistically independent components |

| Output Components | Uncorrelated principal components | Statistically independent components |

| Methodology | Eigenvalue decomposition of covariance matrix | Maximizing non-Gaussianity (e.g., kurtosis, negentropy) |

| Data Types | Works best with Gaussian or linear data | Effective for non-Gaussian, nonlinear data |

| Applications | Feature extraction, noise reduction, data visualization | Blind source separation, signal processing, image analysis |

| Computational Complexity | Lower; involves eigen decomposition | Higher; iterative optimization required |

| Sensitivity | Less sensitive to initialization | Highly sensitive to initialization and model order |

Introduction to Principal Component Analysis (PCA) and Independent Component Analysis (ICA)

Principal Component Analysis (PCA) reduces data dimensionality by transforming correlated variables into a set of orthogonal components capturing maximum variance. Independent Component Analysis (ICA) separates a multivariate signal into additive, statistically independent non-Gaussian components, often used in blind source separation. PCA emphasizes variance maximization and decorrelation, whereas ICA focuses on statistical independence and higher-order statistics for feature extraction.

Fundamental Concepts: PCA vs. ICA

Principal Component Analysis (PCA) reduces dimensionality by identifying orthogonal axes that maximize variance, capturing uncorrelated components. Independent Component Analysis (ICA) separates a multivariate signal into additive, statistically independent non-Gaussian components, focusing on source signal recovery. PCA emphasizes decorrelation and variance maximization, whereas ICA prioritizes statistical independence for blind source separation.

Mathematical Foundations of PCA

Principal Component Analysis (PCA) is grounded in linear algebra, specifically eigenvalue decomposition of the covariance matrix, which identifies orthogonal axes (principal components) capturing maximum variance in data. By projecting data onto these components, PCA reduces dimensionality while preserving as much variability as possible. This mathematical foundation enables efficient noise reduction and data compression, distinguishing PCA from Independent Component Analysis (ICA), which relies on higher-order statistics to find statistically independent sources.

Mathematical Foundations of ICA

Independent Component Analysis (ICA) relies on the mathematical foundation of maximizing statistical independence through higher-order statistics such as kurtosis or negentropy, contrasting Principal Component Analysis (PCA), which is based on second-order statistics and variance maximization. ICA models the observed multivariate data as linear combinations of statistically independent non-Gaussian source signals, utilizing techniques like the FastICA algorithm to estimate the unmixing matrix. The core mathematical principle behind ICA involves minimizing mutual information or maximizing non-Gaussianity to uncover underlying independent components, making it effective for blind source separation tasks.

Key Differences Between PCA and ICA

Principal Component Analysis (PCA) identifies orthogonal components that maximize variance, optimizing data dimensionality by decorrelating features, while Independent Component Analysis (ICA) separates statistically independent non-Gaussian sources, focusing on higher-order statistics beyond variance. PCA assumes Gaussian data and linear uncorrelated components, whereas ICA targets uncovering latent variables with non-Gaussian distributions and statistical independence. PCA is primarily used for dimensionality reduction and noise filtering, while ICA excels in blind source separation and feature extraction in signals.

Applications of PCA in Machine Learning

Principal Component Analysis (PCA) is widely used in machine learning for dimensionality reduction, enabling efficient processing of high-dimensional data by transforming it into a lower-dimensional space while preserving variance. PCA enhances the performance of algorithms such as classification, clustering, and regression by removing noise and reducing computational complexity. Its applications include feature extraction for image recognition, gene expression analysis, and anomaly detection in large datasets.

Applications of ICA in Machine Learning

Independent Component Analysis (ICA) is widely used in machine learning for blind source separation, where mixed signals such as audio or images are decomposed into statistically independent components. Its applications include feature extraction, noise reduction, and anomaly detection, particularly in areas like speech recognition, medical imaging, and financial data analysis. ICA's ability to identify underlying factors without prior knowledge makes it vital for unsupervised learning tasks involving complex, multidimensional datasets.

Advantages and Limitations: PCA vs. ICA

Principal Component Analysis (PCA) efficiently reduces dimensionality by capturing maximum variance through orthogonal linear components, which simplifies data visualization and speeds up algorithms, but it assumes Gaussian data and may lose interpretability due to uncorrelated but not necessarily independent components. Independent Component Analysis (ICA) excels at separating statistically independent and non-Gaussian signals, making it ideal for tasks like blind source separation, though it requires more computational resources and relies on assumptions about source independence that may not hold in all datasets. While PCA is generally faster and more robust for noise reduction, ICA offers superior feature extraction for complex, mixed-source signals, presenting a trade-off between computational efficiency and interpretability.

Choosing the Right Technique: Practical Considerations

Choosing between Principal Component Analysis (PCA) and Independent Component Analysis (ICA) depends on the data structure and the specific goals of the machine learning task. PCA is optimal for dimensionality reduction when the objective is to capture the maximum variance through orthogonal linear components, making it effective for noise reduction and feature extraction in correlated datasets. ICA excels in separating independent sources from mixed signals, making it suitable for applications such as blind source separation and feature independence in signal processing contexts.

Future Trends and Research Directions in Component Analysis

Emerging research in component analysis emphasizes integration of deep learning with Principal Component Analysis (PCA) and Independent Component Analysis (ICA) to enhance feature extraction from complex datasets. Advances in nonlinear and kernel-based extensions of PCA and ICA aim to improve performance in high-dimensional, non-Gaussian scenarios prevalent in big data and bioinformatics. Future trends also highlight the development of scalable algorithms for real-time processing and interpretability to address challenges in automated decision-making systems.

principal component analysis vs independent component analysis Infographic

techiny.com

techiny.com