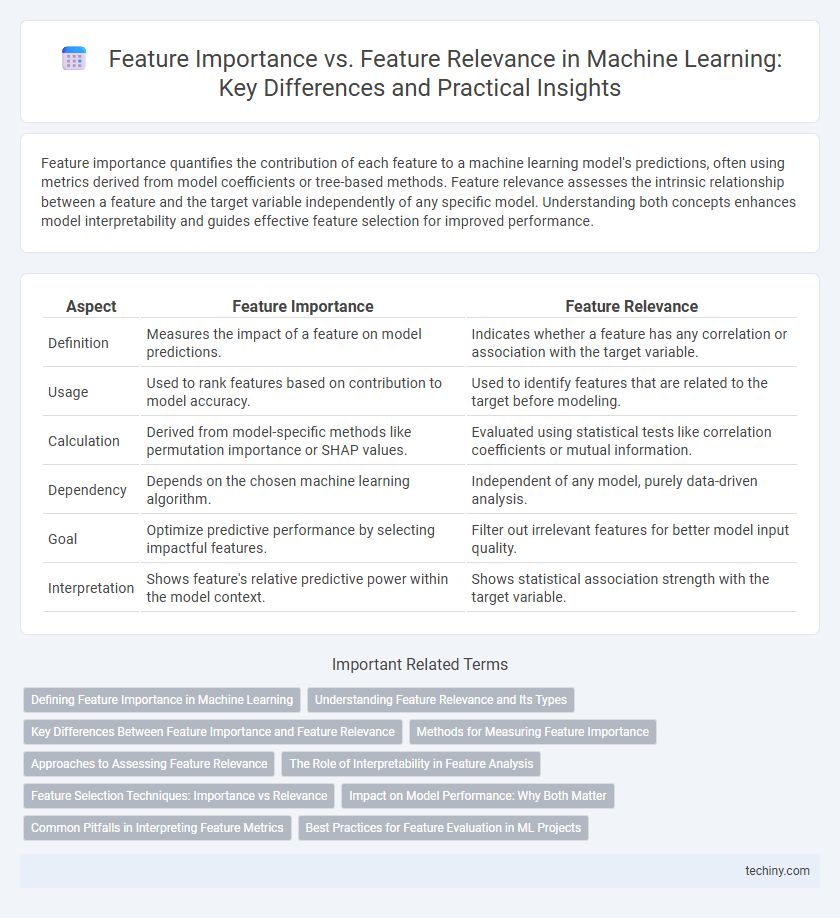

Feature importance quantifies the contribution of each feature to a machine learning model's predictions, often using metrics derived from model coefficients or tree-based methods. Feature relevance assesses the intrinsic relationship between a feature and the target variable independently of any specific model. Understanding both concepts enhances model interpretability and guides effective feature selection for improved performance.

Table of Comparison

| Aspect | Feature Importance | Feature Relevance |
|---|---|---|
| Definition | Measures the impact of a feature on model predictions. | Indicates whether a feature has any correlation or association with the target variable. |
| Usage | Used to rank features based on contribution to model accuracy. | Used to identify features that are related to the target before modeling. |
| Calculation | Computed from a trained model, for example with coefficients, permutation importance, or SHAP values. | Evaluated using statistical measures like correlation coefficients or mutual information. |
| Dependency | Depends on the chosen machine learning algorithm. | Independent of any model, purely data-driven analysis. |
| Goal | Optimize predictive performance by selecting impactful features. | Filter out irrelevant features for better model input quality. |
| Interpretation | Shows a feature's relative predictive power within the model context. | Shows the strength of statistical association with the target variable. |

Defining Feature Importance in Machine Learning

Feature importance in machine learning quantifies the impact of individual features on model predictions by measuring how much each feature contributes to reducing prediction error. Techniques such as permutation importance, SHAP values, and feature weights in linear models help assign numerical scores that rank features based on their influence within the trained model. Understanding feature importance aids in model interpretability, feature selection, and improving model performance.
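
As a minimal sketch of the "feature weights in linear models" idea, the snippet below fits an ordinary least-squares model with NumPy and ranks features by the absolute value of their standardized coefficients. The synthetic data, the true coefficients (3.0, 0.5, 0), and all variable names are assumptions made for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500
X = rng.normal(size=(n, 3))
# Assumed synthetic target: x0 has a strong effect, x1 a weak one, x2 none.
y = 3.0 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.1, size=n)

# Standardize the features so coefficient magnitudes are comparable.
X_std = (X - X.mean(axis=0)) / X.std(axis=0)

# Append an intercept column and solve the least-squares problem.
A = np.column_stack([X_std, np.ones(n)])
coef, *_ = np.linalg.lstsq(A, y, rcond=None)

weights = coef[:3]                        # drop the intercept
importance = np.abs(weights)              # magnitude as importance score
ranking = np.argsort(importance)[::-1]    # most important first

print("importance:", importance)
print("ranking:", ranking)
```

Coefficient magnitudes are only comparable when the features share a scale, which is why the sketch standardizes them first.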

Understanding Feature Relevance and Its Types

Feature relevance refers to the degree to which a feature carries information about the target variable, and therefore how necessary it is for accurate predictions. It is commonly divided into three types: strongly relevant features, which are essential and cannot be removed without loss of information; weakly relevant features, which carry useful information that other features also provide, so they matter only when those other features are absent; and irrelevant features, which carry no information about the target. Understanding these distinctions helps in effective feature selection and improves model interpretability and performance.
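
The three types can be illustrated with a small synthetic experiment. This is a sketch under stated assumptions, not a formal test: the data is made up, `x2` is an exact copy of `x1`, and the in-sample R² of a least-squares fit (the hypothetical helper `r2`) stands in for "information about the target".

```python
import numpy as np

def r2(X, y):
    """In-sample R^2 of a least-squares fit with an intercept."""
    A = np.column_stack([X, np.ones(len(y))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    return 1.0 - resid.var() / y.var()

rng = np.random.default_rng(0)
n = 1000
x0 = rng.normal(size=n)      # unique signal
x1 = rng.normal(size=n)      # signal that is duplicated below
x2 = x1.copy()               # exact duplicate of x1 (assumed redundancy)
x3 = rng.normal(size=n)      # unrelated to the target
y = x0 + x1 + rng.normal(scale=0.1, size=n)
X = np.column_stack([x0, x1, x2, x3])

full = r2(X, y)
# Loss in R^2 when a single feature is removed.
drop = {j: full - r2(np.delete(X, j, axis=1), y) for j in range(4)}
# Loss in R^2 when both copies of the duplicated signal are removed.
drop_both = full - r2(X[:, [0, 3]], y)

print("loss per removed feature:", drop)
print("loss when x1 and x2 are both removed:", drop_both)
```

In this setup, removing `x0` hurts the fit on its own (strongly relevant), removing `x1` or `x2` alone costs nothing while removing both does (weakly relevant), and removing `x3` changes almost nothing (irrelevant).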

Key Differences Between Feature Importance and Feature Relevance

Feature importance quantifies the contribution of each feature to the predictive accuracy of a machine learning model, often derived from metrics computed on a trained model, such as Gini importance or SHAP values. Feature relevance assesses the inherent significance of a feature in relation to the target variable independent of any model, typically measured using statistical tests or correlation coefficients. The key difference is that feature importance is model-dependent and context-specific, while feature relevance provides a broader, model-agnostic indication of a feature's potential utility.

Methods for Measuring Feature Importance

Methods for measuring feature importance in machine learning include permutation importance, which assesses the impact of feature shuffling on model accuracy, and SHAP (SHapley Additive exPlanations) values, which provide a unified measure by attributing contributions to individual features based on cooperative game theory. Another widely used technique is the feature importance metric from tree-based models like Random Forest and Gradient Boosting, which quantifies feature impact through split criteria improvements. These methods enable practitioners to identify influential features, optimize model performance, and enhance interpretability.
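
Two of these methods can be compared side by side with scikit-learn. The sketch below assumes scikit-learn is installed and uses made-up data in which only `x0` drives the target; it reads the impurity-based scores from a random forest and computes permutation importance on a held-out split. SHAP values are left out because they need a separate library.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(600, 3))
# Assumed synthetic target: only x0 matters.
y = 5.0 * X[:, 0] + rng.normal(scale=0.5, size=600)

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
model = RandomForestRegressor(n_estimators=100, random_state=0)
model.fit(X_train, y_train)

# Impurity-based importance: split-criterion improvement, normalized to sum to 1.
impurity_scores = model.feature_importances_

# Permutation importance: drop in the model's score when one column is shuffled.
perm = permutation_importance(
    model, X_test, y_test, n_repeats=10, random_state=0
)
perm_scores = perm.importances_mean

print("impurity-based:", impurity_scores)
print("permutation:   ", perm_scores)
```

Computing permutation importance on held-out data rather than the training set is a design choice here: it measures what the model needs in order to generalize, not what it memorized.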

Approaches to Assessing Feature Relevance

Approaches to assessing feature relevance in machine learning include filter methods, wrapper methods, and embedded methods, each offering distinct advantages in evaluating the impact of input variables. Filter methods rely on statistical measures such as correlation coefficients or mutual information to rank features independently of any model, providing quick insights into feature relevance. Wrapper methods evaluate subsets of features by training and testing a specific model and keep the subset that scores best, so their verdict depends on that model. Embedded methods integrate the assessment into model training itself, as in regularization techniques like Lasso or tree-based importance scores.
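
The three families can be sketched with scikit-learn on one synthetic dataset. Everything specific here is an assumption for illustration: the data (only `x0` and `x1` drive the target), the choice of mutual information for the filter, recursive feature elimination as the wrapper, and `alpha=0.1` for Lasso.

```python
import numpy as np
from sklearn.feature_selection import RFE, mutual_info_regression
from sklearn.linear_model import Lasso, LinearRegression

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 5))
# Assumed synthetic target: x0 and x1 carry signal, x2..x4 are noise.
y = 4.0 * X[:, 0] - 3.0 * X[:, 1] + rng.normal(scale=0.1, size=1000)

# Filter: score each feature against the target without fitting a predictor.
mi = mutual_info_regression(X, y, random_state=0)
top2_filter = set(np.argsort(mi)[-2:].tolist())

# Wrapper: repeatedly fit a model and discard the weakest feature.
rfe = RFE(estimator=LinearRegression(), n_features_to_select=2).fit(X, y)
kept_wrapper = rfe.support_.tolist()

# Embedded: L1 regularization shrinks unhelpful coefficients to exactly zero.
lasso = Lasso(alpha=0.1).fit(X, y)
kept_embedded = np.flatnonzero(lasso.coef_).tolist()

print("filter (mutual information):", sorted(top2_filter))
print("wrapper (RFE mask):", kept_wrapper)
print("embedded (Lasso nonzero):", kept_embedded)
```

On clean data like this the three approaches agree; they tend to diverge when features interact or are correlated, which is where the choice between them starts to matter.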

The Role of Interpretability in Feature Analysis

Feature importance quantifies the contribution of each feature to a machine learning model's predictive performance, while feature relevance indicates the intrinsic connection between a feature and the target variable. Interpretability plays a critical role in feature analysis by enabling the understanding of how features influence predictions, facilitating model transparency and trust. Techniques such as SHAP values and permutation importance provide interpretable metrics that help distinguish meaningful features from noisy or redundant ones in complex models.

Feature Selection Techniques: Importance vs Relevance

Feature importance quantifies the contribution of each feature to a predictive model's accuracy, often derived from methods like permutation importance or SHAP values, while feature relevance assesses a feature's inherent relationship with the target variable, typically through statistical measures such as correlation or mutual information. Feature selection techniques leveraging importance prioritize features based on their impact on model performance, whereas relevance-based methods focus on features demonstrating strong individual associations with the outcome, regardless of model context. Combining importance and relevance allows for more robust feature selection by capturing both predictive contribution and intrinsic relevance to the target.
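
A small experiment shows why the two views are worth combining. In this sketch the target is a pure interaction, `y = x0 * x1`, which is an assumption chosen to make the point: Pearson correlation, one simple relevance measure, sees almost nothing in any single feature, while the permutation importance of a fitted random forest picks up both interacting features. scikit-learn is assumed to be available.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(2000, 3))
# Assumed synthetic target: pure interaction of x0 and x1; x2 is unused.
y = X[:, 0] * X[:, 1]

# Relevance view: absolute Pearson correlation of each feature with y.
relevance = np.array(
    [abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])]
)

# Importance view: permutation importance of a fitted model on held-out data.
X_tr, X_te, y_tr, y_te = train_test_split(X, y, random_state=0)
model = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_tr, y_tr)
importance = permutation_importance(
    model, X_te, y_te, n_repeats=10, random_state=0
).importances_mean

print("abs. correlation:      ", relevance)
print("permutation importance:", importance)
```

A filter based only on linear correlation would have discarded `x0` and `x1` here, so a disagreement between the two views is a signal to look closer rather than a reason to trust either one alone.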

Impact on Model Performance: Why Both Matter

Feature importance quantifies the impact of individual features on a machine learning model's predictive power, guiding feature selection and model interpretability. Feature relevance indicates whether a feature contains useful information for the target variable, which influences the underlying model's ability to learn patterns. Both concepts matter because relevant features may not always have high immediate importance due to interactions and redundancy, while important features directly affect model performance and reliability.

Common Pitfalls in Interpreting Feature Metrics

Confusing feature importance with feature relevance often leads to misinterpretation of model insights, because importance measures can reflect algorithm biases rather than true predictive power; impurity-based tree importances, for example, tend to favor features with many distinct values. Techniques like permutation importance can be misleading when features are correlated, causing overestimation or underestimation of feature significance. Cross-checking with complementary methods such as SHAP values or partial dependence plots helps avoid these pitfalls and gives a more robust feature assessment.
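
The correlated-feature pitfall can be reproduced in a few lines of NumPy. This is a hand-rolled sketch, not a library implementation: `ridge_fit` and `permutation_importance_mse` are hypothetical helpers, the data is synthetic, and the "correlated" feature is an exact duplicate to make the effect as visible as possible.

```python
import numpy as np

def ridge_fit(X, y, lam=1.0):
    """Closed-form ridge weights (no intercept; the data is roughly zero-mean)."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

def permutation_importance_mse(X, y, w, rng, n_repeats=20):
    """Mean increase in MSE when each column is shuffled in turn."""
    base = np.mean((y - X @ w) ** 2)
    scores = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        for _ in range(n_repeats):
            Xp = X.copy()
            Xp[:, j] = rng.permutation(Xp[:, j])
            scores[j] += np.mean((y - Xp @ w) ** 2) - base
    return scores / n_repeats

rng = np.random.default_rng(0)
n = 1000
x0 = rng.normal(size=n)
noise_col = rng.normal(size=n)
# Assumed synthetic target: depends on x0 only.
y = 2.0 * x0 + rng.normal(scale=0.1, size=n)

X_alone = np.column_stack([x0, noise_col])
X_dup = np.column_stack([x0, x0.copy(), noise_col])  # column 1 duplicates x0

imp_alone = permutation_importance_mse(X_alone, y, ridge_fit(X_alone, y), rng)
imp_dup = permutation_importance_mse(X_dup, y, ridge_fit(X_dup, y), rng)

print("x0 importance without duplicate:", imp_alone[0])
print("x0 importance with duplicate:   ", imp_dup[0])
```

The relationship between `x0` and the target is identical in both runs, yet its score falls sharply once a duplicate is present, because the model splits the weight between the two copies and shuffling one leaves the other intact.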

Best Practices for Feature Evaluation in ML Projects

Feature importance quantifies the contribution of each input variable to a machine learning model's predictions, whereas feature relevance identifies variables correlated with the target outcome regardless of model context. Best practices for feature evaluation include using model-agnostic methods like permutation importance and SHAP values to assess importance, combined with domain knowledge to verify relevance. Cross-validating feature selection results and avoiding multicollinearity improve model interpretability and performance by ensuring that truly significant features are retained.
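
One way to cross-validate feature selection is to put the selection step inside a scikit-learn `Pipeline`, so that it is refit on each training fold and never sees the validation fold. The sketch below assumes scikit-learn is installed; the synthetic data, the univariate F-test filter, and `k=2` are illustrative choices.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 10))
# Assumed synthetic target: x0 and x1 carry signal, the other eight are noise.
y = 4.0 * X[:, 0] - 3.0 * X[:, 1] + rng.normal(scale=0.5, size=500)

pipe = Pipeline([
    ("select", SelectKBest(score_func=f_regression, k=2)),  # filter step
    ("model", LinearRegression()),
])

# Selection is refit inside every fold, so the scores are not optimistic.
scores = cross_val_score(pipe, X, y, cv=5, scoring="r2")

# Refit on all data to inspect which columns the filter keeps.
selected = pipe.fit(X, y).named_steps["select"].get_support(indices=True)

print("fold R^2:", scores)
print("selected columns:", selected)
```

Selecting features on the full dataset before splitting would leak information from the validation folds into the selection step, which is the mistake this arrangement avoids.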

Feature Importance vs Feature Relevance Infographic

techiny.com
