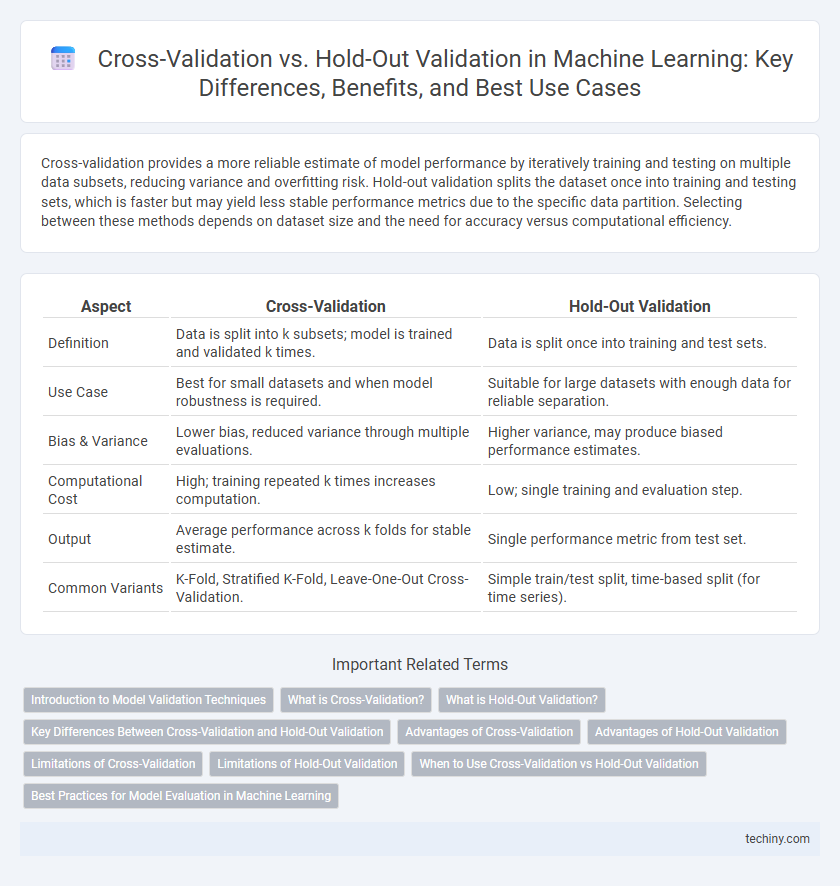

Cross-validation provides a more reliable estimate of model performance by iteratively training and testing on multiple data subsets, reducing variance and overfitting risk. Hold-out validation splits the dataset once into training and testing sets, which is faster but may yield less stable performance metrics due to the specific data partition. Selecting between these methods depends on dataset size and the need for accuracy versus computational efficiency.

Table of Comparison

| Aspect | Cross-Validation | Hold-Out Validation |

|---|---|---|

| Definition | Data is split into k subsets; model is trained and validated k times. | Data is split once into training and test sets. |

| Use Case | Best for small datasets and when model robustness is required. | Suitable for large datasets with enough data for reliable separation. |

| Bias & Variance | Lower bias, reduced variance through multiple evaluations. | Higher variance, may produce biased performance estimates. |

| Computational Cost | High; training repeated k times increases computation. | Low; single training and evaluation step. |

| Output | Average performance across k folds for stable estimate. | Single performance metric from test set. |

| Common Variants | K-Fold, Stratified K-Fold, Leave-One-Out Cross-Validation. | Simple train/test split, time-based split (for time series). |

Introduction to Model Validation Techniques

Cross-validation and hold-out validation are essential model validation techniques in machine learning that assess model performance and generalization. Cross-validation, especially k-fold cross-validation, provides more reliable evaluation by partitioning data into multiple subsets, training on some while validating on others, reducing bias and variance in performance estimates. Hold-out validation, involving a single split of the data into training and testing sets, offers faster evaluation but may result in higher variance and less stable performance metrics due to the dependency on the chosen split.

What is Cross-Validation?

Cross-validation is a robust statistical method used in machine learning to evaluate model performance by partitioning the dataset into multiple subsets or folds. Each fold is used as a testing set while the remaining folds serve as the training set, ensuring that every data point is used for both training and validation. This technique reduces overfitting risk and provides a more reliable estimate of the model's generalization ability compared to single hold-out validation.

What is Hold-Out Validation?

Hold-Out Validation is a technique in machine learning where the dataset is randomly split into two distinct subsets: one for training the model and the other for testing its performance. This method provides a straightforward evaluation by reserving a portion of the data, typically 20-30%, as a hold-out test set to estimate how the model generalizes to unseen data. Its simplicity and speed make it suitable for large datasets but can lead to higher variance in performance estimates compared to more robust methods like cross-validation.

Key Differences Between Cross-Validation and Hold-Out Validation

Cross-validation divides the dataset into multiple folds, ensuring that each subset is used both for training and testing, which provides a more reliable estimate of model performance. Hold-out validation splits the data once into separate training and testing sets, which can be faster but may produce higher variance in performance estimates. Cross-validation is generally preferred for smaller datasets due to its robustness, while hold-out is simpler and computationally efficient for very large datasets.

Advantages of Cross-Validation

Cross-validation offers more reliable model performance estimates by utilizing multiple train-test splits, reducing the risk of overfitting to a single hold-out set. It maximizes the use of limited data, providing a comprehensive evaluation across various subsets, which enhances the generalizability of machine learning models. This technique effectively balances bias and variance, resulting in robust selection and tuning of hyperparameters compared to hold-out validation.

Advantages of Hold-Out Validation

Hold-out validation offers simplicity and speed by splitting the dataset into distinct training and testing subsets, making it computationally efficient for large datasets. This method reduces the risk of data leakage by keeping the test set completely separate from the training process. Hold-out validation is particularly advantageous for quick model evaluation and hyperparameter tuning in iterative machine learning workflows.

Limitations of Cross-Validation

Cross-validation, while effective for assessing model performance, can be computationally intensive, especially with large datasets or complex algorithms like deep learning. It may also lead to optimistic bias if the data is not independently and identically distributed, diminishing its reliability in time-series or sequential data contexts. Furthermore, improper stratification during cross-validation can cause class imbalance issues, affecting the generalizability of the machine learning model.

Limitations of Hold-Out Validation

Hold-out validation often suffers from high variance due to its dependence on a single split of the dataset, which can lead to unreliable performance estimates. This method may also result in biased evaluation if the training and test sets are not representative of the overall data distribution. Consequently, hold-out validation is less suitable for small datasets or when precise model assessment is critical, compared to methods like cross-validation.

When to Use Cross-Validation vs Hold-Out Validation

Cross-validation is preferred when working with limited datasets, as it maximizes data utilization by partitioning the data into multiple training and validation folds, providing a more reliable estimate of model performance. Hold-out validation suits larger datasets where an independent test set can be maintained, offering faster evaluation and simplicity without the computational overhead of multiple training iterations. For models requiring fine-tuning or selection among many hyperparameters, cross-validation reduces variance and bias, while hold-out validation is efficient for quick assessments or when computational resources are constrained.

Best Practices for Model Evaluation in Machine Learning

Cross-validation, especially k-fold cross-validation, offers a more reliable estimate of model performance by repeatedly training and testing on different data subsets, reducing variance and overfitting risks compared to hold-out validation. Hold-out validation involves a single split of the dataset into training and testing sets, which can lead to biased performance estimates if the split is not representative or if the dataset is small. Best practices recommend using cross-validation for robust model evaluation, particularly in scenarios with limited data, while hold-out validation may be suitable for quick preliminary assessments or very large datasets.

Cross-Validation vs Hold-Out Validation Infographic

techiny.com

techiny.com