The curse of dimensionality refers to the exponential increase in data sparsity as the number of features grows, making model training and generalization challenging. The bias-variance tradeoff involves balancing model complexity to minimize errors from underfitting (high bias) and overfitting (high variance). Effective machine learning requires managing high-dimensional data while optimizing this tradeoff to achieve robust predictive performance.

Table of Comparison

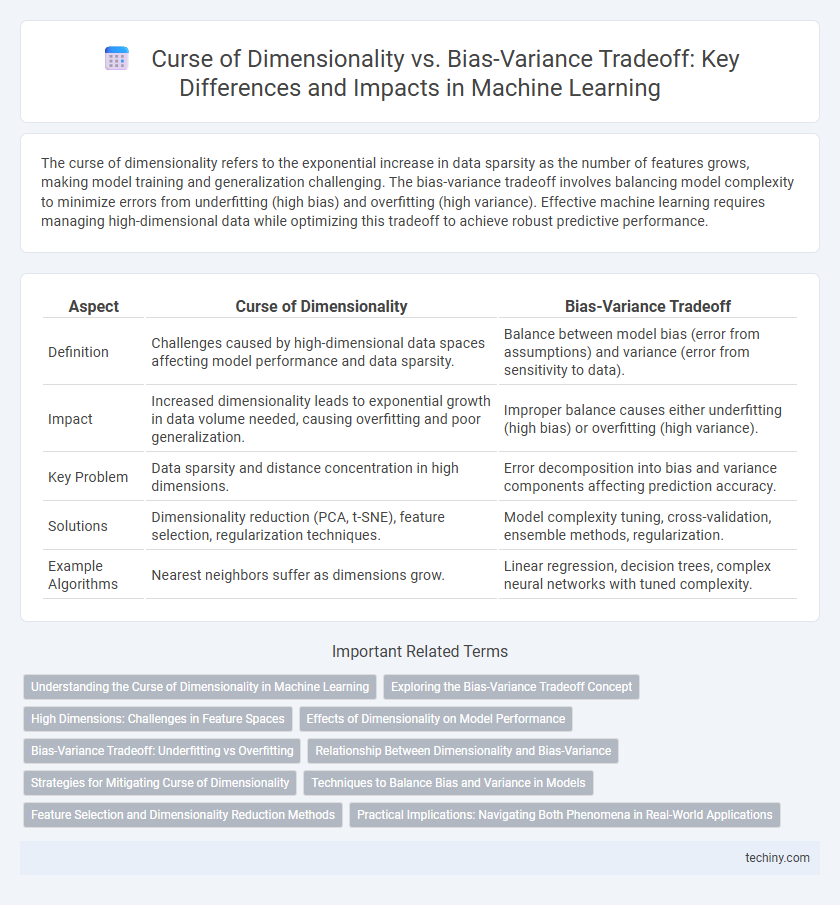

| Aspect | Curse of Dimensionality | Bias-Variance Tradeoff |

|---|---|---|

| Definition | Challenges caused by high-dimensional data spaces affecting model performance and data sparsity. | Balance between model bias (error from assumptions) and variance (error from sensitivity to data). |

| Impact | Increased dimensionality leads to exponential growth in data volume needed, causing overfitting and poor generalization. | Improper balance causes either underfitting (high bias) or overfitting (high variance). |

| Key Problem | Data sparsity and distance concentration in high dimensions. | Error decomposition into bias and variance components affecting prediction accuracy. |

| Solutions | Dimensionality reduction (PCA, t-SNE), feature selection, regularization techniques. | Model complexity tuning, cross-validation, ensemble methods, regularization. |

| Example Algorithms | Nearest neighbors suffer as dimensions grow. | Linear regression, decision trees, complex neural networks with tuned complexity. |

Understanding the Curse of Dimensionality in Machine Learning

The curse of dimensionality refers to the exponential increase in data sparsity as the number of features grows, making distance-based learning algorithms less effective in high-dimensional spaces. This phenomenon causes models to overfit due to the vast feature space relative to the available training data, complicating generalization. Understanding this challenge is crucial for balancing the bias-variance tradeoff by selecting appropriate dimensionality reduction techniques and model complexity.

Exploring the Bias-Variance Tradeoff Concept

The bias-variance tradeoff in machine learning highlights the balance between model complexity and prediction accuracy, where high bias leads to underfitting and high variance causes overfitting. Understanding this tradeoff is crucial for optimizing model generalization by selecting appropriate algorithms and tuning hyperparameters to minimize total error. Techniques such as cross-validation and regularization help navigate this balance, ensuring the model performs well on both training and unseen data.

High Dimensions: Challenges in Feature Spaces

High-dimensional feature spaces intensify the curse of dimensionality, leading to sparse data distributions that hinder effective model training and increase computational complexity. This sparsity exacerbates the bias-variance tradeoff by making it difficult to balance underfitting and overfitting, often causing models to either oversimplify or become overly sensitive to noise. Techniques such as dimensionality reduction and regularization are essential to mitigate these challenges and improve generalization in machine learning models.

Effects of Dimensionality on Model Performance

High dimensionality increases the complexity of machine learning models, often leading to sparse data representations that degrade model accuracy and generalization. This phenomenon, known as the curse of dimensionality, exacerbates overfitting, causing models to capture noise instead of underlying patterns. Balancing dimensionality reduction techniques with the bias-variance tradeoff is crucial for optimizing model performance and enhancing predictive robustness.

Bias-Variance Tradeoff: Underfitting vs Overfitting

The bias-variance tradeoff is a fundamental challenge in machine learning that balances underfitting and overfitting to optimize model performance. High bias models oversimplify data, leading to underfitting and poor accuracy on both training and test sets, while high variance models capture noise, causing overfitting with great training accuracy but poor generalization. Effective regularization techniques, cross-validation, and proper model complexity selection help minimize prediction error by addressing this tradeoff.

Relationship Between Dimensionality and Bias-Variance

High dimensionality exacerbates the curse of dimensionality by increasing data sparsity, which often inflates model variance due to overfitting. Conversely, reducing dimensionality can introduce bias by simplifying the model and overlooking important features. Balancing dimensionality is crucial to managing the bias-variance tradeoff for optimal machine learning performance.

Strategies for Mitigating Curse of Dimensionality

Strategies for mitigating the Curse of Dimensionality in machine learning include dimensionality reduction techniques like Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE), which transform high-dimensional data into lower-dimensional spaces while preserving significant variance. Feature selection methods, such as Recursive Feature Elimination (RFE) and Lasso regularization, help by identifying and retaining only the most relevant features to improve model generalization and reduce overfitting. These approaches complement the bias-variance tradeoff by reducing model complexity to enhance prediction accuracy and computational efficiency.

Techniques to Balance Bias and Variance in Models

Regularization methods such as Lasso and Ridge regression effectively control model complexity to balance bias and variance by shrinking coefficients and preventing overfitting in high-dimensional spaces. Cross-validation techniques optimize hyperparameters to find the best tradeoff point, enhancing the generalization performance of machine learning models. Ensemble methods like bagging and boosting combine multiple learners to reduce variance while maintaining low bias, improving model robustness against the curse of dimensionality.

Feature Selection and Dimensionality Reduction Methods

Feature selection and dimensionality reduction methods address the Curse of Dimensionality by removing irrelevant or redundant features, thereby improving model generalization and computational efficiency. Techniques such as Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) reduce feature space dimensionality while preserving essential data structure, aiding in the balance between bias and variance. Proper dimensionality management mitigates overfitting risks and improves predictive performance by optimizing the bias-variance tradeoff in machine learning models.

Practical Implications: Navigating Both Phenomena in Real-World Applications

High-dimensional data exacerbates the curse of dimensionality by increasing sparsity and reducing the effectiveness of distance metrics, necessitating dimensionality reduction techniques such as PCA or t-SNE to maintain model performance. Balancing the bias-variance tradeoff requires selecting model complexity and training data size carefully to avoid underfitting or overfitting, often using cross-validation and regularization methods. Practical machine learning applications must integrate strategies to mitigate high dimensionality effects while tuning models to achieve optimal predictive accuracy and generalization.

Curse of Dimensionality vs Bias-Variance Tradeoff Infographic

techiny.com

techiny.com