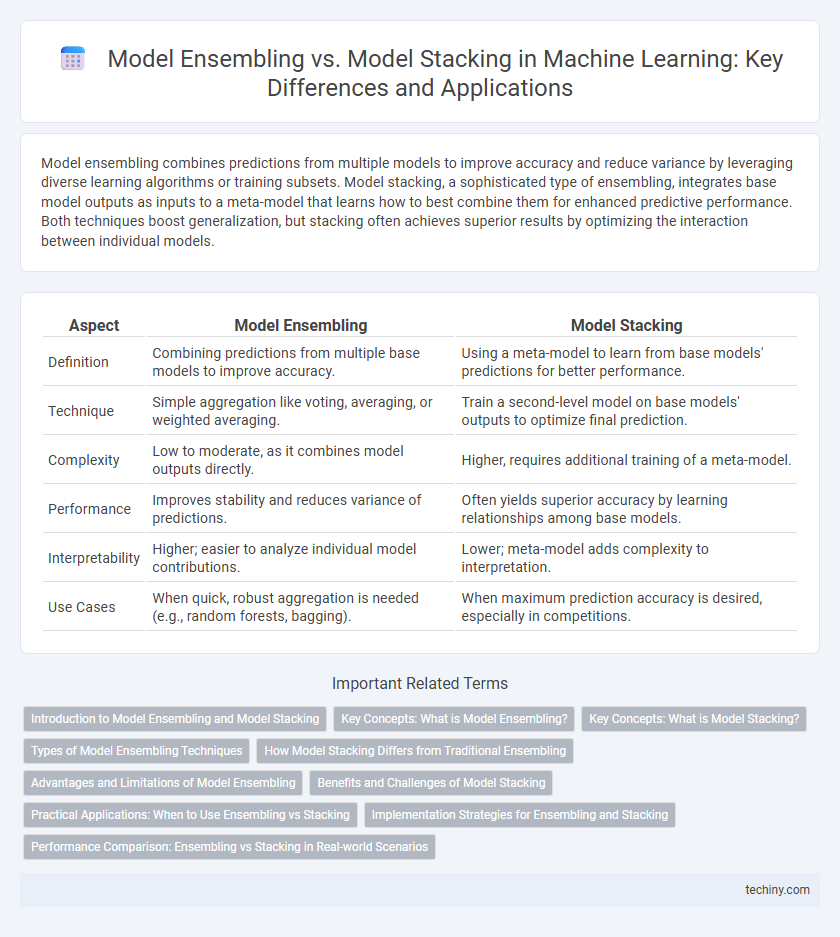

Model ensembling combines predictions from multiple models to improve accuracy and reduce variance by leveraging diverse learning algorithms or training subsets. Model stacking, a sophisticated type of ensembling, integrates base model outputs as inputs to a meta-model that learns how to best combine them for enhanced predictive performance. Both techniques boost generalization, but stacking often achieves superior results by optimizing the interaction between individual models.

Table of Comparison

| Aspect | Model Ensembling | Model Stacking |

|---|---|---|

| Definition | Combining predictions from multiple base models to improve accuracy. | Using a meta-model to learn from base models' predictions for better performance. |

| Technique | Simple aggregation like voting, averaging, or weighted averaging. | Train a second-level model on base models' outputs to optimize final prediction. |

| Complexity | Low to moderate, as it combines model outputs directly. | Higher, requires additional training of a meta-model. |

| Performance | Improves stability and reduces variance of predictions. | Often yields superior accuracy by learning relationships among base models. |

| Interpretability | Higher; easier to analyze individual model contributions. | Lower; meta-model adds complexity to interpretation. |

| Use Cases | When quick, robust aggregation is needed (e.g., random forests, bagging). | When maximum prediction accuracy is desired, especially in competitions. |

Introduction to Model Ensembling and Model Stacking

Model ensembling combines predictions from multiple machine learning models to improve overall accuracy and robustness by reducing variance and bias. Model stacking is a specific type of ensembling where the outputs of base learners are used as inputs for a meta-learner, enabling the system to capture complex patterns by leveraging strengths of different models. Both techniques enhance predictive performance but differ in architecture complexity and integration methods, with stacking typically providing more sophisticated error correction.

Key Concepts: What is Model Ensembling?

Model ensembling combines multiple machine learning models to improve predictive performance by leveraging diverse model strengths and reducing individual weaknesses. Techniques such as bagging, boosting, and random forests exemplify ensembling by aggregating base learners through voting or averaging methods. The core principle is to create a robust composite model that outperforms any single constituent model by minimizing bias and variance.

Key Concepts: What is Model Stacking?

Model stacking is an advanced ensemble learning technique where multiple base models are trained to make predictions, and a meta-model is subsequently trained on these predictions to improve overall accuracy. Unlike simple ensembling methods such as bagging or boosting, stacking leverages the strengths of diverse algorithms by combining their outputs, leading to enhanced predictive performance. This hierarchical approach optimizes model generalization by reducing bias and variance through layered learning.

Types of Model Ensembling Techniques

Model ensembling techniques include bagging, boosting, and stacking, each enhancing predictive performance by combining multiple models. Bagging reduces variance through parallel training of diverse base learners, while boosting sequentially corrects errors by emphasizing misclassified instances. Stacking integrates predictions from various models using a meta-learner to optimize overall accuracy, distinguishing it as a hybrid ensembling approach.

How Model Stacking Differs from Traditional Ensembling

Model stacking differs from traditional ensembling by leveraging a meta-model to learn how to best combine the predictions of base models, rather than simply averaging or voting on their outputs. This hierarchical approach enables stacking to capture complex relationships among model predictions, often resulting in improved generalization and accuracy. Traditional ensembling methods like bagging or boosting treat each base model independently, whereas stacking integrates their strengths through supervised learning at the second level.

Advantages and Limitations of Model Ensembling

Model ensembling enhances predictive performance by combining multiple models to reduce variance and bias, improving generalization on diverse datasets. It offers robustness against overfitting and model-specific errors but can increase computational complexity and require more memory during training and inference. Limitations include potential difficulty in interpreting combined model outputs and the challenge of selecting optimal models and combination strategies.

Benefits and Challenges of Model Stacking

Model stacking enhances predictive performance by combining multiple base models through a meta-learner, effectively capturing diverse patterns and reducing generalization error. This technique offers increased flexibility and accuracy compared to simple ensembling methods like bagging or boosting. Challenges include increased computational complexity, risk of overfitting in the meta-learner, and the need for careful cross-validation strategies to prevent information leakage.

Practical Applications: When to Use Ensembling vs Stacking

Model ensembling excels in improving prediction accuracy by combining diverse models, making it ideal for reducing variance in tasks like image classification and fraud detection. Model stacking, which involves training a meta-model on base model outputs, is better suited for complex datasets requiring hierarchical learning, such as natural language processing and structured data problems. Choosing ensembling over stacking depends on the trade-off between computational resources and the need for capturing intricate model interactions in applications like recommendation systems and financial forecasting.

Implementation Strategies for Ensembling and Stacking

Model ensembling involves combining predictions from multiple independent models, typically through methods such as bagging, boosting, or voting, to improve overall accuracy and reduce variance. Model stacking integrates multiple base learners by training a meta-model on their combined outputs, requiring careful cross-validation and data partitioning to prevent overfitting during the second-level training. Effective implementation strategies for stacking include using diverse base models, ensuring balanced training data, and employing regularization techniques in the meta-model to enhance generalization performance.

Performance Comparison: Ensembling vs Stacking in Real-world Scenarios

Model ensembling combines multiple models by averaging or voting to reduce variance and improve prediction stability, often yielding robust performance across diverse datasets. Model stacking leverages a meta-learner to integrate base model outputs, capturing complex patterns that individual models may miss, potentially enhancing accuracy but increasing computational complexity. Empirical studies in real-world scenarios indicate stacking frequently outperforms simple ensembling when carefully tuned, though ensembling remains valuable for its simplicity and lower overfitting risk.

model ensembling vs model stacking Infographic

techiny.com

techiny.com