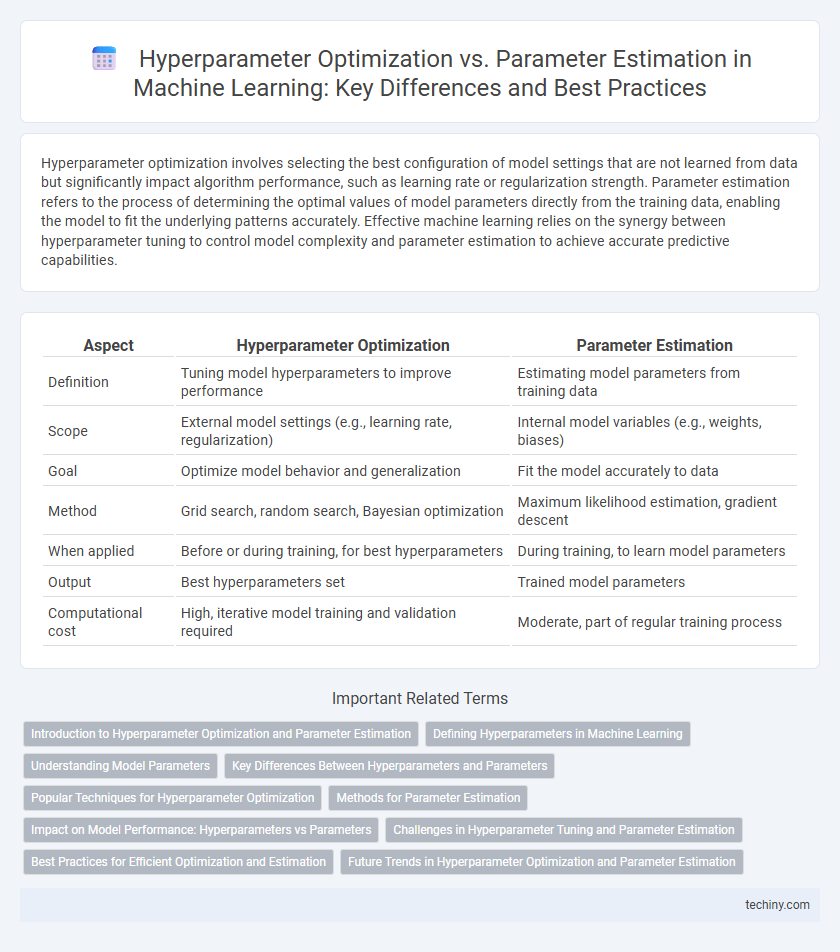

Hyperparameter optimization involves selecting the best configuration of model settings that are not learned from data but significantly impact algorithm performance, such as learning rate or regularization strength. Parameter estimation refers to the process of determining the optimal values of model parameters directly from the training data, enabling the model to fit the underlying patterns accurately. Effective machine learning relies on the synergy between hyperparameter tuning to control model complexity and parameter estimation to achieve accurate predictive capabilities.

Table of Comparison

| Aspect | Hyperparameter Optimization | Parameter Estimation |

|---|---|---|

| Definition | Tuning model hyperparameters to improve performance | Estimating model parameters from training data |

| Scope | External model settings (e.g., learning rate, regularization) | Internal model variables (e.g., weights, biases) |

| Goal | Optimize model behavior and generalization | Fit the model accurately to data |

| Method | Grid search, random search, Bayesian optimization | Maximum likelihood estimation, gradient descent |

| When applied | Before or during training, for best hyperparameters | During training, to learn model parameters |

| Output | Best hyperparameters set | Trained model parameters |

| Computational cost | High, iterative model training and validation required | Moderate, part of regular training process |

Introduction to Hyperparameter Optimization and Parameter Estimation

Hyperparameter optimization involves tuning external configuration settings of a machine learning model, such as learning rate or regularization strength, to enhance predictive performance and generalization. Parameter estimation, by contrast, refers to the process of determining the values of internal model parameters, like weights and biases, through training algorithms like gradient descent. Understanding the distinction between hyperparameters and parameters is crucial for effectively improving model accuracy and preventing overfitting.

Defining Hyperparameters in Machine Learning

Hyperparameters in machine learning are the configuration settings used to structure and control the learning process, such as learning rate, batch size, and number of hidden layers. These settings differ from parameters, which are learned directly from data during model training, like weights and biases in neural networks. Proper hyperparameter optimization involves searching for the best combination of hyperparameters to improve model performance and generalization.

Understanding Model Parameters

Model parameters are values learned directly from training data during the learning process, defining the model's predictive capabilities, while hyperparameters are set before training to control the learning process and model complexity. Hyperparameter optimization involves selecting the best hyperparameters to improve performance and generalization on unseen data, whereas parameter estimation focuses on fitting the model parameters to minimize error within the training set. Understanding the distinction between these two enables more effective tuning and accurate prediction in machine learning models.

Key Differences Between Hyperparameters and Parameters

Hyperparameters are external configurations set before training a machine learning model, such as learning rate or number of trees in a random forest, while parameters are internal model variables, like weights and biases, learned during training. Hyperparameter optimization involves selecting the best hyperparameter values using techniques like grid search or Bayesian optimization to improve model performance. In contrast, parameter estimation refers to the process of fitting the model by adjusting parameters based on training data to minimize error.

Popular Techniques for Hyperparameter Optimization

Grid search, random search, and Bayesian optimization are popular techniques for hyperparameter optimization in machine learning, each offering different trade-offs between exploration and computational cost. Grid search exhaustively evaluates predefined hyperparameter combinations, ensuring thoroughness but often at high computational expense. Bayesian optimization leverages probabilistic models to efficiently navigate the hyperparameter space, improving performance with fewer evaluations compared to random search.

Methods for Parameter Estimation

Parameter estimation in machine learning involves techniques such as Maximum Likelihood Estimation (MLE) and Bayesian Inference, which focus on finding the most probable parameters that fit the training data. Gradient-based methods like stochastic gradient descent (SGD) and its variants optimize model parameters by minimizing loss functions iteratively. These methods differ from hyperparameter optimization, which tunes model settings like learning rate or regularization strength externally to improve overall model performance.

Impact on Model Performance: Hyperparameters vs Parameters

Hyperparameter optimization significantly influences model performance by determining the learning process's overall structure, such as learning rate, batch size, and model architecture, which sets the foundation for effective training. Parameter estimation focuses on adjusting model weights and biases based on training data to minimize prediction error and enhance accuracy within the established framework. While hyperparameters guide model capacity and generalization, parameters directly affect the model's predictive capabilities through iterative refinement.

Challenges in Hyperparameter Tuning and Parameter Estimation

Hyperparameter optimization faces challenges such as high computational cost, non-convex search spaces, and the difficulty of selecting appropriate search strategies like grid search, random search, or Bayesian optimization. Parameter estimation struggles with noise in data, model identifiability issues, and overfitting risks that require regularization techniques and cross-validation for robustness. Both processes demand careful validation to ensure model generalization and performance on unseen data.

Best Practices for Efficient Optimization and Estimation

Hyperparameter optimization involves tuning model settings such as learning rate, batch size, and regularization strength to improve generalization, while parameter estimation focuses on finding the best model weights or coefficients through training algorithms like gradient descent. Efficient optimization leverages techniques like grid search, random search, and Bayesian optimization to systematically explore hyperparameter spaces, whereas parameter estimation requires robust convergence criteria and regularization to avoid overfitting. Best practices include using cross-validation for hyperparameter selection and employing early stopping during parameter estimation to balance model complexity and training time.

Future Trends in Hyperparameter Optimization and Parameter Estimation

Future trends in hyperparameter optimization emphasize automated techniques such as Bayesian optimization, reinforcement learning, and meta-learning to efficiently explore large hyperparameter spaces. Advances in hardware acceleration and parallel computing are driving scalable, real-time parameter estimation methods that improve model adaptability in dynamic environments. Integration of explainability and robustness metrics into optimization objectives is becoming a critical focus to enhance trustworthiness and performance in complex machine learning systems.

Hyperparameter Optimization vs Parameter Estimation Infographic

techiny.com

techiny.com