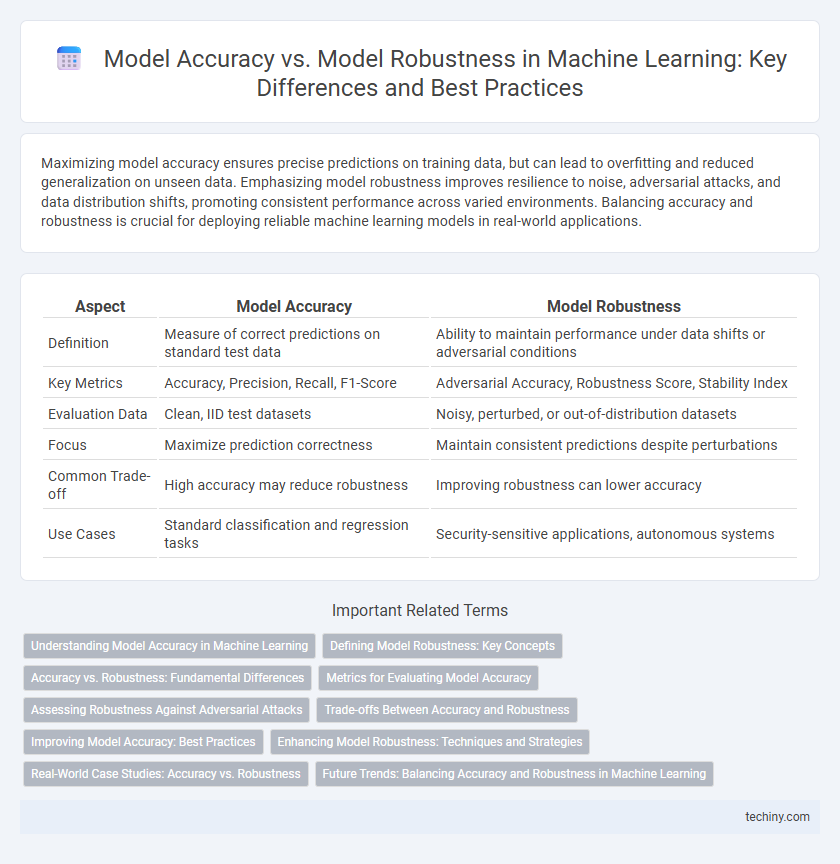

Optimizing a model purely for accuracy on its training data can lead to overfitting and reduced generalization on unseen data. Emphasizing model robustness improves resilience to noise, adversarial attacks, and data distribution shifts, promoting consistent performance across varied environments. Balancing accuracy and robustness is crucial for deploying reliable machine learning models in real-world applications.

Table of Comparison

| Aspect | Model Accuracy | Model Robustness |
|---|---|---|
| Definition | Measure of correct predictions on standard test data | Ability to maintain performance under data shifts or adversarial conditions |
| Key Metrics | Accuracy, Precision, Recall, F1-Score | Adversarial Accuracy, Robustness Score, Stability Index |
| Evaluation Data | Clean, IID test datasets | Noisy, perturbed, or out-of-distribution datasets |
| Focus | Maximize prediction correctness | Maintain consistent predictions despite perturbations |
| Common Trade-off | High accuracy may reduce robustness | Improving robustness can lower accuracy |
| Use Cases | Standard classification and regression tasks | Security-sensitive applications, autonomous systems |

Understanding Model Accuracy in Machine Learning

Model accuracy in machine learning is the proportion of correctly predicted instances out of all instances evaluated, serving as a key indicator of a model's predictive performance. High accuracy on held-out test data usually reflects effective pattern recognition, but it does not guarantee robustness against out-of-distribution samples or adversarial attacks. Evaluating both accuracy and robustness is essential for deploying reliable and generalizable machine learning models in real-world applications.
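As a minimal sketch of the definition above, accuracy can be computed by counting matching predictions. The function name `accuracy` and the sample labels are illustrative assumptions, not part of any specific library.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy on an empty dataset")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Illustrative labels (assumed data): 6 of the 8 predictions are correct.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(accuracy(y_true, y_pred))  # 0.75
```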

Defining Model Robustness: Key Concepts

Model robustness refers to a machine learning model's ability to maintain high performance across diverse, noisy, or adversarial inputs, ensuring consistent predictions beyond the training distribution. Unlike model accuracy, which measures performance on a specific test set, robustness evaluates resilience to perturbations, data shifts, and unanticipated scenarios. Key concepts include adversarial robustness, generalization under domain shifts, and stability against input variations, critical for deploying reliable AI systems in real-world applications.

Accuracy vs. Robustness: Fundamental Differences

Model accuracy measures how well a machine learning model performs on a specific, often clean dataset, reflecting its ability to make correct predictions under normal conditions. In contrast, model robustness evaluates the model's resilience to adversarial examples, noisy inputs, or distributional shifts, emphasizing stability and reliability in varied or adversarial environments. Understanding the fundamental difference between accuracy and robustness is essential for developing models that not only perform well in ideal settings but also maintain consistent performance in real-world scenarios.
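One rough way to see the difference is to score the same model twice: once on clean inputs and once on inputs with added noise. The sketch below assumes a hypothetical one-dimensional threshold classifier and made-up data; the noise level `sigma` and the seed are arbitrary choices for illustration.

```python
import random


def threshold_classifier(x, threshold=0.5):
    """Hypothetical 1-D model: predict class 1 when the feature exceeds the threshold."""
    return 1 if x > threshold else 0


def accuracy_under_noise(xs, ys, sigma, seed=0):
    """Accuracy after adding Gaussian noise (standard deviation sigma) to each input."""
    rng = random.Random(seed)
    noisy = [x + rng.gauss(0.0, sigma) for x in xs]
    hits = sum(threshold_classifier(x) == y for x, y in zip(noisy, ys))
    return hits / len(xs)


# Assumed toy data: several points sit close to the decision threshold of 0.5.
xs = [0.05, 0.2, 0.4, 0.45, 0.55, 0.6, 0.8, 0.95]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

clean_acc = accuracy_under_noise(xs, ys, sigma=0.0)  # sigma=0 leaves inputs unchanged
noisy_acc = accuracy_under_noise(xs, ys, sigma=0.2)
print(f"clean accuracy: {clean_acc:.2f}, noisy accuracy: {noisy_acc:.2f}")
```

The gap between the two numbers, rather than either number alone, is what a robustness evaluation is interested in.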

Metrics for Evaluating Model Accuracy

Beyond plain accuracy, metrics such as precision, recall, F1 score, and area under the ROC curve (AUC-ROC) provide quantitative measures of a machine learning model's performance on labeled datasets. These metrics assess how well the model predicts correct labels but may not fully capture its ability to perform under data distribution shifts or adversarial conditions. Evaluating model robustness requires complementary metrics, such as robust accuracy (accuracy measured on perturbed inputs) or adversarial robustness scores, to understand model reliability beyond traditional accuracy measures.
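For reference, precision, recall, and F1 for a binary task can be derived from true-positive, false-positive, and false-negative counts. This is a small sketch; the helper name `binary_metrics` and the sample labels are assumptions made for illustration.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Return (precision, recall, f1) for the class treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    # Fall back to 0.0 when a denominator is zero (no predicted or no actual positives).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Assumed imbalanced sample: 3 positives, 7 negatives.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
precision, recall, f1 = binary_metrics(y_true, y_pred)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

On this sample the plain accuracy is 0.8, while precision and recall are both 2/3, which shows why accuracy alone can look better than the per-class picture on imbalanced data.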

Assessing Robustness Against Adversarial Attacks

Evaluating model robustness against adversarial attacks requires examining how small, deliberately crafted perturbations of the input affect prediction accuracy in the worst case. Techniques such as adversarial training and robust optimization help a model maintain performance despite input manipulations designed to deceive it. Gradient masking, by contrast, mainly hides gradients from an attacker without removing the underlying vulnerability, and is widely regarded as giving a false sense of security. Robustness measures, including certified robustness bounds and empirical attack success rates, provide benchmarks beyond standard accuracy for assessing a machine learning model's resilience in adversarial environments.
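To make the idea of an empirical attack concrete, here is a sketch of the fast gradient sign method (FGSM) applied to a fixed linear classifier with a logistic loss. The weights, data points, and perturbation budget `eps` are assumed values chosen for illustration; a real evaluation would use a trained model and typically stronger attacks.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    return 1 if logit(w, b, x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Move each feature by eps in the direction that increases the logistic loss."""
    # For binary cross-entropy on a linear model, dLoss/dx_i = (sigmoid(z) - y) * w_i.
    scale = sigmoid(logit(w, b, x)) - y
    return [xi + eps * sign(scale * wi) for xi, wi in zip(x, w)]


def accuracy_under_fgsm(w, b, X, Y, eps):
    hits = sum(predict(w, b, fgsm(w, b, x, y, eps)) == y for x, y in zip(X, Y))
    return hits / len(X)


# Assumed model and data: two points far from the boundary, two close to it.
w, b = [1.0, -1.0], 0.0
X = [[2.0, 0.0], [0.3, 0.0], [0.0, 2.0], [0.0, 0.3]]
Y = [1, 1, 0, 0]

clean_acc = accuracy_under_fgsm(w, b, X, Y, eps=0.0)   # no perturbation
robust_acc = accuracy_under_fgsm(w, b, X, Y, eps=0.2)  # points near the boundary flip
print(clean_acc, robust_acc)  # 1.0 0.5
```

The attack success rate here is simply `1 - robust_acc` on the points that were classified correctly before the attack.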

Trade-offs Between Accuracy and Robustness

Model accuracy often improves through fine-tuning on specific datasets, but this can reduce robustness by making models sensitive to noise or adversarial attacks. Enhancing robustness typically involves regularization techniques, adversarial training, or data augmentation, which may decrease accuracy on clean data. Balancing the trade-off between accuracy and robustness requires optimizing model architectures and training processes to achieve reliable performance across diverse, real-world scenarios.
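One common way to write this trade-off down is a training objective that mixes the loss on clean inputs with the loss on worst-case perturbed inputs. The formulation below is a sketch; the symbols (model $f_\theta$, loss $\mathcal{L}$, data distribution $\mathcal{D}$, perturbation budget $\epsilon$, and mixing weight $\alpha$) are notation introduced here, not taken from the text above.

```latex
\min_{\theta} \; \mathbb{E}_{(x,y)\sim\mathcal{D}}
\Big[ \alpha \, \mathcal{L}\big(f_\theta(x), y\big)
      + (1-\alpha) \max_{\|\delta\|_\infty \le \epsilon}
        \mathcal{L}\big(f_\theta(x+\delta), y\big) \Big],
\qquad 0 \le \alpha \le 1
```

Setting $\alpha = 1$ recovers standard training on clean data, while smaller values of $\alpha$ put more weight on the perturbed term and therefore on robustness.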

Improving Model Accuracy: Best Practices

Improving model accuracy involves selecting high-quality, representative datasets and employing feature engineering techniques to enhance input relevance. Hyperparameter tuning combined with cross-validation systematically optimizes model performance while helping to detect overfitting. Incorporating ensemble learning techniques further increases accuracy: bagging mainly reduces variance, while boosting mainly reduces bias.
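As an illustration of the cross-validation step, the sketch below splits a dataset into k contiguous folds and averages validation accuracy across them. The helper names, the majority-class baseline, and the sample labels are assumptions for illustration. The split is not shuffled, which is usually something to add for real data.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k contiguous, roughly equal folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # first `extra` folds get one more sample
        val_idx = list(range(start, start + size))
        train_idx = list(range(start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


def cross_val_accuracy(X, y, fit, predict, k=5):
    """Mean validation accuracy over k folds for caller-supplied fit/predict functions."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(X), k):
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        hits = sum(predict(model, X[i]) == y[i] for i in val_idx)
        scores.append(hits / len(val_idx))
    return sum(scores) / len(scores)


# Hypothetical baseline "model": always predict the most frequent training label.
def fit_majority(X_train, y_train):
    return max(set(y_train), key=y_train.count)


def predict_majority(model, x):
    return model


X = [[i] for i in range(10)]
y = [1, 1, 1, 0, 1, 1, 0, 1, 1, 1]
mean_score = cross_val_accuracy(X, y, fit_majority, predict_majority, k=5)
print(f"mean validation accuracy: {mean_score:.2f}")
```

Passing `fit` and `predict` as arguments keeps the splitting logic independent of any particular model.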

Enhancing Model Robustness: Techniques and Strategies

Enhancing model robustness involves techniques such as adversarial training, data augmentation, and regularization methods that improve a machine learning model's ability to perform reliably under diverse and noisy conditions. Robustness optimization aims at minimizing performance degradation when facing unseen or perturbed data, complementing traditional accuracy-focused metrics. Techniques like ensemble learning and dropout further strengthen model resilience, supporting consistent prediction quality across varied input distributions.
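Data augmentation with noise is one of the simpler techniques to sketch. The function below, whose name and parameters are illustrative assumptions, appends Gaussian-perturbed copies of each training example while keeping the original label.

```python
import random


def augment_with_noise(X, y, copies=2, sigma=0.1, seed=0):
    """Return the original data plus `copies` noisy variants of every example."""
    rng = random.Random(seed)
    X_aug = [list(x) for x in X]  # keep the clean examples
    y_aug = list(y)
    for x, label in zip(X, y):
        for _ in range(copies):
            X_aug.append([xi + rng.gauss(0.0, sigma) for xi in x])
            y_aug.append(label)  # the perturbation is assumed not to change the label
    return X_aug, y_aug


# Assumed toy dataset with two features per example.
X = [[0.1, 0.9], [0.8, 0.2], [0.5, 0.5]]
y = [0, 1, 1]
X_aug, y_aug = augment_with_noise(X, y, copies=2, sigma=0.05)
print(len(X_aug), len(y_aug))  # 9 9
```

The noise level matters: if `sigma` is large enough to push examples across the true class boundary, the augmented labels become wrong and accuracy on clean data can suffer.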

Real-World Case Studies: Accuracy vs. Robustness

Real-world case studies demonstrate that high model accuracy on controlled datasets often fails to translate into robustness against noisy or adversarial inputs in diverse environments. For instance, autonomous driving systems may achieve over 90% accuracy in simulations but struggle with unexpected weather conditions or sensor failures, highlighting the gap between benchmark accuracy and real-world robustness. Incorporating robustness evaluation metrics alongside accuracy in benchmark datasets enhances the deployment reliability of machine learning models in practical applications.

Future Trends: Balancing Accuracy and Robustness in Machine Learning

Future trends in machine learning prioritize developing models that achieve high accuracy while maintaining robustness against adversarial attacks and data distribution shifts. Techniques such as adversarial training, ensemble methods, and domain adaptation are gaining traction to create resilient models without compromising predictive performance. Research continues to explore the optimal trade-offs, aiming to deploy machine learning systems that perform reliably in real-world, dynamic environments.


techiny.com
