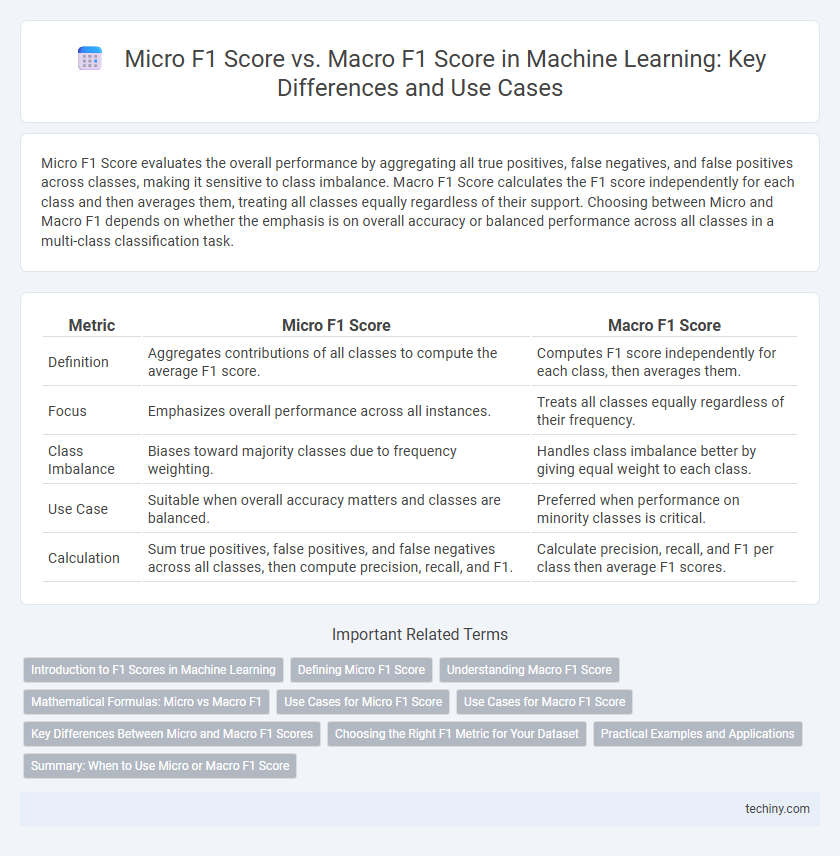

Micro F1 Score evaluates overall performance by pooling the true positives, false positives, and false negatives of all classes before computing a single F1 score, so frequent classes dominate the result. Macro F1 Score calculates the F1 score independently for each class and then averages the per-class scores, treating all classes equally regardless of their support. Choosing between Micro and Macro F1 depends on whether the emphasis is on overall, per-instance performance (in single-label multi-class classification, Micro F1 equals accuracy) or on balanced performance across all classes.

Table of Comparison

| Metric | Micro F1 Score | Macro F1 Score |
|---|---|---|
| Definition | Pools the counts of all classes and computes a single global F1 score. | Computes the F1 score independently for each class, then averages the per-class scores. |
| Focus | Emphasizes overall performance across all instances. | Treats all classes equally regardless of their frequency. |
| Class Imbalance | Dominated by majority classes, because every instance carries equal weight. | Gives equal weight to each class, so poor minority-class performance lowers the score. |
| Use Case | Suitable when overall, per-instance performance matters or classes are roughly balanced. | Preferred when performance on minority classes is critical. |
| Calculation | Sum true positives, false positives, and false negatives across all classes, then compute precision, recall, and F1. | Calculate precision, recall, and F1 per class, then average the per-class F1 scores. |

Introduction to F1 Scores in Machine Learning

In machine learning, the F1 Score is the harmonic mean of precision and recall, providing a single metric that balances the two for classification tasks. Micro F1 Score pools the counts of all classes and computes the metric globally, so each class is effectively weighted by its frequency. Macro F1 Score computes the metric independently for each class and averages the results, treating all classes equally, which makes it valuable for assessing performance on imbalanced datasets.
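As a quick check of the definition, the sketch below computes F1 for a single class in two ways: as the harmonic mean of precision and recall, and directly from the counts as \(2TP / (2TP + FP + FN)\). The function names and the example counts are illustrative assumptions, and returning 0.0 when the denominator is zero is a common convention rather than part of the definition.

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """F1 as the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # convention: F1 is undefined here, so report 0.0
    return 2 * precision * recall / (precision + recall)


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """Equivalent count form of F1: 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical counts for one class
tp, fp, fn = 8, 2, 4
precision = tp / (tp + fp)  # 0.8
recall = tp / (tp + fn)     # 8 / 12

print(f1_from_precision_recall(precision, recall))
print(f1_from_counts(tp, fp, fn))
```

Both values are approximately 0.727 (that is, 16/22). The count form is the one the micro and macro formulas build on.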

Defining Micro F1 Score

Micro F1 score is the harmonic mean of precision and recall computed from the true positives, false positives, and false negatives pooled across all classes, so every instance carries equal weight whichever class it belongs to. It is especially useful in multi-class classification tasks where overall performance across all instances needs to be measured. Unlike Macro F1 score, which averages the F1 scores of each class independently, Micro F1 relies on the global counts of true positives, false negatives, and false positives.
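A minimal sketch of micro-averaging, assuming single-label predictions stored as plain Python lists; the function name `micro_f1` and the cat/dog/bird data are hypothetical. It pools TP, FP, and FN over every class, then applies the F1 formula once.

```python
from collections.abc import Hashable, Sequence


def micro_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Micro F1: pool TP, FP and FN over every class, then compute one F1."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    labels = set(y_true) | set(y_pred)
    tp = fp = fn = 0
    for c in labels:
        for t, p in zip(y_true, y_pred):
            if t == c and p == c:
                tp += 1  # correct prediction of class c
            elif p == c:
                fp += 1  # predicted c, but the true label is another class
            elif t == c:
                fn += 1  # true label is c, but another class was predicted
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical single-label, three-class example
y_true = ["cat", "cat", "cat", "dog", "dog", "bird"]
y_pred = ["cat", "cat", "dog", "dog", "bird", "bird"]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(micro_f1(y_true, y_pred), accuracy)
```

Both values come out to 2/3. In single-label classification, every error is one false positive (for the predicted class) and one false negative (for the true class), so \(\sum FP = \sum FN\) equals the number of errors and Micro F1 reduces to accuracy.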

Understanding Macro F1 Score

Macro F1 Score calculates the F1 score independently for each class and then averages them, treating all classes equally regardless of their support. This metric is especially useful in imbalanced datasets where minority classes need equal consideration alongside majority classes. By focusing on per-class performance, Macro F1 Score provides a clearer understanding of a model's ability to perform consistently across all categories.
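The per-class-then-average procedure can be sketched as follows, again assuming single-label string labels in plain lists; `per_class_f1`, `macro_f1`, and the example data are hypothetical names and values for illustration.

```python
from collections.abc import Sequence


def per_class_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> dict[str, float]:
    """F1 for each label that appears in y_true or y_pred."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    scores = {}
    for c in labels:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        denom = 2 * tp + fp + fn
        scores[c] = 2 * tp / denom if denom else 0.0  # guard an empty denominator
    return scores


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Macro F1: unweighted mean of the per-class F1 scores."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores) if scores else 0.0


y_true = ["cat", "cat", "cat", "dog", "dog", "bird"]
y_pred = ["cat", "cat", "dog", "dog", "bird", "bird"]
print(per_class_f1(y_true, y_pred))  # bird ≈ 0.667, cat 0.8, dog 0.5
print(macro_f1(y_true, y_pred))      # ≈ 0.656
```

Each class contributes one third of the average no matter how many samples it has. In practice, scikit-learn's `sklearn.metrics.f1_score` with `average='macro'` or `average='micro'` computes these averages; by default it uses all labels found in `y_true` and `y_pred`, as this sketch does.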

Mathematical Formulas: Micro vs Macro F1

Micro F1 Score calculates the harmonic mean of precision and recall from the true positives, false positives, and false negatives aggregated across all classes, expressed as \( \text{Micro F1} = \frac{2 \times \sum_{i=1}^{N} TP_i}{2 \times \sum_{i=1}^{N} TP_i + \sum_{i=1}^{N} FP_i + \sum_{i=1}^{N} FN_i} \). Macro F1 Score computes F1 individually for each class and averages the results, represented mathematically as \( \text{Macro F1} = \frac{1}{N} \sum_{i=1}^{N} \frac{2 \times TP_i}{2 \times TP_i + FP_i + FN_i} \), where \(N\) is the number of classes and \(TP_i\), \(FP_i\), \(FN_i\) are the counts for class \(i\). Micro F1 emphasizes overall performance by weighting classes according to their support, while Macro F1 treats all classes equally regardless of size, highlighting how evenly the model performs across categories.
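To make the two formulas concrete, here is a worked example with hypothetical counts for three classes A, B, and C, chosen so that \(\sum FP = \sum FN\) as in single-label classification: class A has \(TP = 90, FP = 10, FN = 5\); class B has \(TP = 5, FP = 3, FN = 8\); class C has \(TP = 1, FP = 2, FN = 2\).

```latex
\[
\text{Micro F1} = \frac{2 \times 96}{2 \times 96 + 15 + 15} = \frac{192}{222} \approx 0.865
\]
\[
F1_A = \frac{2 \times 90}{2 \times 90 + 10 + 5} = \frac{180}{195} \approx 0.923, \quad
F1_B = \frac{2 \times 5}{2 \times 5 + 3 + 8} = \frac{10}{21} \approx 0.476, \quad
F1_C = \frac{2 \times 1}{2 \times 1 + 2 + 2} = \frac{2}{6} \approx 0.333
\]
\[
\text{Macro F1} = \frac{1}{3}\left(\frac{180}{195} + \frac{10}{21} + \frac{2}{6}\right) \approx 0.578
\]
```

Class A's 95 instances dominate the pooled counts, so Micro F1 stays close to \(F1_A\), while Macro F1 is pulled down by the two small classes.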

Use Cases for Micro F1 Score

Micro F1 Score is useful when every prediction should count equally, because it calculates metrics globally by aggregating true positives, false negatives, and false positives across all classes. This makes Micro F1 a good measure of overall model performance in classification problems where performance on the frequent classes is what matters most, such as high-volume spam filtering. On heavily imbalanced problems such as fraud detection or medical diagnosis, however, Micro F1 can remain high even when the rare class is missed almost entirely, so it should be read alongside per-class or Macro F1 scores.
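To see how Micro F1 behaves when one class dominates, the sketch below scores a trivial classifier that always predicts the majority class on a hypothetical 95/5 split. The labels "legit" and "fraud" and the helper `micro_macro_f1` are illustrative assumptions.

```python
def micro_macro_f1(y_true, y_pred):
    """Return (micro F1, macro F1) for single-label predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    tp_sum = fp_sum = fn_sum = 0
    per_class = []
    for c in labels:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        tp_sum, fp_sum, fn_sum = tp_sum + tp, fp_sum + fp, fn_sum + fn
        denom = 2 * tp + fp + fn
        per_class.append(2 * tp / denom if denom else 0.0)
    pooled = 2 * tp_sum + fp_sum + fn_sum
    micro = 2 * tp_sum / pooled if pooled else 0.0
    macro = sum(per_class) / len(per_class) if per_class else 0.0
    return micro, macro


# Hypothetical data: 95 legitimate transactions, 5 fraudulent ones
y_true = ["legit"] * 95 + ["fraud"] * 5
# A trivial "model" that always predicts the majority class
y_pred = ["legit"] * 100

micro, macro = micro_macro_f1(y_true, y_pred)
print(f"micro F1 = {micro:.3f}, macro F1 = {macro:.3f}")
```

This prints `micro F1 = 0.950, macro F1 = 0.487`: the model never detects fraud, yet its Micro F1 looks excellent, while Macro F1 exposes the zero score on the fraud class.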

Use Cases for Macro F1 Score

Macro F1 Score is particularly valuable in multi-class classification problems with imbalanced datasets, as it calculates the F1 score independently for each class and then takes the average, ensuring that minority classes receive equal importance. Use cases include medical diagnosis where rare diseases must be detected accurately, and fraud detection systems where minority fraudulent cases cannot be overlooked. This metric helps optimize model performance across all classes, avoiding bias toward majority classes.
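One way to see why Macro F1 rewards attention to minority classes is to compare two hypothetical models on the same 95/5 data: model A always predicts the majority class, and model B raises two false alarms but catches 4 of the 5 rare cases. The data, model outputs, and the helper `f1_table` are assumptions made for illustration.

```python
def f1_table(y_true, y_pred):
    """Per-class F1 plus micro and macro averages for single-label predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    per_class = {}
    tp_sum = fp_sum = fn_sum = 0  # pooled counts for micro-averaging
    for c in labels:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        tp_sum, fp_sum, fn_sum = tp_sum + tp, fp_sum + fp, fn_sum + fn
        denom = 2 * tp + fp + fn
        per_class[c] = 2 * tp / denom if denom else 0.0
    pooled = 2 * tp_sum + fp_sum + fn_sum
    micro = 2 * tp_sum / pooled if pooled else 0.0
    macro = sum(per_class.values()) / len(per_class) if per_class else 0.0
    return per_class, micro, macro


y_true = ["legit"] * 95 + ["fraud"] * 5
# Model A: always predicts the majority class
model_a = ["legit"] * 100
# Model B: 2 false alarms on legit rows, catches 4 of the 5 fraud rows
model_b = ["legit"] * 93 + ["fraud"] * 2 + ["fraud"] * 4 + ["legit"]

for name, y_pred in [("A", model_a), ("B", model_b)]:
    per_class, micro, macro = f1_table(y_true, y_pred)
    rounded = {c: round(s, 3) for c, s in per_class.items()}
    print(name, rounded, round(micro, 3), round(macro, 3))
```

Model B raises Micro F1 only from 0.95 to 0.97, but Macro F1 jumps from about 0.487 to about 0.856, because the fraud class's F1 moves from 0 to about 0.727 and counts for half of the macro average.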

Key Differences Between Micro and Macro F1 Scores

Micro F1 Score calculates the harmonic mean of precision and recall from counts aggregated over all classes, so each instance carries equal weight and majority classes dominate the result. Macro F1 Score computes the unweighted average of per-class F1 scores, treating all classes equally regardless of size, which exposes weak performance on minority classes. The key difference is that Micro F1 reflects class frequencies while Macro F1 gives a balanced, class-level evaluation, and the two can diverge sharply on imbalanced multi-class tasks.

Choosing the Right F1 Metric for Your Dataset

Micro F1 Score calculates overall precision and recall by aggregating the contributions of all classes, making it appropriate when each instance matters equally and it is acceptable for the majority classes to dominate the evaluation. Macro F1 Score computes the F1 score independently for each class and averages the results, giving equal importance to all classes regardless of their size, which is beneficial when every class, including rare ones, is equally important. Selecting the right F1 metric depends on your dataset's class distribution and on whether minority or majority classes take priority in your machine learning task.

Practical Examples and Applications

Micro F1 Score pools the contributions of all classes into one global score, which suits problems such as sentiment analysis with a roughly balanced class distribution, where overall correctness is the goal. Macro F1 Score treats all classes equally by averaging per-class F1 scores, making it the better choice for imbalanced problems such as fraud detection, where accuracy on the rare class is crucial. In medical diagnosis with uneven disease prevalence, for instance, Micro F1 highlights overall performance, whereas Macro F1 emphasizes effectiveness across all conditions, including rare ones.

Summary: When to Use Micro or Macro F1 Score

Micro F1 Score is ideal when overall performance across all instances matters, because it aggregates the contributions of all classes into a single global metric. Macro F1 Score treats all classes equally by averaging the F1 scores of each class, making it suitable for imbalanced datasets where minority-class performance must be emphasized. Choose Micro F1 for an overall, instance-weighted view of system performance, and Macro F1 for insight into class-wise performance.

Micro F1 Score vs Macro F1 Score Infographic

techiny.com
