Xavier Initialization is designed for activation functions like sigmoid and tanh, maintaining the variance of activations throughout layers to prevent vanishing or exploding gradients. He Initialization is tailored for ReLU and its variants, scaling weights to account for rectifier nonlinearities, which enhances training stability and convergence speed. Choosing the appropriate initialization method significantly impacts model performance and training efficiency in deep neural networks.

Table of Comparison

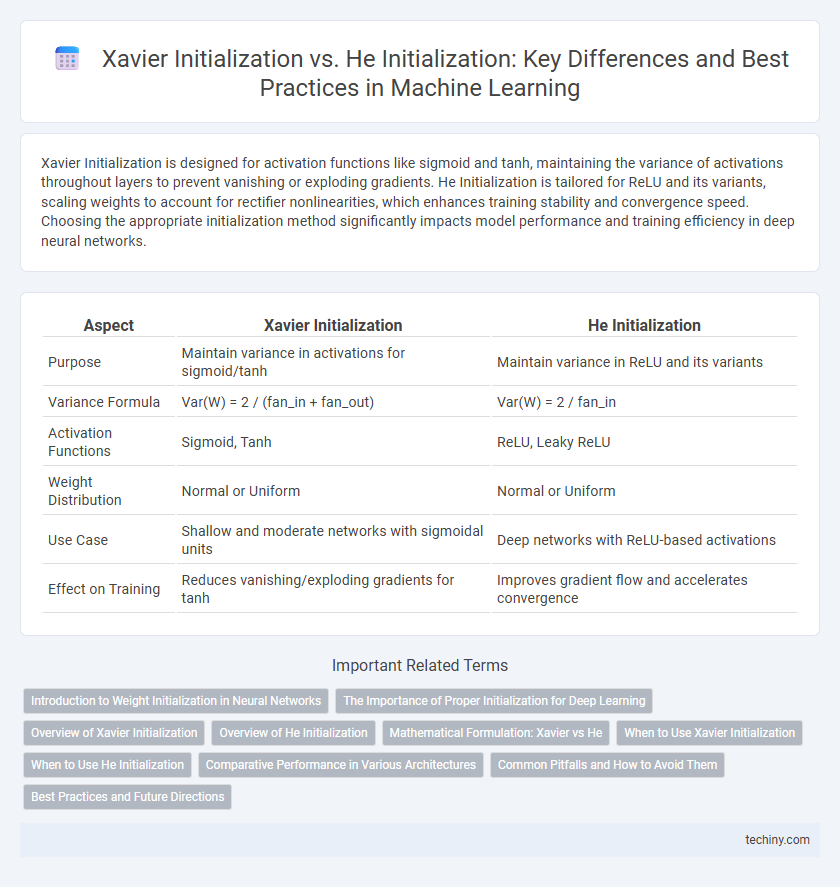

| Aspect | Xavier Initialization | He Initialization |

|---|---|---|

| Purpose | Maintain variance in activations for sigmoid/tanh | Maintain variance in ReLU and its variants |

| Variance Formula | Var(W) = 2 / (fan_in + fan_out) | Var(W) = 2 / fan_in |

| Activation Functions | Sigmoid, Tanh | ReLU, Leaky ReLU |

| Weight Distribution | Normal or Uniform | Normal or Uniform |

| Use Case | Shallow and moderate networks with sigmoidal units | Deep networks with ReLU-based activations |

| Effect on Training | Reduces vanishing/exploding gradients for tanh | Improves gradient flow and accelerates convergence |

Introduction to Weight Initialization in Neural Networks

Xavier Initialization and He Initialization are critical techniques designed to optimize weight initialization in neural networks, addressing the vanishing and exploding gradient problems during training. Xavier Initialization, suited for activation functions like sigmoid and tanh, scales weights based on the number of input and output neurons to maintain variance across layers. He Initialization, tailored for ReLU and its variants, adjusts weight variance considering only the number of input neurons, improving training efficiency and convergence in deep networks.

The Importance of Proper Initialization for Deep Learning

Proper initialization of neural network weights significantly impacts training stability and convergence speed in deep learning models. Xavier Initialization is optimized for activation functions like sigmoid and tanh, maintaining variance across layers to prevent vanishing or exploding gradients. He Initialization, designed for ReLU and its variants, sets weights to preserve forward signal propagation, enhancing performance in deeper networks by reducing gradient degradation.

Overview of Xavier Initialization

Xavier Initialization sets the weights in neural networks by drawing values from a distribution with a variance of 2 divided by the sum of the input and output neurons, optimizing signal flow in layers with sigmoid or tanh activations. This method helps mitigate the vanishing and exploding gradient problems by maintaining the variance of activations through each layer. It is widely used in deep learning frameworks to improve convergence speed and overall model performance in feedforward networks.

Overview of He Initialization

He Initialization is a weight initialization technique specifically designed for layers with ReLU activation functions, aiming to mitigate the vanishing gradient problem in deep neural networks. It sets the initial weights by drawing from a normalized distribution with variance scaled by 2 divided by the number of input units, improving convergence speed and training stability. This method outperforms traditional initialization approaches like Xavier Initialization in networks using ReLU, as it maintains variance across layers more effectively.

Mathematical Formulation: Xavier vs He

Xavier Initialization samples weights from a distribution with variance 2/(n_in + n_out), optimizing signal propagation for activation functions like sigmoid and tanh by balancing input and output neuron counts. He Initialization, designed specifically for ReLU activations, uses a variance of 2/n_in to maintain variance of activations throughout layers, preventing vanishing or exploding gradients. Both methods adapt the weight initialization variance based on layer sizes but differ in scaling factors aligned with their target activation functions.

When to Use Xavier Initialization

Xavier Initialization is ideal for neural networks using sigmoid or tanh activation functions, as it helps maintain the variance of activations across layers, preventing vanishing or exploding gradients. This method initializes weights by drawing from a distribution with variance scaled by the average of the input and output layer sizes, ensuring stable signal propagation. It is particularly effective in shallow to moderately deep networks where preserving activation variance is critical for convergence.

When to Use He Initialization

He Initialization is preferred for deep neural networks using ReLU or its variants as activation functions, ensuring stable gradients during training. It adapts variance scaling based on the number of input neurons to maintain signal flow and prevent vanishing or exploding gradients. This initialization technique improves convergence speed and model performance in architectures such as convolutional neural networks targeting image recognition tasks.

Comparative Performance in Various Architectures

Xavier Initialization is optimal for activation functions like tanh and logistic sigmoid, as it maintains variance across layers, preventing vanishing or exploding gradients predominantly in shallow neural networks. He Initialization, designed specifically for ReLU and its variants, improves convergence speed and stability in deep architectures by scaling weights to account for the rectifier's nonlinearity. Empirical studies demonstrate He Initialization consistently outperforms Xavier Initialization in deep convolutional and residual networks, enhancing training efficiency and final accuracy.

Common Pitfalls and How to Avoid Them

Xavier Initialization often underperforms in deep networks with ReLU activations due to variance reduction in forward passes, leading to vanishing gradients, while He Initialization addresses this by scaling weights with a variance of 2/fan_in. A common pitfall is applying Xavier Initialization indiscriminately regardless of activation function, resulting in slower convergence or training instability. To avoid this, match initialization techniques to activation functions: use Xavier for sigmoid or tanh and He Initialization for ReLU or its variants to maintain stable gradients throughout training.

Best Practices and Future Directions

Xavier Initialization is best suited for activation functions like sigmoid and tanh, ensuring variance is maintained across layers to prevent vanishing or exploding gradients. He Initialization is optimized for ReLU and its variants, scaling weights to accommodate the rectifier's properties and improve gradient flow in deep networks. Future directions involve adaptive initialization techniques that dynamically adjust based on layer activation statistics and network architecture for enhanced training stability and performance.

Xavier Initialization vs He Initialization Infographic

techiny.com

techiny.com