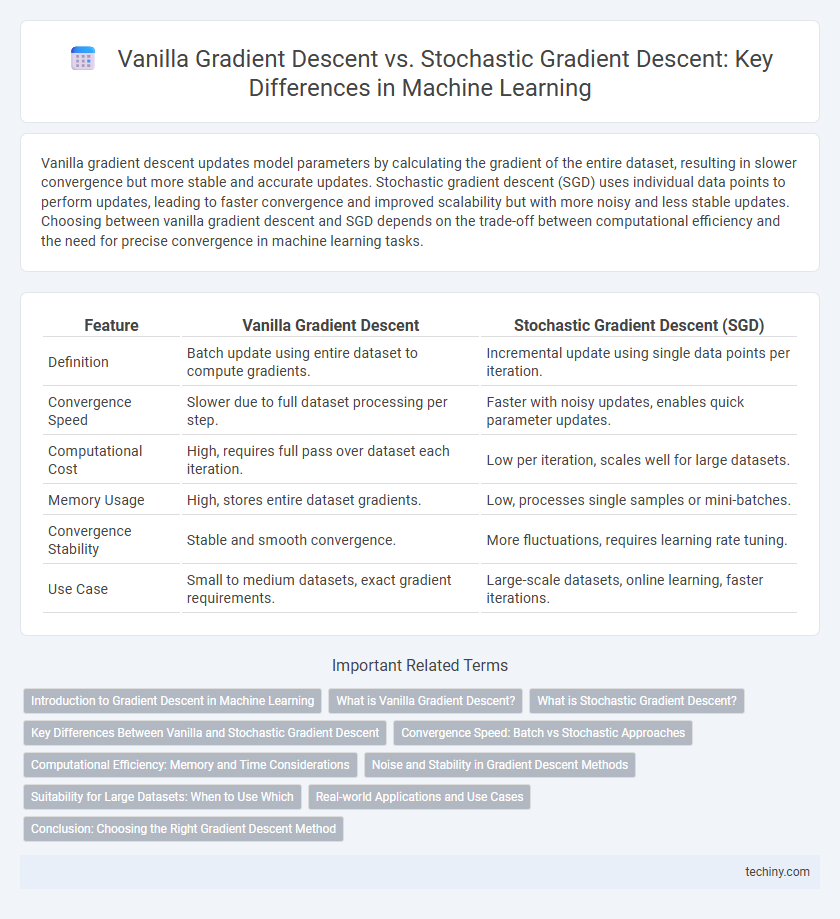

Vanilla gradient descent updates model parameters by calculating the gradient of the entire dataset, resulting in slower convergence but more stable and accurate updates. Stochastic gradient descent (SGD) uses individual data points to perform updates, leading to faster convergence and improved scalability but with more noisy and less stable updates. Choosing between vanilla gradient descent and SGD depends on the trade-off between computational efficiency and the need for precise convergence in machine learning tasks.

Table of Comparison

| Feature | Vanilla Gradient Descent | Stochastic Gradient Descent (SGD) |

|---|---|---|

| Definition | Batch update using entire dataset to compute gradients. | Incremental update using single data points per iteration. |

| Convergence Speed | Slower due to full dataset processing per step. | Faster with noisy updates, enables quick parameter updates. |

| Computational Cost | High, requires full pass over dataset each iteration. | Low per iteration, scales well for large datasets. |

| Memory Usage | High, stores entire dataset gradients. | Low, processes single samples or mini-batches. |

| Convergence Stability | Stable and smooth convergence. | More fluctuations, requires learning rate tuning. |

| Use Case | Small to medium datasets, exact gradient requirements. | Large-scale datasets, online learning, faster iterations. |

Introduction to Gradient Descent in Machine Learning

Gradient descent is a fundamental optimization algorithm used to minimize the loss function in machine learning models by iteratively adjusting parameters in the direction of the negative gradient. Vanilla gradient descent calculates the gradient using the entire training dataset, which can be computationally expensive for large datasets, while stochastic gradient descent (SGD) updates parameters using one training example at a time, leading to faster but noisier convergence. Understanding the differences between these methods helps in selecting the appropriate algorithm for efficient and effective model training.

What is Vanilla Gradient Descent?

Vanilla Gradient Descent is a fundamental optimization algorithm in machine learning that iteratively updates model parameters by calculating the gradient of the loss function with respect to the entire training dataset. This batch approach ensures a stable and accurate gradient estimate but can be computationally expensive and slow for large datasets. It contrasts with stochastic gradient descent, which updates parameters using individual data points for faster but noisier convergence.

What is Stochastic Gradient Descent?

Stochastic Gradient Descent (SGD) is an optimization algorithm that updates model parameters using the gradient of the loss function calculated from a single randomly selected data point or a small mini-batch, rather than the entire dataset. This approach significantly reduces computational cost and speeds up convergence in training large-scale machine learning models. SGD introduces noise into the parameter updates, which can help escape local minima and improve generalization performance.

Key Differences Between Vanilla and Stochastic Gradient Descent

Vanilla Gradient Descent computes the gradient using the entire dataset, leading to stable but slower convergence, especially with large datasets. Stochastic Gradient Descent updates parameters using one data point at a time, offering faster iterations but more variance in convergence paths. Key differences include computational efficiency, convergence speed, and noise in parameter updates, impacting model training dynamics.

Convergence Speed: Batch vs Stochastic Approaches

Vanilla gradient descent processes the entire dataset to compute gradients, resulting in slower convergence but more stable and accurate parameter updates. Stochastic gradient descent updates parameters using individual data points, offering faster convergence in early iterations at the cost of higher variance and potential oscillations. The choice between batch and stochastic approaches depends on dataset size and convergence speed requirements in machine learning optimization tasks.

Computational Efficiency: Memory and Time Considerations

Vanilla gradient descent requires computing gradients over the entire dataset, leading to high memory usage and longer computation times, especially for large datasets. Stochastic gradient descent processes one or a few samples at a time, significantly reducing memory demands and speeding up computation per iteration. This trade-off allows SGD to converge faster in practice, making it more suitable for large-scale machine learning tasks where computational efficiency is critical.

Noise and Stability in Gradient Descent Methods

Vanilla gradient descent calculates gradients using the entire dataset, resulting in stable and smooth convergence with low noise but slower updates. Stochastic gradient descent (SGD) computes gradients using a single data point or mini-batch, introducing higher noise that can help escape local minima but may cause more fluctuation and instability in convergence. The trade-off between noise and stability makes SGD preferable for large-scale and online learning, while vanilla gradient descent suits smaller datasets requiring consistent gradient estimates.

Suitability for Large Datasets: When to Use Which

Vanilla gradient descent processes the entire dataset to compute gradients, making it less suitable for large datasets due to high computational cost and memory usage. Stochastic gradient descent (SGD) updates parameters using a single or a few samples per iteration, enhancing scalability and faster convergence in large-scale machine learning problems. Choose vanilla gradient descent for smaller datasets requiring precise convergence, and prefer SGD for big data scenarios demanding efficiency and real-time updates.

Real-world Applications and Use Cases

Vanilla gradient descent is often used in scenarios with smaller datasets and smooth, convex optimization problems, such as linear regression and small-scale neural networks. Stochastic gradient descent (SGD) excels in real-world applications involving large-scale datasets and non-convex optimization, including deep learning for computer vision, natural language processing, and recommendation systems. Many practical machine learning tasks leverage SGD's ability to converge faster and handle noisy gradients, making it ideal for training complex models in dynamic environments.

Conclusion: Choosing the Right Gradient Descent Method

Vanilla gradient descent processes the entire dataset to compute gradients, ensuring stable convergence but often resulting in slower updates and higher computation costs. Stochastic gradient descent updates parameters using individual data points, enabling faster iterations and better scalability for large datasets but introducing more variance in the convergence path. Selecting the appropriate gradient descent method depends on dataset size, computational resources, and the need for convergence speed versus stability.

vanilla gradient descent vs stochastic gradient descent Infographic

techiny.com

techiny.com