Dimensionality reduction techniques transform high-dimensional data into a lower-dimensional space to improve computational efficiency and visualization while preserving essential features. Manifold learning is a form of nonlinear dimensionality reduction that uncovers the intrinsic structure of data by assuming it lies on a low-dimensional manifold embedded in a higher-dimensional space. Unlike linear methods such as PCA, manifold learning algorithms like t-SNE and Isomap can follow curved geometric relationships, which often yields more faithful low-dimensional representations of nonlinearly structured datasets.

Table of Comparison

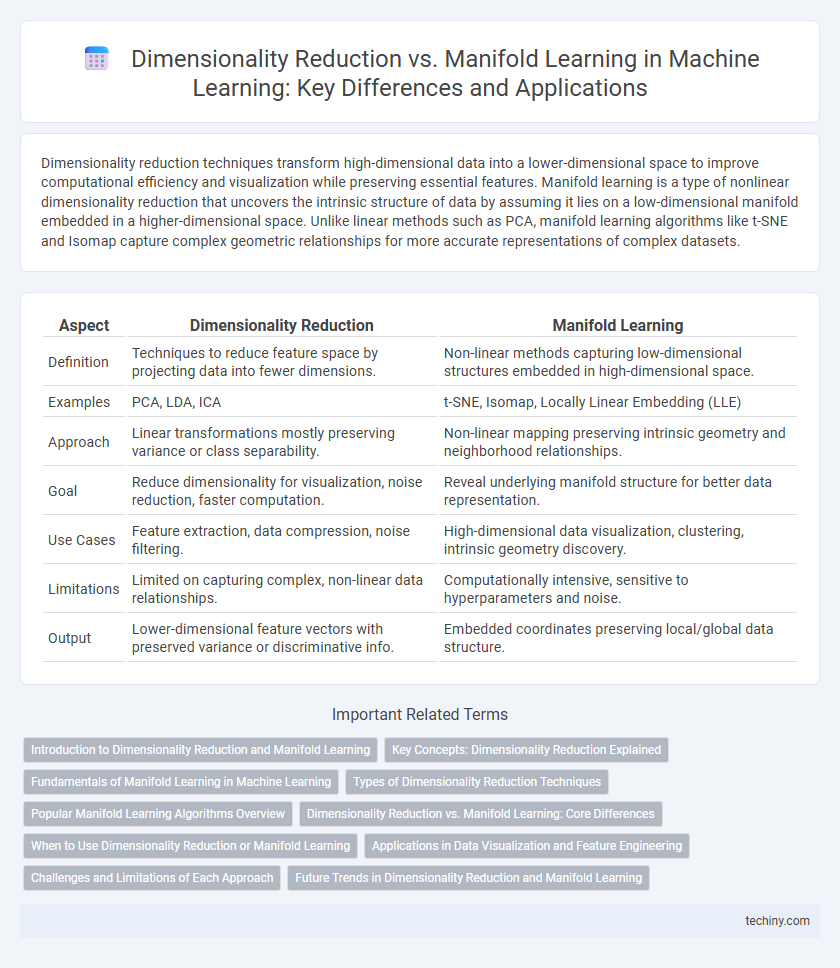

| Aspect | Dimensionality Reduction | Manifold Learning |
|---|---|---|
| Definition | Techniques that reduce the feature space by projecting data into fewer dimensions. | Nonlinear methods that capture low-dimensional structures embedded in high-dimensional space. |
| Examples | PCA, LDA, ICA | t-SNE, Isomap, Locally Linear Embedding (LLE) |
| Approach | Mostly linear transformations that preserve variance or class separability. | Nonlinear mappings that preserve intrinsic geometry and neighborhood relationships. |
| Goal | Reduce dimensionality for visualization, noise reduction, and faster computation. | Reveal the underlying manifold structure for better data representation. |
| Use Cases | Feature extraction, data compression, noise filtering. | High-dimensional data visualization, clustering, intrinsic geometry discovery. |
| Limitations | Limited in capturing complex, nonlinear data relationships. | Computationally intensive; sensitive to hyperparameters and noise. |
| Output | Lower-dimensional feature vectors that retain variance or discriminative information. | Embedded coordinates that preserve local or global data structure. |

Introduction to Dimensionality Reduction and Manifold Learning

Dimensionality reduction techniques simplify high-dimensional data by transforming it into a lower-dimensional space, preserving essential features for efficient analysis. Manifold learning, a subset of nonlinear dimensionality reduction methods, uncovers the intrinsic geometry of data by assuming it lies on an embedded low-dimensional manifold. PCA (linear) and t-SNE and Isomap (nonlinear) exemplify approaches that support visualization, noise reduction, and faster computation in machine learning workflows.

Key Concepts: Dimensionality Reduction Explained

Dimensionality reduction is a key technique in machine learning that simplifies high-dimensional data by transforming it into a lower-dimensional space while preserving essential structures and relationships. Unlike traditional linear methods such as Principal Component Analysis (PCA), manifold learning techniques, including t-SNE and Isomap, capture the intrinsic geometric structure of nonlinear data manifolds. Both kinds of method aid data visualization, improve computational efficiency, and can support better model performance by reducing noise and redundancy in complex datasets.

Fundamentals of Manifold Learning in Machine Learning

Manifold learning is a family of nonlinear dimensionality reduction techniques that assume high-dimensional data lie on a low-dimensional manifold embedded within the higher-dimensional space. These techniques focus on capturing the intrinsic geometry of data by preserving local neighborhood relationships, unlike traditional linear methods such as Principal Component Analysis (PCA). Common manifold learning algorithms include Isomap, Locally Linear Embedding (LLE), and t-SNE, which are effective for visualizing complex, nonlinear structures in data while reducing dimensionality.
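One way to make "local neighborhood relationships" concrete is the Gaussian neighbor similarity used by SNE-style methods: each point assigns its neighbors a probability that decays with squared distance. The sketch below is a minimal illustration under stated assumptions, not a full t-SNE implementation. The function name `neighbor_probabilities`, the fixed bandwidth `sigma`, and the sample points are hypothetical; real t-SNE tunes a separate bandwidth per point from the perplexity setting.

```python
import math

def neighbor_probabilities(points, i, sigma=1.0):
    """Probability that point i picks each other point as a neighbor,
    using a Gaussian kernel with a fixed bandwidth (an assumption)."""
    weights = []
    for j, q in enumerate(points):
        if j == i:
            weights.append(0.0)  # a point is never its own neighbor
        else:
            sq_dist = sum((a - b) ** 2 for a, b in zip(points[i], q))
            weights.append(math.exp(-sq_dist / (2.0 * sigma ** 2)))
    total = sum(weights)
    return [w / total for w in weights]

# Three nearby points and one distant point (illustrative data).
points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.2), (5.0, 5.0)]
probs = neighbor_probabilities(points, 0, sigma=0.5)
print(probs)  # nearby points get almost all of the probability mass
```

An embedding that keeps these probabilities similar in the low-dimensional space preserves who is close to whom, even if large distances are distorted.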

Types of Dimensionality Reduction Techniques

Dimensionality reduction techniques include linear methods like Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA), which simplify data by projecting it onto lower-dimensional subspaces. Nonlinear techniques such as t-Distributed Stochastic Neighbor Embedding (t-SNE) and Isomap capture complex data structures by preserving manifold geometry during reduction. Manifold learning techniques emphasize discovering intrinsic low-dimensional structures, making them ideal for datasets where data points lie on a curved manifold within a higher-dimensional space.
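The linear projection that PCA performs can be sketched in plain Python. The example below is a minimal illustration, assuming a tiny made-up dataset and using power iteration to find only the first principal component. The helper names (`mean_center`, `covariance`, `top_component`) are hypothetical, and a production workflow would normally rely on a tested library implementation instead.

```python
import math

def mean_center(rows):
    """Subtract the per-feature mean from every row."""
    n, dims = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(dims)]
    return [[r[j] - means[j] for j in range(dims)] for r in rows]

def covariance(centered):
    """Sample covariance matrix of mean-centered rows."""
    n, dims = len(centered), len(centered[0])
    return [[sum(r[a] * r[b] for r in centered) / (n - 1)
             for b in range(dims)] for a in range(dims)]

def top_component(cov, iterations=200):
    """Power iteration: returns the leading eigenvector and eigenvalue.
    Assumes the start vector is not orthogonal to that eigenvector."""
    dims = len(cov)
    v = [1.0 / math.sqrt(dims)] * dims
    for _ in range(iterations):
        w = [sum(cov[a][b] * v[b] for b in range(dims)) for a in range(dims)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    cov_v = [sum(cov[a][b] * v[b] for b in range(dims)) for a in range(dims)]
    eigenvalue = sum(v[a] * cov_v[a] for a in range(dims))
    return v, eigenvalue

# Illustrative 2-D data lying close to the line y = 2x.
points = [[1.0, 2.1], [2.0, 3.9], [3.0, 6.2], [4.0, 7.8], [5.0, 10.1]]
centered = mean_center(points)
cov = covariance(centered)
direction, variance = top_component(cov)

# Share of total variance captured by the single retained component.
explained_ratio = variance / sum(cov[i][i] for i in range(len(cov)))

# Project each 2-D point onto the 1-D subspace spanned by `direction`.
projected = [sum(r[j] * direction[j] for j in range(len(direction)))
             for r in centered]
print(direction, explained_ratio, projected)
```

Because the points sit almost on a straight line, one component retains nearly all of the variance. Data on a curved manifold would not compress this cleanly under a linear projection.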

Popular Manifold Learning Algorithms Overview

Popular manifold learning algorithms include t-SNE, Isomap, and Locally Linear Embedding (LLE), each designed to uncover the low-dimensional structures embedded within high-dimensional data. t-SNE excels in visualizing complex patterns by preserving local similarities, while Isomap captures global geometric relationships through geodesic distances. LLE maintains local neighborhood information by reconstructing data points from their nearest neighbors, facilitating meaningful dimensionality reduction beyond linear methods like PCA.
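The geodesic distances that Isomap relies on can be approximated by shortest paths through a nearest-neighbor graph. The sketch below covers only those first two steps (graph construction and shortest paths), not the final embedding step. The function names, the half-circle dataset, and the choice of `k=2` are assumptions made for illustration.

```python
import math

def euclidean(p, q):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def knn_graph(points, k):
    """Symmetric graph linking each point to its k nearest neighbors."""
    n = len(points)
    graph = [[math.inf] * n for _ in range(n)]
    for i in range(n):
        graph[i][i] = 0.0
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: euclidean(points[i], points[j]))
        for j in others[:k]:
            d = euclidean(points[i], points[j])
            graph[i][j] = d
            graph[j][i] = d
    return graph

def shortest_paths(graph):
    """Floyd-Warshall all-pairs shortest paths (cubic time, small n only)."""
    n = len(graph)
    dist = [row[:] for row in graph]
    for m in range(n):
        for i in range(n):
            for j in range(n):
                if dist[i][m] + dist[m][j] < dist[i][j]:
                    dist[i][j] = dist[i][m] + dist[m][j]
    return dist

# 21 points on a half circle of radius 1: a 1-D manifold curved in 2-D.
points = [(math.cos(math.pi * t / 20), math.sin(math.pi * t / 20))
          for t in range(21)]
geodesic = shortest_paths(knn_graph(points, k=2))

straight_line = euclidean(points[0], points[-1])  # cuts across the circle
along_curve = geodesic[0][-1]                     # follows the arc, close to pi
print(straight_line, along_curve)
```

The two endpoints are 2 units apart in a straight line but roughly pi units apart along the curve. That gap is what a purely linear method cannot see. The cubic cost of the shortest-path step also hints at why such methods become expensive on large datasets.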

Dimensionality Reduction vs. Manifold Learning: Core Differences

Dimensionality reduction techniques like Principal Component Analysis (PCA) primarily focus on linear transformations to reduce the number of features by projecting data onto lower-dimensional subspaces. Manifold learning methods, such as t-SNE and Isomap, emphasize capturing the underlying nonlinear structure of high-dimensional data by assuming it lies on a lower-dimensional manifold. The core difference lies in the linear versus nonlinear assumption and in what the embedding preserves: PCA keeps the directions of greatest global variance, whereas manifold methods prioritize local neighborhood structure (t-SNE) or distances measured along the manifold (Isomap).

When to Use Dimensionality Reduction or Manifold Learning

Linear dimensionality reduction techniques like PCA are well suited to datasets where linear correlations dominate and noise reduction is crucial, enabling simpler models and faster computations. Manifold learning excels when data lies on a nonlinear, low-dimensional manifold embedded in a higher-dimensional space, preserving intrinsic geometric structures for accurate representation. Choose linear dimensionality reduction for scalable, interpretable transformations in predictive modeling, and manifold learning for capturing complex patterns in visualization or unsupervised analysis.

Applications in Data Visualization and Feature Engineering

Dimensionality reduction techniques such as Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) are widely used in data visualization to transform high-dimensional datasets into interpretable two- or three-dimensional plots, revealing hidden patterns and clusters. Manifold learning methods, including Isomap and Locally Linear Embedding (LLE), excel at capturing nonlinear structures within data, and can enhance feature engineering by preserving intrinsic geometries that improve model performance on complex tasks like image and speech recognition. Both approaches reshape feature spaces for machine learning algorithms, facilitating efficient data exploration and, in many cases, improving predictive accuracy in classification and regression models.

Challenges and Limitations of Each Approach

Dimensionality reduction techniques like PCA are constrained by their linearity assumption, which limits their effectiveness on complex datasets with nonlinear structures. Manifold learning methods such as t-SNE and Isomap excel at capturing nonlinear relationships but struggle with scalability and computational cost on large datasets. Both approaches can suffer from information loss, because any reduction discards some variance or structure, which can hurt model accuracy and interpretability.
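Information loss can be checked empirically by asking how many of each point's nearest neighbors survive the reduction. The sketch below is a simplified neighbor-overlap check, not the trustworthiness score found in some libraries. The function names, the helix dataset, and the two candidate embeddings are assumptions chosen to make the effect visible.

```python
import math

def distance(p, q):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def nearest_neighbors(points, i, k):
    """Indices of the k points closest to point i."""
    others = [j for j in range(len(points)) if j != i]
    others.sort(key=lambda j: distance(points[i], points[j]))
    return set(others[:k])

def neighbor_overlap(original, embedded, k):
    """Average fraction of each point's k nearest neighbors that are
    still among its k nearest neighbors after the reduction."""
    total = 0.0
    for i in range(len(original)):
        kept = (nearest_neighbors(original, i, k)
                & nearest_neighbors(embedded, i, k))
        total += len(kept) / k
    return total / len(original)

# A helix with two full turns: 40 points in 3-D (illustrative data).
angles = [4 * math.pi * i / 40 for i in range(40)]
helix = [(math.cos(t), math.sin(t), t) for t in angles]

# Candidate A keeps only the position along the helix axis.
unrolled = [(t,) for t in angles]
# Candidate B flattens onto the x-y plane, stacking the two turns.
flattened = [(x, y) for x, y, _ in helix]

overlap_unrolled = neighbor_overlap(helix, unrolled, k=2)
overlap_flattened = neighbor_overlap(helix, flattened, k=2)
print(overlap_unrolled, overlap_flattened)
```

The flattened version places points from different turns on top of each other, so at least half of the original neighbor relationships are lost, while the unrolled version keeps them. A low overlap is a warning that downstream models or plots may be built on distorted structure.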

Future Trends in Dimensionality Reduction and Manifold Learning

Emerging trends in dimensionality reduction and manifold learning emphasize integrating deep learning techniques to enhance scalability and interpretability in high-dimensional data analysis. Advances in unsupervised and semi-supervised manifold learning algorithms aim to uncover complex data geometries, facilitating improved representations for tasks like image recognition and natural language processing. Future developments will likely focus on hybrid models combining manifold assumptions with neural architectures to tackle increasingly large and heterogeneous datasets.


techiny.com
