Balancing model interpretability and model performance is a critical challenge in machine learning, as highly complex models often achieve better accuracy but lack transparency. Interpretable models such as decision trees and linear regression provide insights into decision-making processes but may sacrifice predictive power on complex datasets. Techniques like SHAP values and LIME help bridge this gap by explaining predictions of black-box models without compromising their performance.

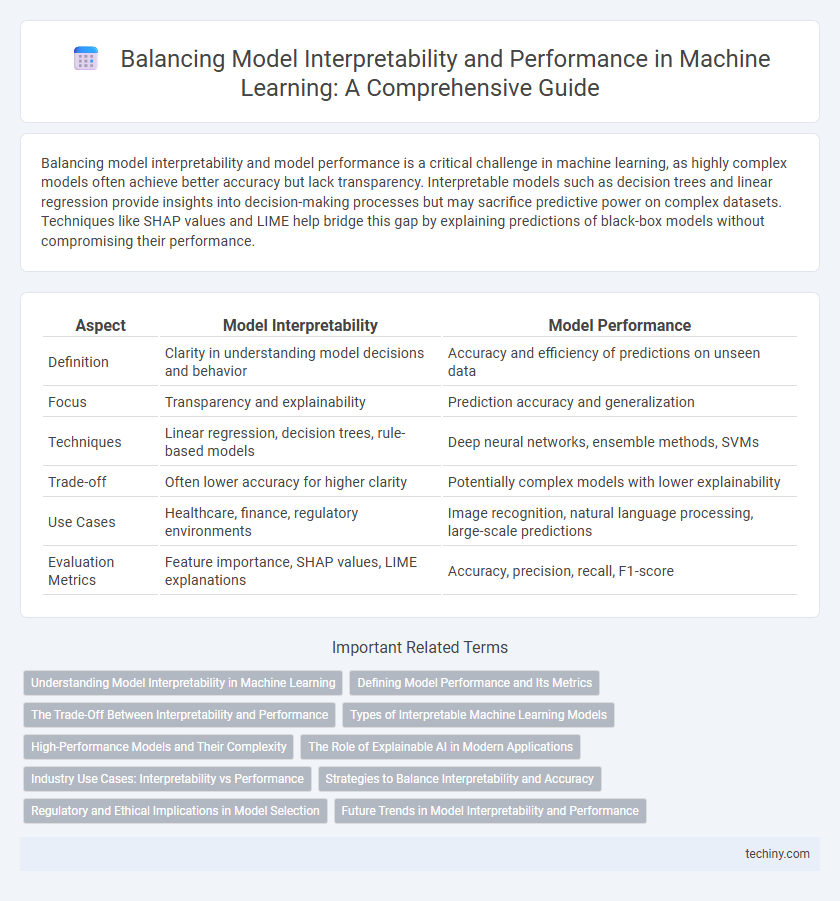

Table of Comparison

| Aspect | Model Interpretability | Model Performance |
|---|---|---|
| Definition | How clearly humans can understand a model's decisions and behavior | Accuracy and efficiency of predictions on unseen data |
| Focus | Transparency and explainability | Prediction accuracy and generalization |
| Typical Models | Linear regression, decision trees, rule-based models | Deep neural networks, ensemble methods, SVMs |
| Trade-off | Often lower accuracy in exchange for clarity | Often more complex models with lower explainability |
| Use Cases | Healthcare, finance, regulatory environments | Image recognition, natural language processing, large-scale predictions |
| Evaluation Methods | Feature importance, SHAP values, LIME explanations | Accuracy, precision, recall, F1 score |

Understanding Model Interpretability in Machine Learning

Model interpretability in machine learning refers to the extent to which human users can comprehend the internal mechanics and decision-making processes of a model. Techniques such as SHAP values, LIME, and feature importance analysis enable practitioners to explain predictions, fostering trust and facilitating debugging. Balancing interpretability with performance often involves trade-offs, especially between simple, transparent models like linear regression and complex, high-performing models like deep neural networks.
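
Feature importance analysis is the easiest of these techniques to see in code. The following is a minimal sketch of permutation feature importance, one common model-agnostic variant. It shuffles one feature column, re-scores the model, and treats the resulting drop in accuracy as that feature's importance. The model `toy_model`, the helper names, and the synthetic data are hypothetical. They are chosen so that only the first feature matters.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(
    predict: Callable[[Row], int],
    X: List[Row],
    y: Sequence[int],
    n_repeats: int = 10,
    seed: int = 0,
) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = accuracy(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing feature j with its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(y, [predict(row) for row in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical model: predicts from feature 0 only and ignores feature 1.
def toy_model(row: Row) -> int:
    return 1 if row[0] > 0.5 else 0


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [toy_model(row) for row in X]  # labels the model fits perfectly, for clarity
importances = permutation_importance(toy_model, X, y)
print(importances)
```

Because `toy_model` ignores the second feature, shuffling that column leaves every prediction unchanged, so its importance is exactly zero. Shuffling the first feature causes a large accuracy drop. In practice, importances would be computed on held-out data rather than on the data the model was trained on.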

Defining Model Performance and Its Metrics

Model performance in machine learning is quantified by metrics that evaluate how accurately a model predicts or classifies data. Common measures include accuracy, precision, recall, F1 score, and area under the receiver operating characteristic curve (AUC-ROC). Each metric captures a different aspect of prediction quality: precision penalizes false positives, recall (the true positive rate) penalizes false negatives, F1 is the harmonic mean of the two, and AUC-ROC measures how well the model ranks positive examples above negative ones across all decision thresholds. The right metric depends on the task and domain. For example, accuracy can be misleading on heavily imbalanced data, and recall is usually prioritized when missing a positive case is costly, as in disease screening.
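
These metrics reduce to simple counts over a confusion matrix. The sketch below computes them for binary labels in plain Python, assuming label `1` marks the positive class. It computes AUC-ROC through its rank interpretation: the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one. The function names are illustrative, not a library API.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for binary 0/1 labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC-ROC as the share of positive/negative pairs ranked correctly (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
# accuracy 0.7, precision about 0.667, recall 0.5, F1 about 0.571
print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))  # 0.75
```

The same predictions score 0.7 on accuracy but only 0.5 on recall. Which number matters depends on whether false positives or false negatives are more costly in the application. The pairwise AUC loop is quadratic in the number of examples, so a sort-based implementation would be preferable at scale.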

The Trade-Off Between Interpretability and Performance

High model performance often relies on complex algorithms like deep neural networks, which can sacrifice interpretability due to their black-box nature. Interpretable models, such as linear regression or decision trees, provide clearer insights into feature importance but may deliver lower predictive accuracy. Balancing this trade-off requires selecting models that meet both the accuracy needed for practical applications and the transparency required for stakeholder trust and regulatory compliance.

Types of Interpretable Machine Learning Models

Interpretable machine learning models include linear regression, decision trees, and rule-based models, which offer clear insights into feature importance and decision-making processes. These models prioritize transparency, allowing users to understand how input variables influence predictions, which is critical for applications requiring accountability. While simpler models enhance interpretability, they may sacrifice predictive performance compared to complex models like deep neural networks.
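
The transparency of linear regression can be made concrete with a minimal ordinary least squares fit, written here without external libraries using the normal equations. The data are synthetic and noise-free, generated from the assumed relationship y = 3 + 2·x1 - 0.5·x2. Each fitted coefficient can therefore be read directly as the change in the prediction for a one-unit change in that feature, holding the other fixed.

```python
from typing import List


def fit_ols(X: List[List[float]], y: List[float]) -> List[float]:
    """Ordinary least squares via the normal equations (A^T A) b = A^T y.

    Returns [intercept, coef_1, ..., coef_k].
    """
    A = [[1.0] + list(row) for row in X]  # prepend a column of ones for the intercept
    k = len(A[0])
    # Augmented system [A^T A | A^T y].
    M = [
        [sum(a[i] * a[j] for a in A) for j in range(k)] + [sum(a[i] * t for a, t in zip(A, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting reduces M to diagonal form.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(k):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [m - factor * p for m, p in zip(M[r], M[col])]
    return [M[i][k] / M[i][i] for i in range(k)]


# Hypothetical noise-free data generated from y = 3 + 2*x1 - 0.5*x2.
X_demo = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 5]]
y_demo = [3 + 2 * x1 - 0.5 * x2 for x1, x2 in X_demo]
intercept, coef_x1, coef_x2 = fit_ols(X_demo, y_demo)
print(f"intercept={intercept:.3f}, x1={coef_x1:.3f}, x2={coef_x2:.3f}")
```

`fit_ols` recovers approximately 3, 2 and -0.5, up to floating-point rounding, so every prediction is explained by three readable numbers. For real data, a numerically stabler solver such as NumPy's `numpy.linalg.lstsq` would normally replace the hand-written elimination.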

High-Performance Models and Their Complexity

High-performance machine learning models such as deep neural networks and ensemble methods often exhibit increased complexity, making interpretability challenging. This complexity arises from numerous parameters, non-linear relationships, and layered architectures, which obscure the decision-making process. Balancing model interpretability with performance requires specialized techniques like SHAP values or LIME to provide insights without compromising predictive accuracy.
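
A quick parameter count shows how fast this complexity grows. The sketch below counts weights and biases in fully connected networks. The 20-feature task and the two 256-unit hidden layers are hypothetical choices for illustration.

```python
from typing import List


def dense_param_count(layer_sizes: List[int]) -> int:
    """Weights plus biases in a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical tabular task: 20 input features, one output.
linear = dense_param_count([20, 1])         # 20 weights + 1 bias = 21
mlp = dense_param_count([20, 256, 256, 1])  # 5,376 + 65,792 + 257 = 71,425
print(linear, mlp)
```

Each of the linear model's 21 parameters can be inspected and read as a feature effect. The network's 71,425 parameters, by contrast, interact through non-linear activations, so no single weight has a stand-alone meaning.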

The Role of Explainable AI in Modern Applications

Explainable AI enhances model interpretability by providing transparent insights into complex machine learning algorithms, enabling stakeholders to understand decision-making processes. This transparency is critical in high-stakes industries such as healthcare, finance, and legal sectors where trust and accountability are paramount. Balancing model interpretability with performance requires leveraging explainable AI techniques like SHAP and LIME to maintain accuracy while ensuring actionable explanations.
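
SHAP builds on Shapley values from cooperative game theory, where a feature's attribution is its average marginal contribution across all coalitions of the other features. The sketch below computes these values exactly, by brute force, for a tiny hypothetical model. It fills in absent features from a fixed baseline, which is one of several possible conventions. The SHAP library itself relies on faster model-specific or sampling-based approximations, because exact enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set


def shapley_values(
    f: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley values of f at x; features outside a coalition take baseline values."""
    n = len(x)

    def value(coalition: Set[int]) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


# Hypothetical black-box model: one additive term plus one interaction term.
def model(z: Sequence[float]) -> float:
    return 2 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
```

The additive term gives feature 0 an attribution of 2. The interaction term `z[1] * z[2]` is split evenly, giving 3 to each of features 1 and 2. The attributions sum to `model(x) - model(baseline) = 8`. This is the efficiency property, which guarantees that Shapley-based explanations add up to the prediction.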

Industry Use Cases: Interpretability vs Performance

In healthcare diagnostics, model interpretability ensures clinicians trust AI-driven decisions, while achieving high performance is crucial for accurate disease prediction. Financial institutions prioritize interpretability to meet regulatory compliance, although sacrificing some performance may limit fraud detection efficacy. In manufacturing, balancing interpretability and performance aids in fault diagnosis, enabling experts to understand model insights while maintaining predictive accuracy.

Strategies to Balance Interpretability and Accuracy

Balancing model interpretability and accuracy in machine learning involves several strategies. Inherently interpretable models such as decision trees or linear regression suit simpler tasks, while model-agnostic interpretation techniques such as SHAP or LIME can be applied to complex models like deep neural networks. Feature selection can enhance both transparency and predictive performance by focusing on the most informative variables. Dimensionality reduction can also help, though methods such as PCA produce composite features that are harder to explain. Hybrid approaches, such as training an interpretable surrogate model to mimic a black-box algorithm, let stakeholders gain insights without significantly sacrificing model accuracy.
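
A global surrogate can be sketched in a few lines. The approach queries the black-box model, trains an interpretable model on its predictions (not on the ground-truth labels), and reports fidelity: the fraction of inputs on which the two models agree. In this minimal sketch the surrogate is a depth-1 decision tree (a stump), and `black_box` is a hypothetical stand-in for an opaque model.

```python
import random
from typing import List, Tuple

Row = List[float]
Stump = Tuple[int, float, int, int]  # (feature, threshold, label if <=, label if >)


def black_box(row: Row) -> int:
    """Hypothetical stand-in for an opaque model: a non-linear rule on two features."""
    return 1 if row[0] ** 2 + 0.3 * row[1] > 0.5 else 0


def majority(labels: List[int]) -> int:
    """Most common 0/1 label; ties go to 1 and an empty leaf defaults to 0."""
    return int(2 * sum(labels) >= len(labels)) if labels else 0


def fit_stump(X: List[Row], targets: List[int]) -> Stump:
    """Depth-1 decision tree: choose the (feature, threshold) split with the fewest errors."""
    best = None
    for j in range(len(X[0])):
        for threshold in sorted({row[j] for row in X}):
            left = [t for row, t in zip(X, targets) if row[j] <= threshold]
            right = [t for row, t in zip(X, targets) if row[j] > threshold]
            left_label, right_label = majority(left), majority(right)
            errors = sum(t != left_label for t in left) + sum(t != right_label for t in right)
            if best is None or errors < best[0]:
                best = (errors, (j, threshold, left_label, right_label))
    return best[1]


def stump_predict(stump: Stump, row: Row) -> int:
    j, threshold, left_label, right_label = stump
    return left_label if row[j] <= threshold else right_label


rng = random.Random(0)
X = [[rng.random(), rng.random()] for _ in range(300)]
# The surrogate is trained on the black box's outputs, not on ground-truth labels.
bb_labels = [black_box(row) for row in X]
stump = fit_stump(X, bb_labels)
fidelity = sum(stump_predict(stump, row) == b for row, b in zip(X, bb_labels)) / len(X)
print(stump, fidelity)
```

The stump splits on the first feature, which dominates the black box, and agrees with it on most inputs. The remaining disagreements show what the simple explanation misses. A faithful evaluation would measure fidelity on held-out inputs, and in practice a deeper tree or a sparse linear model would usually serve as the surrogate.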

Regulatory and Ethical Implications in Model Selection

Model interpretability is crucial in regulated industries such as finance and healthcare, where transparency requirements can oblige organizations to explain AI-driven decisions. High-performing models like deep neural networks are harder to inspect, which makes problems of bias, fairness, and accountability harder to detect and correct. Balancing model performance with interpretability helps organizations comply with regulatory frameworks like the GDPR. It also supports ethical AI deployment by enabling audits and building stakeholder trust.

Future Trends in Model Interpretability and Performance

Future trends in model interpretability and performance emphasize the development of hybrid techniques that balance transparency with accuracy, leveraging explainable AI frameworks and advanced neural architectures. Emerging research prioritizes integrating causal inference and domain-specific knowledge to enhance interpretability without compromising predictive power. The evolution of automated machine learning (AutoML) tools aims to optimize both model explainability and performance through adaptive, context-aware algorithm selection and tuning.

Model Interpretability vs Model Performance Infographic

techiny.com
