k-Fold Cross-Validation divides the dataset into k subsets (folds), trains the model on k-1 folds, and validates on the remaining fold, rotating until every fold has served once as the validation set. This balances bias and variance at a moderate computational cost. Leave-One-Out Cross-Validation (LOOCV) uses each individual data point in turn as the validation set, offering an almost unbiased estimate but at significantly higher computational cost. The choice between them depends largely on dataset size: k-Fold is preferred for larger datasets, and LOOCV suits smaller ones, where maximizing the training data in each iteration matters most.

Table of Comparison

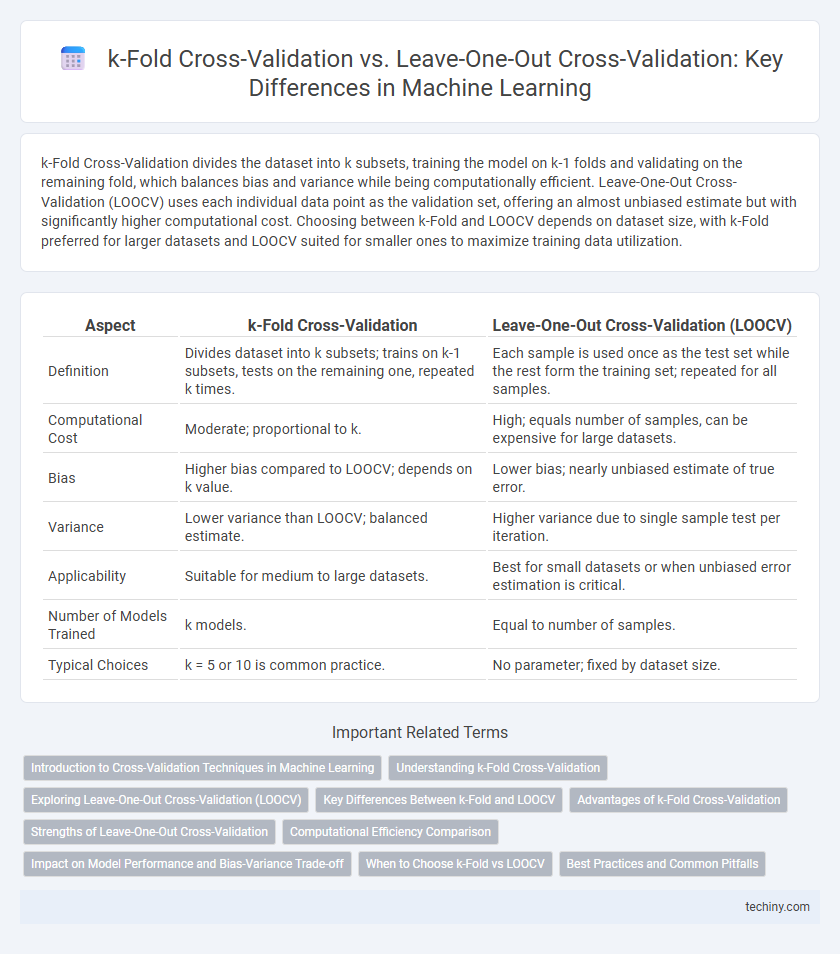

| Aspect | k-Fold Cross-Validation | Leave-One-Out Cross-Validation (LOOCV) |
|---|---|---|
| Definition | Divides dataset into k subsets; trains on k-1 subsets, tests on the remaining one, repeated k times. | Each sample is used once as the test set while the rest form the training set; repeated for all samples. |
| Computational Cost | Moderate; proportional to k. | High; equals number of samples, can be expensive for large datasets. |
| Bias | Higher bias compared to LOOCV; depends on k value. | Lower bias; nearly unbiased estimate of true error. |
| Variance | Lower variance than LOOCV; balanced estimate. | Higher variance due to single sample test per iteration. |
| Applicability | Suitable for medium to large datasets. | Best for small datasets or when unbiased error estimation is critical. |
| Number of Models Trained | k models. | Equal to number of samples. |
| Typical Choices | k = 5 or 10 is common practice. | No parameter; fixed by dataset size. |

Introduction to Cross-Validation Techniques in Machine Learning

Cross-validation techniques in machine learning, such as k-Fold Cross-Validation and Leave-One-Out Cross-Validation (LOOCV), are essential for assessing model performance and generalization. K-Fold Cross-Validation involves partitioning the dataset into k subsets, training the model on k-1 folds, and validating it on the remaining fold, which balances bias and variance effectively. Leave-One-Out Cross-Validation, a special case of k-Fold where k equals the number of samples, offers an almost unbiased estimate of model performance but at a higher computational cost.

Understanding k-Fold Cross-Validation

k-Fold Cross-Validation partitions the dataset into k subsets of roughly equal size, then trains the model on k-1 folds and validates on the remaining fold, repeating until each fold has been used once for validation. Averaging the results across folds balances bias and variance and gives a more reliable assessment than a single holdout split. Common choices for k, such as 5 or 10, offer a practical compromise between computational cost and accuracy of the performance estimate.
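
The partitioning step described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function name `k_fold_indices` and its `seed` parameter are assumptions made for this example, and the remainder handling (fold sizes differ by at most one) is one reasonable convention among several.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) index lists for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Shuffle once, reproducibly, so folds do not follow the storage order.
    random.Random(seed).shuffle(indices)
    # Spread the remainder so that fold sizes differ by at most one.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


for train_idx, val_idx in k_fold_indices(n_samples=10, k=3):
    print(len(train_idx), len(val_idx))
```

Each sample lands in exactly one validation fold, so every data point contributes to both training and validation over the k rounds.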

Exploring Leave-One-Out Cross-Validation (LOOCV)

Leave-One-Out Cross-Validation (LOOCV) is a model validation technique in which each data point is used once as the test set while the remaining points form the training set, which makes it well suited to small datasets. LOOCV provides an almost unbiased estimate of model performance but can be computationally expensive for large datasets, because the model is retrained once per sample. Like any cross-validation scheme, it helps detect overfitting rather than prevent it: a model that fits the training points well but predicts the held-out points poorly is exposed by the LOOCV error.
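
As a small worked sketch of the procedure, the snippet below runs LOOCV for a deliberately trivial model that predicts the mean of its training targets. The model and the name `loocv_mse_mean_predictor` are hypothetical choices made for illustration only; any estimator could take the place of the mean.

```python
def loocv_mse_mean_predictor(ys):
    """LOOCV mean squared error for a model that predicts the training mean."""
    n = len(ys)
    if n < 2:
        raise ValueError("LOOCV needs at least two samples")
    total = sum(ys)
    squared_errors = []
    for y in ys:
        # "Fit" on every point except the held-out one.
        train_mean = (total - y) / (n - 1)
        # Evaluate on the single held-out point.
        squared_errors.append((y - train_mean) ** 2)
    return sum(squared_errors) / n


ys = [1.0, 2.0, 3.0, 4.0]
print(loocv_mse_mean_predictor(ys))  # 20/9, roughly 2.22
```

With four samples the model is fitted four times, once per held-out point, which is the repeated training cost the text refers to.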

Key Differences Between k-Fold and LOOCV

k-Fold Cross-Validation divides the dataset into k subsets, training the model k times, each time using one subset as the test set and the rest for training, offering a balance between bias and variance. Leave-One-Out Cross-Validation (LOOCV) uses a single observation as the test set and the remaining observations as the training set, repeating this process for each data point, resulting in low bias but high variance and computational cost. The key differences include computational efficiency, with k-Fold being less expensive than LOOCV, and the trade-off between bias and variance, where LOOCV provides almost unbiased estimates but can suffer from high variance.
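
The two estimates can be written side by side. The notation here is an assumption introduced for this sketch: $n$ is the number of samples, $F_1, \dots, F_k$ are the folds, $L$ is the loss, and $\hat f^{(-j)}$ denotes the model trained with fold $j$ (or sample $j$) left out.

$$
\mathrm{CV}_{k} = \frac{1}{k} \sum_{j=1}^{k} \frac{1}{|F_j|} \sum_{i \in F_j} L\bigl(y_i, \hat f^{(-j)}(x_i)\bigr),
\qquad
\mathrm{CV}_{\mathrm{LOO}} = \frac{1}{n} \sum_{i=1}^{n} L\bigl(y_i, \hat f^{(-i)}(x_i)\bigr)
$$

Setting $k = n$ with $F_j = \{j\}$ turns the first expression into the second, which is why LOOCV is the limiting case of k-Fold.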

Advantages of k-Fold Cross-Validation

k-Fold Cross-Validation offers a balanced trade-off between bias and variance, and its performance estimates are often more stable than those of Leave-One-Out Cross-Validation (LOOCV), which can have high variance. Because it trains only k models instead of one per sample, k-Fold reduces computational cost significantly and scales better to larger datasets. Since k is a free parameter, fold size can also be tuned to the dataset and the compute budget, a flexibility that LOOCV's fixed single-sample folds do not offer.

Strengths of Leave-One-Out Cross-Validation

Leave-One-Out Cross-Validation (LOOCV) maximizes training data usage by iteratively training on all samples except one, leading to low bias in model evaluation. It is particularly effective for small datasets, where withholding a larger block of samples from training would noticeably weaken the model. LOOCV is also exhaustive: with no random partitioning involved, repeated runs give the same estimate, which is convenient for error analysis and model tuning, although the estimate itself can have high variance.
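
To make the "maximizes training data usage" point concrete, the sketch below compares how many samples each scheme trains on per iteration. The helper name `training_set_sizes` and the 30-sample dataset are assumptions chosen for illustration.

```python
def training_set_sizes(n_samples, k):
    """Return (smallest, largest) training-set size across the k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    base, extra = divmod(n_samples, k)
    largest_fold = base + (1 if extra else 0)
    smallest_fold = base
    # The training set is everything outside the validation fold.
    return n_samples - largest_fold, n_samples - smallest_fold


n = 30  # hypothetical small dataset
for k in (5, 10, n):  # k = n is LOOCV
    print(k, training_set_sizes(n, k))
```

For 30 samples, 5-fold trains on 24 points per round, 10-fold on 27, and LOOCV on 29, so the relative gain from LOOCV is largest when n is small.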

Computational Efficiency Comparison

k-Fold Cross-Validation is significantly cheaper to compute than Leave-One-Out Cross-Validation (LOOCV): partitioning the dataset into k subsets reduces the number of training runs from N (in LOOCV) to k, where N is the total number of data points. LOOCV's exhaustive approach entails training the model N times, making it computationally expensive and often impractical for large datasets. With typical values of k far smaller than N, k-Fold needs far fewer training cycles, resulting in lower runtime and resource consumption.
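
A rough way to compare the two costs is to count model fits and the total number of training samples processed. The sketch below assumes, purely for illustration, that the cost of one fit grows linearly with the number of training samples; real algorithms may scale differently, and `cv_cost` is a hypothetical helper name.

```python
def cv_cost(n_samples, k):
    """Return (number_of_fits, total_training_samples_processed)."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    base, extra = divmod(n_samples, k)
    fold_sizes = [base + 1] * extra + [base] * (k - extra)
    # Each fit trains on every sample outside its validation fold.
    total_training_samples = sum(n_samples - size for size in fold_sizes)
    return k, total_training_samples


n = 1000  # hypothetical dataset size
print(cv_cost(n, 10))  # 10-fold
print(cv_cost(n, n))   # LOOCV (k = N)
```

Under this assumption the total work is N * (k - 1) training samples, so going from k = 10 to k = N = 1000 multiplies the work by roughly 111.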

Impact on Model Performance and Bias-Variance Trade-off

k-Fold Cross-Validation balances bias and variance by partitioning data into k subsets, providing a robust estimate of model performance with moderate computational cost, suitable for large datasets. Leave-One-Out Cross-Validation (LOOCV) minimizes bias by using nearly all data points for training in each iteration but increases variance and computational expense, often leading to higher sensitivity to outliers. The choice between k-Fold and LOOCV directly impacts model generalization, with k-Fold offering a practical trade-off and LOOCV providing an almost unbiased but potentially high-variance performance estimate.

When to Choose k-Fold vs LOOCV

k-Fold Cross-Validation is preferred for large datasets because it balances computational efficiency with reliable model evaluation, splitting the data into k subsets to reduce variance without excessive computation. Leave-One-Out Cross-Validation (LOOCV) is ideal for smaller datasets where maximizing training data usage is critical, providing nearly unbiased estimates despite higher computational cost. Choose k-Fold when efficiency is key and the dataset is large; opt for LOOCV when the dataset is small and precision in error estimation is essential.
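
The guidance above can be turned into a simple rule of thumb. The function below is only an illustrative sketch: the name `choose_cv_scheme`, the `small_n=100` threshold, and the time-budget logic are all assumptions made for this example, not established standards.

```python
def choose_cv_scheme(n_samples, seconds_per_fit, budget_seconds, small_n=100):
    """Pick a cross-validation scheme from dataset size and a compute budget.

    Illustrative heuristic only: the threshold and budget rule are assumptions.
    """
    if n_samples < 2:
        raise ValueError("need at least two samples")
    # LOOCV: small dataset and n fits are affordable.
    if n_samples <= small_n and n_samples * seconds_per_fit <= budget_seconds:
        return ("loocv", n_samples)
    # Otherwise fall back to the common k = 10, or k = 5 if 10 fits cost too much.
    k = 10 if 10 * seconds_per_fit <= budget_seconds else 5
    return ("k-fold", min(k, n_samples))


print(choose_cv_scheme(n_samples=50, seconds_per_fit=0.1, budget_seconds=60))
print(choose_cv_scheme(n_samples=100_000, seconds_per_fit=2.0, budget_seconds=60))
```

In practice the threshold and budget would be set per project; the point is only that dataset size and cost per fit are the two inputs that drive the decision.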

Best Practices and Common Pitfalls

k-Fold Cross-Validation balances bias and variance by partitioning data into k subsets, offering reliable model evaluation at a lower computational cost than Leave-One-Out Cross-Validation (LOOCV), which uses a single observation as the validation set in each iteration. Best practices include selecting an appropriate k value (commonly 5 or 10) to keep training stable and estimates representative, while avoiding overly small folds that can increase variance. Common pitfalls include using LOOCV on large datasets, which leads to high computational overhead and can give noisy estimates, and neglecting to shuffle the data before creating folds: when the data is stored in a meaningful order (for example, sorted by class), unshuffled folds are not representative and the resulting performance metrics are biased.
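
The shuffling pitfall is easy to reproduce. The sketch below uses a hypothetical toy label list stored sorted by class; the helper names `make_folds` and `classes_per_fold` are assumptions introduced for this example.

```python
import random


def make_folds(n_samples, k, shuffle=False, seed=0):
    """Split indices 0..n_samples-1 into k validation folds, optionally shuffled."""
    indices = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        folds.append(indices[start:start + size])
        start += size
    return folds


# Hypothetical toy labels stored sorted by class: ten 0s followed by ten 1s.
labels = [0] * 10 + [1] * 10


def classes_per_fold(folds):
    """List the distinct classes that appear in each validation fold."""
    return [sorted({labels[i] for i in fold}) for fold in folds]


print(classes_per_fold(make_folds(len(labels), k=2)))                # [[0], [1]]
print(classes_per_fold(make_folds(len(labels), k=2, shuffle=True)))
```

Without shuffling, each validation fold contains a single class, so the model is trained on one class and evaluated only on the other; shuffling first mixes the classes across folds.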

k-Fold Cross-Validation vs Leave-One-Out Cross-Validation Infographic

techiny.com