Balancing model interpretability and accuracy is crucial in machine learning, as highly accurate models like deep neural networks often function as black boxes, making their decisions hard to explain. Interpretable models, such as decision trees and linear regression, provide clearer insights into the decision-making process but may sacrifice some predictive performance. Choosing between interpretability and accuracy depends on the application's need for transparency, trust, and regulatory compliance alongside performance.

Table of Comparison

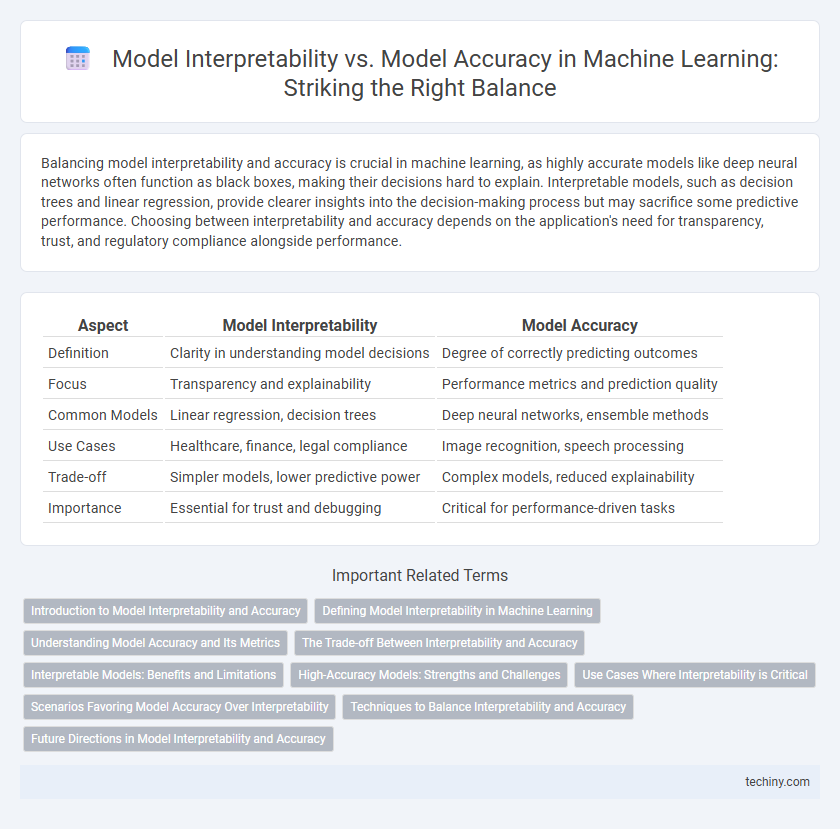

| Aspect | Model Interpretability | Model Accuracy |
|---|---|---|
| Definition | How clearly a human can understand a model's decisions | How closely a model's predictions match true outcomes |
| Focus | Transparency and explainability | Performance metrics and prediction quality |
| Common Models | Linear regression, decision trees | Deep neural networks, ensemble methods |
| Use Cases | Healthcare, finance, legal compliance | Image recognition, speech processing |
| Trade-off | Simpler models, often lower predictive power | Complex models, reduced explainability |
| Importance | Essential for trust and debugging | Critical for performance-driven tasks |

Introduction to Model Interpretability and Accuracy

Model interpretability in machine learning refers to how well a human can understand a model's internal mechanics and decision-making process, which is usually judged by how simple and transparent the model's structure is. Model accuracy, in the broad sense used in this article, describes how well a model's predictions match true outcomes; it is evaluated with metrics such as accuracy, precision, recall, and F1-score for classification, or mean squared error for regression. Balancing the two matters because complex models such as deep neural networks often achieve higher accuracy but are harder to interpret than simpler models such as linear regression or decision trees.

Defining Model Interpretability in Machine Learning

An interpretable model is one whose path from inputs to prediction a person can follow. Interpretability techniques show how input features influence outputs, which makes predictions transparent and easier to trust. High interpretability also simplifies debugging, regulatory compliance, and informed decision-making, although it often means limiting model complexity and, with it, some accuracy.
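
One widely used, model-agnostic way to see how input features influence outputs is permutation feature importance: shuffle one feature's column, re-score the model, and treat the drop in the score as that feature's importance. The plain-Python sketch below is illustrative only and rests on stated assumptions: `predict` is any callable mapping a row (a list of numbers) to a label, `metric` is a higher-is-better score such as accuracy, and the toy model and data are hypothetical.

```python
import random


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Average drop in `metric` when each feature column is shuffled."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    baseline = metric(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            score = metric(y, [predict(row) for row in X_perm])
            drops.append(baseline - score)
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical toy model: it only ever looks at feature 0.
def toy_model(row):
    return 1 if row[0] > 0.5 else 0


X = [[i / 19, (i * 7 % 20) / 20] for i in range(20)]
y = [toy_model(row) for row in X]
print(permutation_importance(toy_model, X, y, accuracy))
```

Because the toy model ignores feature 1, shuffling that column leaves every prediction unchanged and its importance is exactly zero, while shuffling feature 0 lowers accuracy. scikit-learn ships a tested version in `sklearn.inspection.permutation_importance`; this sketch only shows the idea.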

Understanding Model Accuracy and Its Metrics

Model accuracy, in the strict sense, is the proportion of correct predictions among all predictions, which makes it a simple headline metric for model performance. Related metrics such as precision, recall, F1-score, and ROC-AUC capture different aspects of prediction quality and different kinds of error; they matter most when classes are imbalanced, because a model can then score high accuracy while missing most of the minority class. Computed on held-out data, these metrics show how well a machine learning model generalizes to unseen examples and how it trades false positives against false negatives.
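
These metrics reduce to a few counts. The plain-Python sketch below computes accuracy, precision, recall, and F1 from a binary confusion matrix, ROC-AUC as the probability that a randomly chosen positive is scored above a randomly chosen negative (ties count as half), and mean squared error for regression. The function names and the small label lists are illustrative assumptions; libraries such as scikit-learn provide tested equivalents.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary classification task."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def classification_metrics(y_true, y_pred, positive=1):
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are real
    recall = tp / (tp + fn) if tp + fn else 0.0     # of real positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true, scores, positive=1):
    """Probability that a random positive outranks a random negative (ties = 0.5).

    Assumes both classes are present in y_true.
    """
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 0, 0]
print(classification_metrics(y_true, y_pred))
# accuracy 0.625, precision ~0.667, recall 0.5, f1 ~0.571
```

As a check on the imbalance problem, `classification_metrics([1] + [0] * 99, [0] * 100)` reports an accuracy of 0.99 but a recall of 0.0, because the single positive case is never detected.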

The Trade-off Between Interpretability and Accuracy

Model interpretability often relies on simpler algorithms like linear regression or decision trees, which provide clear insight into how decisions are made but may sacrifice predictive accuracy. Complex models such as deep neural networks typically achieve higher accuracy on large datasets yet operate as black boxes, limiting transparency. Balancing this trade-off means selecting a model that meets the accuracy requirements while remaining interpretable enough for stakeholder trust and regulatory compliance.

Interpretable Models: Benefits and Limitations

Interpretable models in machine learning, such as decision trees and linear regression, offer clear insights into feature importance and decision-making processes, enhancing trust and facilitating regulatory compliance. These models enable easier debugging and better understanding of model behavior, which is crucial in high-stakes environments like healthcare and finance. However, interpretable models often sacrifice predictive accuracy compared to complex models like deep neural networks, limiting their effectiveness in capturing intricate data patterns.
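
To make "clear insights" concrete, the sketch below fits a one-feature linear regression by ordinary least squares and turns the two fitted numbers into a plain-language explanation. The data points and the `explain` helper are hypothetical and chosen only for illustration; a real model would usually have several features, each with its own coefficient read the same way.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def explain(slope, intercept, feature="x"):
    """Hypothetical helper: the whole model fits in one readable sentence."""
    return (f"prediction = {intercept:.2f} + {slope:.2f} * {feature}; "
            f"each +1 in {feature} changes the prediction by {slope:+.2f}")


xs = [1, 2, 3, 4, 5]             # hypothetical feature values
ys = [2.9, 5.1, 7.0, 9.2, 10.8]  # hypothetical targets
slope, intercept = fit_simple_linear_regression(xs, ys)
print(explain(slope, intercept))
# prediction = 1.03 + 1.99 * x; each +1 in x changes the prediction by +1.99
```

The entire fitted model is two numbers, which is exactly what makes it easy to audit; the price is that it can only express a straight-line relationship.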

High-Accuracy Models: Strengths and Challenges

High-accuracy machine learning models, such as deep neural networks and ensemble methods, excel at capturing complex patterns and often deliver the best predictive performance on large, high-dimensional datasets. Their intricate architectures, however, make them hard to interpret: practitioners struggle to understand the decision-making process and may not fully trust the results. Post-hoc techniques such as SHAP values or LIME can explain individual predictions without modifying the model, so predictive performance is unaffected, though the explanations summarize the model's behavior rather than exposing its internals.
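
SHAP is built on Shapley values from cooperative game theory: each feature's attribution is its weighted average marginal contribution to the prediction over all coalitions of the other features. For a handful of features these values can be computed exactly by brute force, as in the sketch below. It makes a simplifying assumption that differs from the SHAP library: features outside a coalition are set to a single baseline vector rather than averaged over a background dataset. The toy model, input, and baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attributions of predict(x) relative to predict(baseline)."""
    n = len(x)

    def value(coalition):
        # Features in the coalition take their values from x; the rest come from baseline.
        z = [x[k] if k in coalition else baseline[k] for k in range(n)]
        return predict(z)

    phis = []
    for i in range(n):
        others = [k for k in range(n) if k != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S that exclude i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def toy_model(z):
    # Hypothetical model: an additive term plus an interaction between features 1 and 2.
    return 3 * z[0] + 2 * z[1] * z[2]


phis = shapley_values(toy_model, x=[1, 2, 3], baseline=[0, 0, 0])
print([round(p, 6) for p in phis])  # [3.0, 6.0, 6.0]
```

The attributions sum to `toy_model(x) - toy_model(baseline)` = 15, and the interaction term's contribution of 12 is split evenly between features 1 and 2. The loop visits 2^(n-1) coalitions per feature, which is why practical SHAP implementations rely on approximations or model-specific shortcuts such as KernelSHAP or TreeSHAP.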

Use Cases Where Interpretability is Critical

In healthcare diagnostics, interpretability is critical because clinicians need to understand and trust the predictions that guide treatment decisions, even if that means accepting somewhat lower accuracy. Financial institutions rely on interpretable models to meet regulatory requirements and to explain credit scoring or fraud detection outcomes. More generally, interpretability is essential in high-stakes settings where transparency affects ethical considerations and stakeholder confidence.

Scenarios Favoring Model Accuracy Over Interpretability

Accuracy is often favored over interpretability in complex tasks such as image recognition, speech processing, and fraud detection, where deep learning models like convolutional neural networks (CNNs) and recurrent neural networks (RNNs) tend to outperform simpler models. Some high-stakes applications, such as autonomous driving and certain medical diagnosis tasks, may also prioritize predictive accuracy to minimize errors, even when model decisions are less transparent. In these cases, accepting lower interpretability makes it possible to exploit large datasets and advanced algorithms for the best achievable predictive performance and robustness.

Techniques to Balance Interpretability and Accuracy

Techniques to balance interpretability and accuracy in machine learning include surrogate models: simpler, interpretable models trained to mimic a complex model's predictions. Methods such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) explain individual predictions and leave the underlying model, and therefore its performance, unchanged. Hybrid approaches that combine transparent algorithms like decision trees with black-box models can also retain high accuracy while keeping essential parts of the model understandable.
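
A global surrogate is trained on the black-box model's own predictions rather than on the ground-truth labels, and its fidelity (how often it agrees with the black box) indicates how far its explanation can be trusted. The sketch below uses the simplest possible surrogate, a one-split decision tree (a decision stump) found by exhaustive search; the black-box function and the grid of inputs are hypothetical stand-ins. LIME applies a related idea locally, fitting a weighted simple model around a single instance instead of over the whole input space.

```python
def fit_stump_surrogate(black_box, X):
    """Search one-split rules that best mimic black_box on the rows of X."""
    targets = [black_box(row) for row in X]  # mimic the model, not the true labels
    best = None
    for j in range(len(X[0])):
        values = sorted({row[j] for row in X})
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2  # midpoint between adjacent observed values
            for label_above in (0, 1):
                preds = [label_above if row[j] > threshold else 1 - label_above for row in X]
                fidelity = sum(p == t for p, t in zip(preds, targets)) / len(X)
                if best is None or fidelity > best["fidelity"]:
                    best = {"feature": j, "threshold": threshold,
                            "label_above": label_above, "fidelity": fidelity}
    return best


def black_box(row):
    # Hypothetical opaque model: mostly driven by feature 0, slightly by feature 1.
    return 1 if row[0] + 0.1 * row[1] > 0.6 else 0


X = [[i / 10, k / 10] for i in range(11) for k in range(11)]
rule = fit_stump_surrogate(black_box, X)
print(f"if x{rule['feature']} > {rule['threshold']:.2f} predict {rule['label_above']}, "
      f"else {1 - rule['label_above']} (fidelity {rule['fidelity']:.3f})")
# if x0 > 0.55 predict 1, else 0 (fidelity 0.992)
```

A fidelity close to 1.0 means the one-line rule is a faithful summary of this particular black box over these inputs; a low fidelity would signal that the surrogate's explanation should not be relied on. Practical surrogates are usually deeper trees or sparse linear models, trading some readability for higher fidelity.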

Future Directions in Model Interpretability and Accuracy

Emerging research in machine learning aims to combine high accuracy with stronger interpretability through explainable AI (XAI) techniques and inherently interpretable architectures. Work on neural-symbolic integration and causal inference seeks transparent models that keep robust predictive performance across diverse datasets. Future directions include scalable interpretability frameworks and real-time explanation methods to support trustworthy AI deployment in critical applications.

Model Interpretability vs Model Accuracy Infographic

techiny.com
