Hard labeling assigns a single, definitive class to each data point, which simplifies model training but may ignore underlying uncertainty. Soft labeling provides a probability distribution over classes, capturing ambiguity and improving model robustness and generalization. Utilizing soft labels often leads to better performance in scenarios with noisy or overlapping data.

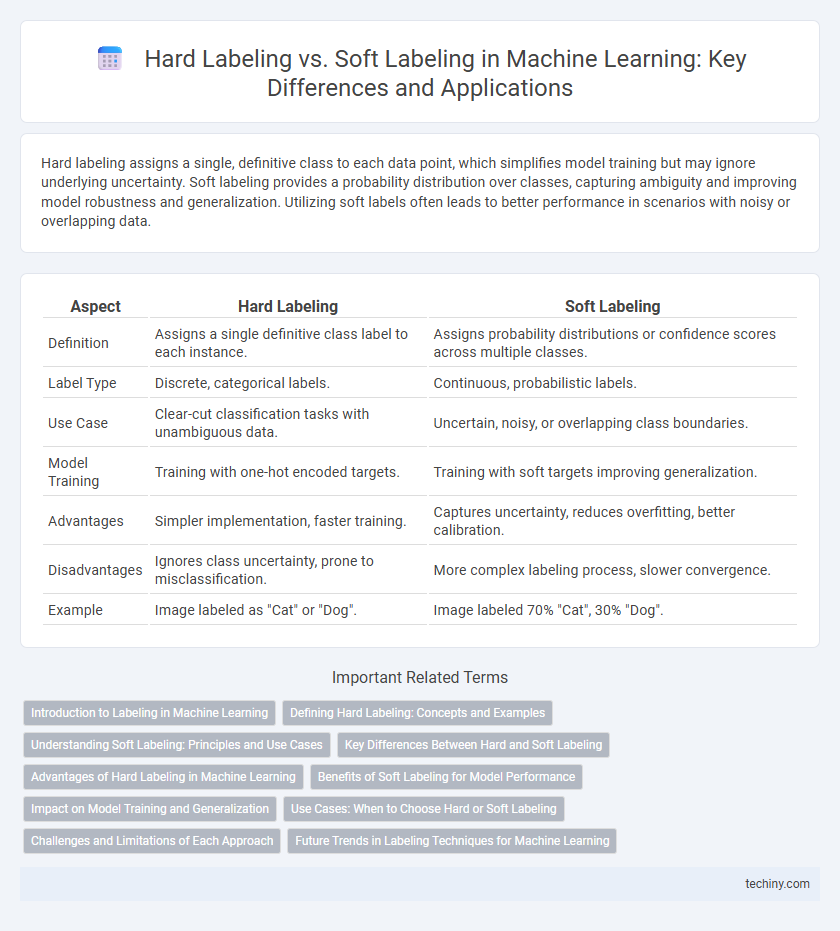

Table of Comparison

| Aspect | Hard Labeling | Soft Labeling |

|---|---|---|

| Definition | Assigns a single definitive class label to each instance. | Assigns probability distributions or confidence scores across multiple classes. |

| Label Type | Discrete, categorical labels. | Continuous, probabilistic labels. |

| Use Case | Clear-cut classification tasks with unambiguous data. | Uncertain, noisy, or overlapping class boundaries. |

| Model Training | Training with one-hot encoded targets. | Training with soft targets improving generalization. |

| Advantages | Simpler implementation, faster training. | Captures uncertainty, reduces overfitting, better calibration. |

| Disadvantages | Ignores class uncertainty, prone to misclassification. | More complex labeling process, slower convergence. |

| Example | Image labeled as "Cat" or "Dog". | Image labeled 70% "Cat", 30% "Dog". |

Introduction to Labeling in Machine Learning

Hard labeling assigns a single, definitive class label to each data point, often represented as one-hot vectors, which simplifies model training but can overlook uncertainty. Soft labeling provides probabilistic labels or confidence scores that reflect uncertainty, allowing models to learn nuanced decision boundaries and improve generalization. The choice between hard and soft labeling impacts supervised learning performance, especially in tasks involving ambiguous or noisy data.

Defining Hard Labeling: Concepts and Examples

Hard labeling in machine learning refers to the process of assigning a single, definitive class label to each training example, representing absolute certainty about the instance's class membership. Typical examples include binary classification tasks where an image is labeled strictly as "cat" or "not cat," with no probability distribution over possible classes. This approach contrasts with soft labeling, where each example is associated with class probabilities or confidence scores that reflect uncertainty or overlapping class boundaries.

Understanding Soft Labeling: Principles and Use Cases

Soft labeling represents data points with probabilistic distributions over classes, capturing uncertainty and ambiguity in training data. This technique enhances model robustness and generalization by providing nuanced information beyond binary hard labels. Common use cases include semi-supervised learning, knowledge distillation, and handling noisy or imbalanced datasets.

Key Differences Between Hard and Soft Labeling

Hard labeling assigns each data point to a single, definitive class, providing clear, categorical outputs essential for traditional classification tasks. Soft labeling generates probabilistic outputs representing the likelihood of belonging to multiple classes, which enhances model calibration and helps in handling ambiguous or overlapping data. Soft labels improve generalization in models by incorporating uncertainty, while hard labels simplify decision-making but may lead to overconfident predictions.

Advantages of Hard Labeling in Machine Learning

Hard labeling in machine learning ensures clear and unambiguous class assignments, which simplifies model training and evaluation by providing definitive target outputs. This precise categorization enhances model interpretability and accelerates convergence during optimization processes. It also reduces computational complexity compared to soft labeling, making it efficient for large-scale classification tasks where definitive labels improve accuracy and consistency.

Benefits of Soft Labeling for Model Performance

Soft labeling enhances model performance by conveying nuanced class probabilities, allowing algorithms to learn from uncertainty and ambiguous data more effectively. This probabilistic approach improves generalization and robustness, especially in complex tasks where class boundaries are not well-defined. Models trained with soft labels often achieve higher accuracy and reduced overfitting compared to hard-labeled counterparts.

Impact on Model Training and Generalization

Hard labeling assigns a single, definitive class to each training example, which can lead to faster convergence but may cause the model to overfit and reduce generalization on ambiguous or noisy data. Soft labeling represents data points with probability distributions over classes, enabling the model to capture uncertainty and subtle patterns, improving robustness and generalization performance. Incorporating soft labels, especially in semi-supervised or noisy datasets, often enhances model training by providing richer supervisory signals that mitigate overconfidence and promote smoother decision boundaries.

Use Cases: When to Choose Hard or Soft Labeling

Hard labeling is ideal for classification tasks with clearly defined categories, such as image recognition in medical diagnostics where accuracy and interpretability are critical. Soft labeling benefits scenarios involving ambiguous or overlapping classes, like natural language processing for sentiment analysis, where probabilistic outputs capture uncertainty and improve model generalization. Use hard labeling to enforce strict decision boundaries and soft labeling to leverage nuanced information and uncertainty in training data.

Challenges and Limitations of Each Approach

Hard labeling in machine learning often struggles with capturing uncertainty and nuanced information, leading to rigid decision boundaries that may reduce model generalization. Soft labeling, while providing probabilistic insights and richer data representation, introduces challenges such as increased computational complexity and potential calibration errors. Both approaches face limitations in scenarios with ambiguous or noisy data, impacting overall model robustness and performance.

Future Trends in Labeling Techniques for Machine Learning

Future trends in labeling techniques for machine learning emphasize the integration of hybrid labeling methods, combining hard and soft labels to enhance model robustness and interpretability. Advances in semi-supervised learning and active learning foster the generation of high-quality soft labels through probability distributions, reducing reliance on costly manual annotations. Innovations in algorithmic label refinement and uncertainty quantification are expected to drive more adaptive and scalable labeling frameworks, enabling efficient training on complex and ambiguous datasets.

hard labeling vs soft labeling Infographic

techiny.com

techiny.com