Loss function measures the error for a single training example, quantifying the difference between the predicted output and actual label. Cost function aggregates the loss over the entire training dataset, providing a single scalar value used to guide the optimization process. Understanding the distinction between loss and cost functions is essential for designing and tuning machine learning models effectively.

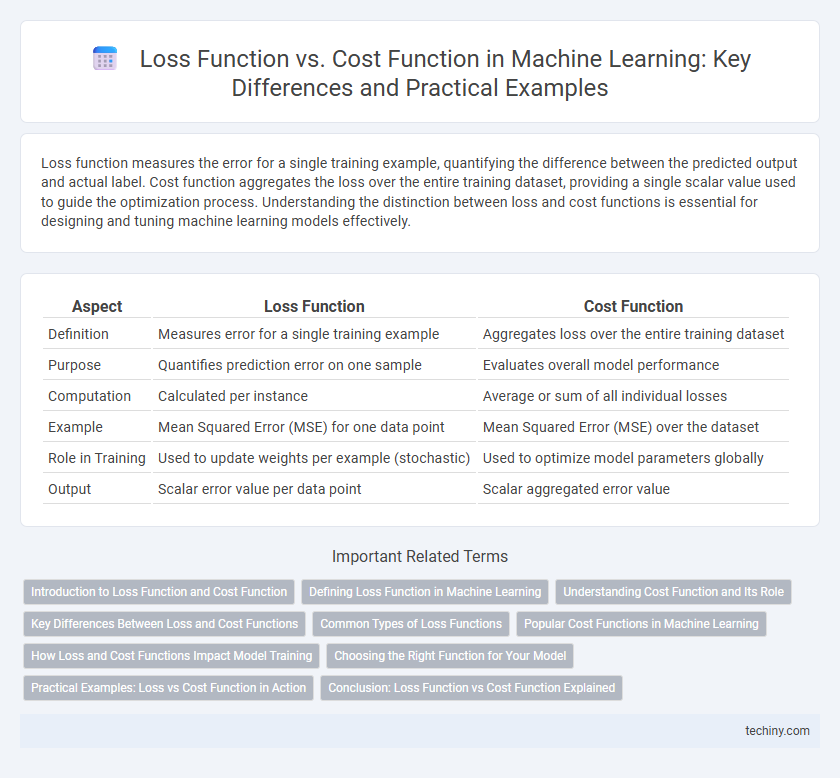

Table of Comparison

| Aspect | Loss Function | Cost Function |

|---|---|---|

| Definition | Measures error for a single training example | Aggregates loss over the entire training dataset |

| Purpose | Quantifies prediction error on one sample | Evaluates overall model performance |

| Computation | Calculated per instance | Average or sum of all individual losses |

| Example | Mean Squared Error (MSE) for one data point | Mean Squared Error (MSE) over the dataset |

| Role in Training | Used to update weights per example (stochastic) | Used to optimize model parameters globally |

| Output | Scalar error value per data point | Scalar aggregated error value |

Introduction to Loss Function and Cost Function

Loss function quantifies the error for a single training example by measuring the difference between predicted and actual values, guiding model adjustments during training. Cost function aggregates these individual losses across the entire dataset, providing an overall performance metric that optimization algorithms seek to minimize. Understanding the distinction between loss functions and cost functions is essential for effective model evaluation and optimization in machine learning.

Defining Loss Function in Machine Learning

A loss function in machine learning quantifies the error between predicted values and actual target values for a single data point, guiding the model's learning process by indicating how well it performs. Common examples include Mean Squared Error (MSE) for regression tasks and Cross-Entropy Loss for classification problems. The loss function directly influences weight updates during training, making it essential for model optimization.

Understanding Cost Function and Its Role

Cost function in machine learning quantifies the overall error by aggregating the loss values from all training examples, guiding the optimization process toward minimizing prediction errors. It serves as a key metric for evaluating model performance during training, enabling algorithms like gradient descent to adjust parameters effectively. Understanding the cost function is crucial for selecting appropriate optimization techniques and improving model accuracy.

Key Differences Between Loss and Cost Functions

Loss functions measure the error for a single training example by quantifying the discrepancy between predicted and actual values. Cost functions aggregate the loss across the entire training dataset, providing a comprehensive metric for model performance evaluation. While loss functions guide updates in individual predictions, cost functions drive overall optimization during model training.

Common Types of Loss Functions

Loss functions measure the error for a single training example, guiding the optimization process in machine learning models by quantifying the difference between the predicted output and actual label. Common types of loss functions include Mean Squared Error (MSE) for regression tasks, which calculates the average squared differences between predicted and true values, and Cross-Entropy Loss frequently used in classification tasks to measure the performance of probabilistic models. These loss functions serve as fundamental tools for minimizing prediction error and enhancing model accuracy during training.

Popular Cost Functions in Machine Learning

Popular cost functions in machine learning include Mean Squared Error (MSE), Cross-Entropy Loss, and Hinge Loss. MSE measures the average squared difference between predicted and actual values, making it ideal for regression tasks. Cross-Entropy Loss effectively evaluates classification models by quantifying the difference between predicted probability distributions and true labels.

How Loss and Cost Functions Impact Model Training

Loss functions measure the error for individual predictions, directly influencing the model's weight updates during training to minimize discrepancies between predicted and actual values. Cost functions aggregate the loss over the entire training dataset, providing a single scalar value that guides overall model optimization and convergence. Effective selection and tuning of loss and cost functions significantly impact gradient descent efficiency, model accuracy, and generalization performance in machine learning.

Choosing the Right Function for Your Model

Selecting the appropriate loss function and cost function is crucial for optimizing machine learning models, as loss functions measure error on individual data points while cost functions represent the average error across the dataset. Common loss functions include Mean Squared Error for regression and Cross-Entropy Loss for classification, with the cost function often derived as the aggregate loss to minimize during training. Understanding the problem type and data distribution guides the choice, ensuring improved model accuracy and convergence speed.

Practical Examples: Loss vs Cost Function in Action

Loss function measures the error for a single training example, such as Mean Squared Error (MSE) calculating the difference between predicted and actual values in regression tasks. Cost function aggregates the loss over the entire training dataset, for instance, the average MSE across all examples to evaluate overall model performance. In neural networks, the cross-entropy loss evaluates prediction error per sample, while the cost function sums these losses for backpropagation and parameter updates.

Conclusion: Loss Function vs Cost Function Explained

Loss function quantifies the error for a single training example, measuring how well the model predicts individual outputs. Cost function aggregates the losses over the entire training dataset, providing a comprehensive metric to optimize model parameters. Understanding the distinction between loss and cost functions is essential for effectively tuning machine learning algorithms and improving predictive accuracy.

Loss Function vs Cost Function Infographic

techiny.com

techiny.com