Hard Margin SVM separates the classes with the widest possible margin and no training errors. It requires perfectly linearly separable data, which makes it sensitive to outliers. Soft Margin SVM introduces slack variables that allow some margin violations and misclassifications, so it handles noisy or overlapping data better and usually generalizes better. The choice between the two depends on how noisy the data are and on the desired trade-off between margin width and classification errors.

Table of Comparison

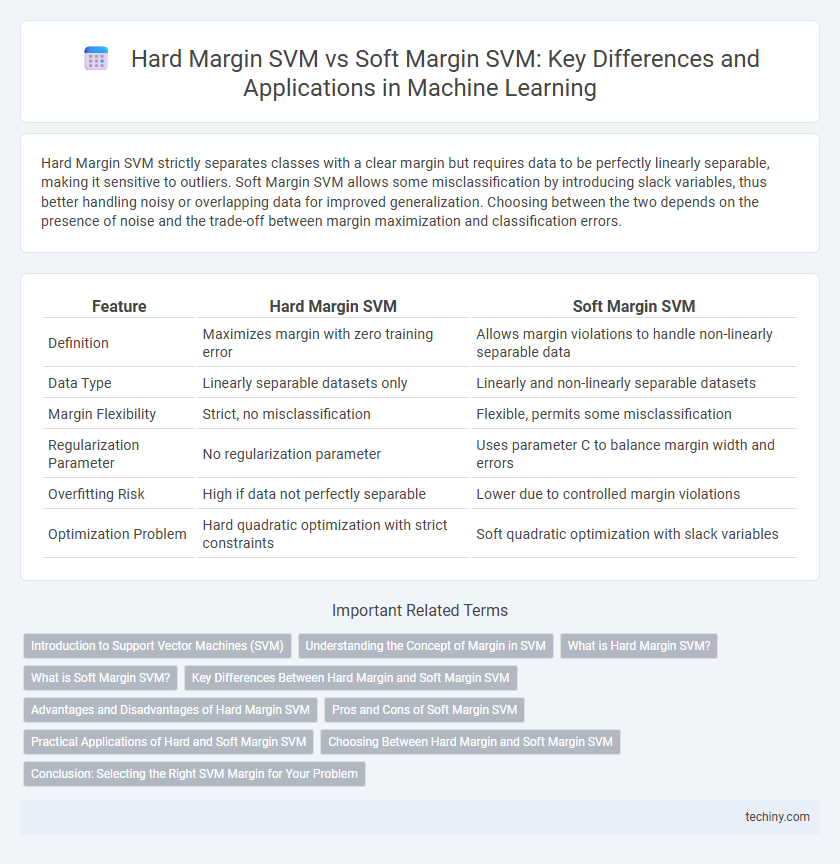

| Feature | Hard Margin SVM | Soft Margin SVM |
|---|---|---|
| Definition | Maximizes the margin with zero training errors | Maximizes the margin while allowing penalized margin violations |
| Data Type | Linearly separable datasets only | Linearly separable datasets and datasets whose classes overlap (not linearly separable) |
| Margin Flexibility | Strict: no point may lie inside the margin or be misclassified | Flexible: points may lie inside the margin or be misclassified, at a cost |
| Regularization Parameter | None (corresponds to the limit C → ∞) | Uses parameter C to balance margin width against training errors |
| Overfitting Risk | High when outliers are present; no solution exists if the data are not separable | Lower, because margin violations are permitted and controlled by C |
| Optimization Problem | Quadratic program with constraints y_i(w·x_i + b) ≥ 1 | Quadratic program with slack variables: y_i(w·x_i + b) ≥ 1 − ξ_i, ξ_i ≥ 0, plus a penalty C·Σξ_i |

Introduction to Support Vector Machines (SVM)

Support Vector Machines (SVMs) are supervised learning models used for classification and regression. For classification, an SVM finds the hyperplane that separates the classes with the largest margin. Hard Margin SVM requires linearly separable data and enforces a strict boundary with no misclassifications, which limits its use on noisy or overlapping datasets. Soft Margin SVM introduces slack variables that allow some misclassifications, improving generalization and robustness on noisy data or data that are not linearly separable.

Understanding the Concept of Margin in SVM

The margin in SVM is the distance between the decision boundary and the closest training points (the support vectors), and its width strongly influences how well the model generalizes. Hard Margin SVM maximizes this margin without allowing any point inside it or on the wrong side, so it applies only to linearly separable data. Soft Margin SVM adds slack variables, weighted by a penalty parameter C, that permit some margin violations and misclassifications. This lets it handle noisy or overlapping data by balancing margin width against classification error.
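To make the margin concrete, the sketch below computes it for a given hyperplane w·x + b = 0. It is a minimal illustration, assuming labels in {−1, +1}. The function name `geometric_margin` and the toy data are hypothetical, and the symbols w and b are notation chosen here because the article does not fix any. The geometric margin is the smallest value of y_i(w·x_i + b)/‖w‖. When the closest points satisfy y_i(w·x_i + b) = 1, as in an SVM solution, the full band between the two margin boundaries has width 2/‖w‖.

```python
import math

def geometric_margin(w, b, X, y):
    """Smallest signed distance y_i * (w . x_i + b) / ||w|| over the data.

    A positive value means every point is on its correct side; the value
    itself is the distance from the hyperplane to the closest point(s).
    """
    norm_w = math.sqrt(sum(wj * wj for wj in w))
    return min(
        yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b) / norm_w
        for xi, yi in zip(X, y)
    )

# Hypothetical toy data: two points per class, labels in {-1, +1}.
X = [(2.0, 2.0), (3.0, 3.0), (-2.0, -2.0), (-3.0, -3.0)]
y = [1, 1, -1, -1]
w, b = (0.25, 0.25), 0.0

print(geometric_margin(w, b, X, y))              # about 2.83, i.e. 1 / ||w||
print(2 / math.sqrt(sum(wj * wj for wj in w)))   # full band width 2 / ||w||, about 5.66
```

Here the closest points (2, 2) and (−2, −2) satisfy y_i(w·x_i + b) = 1 exactly, so the distance to each of them is 1/‖w‖.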

What is Hard Margin SVM?

Hard Margin SVM is a type of Support Vector Machine that finds a linear separator between the classes by maximizing the margin while allowing no misclassification on the training data. It assumes that the data are linearly separable and noise-free, so every point must lie on or outside the margin boundaries. This makes it sensitive to outliers in two ways. A single mislabeled point on the wrong side of the boundary leaves the optimization problem with no feasible solution, and a point close to the boundary can shrink the margin sharply.
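Written out, the standard hard-margin primal problem for n training pairs (x_i, y_i) with labels y_i ∈ {−1, +1} is the quadratic program below. The symbols w (normal vector) and b (offset) are notation assumed here.

```latex
\min_{\mathbf{w},\, b} \quad \frac{1}{2}\lVert \mathbf{w} \rVert^2
\qquad \text{subject to} \quad y_i\,(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1, \quad i = 1, \dots, n
```

Minimizing ‖w‖ is equivalent to maximizing the margin 1/‖w‖. If even one point cannot satisfy its constraint, the feasible set is empty and no hard-margin solution exists.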

What is Soft Margin SVM?

Soft Margin SVM is a variant of the support vector machine that allows some misclassifications to achieve better generalization on noisy data or data that are not linearly separable. It introduces one slack variable per training point to permit margin violations, and a penalty parameter C sets the trade-off between maximizing the margin and minimizing classification errors. This flexibility makes Soft Margin SVM effective for real-world datasets where perfect separation is impractical.
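In the same assumed notation, the standard soft-margin primal adds a slack ξ_i ≥ 0 to each constraint and charges C per unit of slack. Eliminating the slacks gives the equivalent unconstrained hinge-loss form.

```latex
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \quad \frac{1}{2}\lVert \mathbf{w} \rVert^2 + C \sum_{i=1}^{n} \xi_i
\qquad \text{subject to} \quad y_i\,(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1 - \xi_i, \quad \xi_i \ge 0

\min_{\mathbf{w},\, b} \quad \frac{1}{2}\lVert \mathbf{w} \rVert^2
  + C \sum_{i=1}^{n} \max\bigl(0,\; 1 - y_i(\mathbf{w}^\top \mathbf{x}_i + b)\bigr)
```

The slack values can be read as follows:
- ξ_i = 0: the point lies on or outside its margin boundary.
- 0 < ξ_i < 1: the point sits inside the margin but on the correct side.
- ξ_i = 1: the point lies on the decision boundary.
- ξ_i > 1: the point is misclassified.

Setting every ξ_i = 0 recovers the hard-margin constraints.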

Key Differences Between Hard Margin and Soft Margin SVM

Hard Margin SVM allows no margin violations and has a solution only when the data are linearly separable, which makes it highly sensitive to outliers. Soft Margin SVM adds slack variables, weighted by a regularization parameter C, that let some points violate the margin or be misclassified, so it also works on non-separable and noisy data. The key difference is Hard Margin's rigid boundary versus Soft Margin's adjustable trade-off between margin maximization and error minimization. On separable data, a sufficiently large C makes the soft-margin solution coincide with the hard-margin one.
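The relationship between the two formulations can be checked numerically. The sketch below fixes a hyperplane and computes each point's slack ξ_i = max(0, 1 − y_i(w·x_i + b)) along with the soft-margin objective ½‖w‖² + C·Σξ_i. It shows that the hard-margin constraints hold exactly when all slacks are zero. The function names, the fixed hyperplane, and the outlier point are hypothetical choices for illustration.

```python
def slacks(w, b, X, y):
    """Slack xi_i = max(0, 1 - y_i * (w . x_i + b)) for each training point."""
    return [max(0.0, 1.0 - yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b))
            for xi, yi in zip(X, y)]

def soft_margin_objective(w, b, X, y, C):
    """0.5 * ||w||^2 + C * (sum of slacks), i.e. the hinge-loss form."""
    return 0.5 * sum(wj * wj for wj in w) + C * sum(slacks(w, b, X, y))

def satisfies_hard_margin(w, b, X, y):
    """Hard-margin constraints y_i (w . x_i + b) >= 1 hold iff every slack is 0."""
    return all(s == 0.0 for s in slacks(w, b, X, y))

# Hypothetical data: the last point is a negative-class outlier on the positive side.
X = [(2.0, 2.0), (3.0, 3.0), (-2.0, -2.0), (0.5, 0.5)]
y = [1, 1, -1, -1]
w, b = (0.25, 0.25), 0.0

print(slacks(w, b, X, y))                      # outlier has slack > 1 (misclassified)
print(soft_margin_objective(w, b, X, y, 1.0))
print(satisfies_hard_margin(w, b, X, y))       # False because of the outlier
print(satisfies_hard_margin(w, b, X[:3], y[:3]))  # True once the outlier is dropped
```

The soft-margin objective remains finite when the outlier is present, while the hard-margin constraints simply fail.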

Advantages and Disadvantages of Hard Margin SVM

Hard Margin SVM excels at separating linearly separable data with the maximum margin, producing a clear decision boundary and low bias. Its major disadvantage is its sensitivity to noise and outliers. A single point near the boundary can shrink the margin drastically, which leads to overfitting and poor generalization. When classes overlap, no hard-margin solution exists at all, whereas Soft Margin SVM still works by allowing margin violations.
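A one-dimensional case shows this sensitivity exactly. With positives assumed to lie to the right of negatives, the hard-margin threshold is the midpoint between the largest negative and the smallest positive, and the margin is half of that gap. This follows from minimizing w² subject to the constraints at those two points. The sketch below is a hypothetical helper restricted to that 1D, positives-on-the-right case. It is not a general SVM solver.

```python
def hard_margin_1d(neg, pos):
    """Exact 1D hard-margin SVM, assuming all positives should lie to the right.

    Returns (threshold, margin), where margin is the distance from the
    threshold to the closest point, or None if no threshold puts every
    positive to the right of every negative (the problem is infeasible).
    """
    left, right = max(neg), min(pos)
    if right <= left:
        return None  # classes overlap: no hard-margin solution exists
    return (left + right) / 2, (right - left) / 2

print(hard_margin_1d([0.0, 1.0], [4.0, 5.0]))        # clean data: wide margin
print(hard_margin_1d([0.0, 1.0, 3.5], [4.0, 5.0]))   # one outlier near the boundary
print(hard_margin_1d([0.0, 1.0, 4.5], [4.0, 5.0]))   # one mislabeled point: infeasible
```

Adding a single negative point at 3.5 cuts the margin from 1.5 to 0.25, and moving it to 4.5 leaves no solution at all.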

Pros and Cons of Soft Margin SVM

Soft Margin SVM offers greater flexibility by allowing some misclassification, which improves generalization on noisy data or data that are not linearly separable and makes it more robust in real-world applications. It handles overlapping classes more effectively than Hard Margin SVM and reduces overfitting through slack variables and a regularization parameter C that balances margin width against training error. However, tuning C is critical. A low value increases tolerance to misclassifications but may lead to underfitting, whereas a high value prioritizes fewer misclassifications but risks overfitting.
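The effect of C can be observed with a small solver. The sketch below is a minimal teaching solver, not a production one. It applies full-batch subgradient descent with step size 1/t to the hinge-loss form J(w, b) = ½‖w‖² + C·Σ max(0, 1 − y_i(w·x_i + b)). The function name, iteration count, and toy dataset are hypothetical. Real libraries solve the dual or use specialized solvers instead.

```python
import math

def train_soft_margin(X, y, C, iters=10_000):
    """Subgradient descent (step 1/t) on the soft-margin hinge-loss objective
        J(w, b) = 0.5 * ||w||^2 + C * sum_i max(0, 1 - y_i * (w . x_i + b)).
    A teaching sketch only.
    """
    d = len(X[0])
    w, b = [0.0] * d, 0.0
    for t in range(1, iters + 1):
        # Sum y_i * x_i (and y_i) over points with a nonzero hinge loss.
        gw, gb = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            if yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b) < 1:
                for j in range(d):
                    gw[j] += yi * xi[j]
                gb += yi
        eta = 1.0 / t
        # Subgradient w.r.t. w is (w - C * gw); w.r.t. b it is -C * gb.
        w = [wj - eta * (wj - C * gj) for wj, gj in zip(w, gw)]
        b += eta * C * gb
    return w, b

# Hypothetical, linearly separable toy data.
X = [(2.0, 2.0), (3.0, 3.0), (2.0, 3.0), (-2.0, -2.0), (-3.0, -3.0), (-2.0, -3.0)]
y = [1, 1, 1, -1, -1, -1]

for C in (0.01, 10.0):
    w, b = train_soft_margin(X, y, C)
    width = 2 / math.sqrt(sum(wj * wj for wj in w))
    print(f"C={C}: w=({w[0]:.3f}, {w[1]:.3f}), b={b:.3f}, margin width={width:.2f}")
```

With C = 0.01, every point ends up inside the margin: ‖w‖ stays small and the band is wide. With C = 10, violations are expensive, and the result approaches the hard-margin solution w ≈ (0.25, 0.25) for this separable data.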

Practical Applications of Hard and Soft Margin SVM

Hard Margin SVM is suited only to linearly separable data without noise. It applies in scenarios such as spam detection or image classification only when the features separate the classes cleanly, which is uncommon with real data. Soft Margin SVM handles noisy data and data that are not linearly separable by allowing misclassifications, making it a better fit for real-world applications such as medical diagnosis and financial fraud detection. This flexibility improves generalization and robustness when the data distribution is imperfect.

Choosing Between Hard Margin and Soft Margin SVM

Choosing between Hard Margin and Soft Margin SVM depends on the dataset's characteristics and noise level. Hard Margin SVM works well for linearly separable data with no outliers, ensuring a strict separation with maximum margin. Soft Margin SVM introduces a tolerance for misclassification, making it suitable for real-world noisy data by balancing margin maximization and classification errors.
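A practical first check is whether Hard Margin SVM is even an option, that is, whether the training data are linearly separable. The sketch below uses a different technique for this: the classic perceptron, swapped in here and not part of SVM training. The perceptron convergence theorem guarantees that it stops with zero training errors on linearly separable data. Because the epoch cap is an arbitrary assumption, a False result is strong evidence, not proof, that the data are not separable. The function name is hypothetical.

```python
def looks_linearly_separable(X, y, max_epochs=1000):
    """Run the perceptron; return True once an epoch makes zero mistakes.

    Labels must be in {-1, +1}. On separable data the perceptron is
    guaranteed to stop; hitting max_epochs suggests, but does not prove,
    that the data are not linearly separable.
    """
    d = len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            if yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b) <= 0:
                # Misclassified (or on the boundary): nudge the hyperplane toward xi.
                w = [wj + yi * xj for wj, xj in zip(w, xi)]
                b += yi
                mistakes += 1
        if mistakes == 0:
            return True
    return False

# Hypothetical examples: a separable set and the XOR pattern.
separable_X = [(2.0, 2.0), (3.0, 3.0), (-2.0, -2.0), (-3.0, -3.0)]
separable_y = [1, 1, -1, -1]
xor_X = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0), (1.0, 0.0)]
xor_y = [-1, -1, 1, 1]

print(looks_linearly_separable(separable_X, separable_y))  # hard margin is feasible
print(looks_linearly_separable(xor_X, xor_y))              # needs soft margin (or a kernel)
```

Separability alone does not settle the choice. If a few points near the boundary look like outliers, a soft margin with C tuned by cross-validation is often still preferable.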

Conclusion: Selecting the Right SVM Margin for Your Problem

Choosing between Hard Margin SVM and Soft Margin SVM depends on dataset characteristics such as noise level and linear separability. Hard Margin SVM is ideal for perfectly separable, noise-free data but often overfits when outliers exist. Soft Margin SVM offers flexibility by allowing misclassifications, making it better suited for real-world, noisy datasets to achieve a balance between margin maximization and classification error.

Hard Margin SVM vs Soft Margin SVM Infographic

techiny.com