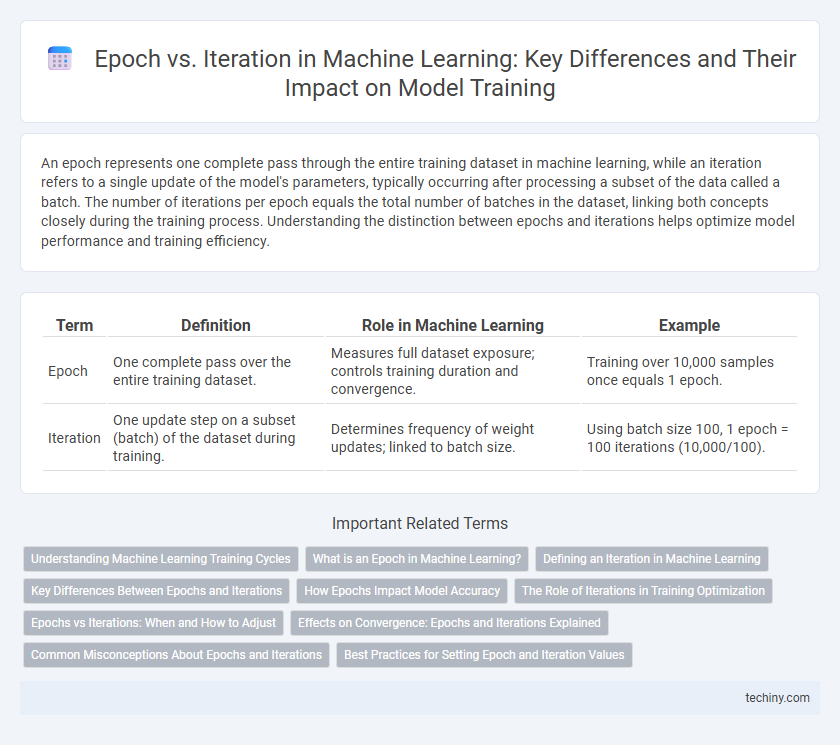

An epoch is one complete pass through the entire training dataset in machine learning, while an iteration is a single update of the model's parameters, typically made after processing a subset of the data called a batch. The two are directly linked: the number of iterations per epoch equals the number of batches in the dataset. Understanding the distinction between epochs and iterations helps when tuning training for model performance and efficiency.

Table of Comparison

| Term | Definition | Role in Machine Learning | Example |
|---|---|---|---|
| Epoch | One complete pass over the entire training dataset. | Measures full exposure to the dataset; controls training duration and convergence. | Training once over all 10,000 samples equals 1 epoch. |
| Iteration | One update step on a subset (batch) of the dataset during training. | Determines how often weights are updated; the count per epoch depends on the batch size. | With 10,000 samples and a batch size of 100, 1 epoch = 10,000 / 100 = 100 iterations. |
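The arithmetic in the table can be written as a small helper. This is a minimal sketch: the name `iterations_per_epoch` is illustrative, and the `drop_last` flag mirrors the option of the same name in PyTorch's `DataLoader`, which discards a final batch smaller than `batch_size`. When the dataset size is not a multiple of the batch size, the last, smaller batch still counts as one iteration unless it is dropped.

```python
def iterations_per_epoch(num_samples: int, batch_size: int, drop_last: bool = False) -> int:
    """Number of parameter updates (iterations) in one epoch."""
    if num_samples <= 0 or batch_size <= 0:
        raise ValueError("num_samples and batch_size must be positive")
    if drop_last:
        # Discard the final partial batch, if there is one.
        return num_samples // batch_size
    # Integer ceiling division: a final partial batch is one extra iteration.
    return (num_samples + batch_size - 1) // batch_size

print(iterations_per_epoch(10_000, 100))                  # 100, as in the table
print(iterations_per_epoch(10_050, 100))                  # 101: the last batch holds 50 samples
print(iterations_per_epoch(10_050, 100, drop_last=True))  # 100
```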

Understanding Machine Learning Training Cycles

An epoch in machine learning represents one complete pass through the entire training dataset, while an iteration refers to a single update of the model's parameters typically performed on a batch of data. Training cycles consist of multiple iterations grouped into epochs, enabling the model to gradually optimize weights through repeated exposure to input data. Precise tuning of epochs and iterations is crucial for balancing underfitting and overfitting in supervised learning tasks.
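The nesting of iterations inside epochs can be sketched as two loops. Here `train_step` is a hypothetical stand-in for whatever computes the loss and updates the weights on one batch; the sketch only counts how often it is called, so the total is simply epochs multiplied by iterations per epoch.

```python
from typing import Callable, List, Sequence

def train(data: Sequence[int], batch_size: int, num_epochs: int,
          train_step: Callable[[Sequence[int]], None]) -> int:
    """Run num_epochs full passes over data and return the total number of iterations."""
    total_iterations = 0
    for _ in range(num_epochs):                          # one epoch = one full pass
        for start in range(0, len(data), batch_size):    # one iteration per batch
            batch = data[start:start + batch_size]
            train_step(batch)                            # hypothetical: one parameter update
            total_iterations += 1
    return total_iterations

batch_sizes: List[int] = []
total = train(list(range(20)), batch_size=5, num_epochs=3,
              train_step=lambda batch: batch_sizes.append(len(batch)))
print(total)  # 12 = 3 epochs x 4 iterations per epoch
```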

What is an Epoch in Machine Learning?

An epoch in machine learning is one complete pass through the entire training dataset during the training process of a model. Within an epoch every training sample contributes to the loss once; the parameters are updated after each batch in mini-batch training, or only once per epoch in full-batch gradient descent. Multiple epochs are usually required to optimize model performance and minimize prediction errors.
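One common way to organize an epoch is to shuffle the sample indices and then cut them into batches, so that every sample is used exactly once per epoch while batch composition changes from epoch to epoch. The sketch below assumes that scheme (PyTorch's `DataLoader` with `shuffle=True` behaves similarly); `epoch_batches` is a hypothetical helper name.

```python
import random
from typing import Iterator, List

def epoch_batches(num_samples: int, batch_size: int, rng: random.Random) -> Iterator[List[int]]:
    """Yield shuffled index batches that together cover every sample exactly once."""
    indices = list(range(num_samples))
    rng.shuffle(indices)  # a fresh order for each epoch
    for start in range(0, num_samples, batch_size):
        yield indices[start:start + batch_size]

rng = random.Random(0)
for epoch in range(2):
    batches = list(epoch_batches(10, 4, rng))
    seen = sorted(i for batch in batches for i in batch)
    # Prints "0 [4, 4, 2] True", then "1 [4, 4, 2] True"
    print(epoch, [len(b) for b in batches], seen == list(range(10)))
```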

Defining an Iteration in Machine Learning

An iteration in machine learning refers to a single update cycle of the model's parameters during training, typically after processing a batch of data. It represents one step through the optimization algorithm, such as gradient descent, using the computed gradients from the current batch. Multiple iterations constitute one epoch, which is a complete pass through the entire training dataset.
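A single iteration can be illustrated with the simplest possible model, a one-parameter linear regression `y_hat = w * x` trained with mean squared error. The function name `sgd_step`, the model, and the numbers are assumptions chosen for illustration; real frameworks compute gradients automatically, but the update rule `w <- w - lr * grad` applied to one batch is the same idea.

```python
from typing import Sequence

def sgd_step(w: float, xs: Sequence[float], ys: Sequence[float], lr: float) -> float:
    """One iteration: a gradient-descent update of w for the model y_hat = w * x,
    using mean squared error computed on this batch only."""
    n = len(xs)
    # d/dw of mean((w*x - y)^2) is mean(2 * x * (w*x - y))
    grad = sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / n
    return w - lr * grad

w = 0.0
w = sgd_step(w, xs=[1.0, 2.0], ys=[2.0, 4.0], lr=0.1)  # one batch -> one update
print(w)  # 1.0
```

Repeating this step on successive batches until every sample has been used once completes one epoch.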

Key Differences Between Epochs and Iterations

An epoch in machine learning represents one complete pass through the entire training dataset, while an iteration refers to a single update of the model's parameters using a subset of data called a batch. The key difference lies in scale: multiple iterations make up one epoch, with each iteration processing a batch that contributes to the gradual learning of the model. Understanding the distinction between epochs and iterations is crucial for tuning hyperparameters such as batch size and learning rate to optimize model performance.

How Epochs Impact Model Accuracy

The number of epochs strongly influences model accuracy: training for several epochs lets the neural network see the entire training dataset multiple times, so it can learn more comprehensive feature representations. Increasing the number of epochs can improve accuracy by reducing underfitting, but too many epochs may lead to overfitting, where the model performs well on training data but poorly on unseen data. The epoch count is often chosen by monitoring validation loss, balancing training completeness against generalization.

The Role of Iterations in Training Optimization

Each iteration in machine learning training processes one batch, so the number of iterations in an epoch equals the number of batches, and the model's weights are updated incrementally many times per pass over the data. Each iteration computes gradients on a subset of the training data and adjusts the parameters, which often reaches a good solution in fewer passes than full-batch gradient descent, where the whole dataset yields only one update per epoch. The batch size, and therefore the number of iterations per epoch, also influences how well the model generalizes, since smaller batches produce noisier gradient estimates.
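To see why several iterations per epoch can speed up progress, the sketch below runs one epoch of gradient descent on a toy one-parameter model twice: once with the whole dataset as a single batch (one update) and once with batches of 5 (four updates). The data, learning rate, and the helper `run_epoch` are illustrative assumptions; with a different learning rate or noisy data the gap can change, and the comparison says nothing about generalization.

```python
from typing import List, Sequence

def run_epoch(w: float, xs: Sequence[float], ys: Sequence[float],
              batch_size: int, lr: float) -> float:
    """One epoch of gradient descent on y_hat = w * x with MSE loss.
    batch_size == len(xs) gives full-batch gradient descent (one update);
    a smaller batch_size gives mini-batch gradient descent (several updates)."""
    for start in range(0, len(xs), batch_size):
        bx = xs[start:start + batch_size]
        by = ys[start:start + batch_size]
        grad = sum(2 * x * (w * x - y) for x, y in zip(bx, by)) / len(bx)
        w -= lr * grad
    return w

# Synthetic, noise-free data from y = 3x; no shuffling, so results are reproducible.
xs: List[float] = [i / 10 for i in range(1, 21)]
ys: List[float] = [3 * x for x in xs]

w_full = run_epoch(0.0, xs, ys, batch_size=len(xs), lr=0.1)  # 1 iteration
w_mini = run_epoch(0.0, xs, ys, batch_size=5, lr=0.1)        # 4 iterations
print(f"full batch: w = {w_full:.3f}, mini-batch: w = {w_mini:.3f} (target 3.0)")
```

With these settings the mini-batch run ends much closer to the target of 3.0 after the same single pass over the data, because it applies four updates instead of one.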

Epochs vs Iterations: When and How to Adjust

Epochs are complete passes through the entire training dataset, while iterations are the individual batch updates within each epoch of machine learning training. Adjust the number of epochs to control overall training duration and convergence: more epochs can help prevent underfitting but may lead to overfitting if set too high. The number of iterations per epoch is changed mainly through the batch size, which affects computational efficiency and gradient stability; balancing these parameters is key to good model performance.
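Gradient stability can be made concrete by measuring how much the batch-averaged gradient fluctuates for different batch sizes. The sketch draws random batches from a list of synthetic per-sample gradient values (an assumption standing in for real gradients of a single parameter) and reports the variance of their means; `batch_gradient_variance` is a hypothetical helper.

```python
import random
import statistics
from typing import List

def batch_gradient_variance(per_sample_grads: List[float], batch_size: int,
                            num_draws: int, rng: random.Random) -> float:
    """Variance of the mini-batch mean gradient across randomly drawn batches."""
    means = [statistics.fmean(rng.sample(per_sample_grads, batch_size))
             for _ in range(num_draws)]
    return statistics.pvariance(means)

rng = random.Random(42)
# Hypothetical per-sample gradients for one parameter.
grads = [rng.gauss(1.0, 2.0) for _ in range(1_000)]
for b in (1, 10, 100):
    print(b, round(batch_gradient_variance(grads, b, 2_000, rng), 4))
```

The variance shrinks roughly in proportion to 1 / batch size, which is the trade-off in practice: larger batches give smoother updates but fewer of them per epoch.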

Effects on Convergence: Epochs and Iterations Explained

Epochs count the complete passes through the entire training dataset, while iterations are the batch updates within each epoch. Training for more epochs lets the model learn more comprehensive patterns, which helps it converge but risks overfitting if the count is too high. Balancing epochs and iterations is crucial for efficient convergence, so that the model generalizes well without excessive training time.

Common Misconceptions About Epochs and Iterations

Epochs are complete passes through the entire training dataset, while iterations are the batch updates within an epoch, a distinction often misunderstood in machine learning. Many beginners equate epochs with iterations, not realizing that one epoch contains multiple iterations and that the exact number depends on the batch size. Getting this difference right is essential for correctly interpreting training progress and tuning hyperparameters.
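Training logs often report a global iteration (step) counter rather than an epoch number, which is one place where the two get confused. Converting between them is a single integer division, sketched below with a hypothetical helper `locate_step`; it assumes every epoch has the same number of iterations and that both counters start at 0.

```python
from typing import Tuple

def locate_step(global_step: int, iters_per_epoch: int) -> Tuple[int, int]:
    """Map a 0-based global iteration counter to (epoch, iteration within epoch), both 0-based."""
    if global_step < 0 or iters_per_epoch <= 0:
        raise ValueError("global_step must be >= 0 and iters_per_epoch > 0")
    return divmod(global_step, iters_per_epoch)

# 10,000 samples with batch size 100 -> 100 iterations per epoch (as in the table).
print(locate_step(0, 100))    # (0, 0): first iteration of the first epoch
print(locate_step(250, 100))  # (2, 50): 51st iteration of the third epoch
```

So "step 250" in such a log means partway through the third epoch, not epoch 250.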

Best Practices for Setting Epoch and Iteration Values

Choosing suitable epoch and iteration values keeps model training efficient and helps prevent overfitting. A common best practice is to monitor validation loss to decide how many epochs to train, often with early stopping. The number of iterations per epoch follows from the batch size and the dataset size: smaller batches increase the iteration count per epoch and can sometimes improve convergence and generalization.
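Early stopping can be sketched by replaying a list of per-epoch validation losses. The parameter names `patience` and `min_delta` echo Keras's `EarlyStopping` callback, but this is a standalone illustration rather than that API, and the loss values below are hypothetical.

```python
from typing import Sequence, Tuple

def early_stopping(val_losses: Sequence[float], patience: int,
                   min_delta: float = 0.0) -> Tuple[int, int]:
    """Replay per-epoch validation losses and return (stop_epoch, best_epoch), 0-based.

    Training stops once `patience` consecutive epochs fail to improve on the best
    loss by more than `min_delta`.
    """
    if not val_losses:
        raise ValueError("val_losses must not be empty")
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Ran out of epochs without triggering the stop condition.
    return len(val_losses) - 1, best_epoch

# Hypothetical validation curve: improves, then rises as the model starts to overfit.
losses = [0.90, 0.60, 0.45, 0.40, 0.41, 0.43, 0.47, 0.52]
print(early_stopping(losses, patience=3))  # (6, 3)
```

In a real training loop the same check runs after each epoch, and the weights saved at `best_epoch` are typically restored when training stops.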

Epoch vs Iteration Infographic

techiny.com