Instance-based learning stores training data and makes predictions by comparing new inputs to these examples, enabling flexible adaptation to changing data patterns without explicit generalization. Model-based learning constructs a generalized model by extracting patterns from the training data, allowing faster predictions on new instances but requiring retraining when data distributions shift. Selecting between these approaches depends on the balance between computational efficiency, adaptability, and the nature of the data.

Table of Comparison

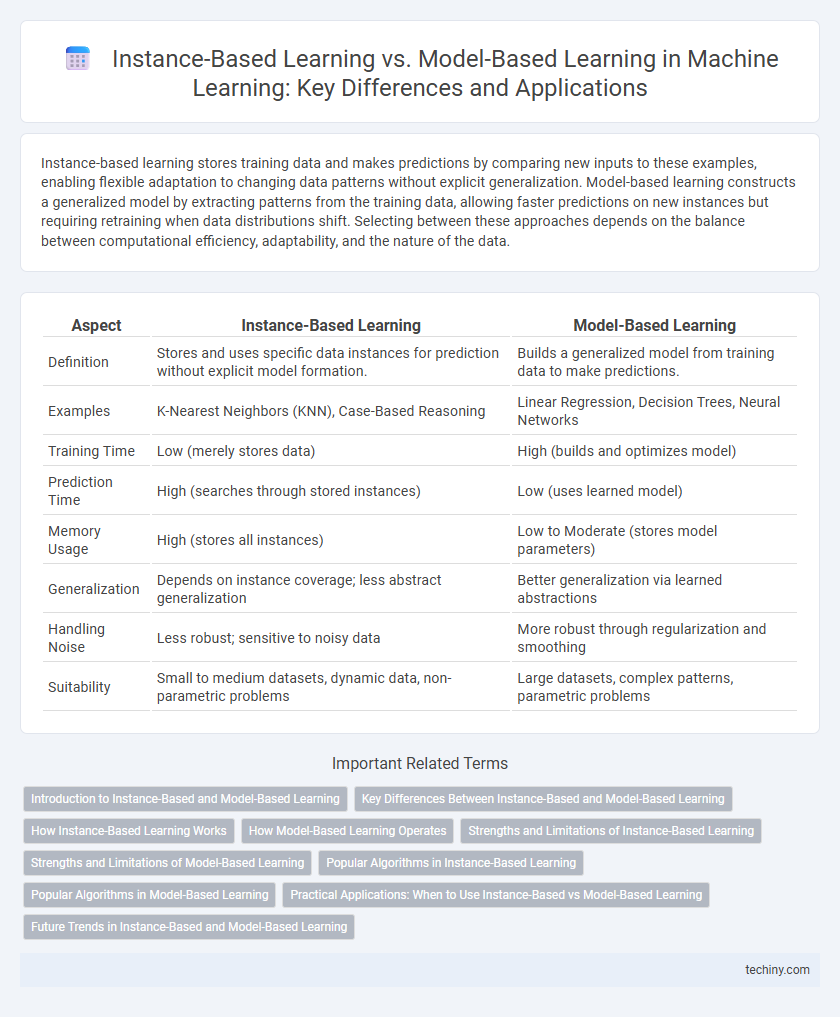

| Aspect | Instance-Based Learning | Model-Based Learning |
|---|---|---|
| Definition | Stores specific training instances and predicts from them without forming an explicit model. | Builds a generalized model from the training data and predicts with it. |
| Examples | K-Nearest Neighbors (KNN), Case-Based Reasoning | Linear Regression, Decision Trees, Neural Networks |
| Training Time | Low (only stores the data) | High (builds and optimizes the model) |
| Prediction Time | High (searches the stored instances) | Low (evaluates the learned model) |
| Memory Usage | High (stores all instances) | Low to moderate (stores model parameters) |
| Generalization | Depends on how well the stored instances cover the input space; less abstract generalization | Better generalization via learned abstractions |
| Handling Noise | Less robust; sensitive to noisy data, especially when few neighbors are consulted | More robust through regularization and smoothing |
| Suitability | Small to medium datasets, dynamic data, non-parametric problems | Large datasets, complex patterns, settings that need fast predictions |

Introduction to Instance-Based and Model-Based Learning

Instance-based learning algorithms, such as k-nearest neighbors, store specific training examples and make predictions by comparing new instances directly to those stored data points. Model-based learning methods, including decision trees and neural networks, build explicit models that generalize from the training data and make predictions through learned parameters and structures. Understanding the fundamental differences helps in selecting appropriate techniques based on data size, computational resources, and problem complexity.

Key Differences Between Instance-Based and Model-Based Learning

Instance-based learning stores specific examples from the training data and uses them for prediction, emphasizing memorization over generalization. Model-based learning instead infers an abstract representation or function from the data, which allows faster predictions. Instance-based methods such as k-nearest neighbors need substantial memory and computation at query time, whereas model-based techniques such as neural networks and decision trees spend more resources during training in exchange for efficient inference. The choice depends on the trade-off between training time, prediction speed, memory usage, and the ability to generalize to unseen data.
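To make the trade-off concrete, here is a minimal Python sketch (not taken from any library) of where the work happens in each paradigm. It assumes 1-D regression, uses a 1-nearest-neighbor regressor as the instance-based stand-in and a least-squares line as the model-based stand-in, and adds a hypothetical `last_query_work` counter purely for illustration.

```python
class NearestNeighborRegressor:
    """Instance-based: fit() just memorizes; predict() scans every stored example."""

    def fit(self, xs, ys):
        self.points = list(zip(xs, ys))  # the "model" is the training data itself
        return self

    def predict(self, x):
        # Illustrative counter: one distance computation per stored point.
        self.last_query_work = len(self.points)
        nearest = min(self.points, key=lambda p: abs(p[0] - x))
        return nearest[1]


class LineRegressor:
    """Model-based: fit() summarizes the data into two numbers; predict() uses only those."""

    def fit(self, xs, ys):
        n = len(xs)
        mx, my = sum(xs) / n, sum(ys) / n
        sxx = sum((x - mx) ** 2 for x in xs)
        sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
        self.slope = sxy / sxx
        self.intercept = my - self.slope * mx
        return self

    def predict(self, x):
        # Illustrative counter: only the slope and intercept are touched.
        self.last_query_work = 2
        return self.intercept + self.slope * x


xs = list(range(1000))
ys = [3 * x + 1 for x in xs]
knn = NearestNeighborRegressor().fit(xs, ys)  # cheap to "train", keeps all 1000 pairs
line = LineRegressor().fit(xs, ys)            # scans the data once, keeps 2 numbers
print(knn.predict(500.2), knn.last_query_work)    # work grows with the dataset
print(line.predict(500.2), line.last_query_work)  # work is constant per query
```

The counters stand in for real timing: the nearest-neighbor query cost grows linearly with the number of stored points, while the line's query cost stays fixed no matter how much data it was trained on.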

How Instance-Based Learning Works

Instance-based learning stores training examples and makes predictions by comparing new inputs to these stored instances using similarity metrics like Euclidean distance or cosine similarity. It defers generalization until query time, relying on algorithms such as k-nearest neighbors (k-NN) to classify or regress based on the closest examples. This approach excels in scenarios with complex or irregular decision boundaries, where explicit model construction is difficult or computationally expensive.
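A minimal sketch of k-NN in plain Python, assuming small in-memory datasets of numeric feature tuples; the function names and the default `k=3` are illustrative, not from any particular library. Both Euclidean distance and cosine distance (one minus cosine similarity) are included so the metric can be swapped.

```python
import math
from collections import Counter


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_distance(a, b):
    # 1 - cosine similarity; assumes neither vector is all zeros.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def knn_classify(train_X, train_y, query, k=3, distance=euclidean):
    """Classify `query` by majority vote among its k nearest stored examples.

    Nothing is learned ahead of time: all the work happens here, at query time.
    """
    ranked = sorted(zip(train_X, train_y), key=lambda item: distance(item[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


def knn_regress(train_X, train_y, query, k=3, distance=euclidean):
    """Regression variant: average the targets of the k nearest examples."""
    ranked = sorted(zip(train_X, train_y), key=lambda item: distance(item[0], query))
    return sum(target for _, target in ranked[:k]) / k
```

Usage: with `X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (7.0, 7.5), (6.5, 5.0)]` and labels `["a", "a", "a", "b", "b", "b"]`, `knn_classify(X, labels, (1.2, 1.4))` returns `"a"`. Sorting every stored point per query is the simplest correct approach; larger datasets usually call for an index structure instead.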

How Model-Based Learning Operates

Model-based learning constructs an abstract representation or function from training data, enabling predictions for unseen instances. It typically involves algorithms such as linear regression, neural networks, or decision trees that generalize patterns within the dataset. Unlike instance-based learning, inference uses a single learned model rather than the stored training data, which reduces storage requirements and speeds up prediction.
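As an illustration, here is a minimal sketch of linear regression fitted by gradient descent on mean squared error, for a single input feature. Gradient descent is chosen to show parameter optimization explicitly (a closed-form least-squares solution would also work), and the `learning_rate` and `epochs` defaults are illustrative assumptions, not tuned values.

```python
def fit_linear_regression(xs, ys, learning_rate=0.01, epochs=5000):
    """Learn y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def predict(model, x):
    # Inference needs only the learned parameters, not the training data.
    w, b = model
    return w * x + b


model = fit_linear_regression([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(model)              # close to (2.0, 1.0) for data generated by y = 2x + 1
print(predict(model, 10))
```

Once `model` is computed, the training lists could be discarded entirely: this is the storage and speed advantage the paragraph describes.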

Strengths and Limitations of Instance-Based Learning

Instance-based learning is highly adaptable: it needs no explicit training step, because it simply stores examples and compares new inputs against them, so updates are quick and complex, non-linear patterns are handled naturally. Its limitations are high storage demands, prediction times that grow with the size of the dataset, and vulnerability to noisy data and irrelevant features, both of which can degrade accuracy. It performs best when memory is plentiful and the system must adapt quickly to new data, and it struggles with very large datasets or high-dimensional feature spaces.
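The sketch below illustrates two of these points on a made-up 2-D dataset: an update is just an append (no retraining), and a single mislabeled point can flip the prediction when only one neighbor is consulted. The data, labels, and `knn_predict` helper are hypothetical and chosen for illustration.

```python
import math
from collections import Counter


def knn_predict(examples, query, k):
    """Majority vote among the k stored (point, label) pairs closest to `query`."""
    nearest = sorted(examples, key=lambda ex: math.dist(ex[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Hypothetical data: class "red" near the origin, class "blue" further out.
examples = [
    ((0.0, 0.0), "red"),
    ((0.5, 0.2), "red"),
    ((0.2, 0.6), "red"),
    ((3.0, 3.0), "blue"),
    ((3.5, 2.8), "blue"),
    ((2.8, 3.6), "blue"),
]

# Updating an instance-based learner is just appending to memory: no retraining.
# This particular point is mislabeled (noise) and sits inside the red cluster.
examples.append(((0.3, 0.3), "blue"))

query = (0.35, 0.32)
print(knn_predict(examples, query, k=1))  # prints "blue": the noisy point is the nearest neighbor
print(knn_predict(examples, query, k=3))  # prints "red": two red neighbors outvote it
```

Consulting more neighbors smooths out isolated noise, at the cost of blurring genuinely small regions of a class.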

Strengths and Limitations of Model-Based Learning

Model-based learning excels at capturing general patterns in the training data, which enables efficient prediction on unseen instances and lowers memory requirements compared with instance-based methods. Because it commits to a predefined model structure, it is less flexible: a model that is too simple underfits, while one that is too complex overfits. Training can be computationally intensive, but once trained, model-based approaches offer faster inference and scale better to large datasets.
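The underfitting side of this trade-off can be seen with a toy example, assuming data generated by y = x². A straight line in x cannot represent the curve, so its best fit is flat; fitting the same line learner on the engineered feature z = x² gives the model enough structure to fit exactly. The data and helper names are hypothetical.

```python
def fit_line(xs, ys):
    """Closed-form least-squares fit of y ~ slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def mse(model, xs, ys):
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [x * x for x in xs]  # the true relationship is quadratic

# Too-simple structure: a straight line in x cannot bend, so it underfits.
line = fit_line(xs, ys)
print(line, mse(line, xs, ys))  # (0.0, 2.0) 2.8

# Same learner, richer structure: fit a line in the feature z = x**2 instead.
zs = [x * x for x in xs]
curve = fit_line(zs, ys)
print(curve, mse(curve, zs, ys))  # (1.0, 0.0) 0.0
```

The opposite failure, overfitting, appears when the model has so much capacity that it also fits the noise in the training data; this toy dataset is noise-free, so it only shows underfitting.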

Popular Algorithms in Instance-Based Learning

Instance-based learning algorithms such as K-Nearest Neighbors (KNN), Radius Neighbors, and Locally Weighted Regression make predictions directly from the training instances instead of from an explicit model. They rely on distance metrics, such as Euclidean or Manhattan distance, to identify the most relevant data points for classification or regression. The approach works well for complex decision boundaries and small to medium-sized datasets, particularly when predictions should be explainable by pointing to similar examples and the system must adapt quickly to new data.
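Locally Weighted Regression shows the instance-based idea applied to regression. The following is a minimal 1-D sketch, assuming a Gaussian weighting kernel with a bandwidth parameter `tau` (a common choice, but an assumption here): every query fits its own weighted least-squares line over the stored data and then throws that fit away.

```python
import math


def lwr_predict(xs, ys, x0, tau=1.0):
    """Locally weighted linear regression at a single query point x0 (1-D inputs).

    Each training point gets a Gaussian weight based on its distance to x0; a
    weighted least-squares line is fitted on the fly and evaluated at x0. The fit
    is discarded afterwards, so every new query repeats the work.
    """
    weights = [math.exp(-((x - x0) ** 2) / (2 * tau ** 2)) for x in xs]
    total = sum(weights)
    mx = sum(w * x for w, x in zip(weights, xs)) / total
    my = sum(w * y for w, y in zip(weights, ys)) / total
    sxx = sum(w * (x - mx) ** 2 for w, x in zip(weights, xs))
    sxy = sum(w * (x - mx) * (y - my) for w, x, y in zip(weights, xs, ys))
    if sxx == 0.0:
        return my  # all weight on a single x value: fall back to the weighted mean
    slope = sxy / sxx
    return my + slope * (x0 - mx)
```

A small `tau` makes the prediction depend almost entirely on nearby points, which lets the method follow curved data (for example y = x²) much more closely than one global line; a large `tau` approaches ordinary least squares over all the data.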

Popular Algorithms in Model-Based Learning

Popular algorithms in model-based learning include decision trees, support vector machines (SVM), and neural networks, which construct generalized models from training data. These algorithms capture underlying patterns by optimizing parameters to minimize an error function, and then use the resulting model to predict unseen instances. Model-based learning thrives where the data distribution is complex and predictions require generalization beyond memorizing specific examples.
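The simplest decision tree, a depth-1 "stump", already shows the model-based pattern of searching for parameters that minimize an error function. This sketch assumes numeric features and uses training misclassification count as the error; full decision-tree implementations typically recurse on each side and use criteria such as Gini impurity or entropy instead. SVMs and neural networks are not shown.

```python
from collections import Counter


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X, y):
    """Fit a depth-1 decision tree by minimizing training misclassifications.

    Tries every feature and every midpoint between consecutive distinct values,
    and keeps the split whose majority-vote leaves make the fewest errors.
    """
    best = None
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [label for row, label in zip(X, y) if row[j] <= t]
            right = [label for row, label in zip(X, y) if row[j] > t]
            left_label, right_label = majority(left), majority(right)
            errors = sum(l != left_label for l in left) + sum(r != right_label for r in right)
            if best is None or errors < best[0]:
                best = (errors, j, t, left_label, right_label)
    if best is None:
        # Every feature is constant: predict the overall majority everywhere.
        label = majority(y)
        return {"feature": 0, "threshold": float("inf"), "left": label, "right": label}
    _, j, t, left_label, right_label = best
    return {"feature": j, "threshold": t, "left": left_label, "right": right_label}


def predict_stump(stump, row):
    # The learned model is just four values; the training data is no longer needed.
    return stump["left"] if row[stump["feature"]] <= stump["threshold"] else stump["right"]
```

Usage: for `X = [[1, 5], [2, 4], [3, 7], [6, 1], [7, 2], [8, 3]]` with labels `[0, 0, 0, 1, 1, 1]`, the stump splits feature 0 at 4.5, so `predict_stump(stump, [5, 5])` returns `1`.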

Practical Applications: When to Use Instance-Based vs Model-Based Learning

Instance-based learning excels in scenarios requiring quick adaptation to new data without extensive training, such as recommendation systems and anomaly detection in dynamic environments. Model-based learning is preferred for complex pattern recognition and prediction tasks, like image classification and natural language processing, where generalization from large datasets is critical. Choosing between these approaches depends on factors like dataset size, computational resources, and the need for interpretability versus accuracy.
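For the anomaly-detection case, a common instance-based scheme scores a point by its distance to the k-th nearest stored "normal" observation. The sketch below assumes 2-D readings; the class name, `k`, and the `threshold` value are hypothetical and would need tuning per application. Adapting to drifting behavior is simply a matter of storing new observations.

```python
import math


class KNNAnomalyDetector:
    """Instance-based anomaly scoring: distance to the k-th nearest stored normal point."""

    def __init__(self, k=2, threshold=1.0):
        self.k = k
        self.threshold = threshold  # hypothetical cut-off; tune per application
        self.memory = []

    def add(self, point):
        # Adapting to new behavior is just storing the new observation.
        self.memory.append(point)

    def score(self, point):
        if not self.memory:
            return math.inf  # nothing stored yet, so everything looks unusual
        distances = sorted(math.dist(point, p) for p in self.memory)
        return distances[min(self.k, len(distances)) - 1]

    def is_anomaly(self, point):
        return self.score(point) > self.threshold


detector = KNNAnomalyDetector(k=2, threshold=1.0)
for p in [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (0.3, 0.2)]:
    detector.add(p)
print(detector.is_anomaly((0.15, 0.2)))  # False: close to stored normal readings
print(detector.is_anomaly((5.0, 5.0)))   # True: far from everything stored

# The environment drifts: readings near (5, 5) become normal and are stored.
for p in [(5.0, 5.1), (4.9, 5.0), (5.2, 4.9)]:
    detector.add(p)
print(detector.is_anomaly((5.0, 5.0)))   # False: no retraining was needed
```

A model-based detector would instead need to be refitted to absorb the new normal region, which is the adaptation cost the paragraph contrasts.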

Future Trends in Instance-Based and Model-Based Learning

Future trends in instance-based learning emphasize scalability improvements through advanced indexing techniques and hybrid algorithms that combine memory efficiency with rapid retrieval. In model-based learning, developments prioritize explainable AI and continual learning frameworks to enhance adaptability and interpretability across diverse applications. Integration of both paradigms is expected to drive robust, context-aware systems leveraging the strengths of direct data utilization and generalized models.

instance-based learning vs model-based learning Infographic

techiny.com