PCA reduces dimensionality by projecting data onto principal components that capture the most variance, making it effective for linear patterns and large datasets. t-SNE excels in visualizing complex, non-linear structures by modeling pairwise similarities in a low-dimensional space, often revealing clusters. While PCA is computationally efficient and interpretable, t-SNE provides more detailed insights into data topology at the cost of higher computational demands.

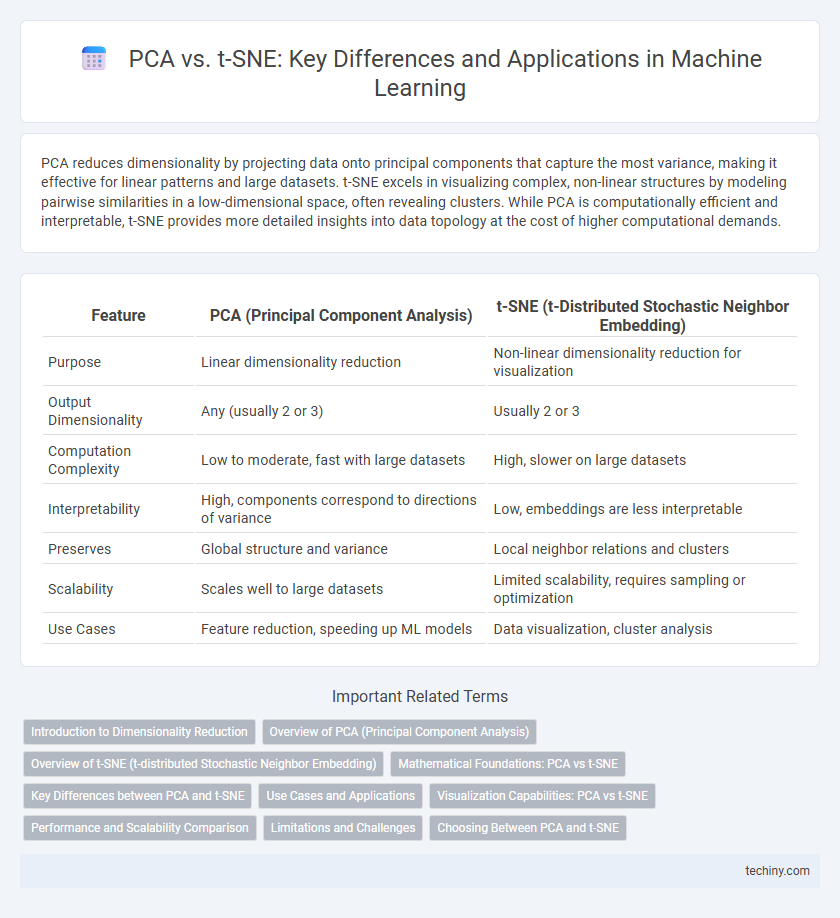

Table of Comparison

| Feature | PCA (Principal Component Analysis) | t-SNE (t-Distributed Stochastic Neighbor Embedding) |

|---|---|---|

| Purpose | Linear dimensionality reduction | Non-linear dimensionality reduction for visualization |

| Output Dimensionality | Any (usually 2 or 3) | Usually 2 or 3 |

| Computation Complexity | Low to moderate, fast with large datasets | High, slower on large datasets |

| Interpretability | High, components correspond to directions of variance | Low, embeddings are less interpretable |

| Preserves | Global structure and variance | Local neighbor relations and clusters |

| Scalability | Scales well to large datasets | Limited scalability, requires sampling or optimization |

| Use Cases | Feature reduction, speeding up ML models | Data visualization, cluster analysis |

Introduction to Dimensionality Reduction

Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) are key techniques in dimensionality reduction, essential for simplifying high-dimensional machine learning data. PCA focuses on linear transformations to maximize variance and preserve global structure, making it ideal for initial data exploration and feature extraction. In contrast, t-SNE excels in preserving local relationships through nonlinear embeddings, often used for visualizing complex datasets in two or three dimensions.

Overview of PCA (Principal Component Analysis)

Principal Component Analysis (PCA) is a linear dimensionality reduction technique that transforms high-dimensional data into a lower-dimensional space by identifying the principal components with the highest variance. It reduces redundancy and noise by projecting data onto orthogonal axes, maximizing variance retention while simplifying the dataset. PCA is computationally efficient, making it widely used for preprocessing, visualization, and feature extraction in machine learning tasks.

Overview of t-SNE (t-distributed Stochastic Neighbor Embedding)

t-SNE (t-distributed Stochastic Neighbor Embedding) is a nonlinear dimensionality reduction technique designed for visualizing high-dimensional data by modeling pairwise similarities with probability distributions. It converts similarities between data points into joint probabilities and minimizes the Kullback-Leibler divergence between these distributions in low-dimensional space, effectively preserving local neighborhood structures. t-SNE excels at revealing complex clusters and patterns that linear methods like PCA cannot capture, making it ideal for exploratory data analysis and visualization tasks.

Mathematical Foundations: PCA vs t-SNE

Principal Component Analysis (PCA) operates through linear algebra by identifying orthogonal eigenvectors of the covariance matrix, projecting high-dimensional data onto lower-dimensional subspaces that maximize variance. In contrast, t-Distributed Stochastic Neighbor Embedding (t-SNE) employs a probabilistic approach by modeling pairwise similarities using conditional probabilities in high- and low-dimensional spaces, optimizing a Kullback-Leibler divergence objective to preserve local neighborhood structures. While PCA relies on spectral decomposition for dimensionality reduction, t-SNE uses gradient descent to minimize divergence, offering non-linear embeddings suited for complex manifold structures.

Key Differences between PCA and t-SNE

PCA (Principal Component Analysis) is a linear dimensionality reduction technique that projects data onto orthogonal components to maximize variance, making it suitable for preserving global structure in high-dimensional datasets. In contrast, t-SNE (t-distributed Stochastic Neighbor Embedding) is a non-linear technique designed to capture local relationships and cluster formations by modeling pairwise similarities in lower-dimensional space. PCA is computationally faster and easier to interpret, whereas t-SNE provides better visualization for complex manifolds but is computationally intensive and sensitive to hyperparameters such as perplexity and learning rate.

Use Cases and Applications

PCA excels in dimensionality reduction for linear data structures, commonly applied in preprocessing tasks like feature extraction, data visualization, and noise reduction in high-dimensional datasets. t-SNE is preferred for nonlinear dimensionality reduction, effectively capturing complex patterns and local structures, making it ideal for visualizing high-dimensional data such as images, genomics, and speech embeddings. Use cases for PCA include finance and genomics, whereas t-SNE is widely used in exploratory data analysis, clustering, and deep learning model interpretation.

Visualization Capabilities: PCA vs t-SNE

PCA excels in preserving global data structure by projecting high-dimensional data onto principal components, making it ideal for identifying large-scale patterns. t-SNE specializes in capturing local similarities, providing superior visualization of clusters and subgroups by emphasizing local neighborhood relationships. While PCA offers faster computation and linear transformation, t-SNE's nonlinear approach reveals intricate data manifold details critical for nuanced cluster exploration.

Performance and Scalability Comparison

PCA performs well with large-scale datasets due to its linear nature and computational efficiency, making it suitable for dimensionality reduction in high-dimensional data with minimal processing time. In contrast, t-SNE, a nonlinear technique, offers superior visualization of complex data structures but suffers from higher computational costs and limited scalability, often becoming impractical for datasets exceeding tens of thousands of samples. For performance-critical applications with massive data, PCA is preferred, while t-SNE is ideal for smaller datasets where detailed cluster separation and structure preservation are prioritized.

Limitations and Challenges

PCA struggles with non-linear data structures as it relies on linear transformations, limiting its effectiveness in capturing complex patterns in high-dimensional datasets. t-SNE, while excellent for visualizing local data neighborhoods, often suffers from high computational costs and difficulty preserving global data structure, which complicates interpretation. Both techniques require careful parameter tuning and can produce misleading results if used without understanding their inherent limitations.

Choosing Between PCA and t-SNE

Choosing between PCA and t-SNE depends on the specific machine learning task and dataset characteristics. PCA is preferred for linear dimensionality reduction, preserving global variance and requiring less computational power, making it suitable for large-scale datasets. t-SNE excels at capturing complex, non-linear structures and local relationships in high-dimensional data but is computationally intensive and better suited for smaller datasets or visualization purposes.

PCA vs t-SNE Infographic

techiny.com

techiny.com