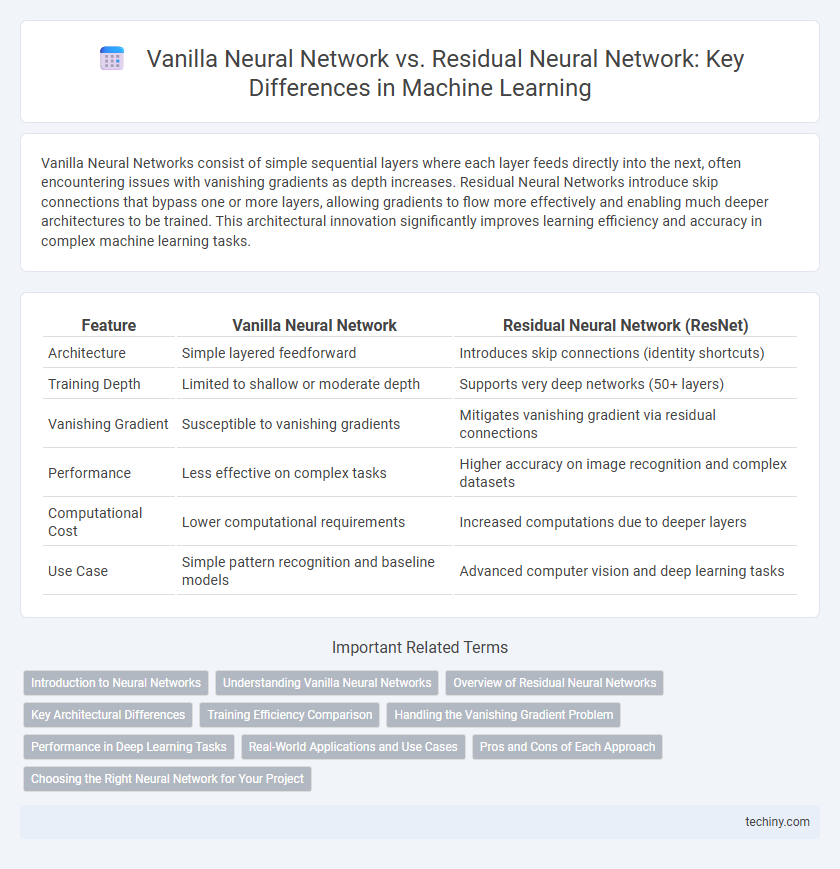

Vanilla Neural Networks consist of simple sequential layers where each layer feeds directly into the next, often encountering issues with vanishing gradients as depth increases. Residual Neural Networks introduce skip connections that bypass one or more layers, allowing gradients to flow more effectively and enabling much deeper architectures to be trained. This architectural innovation significantly improves learning efficiency and accuracy in complex machine learning tasks.

Table of Comparison

| Feature | Vanilla Neural Network | Residual Neural Network (ResNet) |

|---|---|---|

| Architecture | Simple layered feedforward | Introduces skip connections (identity shortcuts) |

| Training Depth | Limited to shallow or moderate depth | Supports very deep networks (50+ layers) |

| Vanishing Gradient | Susceptible to vanishing gradients | Mitigates vanishing gradient via residual connections |

| Performance | Less effective on complex tasks | Higher accuracy on image recognition and complex datasets |

| Computational Cost | Lower computational requirements | Increased computations due to deeper layers |

| Use Case | Simple pattern recognition and baseline models | Advanced computer vision and deep learning tasks |

Introduction to Neural Networks

Vanilla Neural Networks consist of straightforward feedforward architectures where each layer is fully connected to the next without any shortcuts, making them effective for simple pattern recognition tasks but prone to vanishing gradient problems in deeper models. Residual Neural Networks (ResNets) introduce skip connections that allow gradients to flow directly across layers, significantly improving training efficiency and enabling the construction of much deeper neural networks. These architectural innovations directly address the limitations of traditional Vanilla Neural Networks, enhancing model accuracy and convergence speed in complex machine learning tasks.

Understanding Vanilla Neural Networks

Vanilla Neural Networks consist of multiple layers of interconnected neurons where each layer transforms its input through weighted connections and activation functions. These networks suffer from the vanishing gradient problem, limiting depth and making it challenging to learn complex representations in deep architectures. Understanding the architecture and limitations of Vanilla Neural Networks is essential before exploring advanced models like Residual Neural Networks, which address these challenges by introducing skip connections.

Overview of Residual Neural Networks

Residual Neural Networks (ResNets) introduce shortcut connections that bypass one or more layers, effectively addressing the vanishing gradient problem common in traditional vanilla neural networks. By enabling the network to learn residual functions rather than direct mappings, ResNets facilitate the training of much deeper architectures with improved accuracy and convergence. This innovation allows for enhanced feature extraction and better performance in complex machine learning tasks such as image recognition and natural language processing.

Key Architectural Differences

Vanilla Neural Networks consist of sequential layers where each layer feeds directly into the next without bypassing any information, which can lead to vanishing gradients and hinder training in very deep networks. Residual Neural Networks (ResNets) introduce skip connections that allow the input of one layer to bypass intermediate layers and be added to later layers, facilitating gradient flow and enabling much deeper architectures. This key architectural difference in ResNets addresses degradation problems by preserving identity mappings and improving convergence rates during training.

Training Efficiency Comparison

Vanilla Neural Networks often face challenges like vanishing gradients, leading to slower convergence and less efficient training, especially in deep architectures. Residual Neural Networks (ResNets) use skip connections to alleviate these issues, enabling faster training and improved gradient flow. Empirical studies show ResNets achieve higher accuracy with fewer epochs, demonstrating superior training efficiency over vanilla architectures.

Handling the Vanishing Gradient Problem

Vanilla Neural Networks often suffer from the vanishing gradient problem, where gradients become extremely small during backpropagation, hindering effective weight updates in deep layers. Residual Neural Networks (ResNets) address this by incorporating skip connections that allow gradients to flow directly through the network, maintaining gradient strength and enabling the training of much deeper architectures. This design significantly improves convergence rates and performance in complex machine learning tasks like image recognition and natural language processing.

Performance in Deep Learning Tasks

Vanilla Neural Networks often face degradation in performance as depth increases due to vanishing gradients, limiting their effectiveness in complex deep learning tasks. Residual Neural Networks (ResNets) introduce identity shortcut connections that enable training of substantially deeper models by mitigating gradient vanishing and improving convergence. Empirical results on benchmarks like ImageNet demonstrate ResNets consistently outperform Vanilla Neural Networks in accuracy and training stability across various deep learning applications.

Real-World Applications and Use Cases

Vanilla Neural Networks excel in straightforward tasks like image classification and basic pattern recognition where model simplicity and interpretability are crucial. Residual Neural Networks (ResNets) outperform vanilla models in complex real-world applications such as deep image recognition, medical diagnosis, and autonomous driving by enabling much deeper architectures without gradient vanishing problems. ResNets' skip connections facilitate learning intricate features, making them ideal for large-scale datasets and tasks requiring high accuracy.

Pros and Cons of Each Approach

Vanilla neural networks offer simplicity and ease of implementation, making them suitable for straightforward tasks but often suffer from vanishing gradient problems in deep architectures. Residual neural networks (ResNets) introduce skip connections that alleviate vanishing gradients, enabling the training of much deeper models with improved accuracy and convergence speed. However, ResNets are more complex and computationally intensive, which can increase training time and resource requirements compared to vanilla models.

Choosing the Right Neural Network for Your Project

Vanilla Neural Networks excel in straightforward tasks with limited data, offering simplicity and faster training, while Residual Neural Networks (ResNets) address the vanishing gradient problem, enabling deeper architectures for complex image recognition or natural language processing projects. Project requirements such as dataset size, computational resources, and the need for model depth should guide the choice between these architectures. For high-performance scenarios involving deep layers, ResNets often provide superior accuracy and stability compared to standard Vanilla Neural Networks.

Vanilla Neural Network vs Residual Neural Network Infographic

techiny.com

techiny.com