Grid Search evaluates every combination in a predefined hyperparameter grid, which guarantees full coverage of that grid but becomes expensive as parameters are added. Random Search draws a fixed number of combinations at random, which is usually more efficient in high-dimensional spaces and often reaches a near-optimal configuration with fewer evaluations. The two methods trade coverage against computational cost differently, so the right choice depends on the size of the search space and the available compute budget.

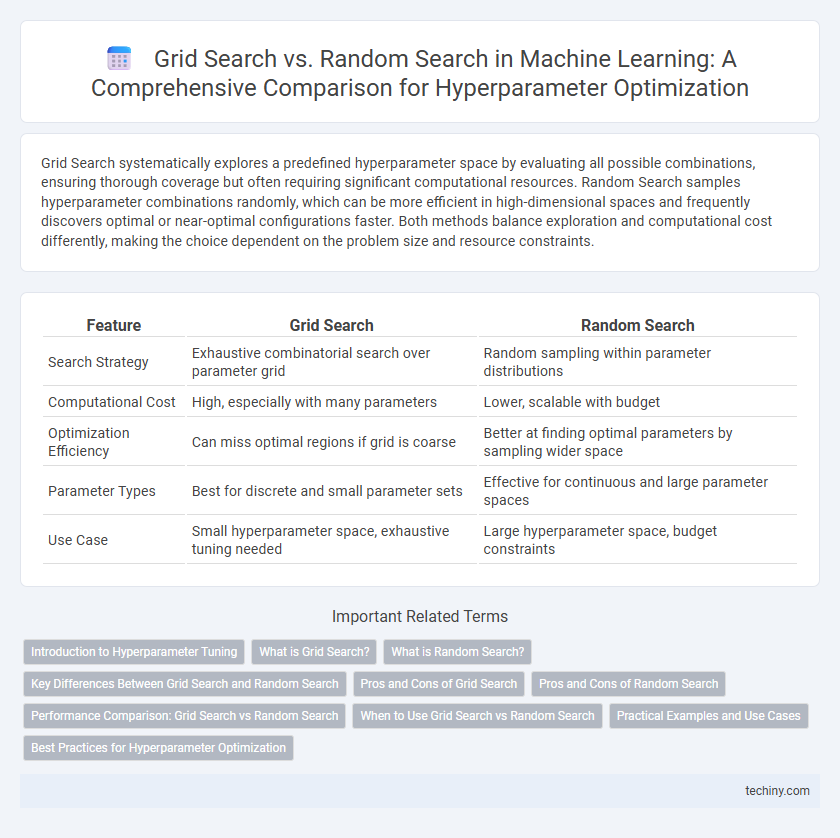

Table of Comparison

| Feature | Grid Search | Random Search |
|---|---|---|
| Search Strategy | Exhaustive combinatorial search over parameter grid | Random sampling within parameter distributions |
| Computational Cost | High, especially with many parameters | Lower, scalable with budget |
| Optimization Efficiency | Can miss optimal regions if grid is coarse | Better at finding optimal parameters by sampling wider space |
| Parameter Types | Best for discrete and small parameter sets | Effective for continuous and large parameter spaces |
| Use Case | Small hyperparameter space, exhaustive tuning needed | Large hyperparameter space, budget constraints |

Introduction to Hyperparameter Tuning

Hyperparameter tuning is essential in machine learning to optimize model performance by selecting the best combination of hyperparameters. Grid Search exhaustively evaluates all possible parameter combinations within a predefined set, ensuring comprehensive coverage but often requiring significant computational resources. Random Search samples a fixed number of parameter settings from specified distributions, offering a more efficient approach for high-dimensional spaces and complex models while still identifying near-optimal solutions.

What is Grid Search?

Grid Search is a systematic hyperparameter optimization technique that evaluates every combination of a predefined set of parameter values. Because the search is exhaustive, it is guaranteed to find the best configuration on the grid, although a better configuration may lie between grid points if the grid is coarse. Grid Search is computationally intensive, so it works best for tuning algorithms such as support vector machines, decision trees, and neural networks when the parameter grid is relatively small.
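The exhaustive loop can be sketched in a few lines of standard-library Python. This is a minimal illustration, not a production implementation: the `validation_score` function is a made-up stand-in for training a model and returning its cross-validated score, and the parameter names `C` and `gamma` are assumed here only because they are common support vector machine hyperparameters.

```python
import itertools


def validation_score(C, gamma):
    """Hypothetical stand-in for a cross-validated score; peaks at C=10, gamma=0.1."""
    return -((C - 10) ** 2) / 100 - (gamma - 0.1) ** 2 * 100


# Every listed value of every parameter will be combined with every other.
param_grid = {
    "C": [0.1, 1, 10, 100],
    "gamma": [0.01, 0.1, 1],
}


def grid_search(param_grid, score_fn):
    """Evaluate score_fn on the full Cartesian product of the grid values."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    n_evaluated = 0
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        n_evaluated += 1
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, n_evaluated


best_params, best_score, n_evaluated = grid_search(param_grid, validation_score)
print(best_params, n_evaluated)
```

With four values of `C` and three of `gamma`, the loop runs 4 x 3 = 12 evaluations. In a real project each evaluation is a full training run (or several, under cross-validation), which is where the cost comes from.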

What is Random Search?

Random Search is a hyperparameter optimization technique that samples parameter combinations randomly from a defined search space, enabling efficient exploration without exhaustive evaluation. Unlike Grid Search, which systematically evaluates all parameter combinations, Random Search can discover high-performing configurations with fewer iterations, especially in high-dimensional spaces. This method leverages stochastic sampling to optimize machine learning model performance, making it suitable for complex models with many hyperparameters.
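As a rough sketch of the idea, the snippet below draws a fixed number of configurations from per-parameter distributions and keeps the best one. The `validation_score` function is again a hypothetical stand-in for a real training run, and the ranges for `learning_rate` and `dropout` are illustrative assumptions rather than recommended values.

```python
import math
import random


def validation_score(learning_rate, dropout):
    """Hypothetical stand-in for a real score; peaks near learning_rate=1e-3, dropout=0.3."""
    return -(math.log10(learning_rate) + 3) ** 2 - (dropout - 0.3) ** 2


def sample_params(rng):
    """Draw one configuration from the (assumed) search distributions."""
    return {
        # Log-uniform on [1e-5, 1e-1]: each order of magnitude is equally likely.
        "learning_rate": 10 ** rng.uniform(-5, -1),
        # Plain uniform on [0.0, 0.5].
        "dropout": rng.uniform(0.0, 0.5),
    }


def random_search(score_fn, n_iter, seed=0):
    """Evaluate n_iter random configurations and return the best one found."""
    rng = random.Random(seed)  # a fixed seed makes the run repeatable
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        params = sample_params(rng)
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


best_params, best_score = random_search(validation_score, n_iter=20, seed=0)
print(best_params, best_score)
```

The budget is set directly through `n_iter`, independent of how many parameters there are or how wide their ranges are. Sampling the learning rate on a log scale is a common choice when plausible values span several orders of magnitude.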

Key Differences Between Grid Search and Random Search

Grid Search evaluates every hyperparameter combination in a specified grid, so coverage of that grid is exhaustive but the number of evaluations grows multiplicatively with each added parameter. Random Search evaluates a fixed number of randomly drawn combinations, so its cost is set by the budget rather than by the size of the space, and it typically finds good configurations with fewer evaluations in high-dimensional or large search spaces. Its advantage is greatest when only a few parameters actually influence model performance: a grid spends most of its budget repeating the same few values of the important parameters, while every random sample tries a new value of each one.
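The point about a few influential parameters can be made concrete by counting how many distinct values of a single parameter each method tries under the same budget. The sketch below assumes two parameters scaled to the range 0 to 1 and a budget of nine evaluations; both choices are arbitrary and only for illustration.

```python
import itertools
import random

budget = 9

# Grid: 3 values per axis, 3 x 3 = 9 points in total.
grid_axis = [0.0, 0.5, 1.0]
grid_points = list(itertools.product(grid_axis, grid_axis))

# Random: 9 points drawn uniformly from the unit square.
rng = random.Random(0)
random_points = [(rng.random(), rng.random()) for _ in range(budget)]


def distinct_values(points, dim):
    """Count how many different values of one parameter were actually tried."""
    return len({point[dim] for point in points})


print("grid:  ", distinct_values(grid_points, 0), "distinct values of parameter 0")
print("random:", distinct_values(random_points, 0), "distinct values of parameter 0")
```

If only parameter 0 matters, the grid has effectively tested three settings, each repeated three times, while the random sample has tested nine different settings for the same cost.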

Pros and Cons of Grid Search

Grid Search evaluates every hyperparameter combination on the grid, so it is guaranteed to find the best configuration among those listed, but it often incurs high computational cost and long tuning times. It is deterministic, which makes results reproducible and easy to audit, yet it is inefficient in high-dimensional spaces because much of the budget is spent varying parameters that have little effect on performance. Grid Search is a good fit for small, well-defined parameter grids and scales poorly to complex machine learning tasks with many hyperparameters.
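The scaling problem is simple arithmetic: the grid size is the product of the number of values per parameter, and cross-validation multiplies that again by the number of folds. The helper names below are hypothetical, and five values per parameter with five folds is just an assumed example.

```python
import math


def grid_size(values_per_param):
    """Number of combinations in a grid, given the value count of each parameter."""
    return math.prod(values_per_param)


def total_fits(values_per_param, cv_folds):
    """Number of model fits when every combination is scored with k-fold cross-validation."""
    return grid_size(values_per_param) * cv_folds


for n_params in (2, 4, 6):
    counts = [5] * n_params  # assume 5 candidate values per parameter
    print(n_params, grid_size(counts), total_fits(counts, cv_folds=5))
# 2 25 125
# 4 625 3125
# 6 15625 78125
```

Going from two to six parameters raises the number of fits from 125 to 78,125, even though each individual parameter is searched no more finely than before.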

Pros and Cons of Random Search

Random Search explores a wide range of values efficiently, which makes it particularly effective when only a few hyperparameters significantly affect model performance. By sampling combinations at random instead of enumerating them, it avoids the cost of an exhaustive search and often reaches optimal or near-optimal parameters with fewer evaluations. However, because it is stochastic, results vary from run to run unless the random seed is fixed, and it can miss the best parameters if too few samples are drawn to cover the critical regions of the hyperparameter space.
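The risk of missing the good region can be estimated with a short calculation. Assuming the samples are independent and uniform over the search space, and that the "good" region occupies a known fraction of that space, the chance that at least one of n samples lands in it is 1 - (1 - fraction)^n. The function names below are illustrative.

```python
import math


def hit_probability(top_fraction, n_samples):
    """Chance that at least one of n independent uniform samples lands in the top region."""
    return 1.0 - (1.0 - top_fraction) ** n_samples


def samples_needed(top_fraction, confidence):
    """Smallest sample count whose hit probability reaches the requested confidence."""
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - top_fraction))


print(round(hit_probability(0.05, 60), 3))
print(samples_needed(0.05, 0.95))
```

Under these assumptions, roughly 60 random samples give about a 95% chance of landing at least once in the best 5% of the space, regardless of how many dimensions the space has. The guarantee weakens if the good region is much smaller than 5% or if the sampling distribution puts little mass on it.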

Performance Comparison: Grid Search vs Random Search

Grid Search evaluates every hyperparameter combination on the grid, which guarantees the best result among the listed values (though not necessarily the best possible result) at the cost of extensive computation. Random Search evaluates a fixed number of random configurations and often achieves comparable or better results in significantly less time. Empirical studies, notably Bergstra and Bengio (2012), report that Random Search is more efficient in high-dimensional hyperparameter spaces, which makes it well suited to large-scale machine learning tasks where tuning time is the limiting factor.

When to Use Grid Search vs Random Search

Grid Search is optimal when the hyperparameter space is small and computational resources are sufficient to exhaustively evaluate all combinations, ensuring thorough model tuning. Random Search excels in high-dimensional or large hyperparameter spaces, providing efficient coverage by sampling random combinations and often finding near-optimal solutions faster. Choose Grid Search for precise optimization of critical parameters and Random Search for faster exploration in complex or computationally constrained scenarios.

Practical Examples and Use Cases

Grid Search evaluates a predefined set of hyperparameter combinations, which makes it a good fit for small, well-defined search spaces such as tuning the number of trees and the maximum depth of a Random Forest model for credit scoring. Random Search samples configurations at random and is more efficient in high-dimensional spaces where interactions between hyperparameters are complex and unknown, for example tuning learning rates and dropout rates in convolutional neural networks for image classification. In practice, Grid Search works well when there are few parameters and enough compute to cover the whole grid, while Random Search suits projects that need quick exploration across wide, uncertain parameter ranges.

Best Practices for Hyperparameter Optimization

Grid Search explores every combination of hyperparameters, which gives thorough coverage but becomes computationally expensive in high-dimensional spaces. Random Search samples combinations at random and often finds good results with fewer evaluations in large search spaces. Both methods benefit from cross-validation, which gives a more reliable score for each configuration, and from adaptive resource allocation techniques such as early stopping and successive halving, which cut off unpromising configurations before they consume a full training budget.
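Successive halving can be sketched as follows: start many configurations on a small budget, keep the best fraction, and give the survivors a larger budget. This is a simplified illustration, not a reference implementation. The `evaluate` function is a toy stand-in for "train this configuration for `budget` epochs and return its validation score", and the `log_lr` parameter, its range, and the halving factor `eta=2` are assumptions made for the example.

```python
import random


def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Keep the top 1/eta of configs each round while multiplying the budget by eta."""
    survivors = list(configs)
    budget = min_budget
    history = []  # (budget, number of configs evaluated) per round
    while len(survivors) > 1:
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget), reverse=True)
        history.append((budget, len(survivors)))
        keep = max(1, len(survivors) // eta)
        survivors = ranked[:keep]
        budget *= eta
    return survivors[0], history


def evaluate(config, budget):
    """Toy score: improves with budget and is best for log_lr near -3 (assumed optimum)."""
    quality = -abs(config["log_lr"] + 3)
    return quality * (1 + 1 / budget)


# Candidate configurations drawn at random, as in Random Search.
rng = random.Random(0)
configs = [{"log_lr": rng.uniform(-5, -1)} for _ in range(8)]

best, history = successive_halving(configs, evaluate)
print(best, history)
```

With eight starting configurations, the rounds evaluate 8, 4, and 2 candidates at budgets 1, 2, and 4, so most of the compute goes to the configurations that looked promising early. The candidates can come from either a grid or random sampling; the method assumes that early scores are a reasonable predictor of final scores, which does not hold for every model.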

Grid Search vs Random Search Infographic

techiny.com
