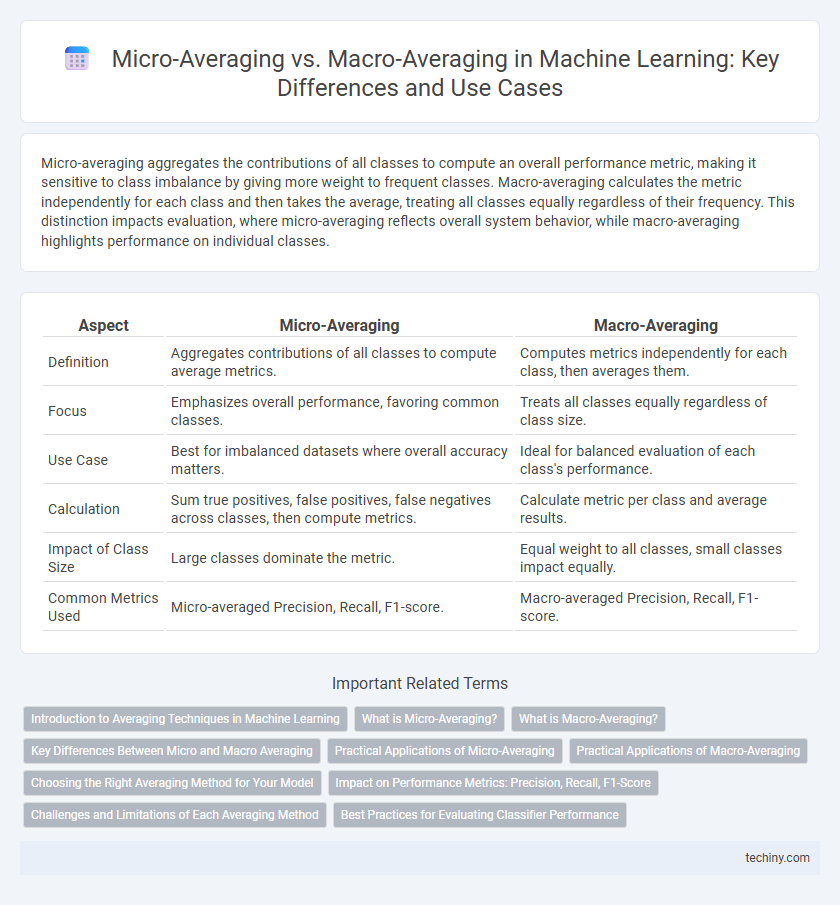

Micro-averaging aggregates the contributions of all classes to compute an overall performance metric, making it sensitive to class imbalance by giving more weight to frequent classes. Macro-averaging calculates the metric independently for each class and then takes the average, treating all classes equally regardless of their frequency. This distinction impacts evaluation, where micro-averaging reflects overall system behavior, while macro-averaging highlights performance on individual classes.

Table of Comparison

| Aspect | Micro-Averaging | Macro-Averaging |
|---|---|---|
| Definition | Pools the contributions of all classes, then computes the metric once. | Computes the metric independently for each class, then averages the results. |
| Focus | Emphasizes overall per-instance performance, favoring common classes. | Treats all classes equally regardless of class size. |
| Use Case | Best when every instance counts equally and overall performance matters; on imbalanced data the score mostly reflects the frequent classes. | Best when each class matters equally, including rare ones. |
| Calculation | Sum true positives, false positives, and false negatives across classes, then compute the metric. | Calculate the metric per class, then take the unweighted mean. |
| Impact of Class Size | Large classes dominate the metric. | Every class has equal weight, so small classes count as much as large ones. |
| Common Metrics Used | Micro-averaged Precision, Recall, F1-score. | Macro-averaged Precision, Recall, F1-score. |

Introduction to Averaging Techniques in Machine Learning

Micro-averaging calculates metrics globally by pooling the contributions of all classes before computing the metric, so every instance carries equal weight and frequent classes dominate the result. Macro-averaging computes the metric independently for each class and then takes the unweighted average, treating all classes equally regardless of their size. Choosing between micro and macro averaging affects the evaluation of classification models, particularly in multi-class and multi-label machine learning scenarios.

What is Micro-Averaging?

Micro-averaging aggregates the contributions of all classes to compute the average metric, treating every instance equally regardless of its class. It calculates metrics such as precision, recall, or F1-score by summing true positives, false positives, and false negatives globally before applying the formula. This technique is useful in multi-class classification when overall per-instance performance is the goal; where class imbalance exists, the result is driven mainly by the frequent classes.
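The pooling step can be sketched in a few lines of plain Python. This is a minimal illustration for single-label predictions, not a reference implementation: the function name `micro_scores`, the toy labels, and the convention of returning 0.0 when a denominator is zero are all assumptions made for the example.

```python
from collections import Counter

def micro_scores(y_true, y_pred):
    """Micro-averaged precision, recall, and F1 for single-label predictions."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # the predicted class gains a false positive
            fn[t] += 1  # the true class gains a false negative
    # Pool the counts over all classes BEFORE applying the formulas.
    total_tp = sum(tp.values())
    total_fp = sum(fp.values())
    total_fn = sum(fn.values())
    # Assumed convention: an undefined ratio is reported as 0.0.
    precision = total_tp / (total_tp + total_fp) if total_tp + total_fp else 0.0
    recall = total_tp / (total_tp + total_fn) if total_tp + total_fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

# Hypothetical toy data: class "a" is the frequent class.
y_true = ["a", "a", "a", "a", "b", "c"]
y_pred = ["a", "a", "a", "b", "b", "a"]
print(micro_scores(y_true, y_pred))
```

Here the pooled counts are 4 true positives, 2 false positives, and 2 false negatives, so all three scores come out to 4/6.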

What is Macro-Averaging?

Macro-averaging in machine learning evaluates model performance by calculating metrics independently for each class and then averaging these results, treating all classes equally regardless of their size. This method is particularly useful in imbalanced datasets, ensuring that minority classes contribute equally to the overall evaluation. Macro-averaging provides a balanced view of model effectiveness across all categories without bias toward more frequent classes.
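A minimal sketch of the per-class-then-average procedure follows. The helper names `per_class_prf` and `macro_scores` and the toy labels are hypothetical. Two conventions are assumed here: an undefined ratio counts as 0.0, and macro F1 is the mean of the per-class F1 scores (some sources instead compute F1 from the macro precision and macro recall).

```python
def per_class_prf(y_true, y_pred, label):
    """Precision, recall, and F1 for one class, treated as one-vs-rest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

def macro_scores(y_true, y_pred):
    """Unweighted mean of the per-class precision, recall, and F1."""
    labels = sorted(set(y_true) | set(y_pred))
    rows = [per_class_prf(y_true, y_pred, c) for c in labels]
    return tuple(sum(row[i] for row in rows) / len(rows) for i in range(3))

# Hypothetical toy data: class "c" has one sample and is never predicted.
y_true = ["a", "a", "a", "a", "b", "c"]
y_pred = ["a", "a", "a", "b", "b", "a"]
print(macro_scores(y_true, y_pred))
```

Class "c" contributes a score of zero to each average with the same weight as class "a", even though it has a single sample.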

Key Differences Between Micro and Macro Averaging

Micro-averaging aggregates the contributions of all classes to compute the average metric, making it sensitive to class imbalance by giving more weight to larger classes. Macro-averaging calculates the metric independently for each class and then averages these values, treating all classes equally regardless of their size. The key difference lies in micro-averaging's emphasis on global performance across instances, while macro-averaging focuses on per-class performance, highlighting the impact of minority classes.
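The gap between the two averages is easiest to see on a deliberately extreme case. The sketch below assumes a hypothetical dataset of 95 "neg" and 5 "pos" samples and a classifier that always predicts "neg"; the function names are illustrative, and F1 is computed with the equivalent form 2TP / (2TP + FP + FN).

```python
def f1_from_counts(tp, fp, fn):
    """F1 written directly in terms of counts; 0.0 if undefined (assumed)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def micro_macro_f1(y_true, y_pred):
    """Return (micro F1, macro F1) for single-label predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    counts = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        counts.append((tp, fp, fn))
    # Micro: sum each count over classes, then apply the formula once.
    micro = f1_from_counts(*(sum(column) for column in zip(*counts)))
    # Macro: apply the formula per class, then take the unweighted mean.
    macro = sum(f1_from_counts(*row) for row in counts) / len(counts)
    return micro, macro

# Hypothetical imbalanced data and a majority-class-only classifier.
y_true = ["neg"] * 95 + ["pos"] * 5
y_pred = ["neg"] * 100
micro, macro = micro_macro_f1(y_true, y_pred)
print(f"micro F1 = {micro:.3f}, macro F1 = {macro:.3f}")
```

Micro F1 is 0.95 because 95 of 100 instances are handled correctly, while macro F1 falls to about 0.487 because the "pos" class scores zero and counts for half of the average.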

Practical Applications of Micro-Averaging

Micro-averaging aggregates true positives, false positives, and false negatives across all classes to compute overall precision, recall, and F1-score, making it a good fit when the goal is to measure how well a multi-class or multi-label model handles instances overall. Because each instance is weighted equally, the result tracks the class distribution of the data, which suits natural language processing tasks such as multi-class sentiment analysis or topic tagging when frequent classes should count for more. Micro-averaged metrics let practitioners optimize models for global performance rather than per-class performance, with the caveat that weak results on rare classes can go unnoticed.

Practical Applications of Macro-Averaging

Macro-averaging calculates performance metrics by averaging scores across all classes, treating each class equally regardless of size, making it well suited to the imbalanced datasets common in fraud detection and medical diagnostics. This method gives minority classes the same weight as majority classes, so poor performance on a rare class shows up clearly in the final score. Practical applications include multi-class classification tasks where balanced assessment across all categories is critical for model reliability.

Choosing the Right Averaging Method for Your Model

Micro-averaging aggregates contributions of all classes to compute an overall performance metric, making it a reasonable choice when class frequency should be reflected in the score. Macro-averaging calculates metrics independently for each class and then averages them, providing equal weight to all classes regardless of their size. Selecting between micro and macro-averaging depends on whether the evaluation prioritizes overall accuracy or balanced class performance in a multi-class or multi-label machine learning model.
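One detail worth checking when "overall accuracy" is the priority: in a single-label multi-class problem every misclassification adds exactly one false positive and one false negative, so micro precision, micro recall, and accuracy coincide. In a multi-label problem they can differ. The sketch below illustrates both cases with label sets; `micro_pr` and the toy data are assumptions made for the example.

```python
def micro_pr(true_sets, pred_sets):
    """Micro precision and recall when each sample carries a set of labels."""
    tp = sum(len(t & p) for t, p in zip(true_sets, pred_sets))
    fp = sum(len(p - t) for t, p in zip(true_sets, pred_sets))
    fn = sum(len(t - p) for t, p in zip(true_sets, pred_sets))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Single-label case: exactly one true and one predicted label per sample.
single_true = [{"a"}, {"a"}, {"b"}, {"c"}]
single_pred = [{"a"}, {"b"}, {"b"}, {"a"}]
accuracy = sum(t == p for t, p in zip(single_true, single_pred)) / len(single_true)
print(micro_pr(single_true, single_pred), accuracy)

# Multi-label case: samples may have several labels, so FP and FN totals differ.
multi_true = [{"a", "b"}, {"b"}, {"a", "c"}]
multi_pred = [{"a"}, {"b", "c"}, {"a"}]
print(micro_pr(multi_true, multi_pred))
```

In the single-label case all three numbers equal 0.5; in the multi-label case micro precision is 0.75 and micro recall is 0.6.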

Impact on Performance Metrics: Precision, Recall, F1-Score

Micro-averaging aggregates contributions of all classes to compute overall precision, recall, and F1-score, giving more weight to classes with a larger number of instances and thus largely reflecting the model's performance on the majority classes. Macro-averaging calculates precision, recall, and F1-score independently for each class and then averages them, treating all classes equally regardless of prevalence, which helps highlight performance on minority classes. The choice between micro-averaging and macro-averaging significantly affects how performance metrics should be interpreted, especially on the imbalanced datasets common in machine learning.
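Written out, the two schemes differ only in where the sum over classes is taken. The notation below is an assumption introduced for this summary: $C$ is the number of classes, and $TP_c$, $FP_c$, $FN_c$ are the true positives, false positives, and false negatives of class $c$. Macro F1 is shown as the mean of per-class F1 scores, which is one common convention.

```latex
\begin{align*}
P_{\text{micro}} &= \frac{\sum_{c=1}^{C} TP_c}{\sum_{c=1}^{C} (TP_c + FP_c)}, &
R_{\text{micro}} &= \frac{\sum_{c=1}^{C} TP_c}{\sum_{c=1}^{C} (TP_c + FN_c)}, \\
P_{\text{macro}} &= \frac{1}{C} \sum_{c=1}^{C} \frac{TP_c}{TP_c + FP_c}, &
R_{\text{macro}} &= \frac{1}{C} \sum_{c=1}^{C} \frac{TP_c}{TP_c + FN_c}, \\
F1_{\text{micro}} &= \frac{2\, P_{\text{micro}}\, R_{\text{micro}}}{P_{\text{micro}} + R_{\text{micro}}}, &
F1_{\text{macro}} &= \frac{1}{C} \sum_{c=1}^{C} \frac{2\, TP_c}{2\, TP_c + FP_c + FN_c}.
\end{align*}
```

In the micro formulas a class with many instances contributes many terms to each sum, while in the macro formulas every class contributes exactly one term with weight $1/C$.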

Challenges and Limitations of Each Averaging Method

Micro-averaging in machine learning tends to bias results toward classes with more samples, often overshadowing the performance on minority classes and producing overall metrics that look better than the per-class picture warrants. Macro-averaging treats all classes equally but can disproportionately amplify the impact of poorly performing rare classes, whose scores rest on only a handful of samples and may not reflect the model's general effectiveness. Neither single number captures both overall per-instance performance and per-class performance, so each involves a trade-off on imbalanced datasets.
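The sensitivity of macro-averaging to a rare class can be illustrated with recall alone. In this sketch the dataset (98 "common" samples, 2 "rare" samples), the function name `micro_macro_recall`, and both prediction lists are hypothetical; the only difference between the two runs is a single prediction on a rare sample.

```python
def micro_macro_recall(y_true, y_pred):
    """Return (micro recall, macro recall) for single-label predictions."""
    labels = sorted(set(y_true))
    per_class = []
    for c in labels:
        support = sum(t == c for t in y_true)  # always >= 1 for these labels
        hits = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        per_class.append(hits / support)
    # Single-label micro recall: correct predictions over all samples.
    micro = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    macro = sum(per_class) / len(per_class)
    return micro, macro

y_true = ["common"] * 98 + ["rare"] * 2
misses_both = ["common"] * 100               # both rare samples missed
finds_one = ["common"] * 99 + ["rare"]       # one rare sample recovered

print(micro_macro_recall(y_true, misses_both))
print(micro_macro_recall(y_true, finds_one))
```

Recovering one rare sample moves micro recall from 0.98 to 0.99 but moves macro recall from 0.5 to 0.75, which shows both how micro hides the rare class and how macro can swing on very few samples.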

Best Practices for Evaluating Classifier Performance

Micro-averaging aggregates contributions of all classes to compute average metrics, summarizing overall per-instance performance; on datasets with class imbalance it mostly reflects the frequent classes. Macro-averaging calculates metrics independently for each class and then averages them, providing equal weight to all classes and highlighting performance on minority classes. Best practices recommend reporting both, ideally alongside the per-class scores, to gain a comprehensive understanding of classifier performance across diverse class distributions.
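One way to follow this practice is to compute the per-class scores once and derive both averages from them. The sketch below is an illustrative helper, not a standard API: `evaluation_report`, its return structure, and the toy animal labels are assumptions, and it expects non-empty input.

```python
def evaluation_report(y_true, y_pred):
    """Per-class F1 and support, plus micro and macro F1 (non-empty input)."""
    labels = sorted(set(y_true) | set(y_pred))
    per_class = {}
    total_tp = total_fp = total_fn = 0
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        per_class[c] = {
            "f1": 2 * tp / denom if denom else 0.0,
            "support": tp + fn,  # number of true samples of this class
        }
        total_tp += tp
        total_fp += fp
        total_fn += fn
    micro_denom = 2 * total_tp + total_fp + total_fn
    summary = {
        "micro_f1": 2 * total_tp / micro_denom if micro_denom else 0.0,
        "macro_f1": sum(row["f1"] for row in per_class.values()) / len(per_class),
    }
    return per_class, summary

# Hypothetical data: 6 cats, 3 dogs, 1 bird.
y_true = ["cat"] * 6 + ["dog"] * 3 + ["bird"]
y_pred = ["cat"] * 5 + ["dog"] + ["dog"] * 2 + ["cat"] + ["cat"]
per_class, summary = evaluation_report(y_true, y_pred)
for label, row in per_class.items():
    print(f"{label:>5}: f1={row['f1']:.3f} support={row['support']}")
print(summary)
```

Reading the per-class rows next to the two averages shows where a gap between micro and macro F1 comes from; here it is the single "bird" sample that is never predicted. Libraries such as scikit-learn provide a comparable summary through `classification_report`.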

micro-averaging vs macro-averaging Infographic

techiny.com
