Cross Entropy Loss is ideal for classification tasks as it measures the difference between two probability distributions, making it effective in evaluating model predictions against true categorical labels. Mean Squared Error is better suited for regression problems because it quantifies the average squared difference between predicted and actual continuous values, providing a clear metric for prediction accuracy. Choosing between Cross Entropy Loss and MSE depends on the nature of the problem: classification requires Cross Entropy for probability outputs, while regression benefits from MSE's sensitivity to numerical deviations.

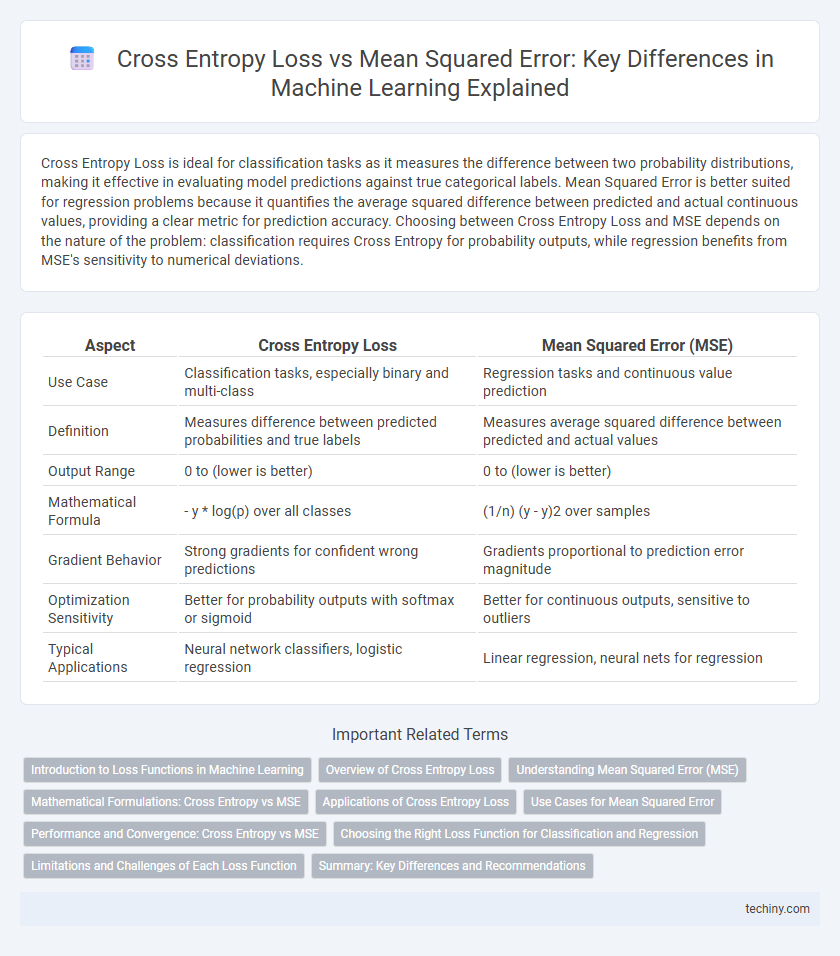

Table of Comparison

| Aspect | Cross Entropy Loss | Mean Squared Error (MSE) |

|---|---|---|

| Use Case | Classification tasks, especially binary and multi-class | Regression tasks and continuous value prediction |

| Definition | Measures difference between predicted probabilities and true labels | Measures average squared difference between predicted and actual values |

| Output Range | 0 to (lower is better) | 0 to (lower is better) |

| Mathematical Formula | - y * log(p) over all classes | (1/n) (y - y)2 over samples |

| Gradient Behavior | Strong gradients for confident wrong predictions | Gradients proportional to prediction error magnitude |

| Optimization Sensitivity | Better for probability outputs with softmax or sigmoid | Better for continuous outputs, sensitive to outliers |

| Typical Applications | Neural network classifiers, logistic regression | Linear regression, neural nets for regression |

Introduction to Loss Functions in Machine Learning

Loss functions quantify the error between predicted outputs and actual targets, guiding model optimization in machine learning. Cross entropy loss measures the dissimilarity between two probability distributions, making it ideal for classification tasks by penalizing incorrect predictions more heavily. Mean squared error calculates the average squared difference between predicted and actual values, commonly used in regression problems to minimize variance and improve accuracy.

Overview of Cross Entropy Loss

Cross Entropy Loss measures the performance of classification models by quantifying the difference between predicted probabilities and actual class labels, making it ideal for tasks involving discrete categories. It penalizes incorrect confident predictions more heavily than Mean Squared Error, which calculates the average squared difference between predicted and actual values and is typically used for regression problems. Cross Entropy Loss's foundation in information theory allows it to effectively optimize probabilistic outputs in neural networks, improving convergence and accuracy in classification tasks.

Understanding Mean Squared Error (MSE)

Mean Squared Error (MSE) measures the average squared difference between predicted values and actual target values, making it ideal for regression problems in machine learning. MSE penalizes larger errors more severely, encouraging models to focus on minimizing significant deviations from true values. This loss function provides a smooth gradient, which facilitates efficient optimization during the training process of neural networks.

Mathematical Formulations: Cross Entropy vs MSE

Cross Entropy Loss is mathematically defined as -yi log(pi), where yi represents the true label and pi the predicted probability, making it ideal for classification tasks involving probabilistic outputs. Mean Squared Error (MSE) is expressed as (1/n)(yi - yi)2, focusing on the squared difference between actual and predicted continuous values, thus suited for regression problems. The distinct mathematical formulations reflect their optimization objectives: Cross Entropy minimizes the distance between probability distributions, while MSE quantifies direct variance in continuous predictions.

Applications of Cross Entropy Loss

Cross Entropy Loss is widely used in classification tasks, particularly for multi-class problems in deep learning frameworks like convolutional neural networks and recurrent neural networks. Its ability to quantify the difference between predicted probability distributions and true labels makes it ideal for applications such as image recognition, natural language processing, and speech recognition. This loss function effectively handles probabilistic outputs, ensuring better convergence during training compared to Mean Squared Error in classification scenarios.

Use Cases for Mean Squared Error

Mean Squared Error (MSE) is widely used in regression tasks within machine learning to measure the average squared difference between predicted and actual continuous values, making it ideal for problems requiring precise estimation such as housing price prediction and temperature forecasting. MSE's sensitivity to larger errors helps models focus on minimizing significant prediction deviations in continuous output scenarios. Its simplicity and differentiability facilitate smooth optimization during gradient descent, thus enhancing model convergence in regression algorithms.

Performance and Convergence: Cross Entropy vs MSE

Cross Entropy Loss generally outperforms Mean Squared Error (MSE) in classification tasks due to its ability to better handle probabilistic outputs and provide stronger gradients, leading to faster convergence. MSE can result in slower learning and suboptimal performance because it treats predictions as continuous values, which is less appropriate for classification boundaries. Models trained with Cross Entropy Loss often achieve higher accuracy and more stable convergence compared to those using MSE on categorical data.

Choosing the Right Loss Function for Classification and Regression

Cross Entropy Loss is preferred for classification tasks because it effectively measures the difference between predicted probabilities and actual class labels, optimizing model performance in categorical outcomes. Mean Squared Error (MSE) is ideal for regression problems, minimizing the average squared differences between predicted values and continuous target variables. Selecting the appropriate loss function is critical for model accuracy, with Cross Entropy enhancing convergence in classification and MSE ensuring precise regression predictions.

Limitations and Challenges of Each Loss Function

Cross Entropy Loss often struggles with class imbalance, leading to biased predictions toward majority classes, while Mean Squared Error (MSE) can produce overly smooth gradients that hinder convergence in classification tasks. MSE is sensitive to outliers, causing instability in model updates, whereas Cross Entropy Loss may suffer from numerical instability with very small predicted probabilities. Both loss functions face challenges in accurately representing uncertainty, with Cross Entropy Loss being less interpretable for regression problems and MSE often failing to capture complex class boundaries effectively.

Summary: Key Differences and Recommendations

Cross Entropy Loss is preferred for classification tasks because it measures the difference between two probability distributions, effectively handling categorical outputs and providing better gradient signals for model optimization. Mean Squared Error (MSE) is more suited for regression problems, as it calculates the average of squared differences between predicted and actual values, emphasizing larger errors. For neural networks, Cross Entropy Loss accelerates convergence in classification, while MSE is recommended when predicting continuous outcomes with Gaussian noise assumptions.

Cross Entropy Loss vs Mean Squared Error Infographic

techiny.com

techiny.com